Link to download entire source code at the end of this article!

In recent years, artificial intelligence has made remarkable strides, with machine learning playing a central role in its evolution. One of the most fascinating and rapidly advancing branches of machine learning is generative modeling. Unlike traditional models that focus on classification or prediction, generative modeling enables AI to create entirely new data — be it images, text, music, or even videos — that resemble real-world examples. This ability to generate synthetic yet realistic data has opened the door to groundbreaking applications in content creation, design, and even scientific research.

To fully grasp the significance of generative modeling, it is essential to understand how it differs from discriminative modeling, the more commonly used approach in machine learning. Discriminative models excel at tasks such as classifying emails as spam or non-spam, identifying faces in photos, or predicting stock market trends. These models learn to differentiate between categories by drawing decision boundaries between them. In contrast, generative models focus on understanding the underlying patterns and structures in data, enabling them to generate new examples that fit within the learned distribution. For example, a discriminative model can determine whether a painting is by Van Gogh, whereas a generative model can create an entirely new painting that mimics his style.

Generative modeling is not just an academic curiosity — it has become a driving force in modern AI. From deepfake technology and AI-generated artwork to realistic video game graphics and automated content creation, generative models are revolutionizing the way we interact with artificial intelligence. Moreover, they are paving the way for more advanced AI systems capable of creative problem-solving and simulation, bringing us closer to machines that can imagine, innovate, and assist in human-like ways.

This article will provide a comprehensive guide to generative modeling, starting with fundamental concepts and progressing through the various types of generative models that dominate the field today. We will explore the core probabilistic principles that underpin these models, examine the key differences between explicit, approximate, and implicit density models, and discuss how modern deep learning techniques like GANs, VAEs, and diffusion models have revolutionized AI-generated content. Additionally, we will walk through the practical aspects of working with generative models, including how to set up the Generative Deep Learning codebase and begin building models yourself.

By the end of this guide, you will have a clear understanding of what generative modeling is, why it is so powerful, and how it is shaping the future of artificial intelligence. Whether you’re an AI enthusiast, a researcher, or a developer looking to implement generative techniques in your projects, this article will provide you with a strong foundation to explore the exciting world of AI-driven creativity.

Section 1: What is Generative Modeling?

Generative modeling is a branch of machine learning focused on creating new data instances that mimic a given dataset. Unlike traditional models that classify or predict labels, generative models learn the underlying patterns and structures of the data, enabling them to produce novel outputs — such as images of horses that never existed or synthetic text indistinguishable from human writing.

Key Concepts and Workflow

Capturing Patterns, Not Classifications

Generative models differ from discriminative models (e.g., classifiers) in their objective. While a discriminative model learns boundaries to separate classes (e.g., cats vs. dogs), a generative model learns the full distribution of the data. For example, a generative adversarial network (GAN) trained on horse images learns the joint probabilities of pixel values, textures, and shapes to create realistic new images.

Code Example: Contrasting Discriminative vs. Generative Models

Below is a simplified comparison using PyTorch:

    import torch
    import torch.nn as nn


    # Discriminative Model (Classifier)
    class Classifier(nn.Module):
        def __init__(self):
            super().__init__()
            self.layers = nn.Sequential(
                nn.Linear(784, 256),  # Input: flattened 28x28 image
                nn.ReLU(),
                nn.Linear(256, 1),    # Output: logit for class "horse"
            )

        def forward(self, x):
            # Apply torch.sigmoid to this logit to obtain a probability
            return self.layers(x)


    # Generative Model (Simplified Variational Autoencoder)
    class VAE(nn.Module):
        def __init__(self):
            super().__init__()
            # Encoder: maps data to latent space
            self.encoder = nn.Sequential(
                nn.Linear(784, 256),
                nn.ReLU(),
                nn.Linear(256, 32),   # Outputs mean and log-variance (16 values each)
            )
            # Decoder: maps latent space back to data space
            self.decoder = nn.Sequential(
                nn.Linear(16, 256),   # Latent dimension = 16
                nn.ReLU(),
                nn.Linear(256, 784),
                nn.Sigmoid(),         # Outputs pixel probabilities
            )

        def reparameterize(self, mu, logvar):
            # Probabilistic sampling: z = mu + std * eps, with eps ~ N(0, I)
            std = torch.exp(0.5 * logvar)
            eps = torch.randn_like(std)
            return mu + eps * std

        def forward(self, x):
            h = self.encoder(x)
            mu, logvar = h[:, :16], h[:, 16:]  # Split into mean and log-variance
            z = self.reparameterize(mu, logvar)
            return self.decoder(z), mu, logvar

In the VAE, the encoder captures patterns in the data by compressing it into a probabilistic latent space (mu and logvar), while the decoder generates new data from this compressed representation. The reparameterize function introduces stochasticity, a hallmark of probabilistic generative models.

The Role of Probabilistic Methods

Generative models rely heavily on probability theory to handle uncertainty. For instance:

Latent Variables: Models like VAEs assume data is generated from hidden variables (e.g., pose, color in horse images).

Sampling: New data is created by sampling from learned distributions (e.g., Gaussian in VAEs).

Loss Functions: Objectives often involve maximizing the likelihood of the training data or minimizing divergence metrics (e.g., KL divergence in VAEs).

The Challenge of High-Dimensional Data

High-dimensional data (e.g., images, audio) poses a significant challenge. A single 256x256 RGB image has 196,608 dimensions — far too many for brute-force modeling. Generative models address this by:

Dimensionality Reduction: Learning compact latent spaces (e.g., 16–512 dimensions).

Hierarchical Learning: Capturing coarse-to-fine features (e.g., using convolutional layers in GANs).
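The dimension count quoted above is plain arithmetic, and a few lines make the scale of the compression concrete. This is an illustrative sketch only: the helper name `flat_dim` and the 128-dimensional latent size (picked from the 16 to 512 range mentioned above) are assumptions, not values from any particular model.

```python
def flat_dim(height, width, channels):
    """Number of scalar values in one image of the given shape."""
    return height * width * channels


rgb_dims = flat_dim(256, 256, 3)   # the 256x256 RGB example
mnist_dims = flat_dim(28, 28, 1)   # the 28x28 grayscale images used by the VAE

latent_dim = 128  # assumed example value

print(rgb_dims)               # 196608
print(mnist_dims)             # 784
print(rgb_dims / latent_dim)  # 1536.0
```

In this hypothetical setting, each latent coordinate has to summarize roughly 1,500 raw pixel values, which is why the model must learn structure rather than memorize pixels.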

Code Example: Handling High-Dimensional Data in VAEs

The VAE code above reduces a 784-dimensional MNIST image to a 16-dimensional latent space. During training, the model minimizes:

    import torch
    import torch.nn as nn


    def vae_loss(recon_x, x, mu, logvar):
        # Reconstruction loss: binary cross-entropy, summed over pixels and batch
        bce = nn.functional.binary_cross_entropy(recon_x, x, reduction='sum')
        # KL divergence between N(mu, sigma^2) and the standard normal N(0, I)
        kld = -0.5 * torch.sum(1 + logvar - mu.pow(2) - logvar.exp())
        return bce + kld

Here, bce ensures the decoded output matches the input, while kld regularizes the latent space to follow a standard normal distribution.
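The kld expression is the closed-form KL divergence between a Gaussian N(mu, sigma^2) and the standard normal, written in terms of logvar = log(sigma^2). As a sanity check, the sketch below compares that formula for a single latent dimension against a Monte Carlo estimate. It uses only the standard library; the function names and the test values mu = 1.0, logvar = -1.0 are illustrative assumptions.

```python
import math
import random


def kl_closed_form(mu, logvar):
    """KL( N(mu, sigma^2) || N(0, 1) ) for one latent dimension."""
    return -0.5 * (1 + logvar - mu ** 2 - math.exp(logvar))


def kl_monte_carlo(mu, logvar, n_samples=100_000, seed=0):
    """Estimate the same KL by averaging log q(z) - log p(z) over z ~ q."""
    rng = random.Random(seed)
    sigma = math.exp(0.5 * logvar)
    total = 0.0
    for _ in range(n_samples):
        eps = rng.gauss(0.0, 1.0)
        z = mu + sigma * eps                        # reparameterized sample
        log_q = -0.5 * eps ** 2 - math.log(sigma)   # log N(z; mu, sigma^2), constant dropped
        log_p = -0.5 * z ** 2                       # log N(z; 0, 1), same constant dropped
        total += log_q - log_p
    return total / n_samples


print(kl_closed_form(0.0, 0.0))    # zero when q already equals N(0, 1)
print(kl_closed_form(1.0, -1.0))
print(kl_monte_carlo(1.0, -1.0))   # should land close to the line above
```

The two values agree up to sampling noise, which is why VAE implementations can use the cheap analytic term instead of sampling.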

Why Does It Matter?

Generative modeling unlocks capabilities like creative AI, data augmentation, and anomaly detection. By mastering probabilistic patterns and taming high-dimensional spaces, these models push the boundaries of what machines can create.

Section 2: Generative vs. Discriminative Models

In the realm of machine learning, models are broadly categorized into generative and discriminative models. Understanding the distinction between these two types is crucial for selecting the appropriate approach for a given task. This section delves into the fundamental differences, mathematical underpinnings, and practical implications of generative and discriminative models, supplemented with illustrative examples and code snippets.

2.1 Discriminative Models

Discriminative models focus on modeling the decision boundary between classes. Their primary goal is classification, i.e., identifying the category or label of a given input. For instance, a discriminative model can be trained to identify Van Gogh paintings by distinguishing them from works of other artists.

Key Characteristics:

Objective: Learn the conditional probability P(y | x), where y is the label and x is the input data.

Data Requirement: Requires labeled data for training.

Functionality: Predicts labels based on learned features from the input data.

Limitation: Cannot generate new data samples.

Mathematical Representation:

The discriminative approach models the conditional probability directly:

P(y | x)

This represents the probability of a label y given an observation x.

Example: Logistic Regression for Binary Classification

Consider a binary classification task where we aim to classify paintings as either Van Gogh or Non-Van Gogh. Logistic Regression is a quintessential discriminative model suitable for this task.

    import numpy as np
    import matplotlib.pyplot as plt
    from sklearn.linear_model import LogisticRegression
    from sklearn.datasets import make_classification
    from sklearn.model_selection import train_test_split
    from sklearn.metrics import accuracy_score

    # Generate synthetic data for illustration
    X, y = make_classification(n_samples=1000, n_features=20, n_informative=15,
                               n_redundant=5, n_classes=2, random_state=42)

    # Split into training and testing sets
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.3, random_state=42)

    # Initialize and train the Logistic Regression model
    clf = LogisticRegression(max_iter=1000)
    clf.fit(X_train, y_train)

    # Predict on test data
    y_pred = clf.predict(X_test)

    # Evaluate the model
    accuracy = accuracy_score(y_test, y_pred)
    print(f"Logistic Regression Accuracy: {accuracy * 100:.2f}%")

Explanation:

Data Generation: We create a synthetic dataset with 20 features, where 15 are informative for the classification task.

Model Training: The Logistic Regression model learns the decision boundary that best separates the two classes.

Prediction & Evaluation: The model predicts labels for the test set and evaluates accuracy, reflecting its classification performance.
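To see what "learning the decision boundary" means in terms of P(y | x), here is a from-scratch sketch of one-feature logistic regression using only the standard library. It is not how scikit-learn fits its model internally (scikit-learn uses dedicated solvers and regularization by default); the toy data, learning rate, and step count are assumptions chosen for illustration.

```python
import math


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def fit_logistic_1d(xs, ys, lr=0.1, steps=2000):
    """Gradient descent on the mean log-loss of P(y=1 | x) = sigmoid(w*x + b)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy, hand-made data: small x values are class 0, large x values are class 1.
xs = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
ys = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = fit_logistic_1d(xs, ys)
p_low = sigmoid(w * 1.0 + b)    # P(y=1 | x=1.0)
p_high = sigmoid(w * 4.0 + b)   # P(y=1 | x=4.0)
print(p_low, p_high)
```

The only things learned are w and b, which define where P(y=1 | x) crosses 0.5. Nothing in these two numbers describes how the x values themselves are distributed.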

2.2 Generative Models

In contrast, generative models aim to model the joint probability distribution P(x, y). Their primary objective is to generate new data samples that resemble the training data. For example, a generative model can create new images of apples by learning the distribution of apple images.

Key Characteristics:

Objective: Learn the joint probability P(x, y) or the data distribution P(x).

Data Requirement: Can operate with unlabeled data (when modeling P(x)) or labeled data (when modeling P(x, y)).

Functionality: Capable of generating new samples that are similar to the training data.

Flexibility: Can perform tasks like data augmentation, anomaly detection, and more.

Mathematical Representation:

Generative models primarily focus on the marginal probability:

P(x)

This represents the probability of observing a data point x from the learned distribution.

For models that condition on labels, known as conditional generative models, the representation becomes:

P(x | y)

This allows generating specific outputs based on the provided label y (e.g., generating images of apples when y corresponds to “apple”).
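The link between these quantities is the factorization P(x, y) = P(y) P(x | y): once a model has both factors, it can generate x for a chosen label and also classify through Bayes' rule. The sketch below uses a deliberately tiny, assumed model with one feature and two labels; the means, standard deviations, and priors are made-up numbers, not learned values.

```python
import math
import random

# Assumed toy joint model P(x, y) = P(y) * P(x | y) over a single feature x.
prior = {"apple": 0.5, "orange": 0.5}                # P(y)
cond = {"apple": (7.0, 0.5), "orange": (9.0, 0.5)}   # P(x | y) = Normal(mean, std)


def gaussian_pdf(x, mean, std):
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def sample_x_given_y(label, rng):
    """Generate: draw a new x from P(x | y)."""
    mean, std = cond[label]
    return rng.gauss(mean, std)


def posterior(x):
    """Classify: Bayes' rule, P(y | x) = P(x | y) P(y) / P(x)."""
    joint = {y: prior[y] * gaussian_pdf(x, *cond[y]) for y in prior}
    evidence = sum(joint.values())   # P(x), the marginal over labels
    return {y: joint[y] / evidence for y in joint}


rng = random.Random(0)
print(sample_x_given_y("apple", rng))   # a new "apple" feature value
print(posterior(7.2))                   # strongly favors "apple"
```

The reverse direction does not work: a model that stores only P(y | x) has no P(x | y) or P(x) to sample from.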

Example: Gaussian Mixture Model for Data Generation

A Gaussian Mixture Model (GMM) is a probabilistic generative model that assumes data is generated from a mixture of several Gaussian distributions.

    import matplotlib.pyplot as plt
    from sklearn.mixture import GaussianMixture
    from sklearn.datasets import make_blobs

    # Generate synthetic data
    X, _ = make_blobs(n_samples=500, centers=3, cluster_std=1.0, random_state=42)

    # Initialize and fit the Gaussian Mixture Model
    gmm = GaussianMixture(n_components=3, covariance_type='full', random_state=42)
    gmm.fit(X)

    # Generate new samples (sample() also returns the component label of each point)
    X_new, _ = gmm.sample(100)

    # Plot original and generated data
    plt.scatter(X[:, 0], X[:, 1], label='Original Data', alpha=0.5)
    plt.scatter(X_new[:, 0], X_new[:, 1], label='Generated Data', alpha=0.5)
    plt.legend()
    plt.title('Gaussian Mixture Model: Original vs Generated Data')
    plt.show()

Explanation:

Data Generation: We create a synthetic dataset with three clusters.

Model Training: The GMM learns the parameters of the Gaussian distributions that best fit the data.

Sample Generation: The model generates new data points by sampling from the learned Gaussian components.

Visualization: The plot compares original data points with those generated by the GMM, illustrating the model’s ability to reproduce the data distribution.
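Conceptually, sampling from a mixture is a two-step procedure: pick a component according to the mixture weights, then draw from that component's Gaussian. The one-dimensional sketch below shows the idea with the standard library. The parameters are hand-picked assumptions standing in for what a fitted model would learn, and this is not a description of scikit-learn's internal implementation.

```python
import random

# Assumed, hand-picked 1-D mixture parameters (a fitted GMM would learn these).
weights = [0.2, 0.5, 0.3]
means = [-4.0, 0.0, 5.0]
stds = [0.5, 1.0, 0.8]


def sample_gmm(n, rng):
    samples = []
    for _ in range(n):
        # Step 1: choose a component k with probability weights[k].
        k = rng.choices(range(len(weights)), weights=weights)[0]
        # Step 2: draw a point from that component's Gaussian.
        samples.append(rng.gauss(means[k], stds[k]))
    return samples


rng = random.Random(42)
xs = sample_gmm(20_000, rng)
sample_mean = sum(xs) / len(xs)
mixture_mean = sum(w * m for w, m in zip(weights, means))
print(sample_mean, mixture_mean)   # the two should be close
```

The component index k plays the role of a latent variable: it is never observed in the generated data, yet it determines which region of space each sample comes from.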

2.3 Conditional Generative Models

Conditional generative models extend generative models by allowing the generation of data conditioned on specific inputs, typically labels. This capability enables the creation of targeted outputs, such as generating images of apples or specific styles of art.

Example: Conditional Generative Adversarial Network (cGAN)

Conditional GANs are an extension of GANs where both the generator and discriminator receive additional information (e.g., class labels) as input.

    import numpy as np
    import tensorflow as tf
    from tensorflow.keras import layers


    # Define generator model
    def build_generator(latent_dim, num_classes):
        label_input = layers.Input(shape=(1,), dtype='int32')
        label_embedding = layers.Embedding(num_classes, latent_dim)(label_input)
        label_embedding = layers.Flatten()(label_embedding)
        noise_input = layers.Input(shape=(latent_dim,))
        # Condition the noise on the label via element-wise multiplication
        model_input = layers.multiply([noise_input, label_embedding])
        x = layers.Dense(128, activation='relu')(model_input)
        x = layers.BatchNormalization()(x)
        x = layers.Dense(784, activation='sigmoid')(x)
        x = layers.Reshape((28, 28, 1))(x)
        generator = tf.keras.Model([noise_input, label_input], x, name='generator')
        return generator


    # Define discriminator model
    def build_discriminator(img_shape, num_classes):
        img_input = layers.Input(shape=img_shape)
        label_input = layers.Input(shape=(1,), dtype='int32')
        # Embed the label into a tensor with the same shape as the image
        label_embedding = layers.Embedding(num_classes, int(np.prod(img_shape)))(label_input)
        label_embedding = layers.Flatten()(label_embedding)
        label_embedding = layers.Reshape(img_shape)(label_embedding)
        concatenated = layers.Concatenate()([img_input, label_embedding])
        x = layers.Flatten()(concatenated)
        x = layers.Dense(512, activation='relu')(x)
        x = layers.Dense(1, activation='sigmoid')(x)
        discriminator = tf.keras.Model([img_input, label_input], x, name='discriminator')
        return discriminator


    # Parameters
    latent_dim = 100
    num_classes = 10
    img_shape = (28, 28, 1)

    # Build and compile models
    generator = build_generator(latent_dim, num_classes)
    discriminator = build_discriminator(img_shape, num_classes)
    discriminator.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

    # Combined model for training the generator (discriminator weights frozen)
    discriminator.trainable = False
    noise = layers.Input(shape=(latent_dim,))
    label = layers.Input(shape=(1,), dtype='int32')
    img = generator([noise, label])
    validity = discriminator([img, label])
    combined = tf.keras.Model([noise, label], validity)
    combined.compile(optimizer='adam', loss='binary_crossentropy')

Explanation:

Generator: Takes random noise and a class label as input, and generates an image corresponding to the label.

Discriminator: Receives an image and a label, and determines whether the image is real or generated, conditioned on the label.

Training Setup: The discriminator is trained to distinguish real images from generated ones, while the generator learns to produce images that the discriminator classifies as real, conditioned on the input label.

2.4 Why Discriminative Models Cannot Generate New Samples

While discriminative models excel at classification tasks by learning decision boundaries, they lack the mechanism to generate new data samples. This limitation stems from their focus on modeling P(y | x) rather than the data distribution P(x).

Detailed Explanation:

Discriminative Focus: By concentrating solely on the relationship between inputs and labels, discriminative models optimize their parameters to maximize classification accuracy. They do not learn the underlying structure or distribution of the input data.

Absence of Data Generation Capability: Since discriminative models do not model P(x), they lack the necessary information to sample or generate new instances of x. Their learned parameters are tailored for distinguishing between existing classes rather than reproducing or creating new data points.

Perfect Training Scenario: Even if a discriminative model is perfectly trained (i.e., it achieves 100% classification accuracy on the training data), it still does not possess the generative properties required to synthesize new data. The model’s architecture and training objective do not facilitate the reconstruction or creation of new inputs.

Illustration:

Consider a perfectly trained Logistic Regression model for classifying Van Gogh paintings. While the model can flawlessly assign the correct label to any input painting, it cannot produce a new painting in the style of Van Gogh because it has never learned the distribution of pixel values or artistic features that constitute a Van Gogh painting. In contrast, a generative model trained on Van Gogh’s works could potentially generate new images that mimic his style by understanding the distribution P(x) of his paintings.

Understanding the distinction between generative and discriminative models is pivotal for effectively tackling machine learning problems. Discriminative models are ideal for tasks requiring accurate classification based on existing data, leveraging labeled datasets to learn decision boundaries. Generative models, on the other hand, offer the flexibility to generate new data, model complex distributions, and perform tasks beyond classification, such as data augmentation and unsupervised learning. Selecting the appropriate model type hinges on the specific requirements of the application at hand.

Section 3: The Rise of Generative Modeling

For many years, machine learning was dominated by discriminative models. These models, including logistic regression, support vector machines (SVMs), and convolutional neural networks (CNNs) trained as classifiers, excelled at classification and prediction. Their practicality and straightforward objectives made them the go-to choice for applications ranging from image and speech recognition to natural language processing and medical diagnostics. Their success can be attributed to the relative simplicity of the goal: given an input, determine the appropriate label or category. This clear focus, together with an objective that is easy to measure, made it possible to develop robust algorithms and sustained a large body of research dedicated to improving classification accuracy.

However, while discriminative models achieved remarkable success, generative modeling remained a more elusive and challenging frontier. Generative models aim to create new data instances that resemble a given dataset, rather than merely classifying existing data. For instance, while a discriminative model can accurately identify whether an image contains a cat or a dog, a generative model endeavors to produce entirely new images of cats or dogs that appear realistic and indistinguishable from real photographs. This fundamental difference in objectives introduces a layer of complexity that has historically hindered the progress and adoption of generative models.

One of the primary challenges in generative modeling lies in the inherent difficulty of creating realistic images compared to classifying them. Classification tasks involve learning the boundaries between different classes, which, while complex, are often more tractable than the intricate task of generating new data. Generative models must capture the entire data distribution, encompassing subtle nuances, variations, and intricate details that define the data’s essence. This requires a profound understanding of the underlying structures and relationships within the data, making the task significantly more demanding.

Moreover, generative models operate in high-dimensional spaces, especially when dealing with data types such as images, videos, or audio. Images, for example, consist of thousands or even millions of pixels, each contributing to the overall structure and appearance of the image. Modeling such high-dimensional distributions necessitates sophisticated architectures capable of handling vast feature spaces, which in turn demand substantial computational resources and expertise. The sheer dimensionality exacerbates the complexity of training generative models, often leading to prolonged training times and increased susceptibility to issues like overfitting and instability during the training process.

Another significant hurdle in generative modeling is the evaluation of the generated data’s quality. Unlike classification accuracy, which can be objectively measured, assessing the realism and diversity of generated images is inherently subjective. Metrics such as the Inception Score (IS) and Fréchet Inception Distance (FID) have been developed to quantify the quality of generated images by comparing them to real data distributions. However, these metrics are not without limitations and often require supplementary human judgment to ensure the generated content meets the desired standards of realism and diversity.
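To make the idea behind FID concrete: it fits a Gaussian to feature vectors of real images and another to feature vectors of generated images, then measures the Fréchet distance between the two. The sketch below is a simplification under loudly stated assumptions. It treats the covariances as diagonal and works on tiny hand-written "feature" lists, whereas the real metric uses Inception network activations and full covariance matrices with a matrix square root.

```python
import math


def mean_and_std(rows):
    """Per-dimension mean and (population) standard deviation of feature vectors."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    stds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / n) for j in range(d)]
    return means, stds


def frechet_distance_diag(real_feats, fake_feats):
    """Frechet distance between two Gaussians with diagonal covariance.

    With diagonal covariances, the general formula
    ||mu1 - mu2||^2 + Tr(S1 + S2 - 2 (S1 S2)^(1/2))
    reduces to a sum over dimensions of (mu1 - mu2)^2 + (sigma1 - sigma2)^2.
    """
    mu_r, sd_r = mean_and_std(real_feats)
    mu_f, sd_f = mean_and_std(fake_feats)
    return sum((a - b) ** 2 + (s - t) ** 2
               for a, b, s, t in zip(mu_r, mu_f, sd_r, sd_f))


real = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0]]
shifted = [[x + 3.0, y] for x, y in real]   # same spread, first feature shifted by 3

print(frechet_distance_diag(real, real))     # 0.0
print(frechet_distance_diag(real, shifted))  # 9.0
```

A lower value means the generated feature statistics are closer to the real ones, but two very different image sets can still share similar means and variances, which is one reason human judgment remains part of evaluation.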

Despite these formidable challenges, the landscape of generative modeling has undergone a transformative shift in recent years, largely propelled by advancements in deep learning. The maturation of deep neural networks has provided the tools and frameworks needed to tackle the complexities of generative modeling, leading to breakthroughs that have significantly narrowed the gap between discriminative and generative capabilities.

The advent of Generative Adversarial Networks (GANs), introduced by Ian Goodfellow and his colleagues in 2014, marked a pivotal moment in generative modeling. GANs consist of two neural networks — the Generator and the Discriminator — that engage in a competitive game. The Generator creates synthetic data samples, while the Discriminator evaluates their authenticity, distinguishing between real and fake data. This adversarial training process drives the Generator to produce increasingly realistic data, as it learns to fool the Discriminator into believing its outputs are genuine. Variants of GANs, such as StyleGAN, CycleGAN, and BigGAN, have pushed the boundaries of what generative models can achieve, enabling the creation of lifelike human faces, artistic transformations, and large-scale image synthesis with unprecedented fidelity.
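The competitive game can be expressed with ordinary binary cross-entropy. The sketch below plugs a few assumed Discriminator outputs into the two losses to show which direction each player is pushing. It uses the common non-saturating Generator loss, which is one of several formulations, and the probability values are made up for illustration.

```python
import math


def bce(prediction, target):
    """Binary cross-entropy for a single probability and a 0/1 target."""
    eps = 1e-12  # guards against log(0)
    p = min(max(prediction, eps), 1 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def gan_losses(d_real, d_fake):
    """d_real / d_fake: Discriminator outputs (probability that the input is real)."""
    # Discriminator wants real -> 1 and fake -> 0.
    d_loss = bce(d_real, 1) + bce(d_fake, 0)
    # Generator wants its fakes to be scored as real (non-saturating loss).
    g_loss = bce(d_fake, 1)
    return d_loss, g_loss


# Early in training: the Discriminator easily spots fakes.
print(gan_losses(d_real=0.9, d_fake=0.1))
# Later: the Discriminator can no longer tell real from fake.
print(gan_losses(d_real=0.5, d_fake=0.5))
```

As the Generator improves, its loss falls while the Discriminator's loss rises toward 2 ln 2, the value it gets when it outputs 0.5 for everything.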

Another significant advancement came with Variational Autoencoders (VAEs), which offer a probabilistic approach to generative modeling. VAEs learn latent representations of data by encoding input data into a lower-dimensional latent space and then decoding samples from this space back into the original data domain. This architecture allows VAEs to model complex distributions and generate new data instances by sampling from the learned latent space. The interpretability and flexibility of VAEs make them invaluable for applications such as image generation, anomaly detection, and data compression, where understanding and manipulating the latent representations can yield meaningful insights and enhancements.

Diffusion Models have also emerged as a powerful alternative to GANs, particularly in generating high-fidelity images. These models work by gradually adding noise to data and then learning to reverse this process to generate coherent and detailed outputs. Denoising Diffusion Probabilistic Models (DDPMs), for instance, have demonstrated impressive capabilities in producing realistic images by iteratively refining noisy data into clear and structured outputs. This approach offers different strengths and trade-offs compared to GANs, often providing more stable training dynamics and avoiding issues like mode collapse, where GANs sometimes generate limited varieties of outputs.
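The "gradually adding noise" half of the process has a convenient closed form: a sample at any step t can be produced directly from the clean data. The sketch below shows this forward process with the linear noise schedule reported in the DDPM paper (1,000 steps, beta from 1e-4 to 0.02). The four-number vector is an assumed stand-in for an image, and the learned reverse (denoising) network is not shown.

```python
import math
import random

T = 1000
# Linear noise schedule: beta rises from 1e-4 at the first step to 0.02 at the last.
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]

# alpha_bar[t] is the running product of (1 - beta): how much signal survives at step t.
alpha_bar = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bar.append(running)


def q_sample(x0, t, rng):
    """Jump to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    signal = math.sqrt(alpha_bar[t])
    noise = math.sqrt(1.0 - alpha_bar[t])
    return [signal * v + noise * rng.gauss(0.0, 1.0) for v in x0]


rng = random.Random(0)
x0 = [1.0, -1.0, 0.5, 0.0]   # stand-in for a tiny flattened "image"
for t in (0, 250, 999):
    print(t, round(alpha_bar[t], 5), q_sample(x0, t, rng))
```

At the first step almost all of the signal remains, while by the final step alpha_bar is close to zero and the sample is essentially pure Gaussian noise. Generation runs this in reverse, starting from noise.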

The integration of Transformer Architectures into generative tasks has further expanded the horizons of generative modeling. Transformers first proved themselves in natural language processing, where large language models such as GPT-3 and GPT-4 generate text, and they have since been adapted for image and multimodal generation. These models leverage the attention mechanism to capture long-range dependencies and intricate patterns within data, enabling the generation of coherent and contextually relevant text, images, and even combined data types. The versatility and scalability of transformers have made them a cornerstone in modern generative modeling, driving innovations across various domains.
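The attention mechanism mentioned here boils down to a weighted average: each position scores every other position, turns the scores into weights with a softmax, and mixes the value vectors accordingly. Below is a minimal standard-library sketch of scaled dot-product attention. It omits the learned query, key, and value projections and the multiple heads that real transformers use, and the three toy token vectors are assumptions.

```python
import math


def softmax(scores):
    m = max(scores)                        # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)          # how strongly this position attends to each one
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy token vectors; in self-attention Q, K, and V come from the same sequence.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Because every position can attend to every other position in one step, distance within the sequence does not limit which dependencies can be captured.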

The culmination of these advancements has unlocked a myriad of applications for generative models, showcasing their creative potential and practical utility across diverse industries. AI-generated content is one of the most prominent areas where generative models have made significant inroads. Text generation models like GPT-4 can produce human-like text, facilitating applications such as automated content creation, chatbots, and virtual assistants. These models can generate articles, stories, code snippets, and even poetry, enhancing productivity and creativity by automating repetitive and time-consuming tasks.

In the realm of image generation, models like DALL·E and Midjourney have revolutionized the way images are created from textual descriptions. These models can generate detailed and imaginative images based on simple prompts, providing invaluable tools for graphic design, advertising, and artistic endeavors. The ability to rapidly prototype and visualize concepts has streamlined workflows and opened new avenues for creativity, enabling artists and designers to explore ideas with unprecedented ease and flexibility.

Video generation is another burgeoning application of generative models, albeit still in its nascent stages compared to image and text generation. Video-generative models are making strides in producing short clips, animations, and special effects, with potential applications in filmmaking, gaming, and virtual reality. The capability to generate dynamic and coherent video content holds promise for transforming entertainment and media production, offering new tools for creators to bring their visions to life.

The proliferation of APIs for automatic content creation has democratized access to powerful generative models, allowing developers and businesses to integrate sophisticated generative capabilities into their applications without requiring deep expertise in machine learning. Companies like OpenAI provide APIs that facilitate the generation of text-based content, enabling applications ranging from automated writing assistants to interactive conversational agents. Similarly, image generation APIs allow users to create images from textual prompts, suitable for a wide array of creative and commercial applications. Platforms like RunwayML offer comprehensive suites of generative tools for video, image, and audio processing, seamlessly integrating into creative workflows and enhancing the capabilities of artists, designers, and developers.

Generative models have also found transformative use cases in game design. Procedural Content Generation (PCG) leverages generative models to automatically create game levels, terrains, and environments, ensuring diverse and replayable experiences for players. This automation accelerates the development process and allows for the creation of expansive and varied game worlds without the need for extensive manual input. Additionally, generative models aid in the creation of unique character designs, textures, and animations, reducing the manual workload on artists and designers and enabling the rapid iteration of game assets.

In cinematography, generative models offer powerful tools for enhancing creativity and efficiency. They can automate the creation of complex visual effects (VFX), reducing production time and costs while enabling filmmakers to achieve stunning visual feats that were previously unattainable. Generative models also assist in scriptwriting by generating plot ideas, dialogues, and story arcs, serving as creative partners for writers and enriching the storytelling process. Moreover, virtual cinematography, facilitated by generative models, allows directors to simulate camera movements, lighting scenarios, and scene compositions, providing new perspectives and creative options that enhance the visual storytelling of films.

The impact of generative modeling extends into business applications, driving innovation and operational efficiency across various sectors. In marketing and advertising, generative models create personalized advertisements, promotional materials, and social media content tailored to specific audiences, enhancing engagement and effectiveness. In product design, these models generate design prototypes and variations, facilitating rapid iteration and fostering innovation by allowing designers to explore a vast array of styles and functionalities. Additionally, generative models aid in data augmentation by producing synthetic data that enhances training datasets, improving the performance and robustness of machine learning models without compromising sensitive information.

To illustrate the practical aspects of generative modeling, let us walk through an implementation of a Deep Convolutional Generative Adversarial Network (DCGAN) using PyTorch. DCGANs are a class of GANs that use deep convolutional networks for both the Generator and the Discriminator; the version built here generates 64x64 RGB images. This implementation demonstrates the architecture and training dynamics that underpin more advanced generative models.

Implementing a Deep Convolutional GAN (DCGAN) in PyTorch

To embark on this implementation, ensure that you have the necessary libraries installed. You can install them using pip:

    pip install torch torchvision matplotlib

Importing Libraries

First, import the essential libraries required for building and training the DCGAN.

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torchvision import datasets, transforms
    from torch.utils.data import DataLoader
    import torchvision.utils as vutils
    import matplotlib.pyplot as plt
    import numpy as np
    import os

Defining Hyperparameters

Set the hyperparameters that will guide the training process.

    # Hyperparameters
    batch_size = 128
    lr = 0.0002
    num_epochs = 50
    latent_dim = 100
    image_size = 64   # DCGAN typically uses 64x64 images
    channels = 3      # RGB images
    beta1 = 0.5       # Beta1 hyperparameter for the Adam optimizers
    ngpu = 1          # Number of GPUs available. Use 0 for CPU mode

Preparing the Dataset

For this example, we’ll use the CelebA dataset, which consists of celebrity faces. The dataset will be transformed to match the input requirements of the DCGAN.

    # Create the dataset directory if it doesn't exist
    os.makedirs('data', exist_ok=True)

    # Transformations: resize to image_size, center crop, convert to tensor,
    # and normalize to [-1, 1]
    transform = transforms.Compose([
        transforms.Resize(image_size),
        transforms.CenterCrop(image_size),
        transforms.ToTensor(),
        transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
    ])

    # Download and create the dataset
    dataset = datasets.CelebA(root='data', split='train', download=True,
                              transform=transform)

    # Create the dataloader
    dataloader = DataLoader(dataset, batch_size=batch_size, shuffle=True,
                            num_workers=4, pin_memory=True)

Defining the Weight Initialization Function

Proper weight initialization is crucial for the stable training of GANs. DCGAN recommends initializing the weights to follow a normal distribution with mean=0 and standard deviation=0.02.

    def weights_init_normal(m):
        classname = m.__class__.__name__
        if classname.find('Conv') != -1:
            nn.init.normal_(m.weight.data, 0.0, 0.02)
        elif classname.find('BatchNorm') != -1:
            nn.init.normal_(m.weight.data, 1.0, 0.02)
            nn.init.constant_(m.bias.data, 0)

Building the Generator and Discriminator

Generator: The Generator network transforms a latent vector into a 64×64 image using a series of transposed convolutional layers, batch normalization, and ReLU activations, with a final Tanh that maps pixel values to [-1, 1].
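The spatial sizes the Generator passes through follow from the standard output-size formula for a transposed convolution without dilation or output padding: out = (in - 1) * stride - 2 * padding + kernel. As a quick sanity check, the sketch below applies that formula to the kernel, stride, and padding settings used in this section's Generator. The helper name `conv_transpose_out` is made up for illustration.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Output size of a transposed convolution (no dilation, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel

# (kernel, stride, padding) for each ConvTranspose2d layer of a 64x64 DCGAN generator
layers = [(4, 1, 0), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1)]

size = 1  # the latent vector is treated as a 1x1 feature map
sizes = [size]
for kernel, stride, padding in layers:
    size = conv_transpose_out(size, kernel, stride, padding)
    sizes.append(size)

print(sizes)  # [1, 4, 8, 16, 32, 64]
```

The first layer expands the 1×1 input to 4×4, and each subsequent stride-2 layer doubles the resolution.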

    class Generator(nn.Module):
        def __init__(self, ngpu):
            super(Generator, self).__init__()
            self.ngpu = ngpu
            self.main = nn.Sequential(
                # Input: latent_dim x 1 x 1
                nn.ConvTranspose2d(latent_dim, 512, 4, 1, 0, bias=False),
                nn.BatchNorm2d(512),
                nn.ReLU(True),
                # State: 512 x 4 x 4
                nn.ConvTranspose2d(512, 256, 4, 2, 1, bias=False),
                nn.BatchNorm2d(256),
                nn.ReLU(True),
                # State: 256 x 8 x 8
                nn.ConvTranspose2d(256, 128, 4, 2, 1, bias=False),
                nn.BatchNorm2d(128),
                nn.ReLU(True),
                # State: 128 x 16 x 16
                nn.ConvTranspose2d(128, 64, 4, 2, 1, bias=False),
                nn.BatchNorm2d(64),
                nn.ReLU(True),
                # State: 64 x 32 x 32
                nn.ConvTranspose2d(64, channels, 4, 2, 1, bias=False),
                nn.Tanh()
                # Output: channels x 64 x 64
            )

        def forward(self, input):
            return self.main(input)

Discriminator: The Discriminator network evaluates whether an input image is real or fake by passing it through a series of convolutional layers, batch normalization, and LeakyReLU activations, culminating in a sigmoid activation to output a probability.

    class Discriminator(nn.Module):
        def __init__(self, ngpu):
            super(Discriminator, self).__init__()
            self.ngpu = ngpu
            self.main = nn.Sequential(
                # Input: channels x 64 x 64
                nn.Conv2d(channels, 64, 4, 2, 1, bias=False),
                nn.LeakyReLU(0.2, inplace=True),
                # State: 64 x 32 x 32
                nn.Conv2d(64, 128, 4, 2, 1, bias=False),
                nn.BatchNorm2d(128),
                nn.LeakyReLU(0.2, inplace=True),
                # State: 128 x 16 x 16
                nn.Conv2d(128, 256, 4, 2, 1, bias=False),
                nn.BatchNorm2d(256),
                nn.LeakyReLU(0.2, inplace=True),
                # State: 256 x 8 x 8
                nn.Conv2d(256, 512, 4, 2, 1, bias=False),
                nn.BatchNorm2d(512),
                nn.LeakyReLU(0.2, inplace=True),
                # State: 512 x 4 x 4
                nn.Conv2d(512, 1, 4, 1, 0, bias=False),
                nn.Sigmoid()
                # Output: 1 x 1 x 1
            )

        def forward(self, input):
            return self.main(input).view(-1, 1).squeeze(1)

Initializing the Models and Applying Weight Initialization

Instantiate the Generator and Discriminator, move them to the appropriate device (GPU or CPU), and apply the weight initialization function to ensure stable training.

    # Decide which device to use
    device = torch.device("cuda:0" if (torch.cuda.is_available() and ngpu > 0) else "cpu")

    # Create the generator
    netG = Generator(ngpu).to(device)

    # Apply the weights_init_normal function to randomly initialize all weights
    netG.apply(weights_init_normal)

    # Print the model
    print(netG)

    # Create the Discriminator
    netD = Discriminator(ngpu).to(device)

    # Apply the weights_init_normal function
    netD.apply(weights_init_normal)

    # Print the model
    print(netD)

Setting Up the Loss Function and Optimizers

Define the loss function and optimizers for both the Generator and Discriminator. Binary Cross-Entropy (BCE) loss is commonly used for GANs.

    # Loss function
    criterion = nn.BCELoss()

    # Create batch of latent vectors that we will use to visualize the progression of the generator
    fixed_noise = torch.randn(64, latent_dim, 1, 1, device=device)

    # Labels for real and fake images
    real_label = 1.
    fake_label = 0.

    # Setup Adam optimizers for both G and D
    optimizerD = optim.Adam(netD.parameters(), lr=lr, betas=(beta1, 0.999))
    optimizerG = optim.Adam(netG.parameters(), lr=lr, betas=(beta1, 0.999))

Training the DCGAN

The training loop involves alternating between training the Discriminator and the Generator. The Discriminator learns to distinguish real images from fake ones, while the Generator learns to produce images that can fool the Discriminator.
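To make the two objectives concrete, the following sketch evaluates the binary cross-entropy terms by hand for a single real image and a single generated image. The discriminator outputs `d_real` and `d_fake` are made-up numbers chosen for illustration, not values from an actual training run.

```python
import math

def bce(prediction, target):
    """Binary cross-entropy for one prediction in (0, 1) and a 0/1 target."""
    return -(target * math.log(prediction) + (1 - target) * math.log(1 - prediction))

d_real = 0.9  # hypothetical D(x): discriminator output on a real image
d_fake = 0.2  # hypothetical D(G(z)): discriminator output on a generated image

# Discriminator: real images are labeled 1, generated images are labeled 0
loss_d = bce(d_real, 1.0) + bce(d_fake, 0.0)

# Generator: generated images are labeled 1, so the loss reduces to -log(D(G(z)))
loss_g = bce(d_fake, 1.0)

print(f"loss_D = {loss_d:.4f}, loss_G = {loss_g:.4f}")
```

When the Discriminator confidently rejects a generated image (a low `d_fake`), the Generator's loss is large, and that is the signal that pushes it toward more convincing images.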

    # Lists to keep track of progress
    img_list = []
    G_losses = []
    D_losses = []
    iters = 0

    # Directory for the sample grids saved at the end of each epoch
    os.makedirs('output_images', exist_ok=True)

    print("Starting Training Loop...")
    for epoch in range(num_epochs):
        for i, data in enumerate(dataloader, 0):
            ############################
            # (1) Update D network
            ############################
            ## Train with all-real batch
            netD.zero_grad()
            real_images = data[0].to(device)
            b_size = real_images.size(0)
            label = torch.full((b_size,), real_label, dtype=torch.float, device=device)
            output = netD(real_images)
            errD_real = criterion(output, label)
            errD_real.backward()
            D_x = output.mean().item()

            ## Train with all-fake batch
            noise = torch.randn(b_size, latent_dim, 1, 1, device=device)
            fake_images = netG(noise)
            label.fill_(fake_label)
            output = netD(fake_images.detach())
            errD_fake = criterion(output, label)
            errD_fake.backward()
            D_G_z1 = output.mean().item()
            errD = errD_real + errD_fake
            optimizerD.step()

            ############################
            # (2) Update G network
            ############################
            netG.zero_grad()
            label.fill_(real_label)  # fake labels are real for generator cost
            output = netD(fake_images)
            errG = criterion(output, label)
            errG.backward()
            D_G_z2 = output.mean().item()
            optimizerG.step()

            # Save Losses for plotting later
            G_losses.append(errG.item())
            D_losses.append(errD.item())

            # Check how the generator is doing by saving G's output on fixed_noise
            if (iters % 500 == 0) or ((epoch == num_epochs-1) and (i == len(dataloader)-1)):
                with torch.no_grad():
                    fake = netG(fixed_noise).detach().cpu()
                img_grid = vutils.make_grid(fake, padding=2, normalize=True)
                img_list.append(img_grid)
                plt.figure(figsize=(8,8))
                plt.axis("off")
                plt.title(f"Epoch {epoch+1}")
                plt.imshow(np.transpose(img_grid, (1,2,0)))
                plt.show()

            iters += 1

        # Save images at the end of each epoch
        with torch.no_grad():
            fake = netG(fixed_noise).detach().cpu()
        vutils.save_image(fake, f"output_images/epoch_{epoch+1}.png", normalize=True)

Visualizing Training Progress

After training, visualize the Generator and Discriminator losses to assess the training stability and the quality of generated images over epochs.

    import matplotlib.animation as animation  # required for ArtistAnimation below

    # Plot the losses
    plt.figure(figsize=(10,5))
    plt.title("Generator and Discriminator Loss During Training")
    plt.plot(G_losses, label="G")
    plt.plot(D_losses, label="D")
    plt.xlabel("Iterations")
    plt.ylabel("Loss")
    plt.legend()
    plt.show()

    # Visualize the progression of generated images
    fig = plt.figure(figsize=(8,8))
    plt.axis("off")
    ims = [[plt.imshow(np.transpose(img, (1,2,0)), animated=True)] for img in img_list]
    ani = animation.ArtistAnimation(fig, ims, interval=1000, repeat_delay=1000, blit=True)

    # To save the animation, uncomment the following line:
    # ani.save('dcgan_training_progress.gif', writer='imagemagick')
    plt.show()

Enhancements and Advanced Techniques

While the above implementation provides a robust foundation for understanding DCGANs, several advanced techniques can further enhance the model’s performance and stability:

Spectral Normalization: This technique normalizes the weights of the Discriminator to stabilize training and prevent mode collapse by controlling the Lipschitz constant of the network.

Wasserstein GAN (WGAN): By utilizing the Wasserstein distance instead of BCE loss, WGANs offer smoother gradients and more stable training dynamics, reducing the likelihood of mode collapse.

Gradient Penalty: Implementing a gradient penalty in WGANs enforces the Lipschitz constraint more effectively, further enhancing training stability.

Progressive Growing: Gradually increasing the resolution of generated images allows the model to learn coarse features before fine details, resulting in higher-quality outputs.

Conditional GANs (cGANs): Incorporating additional information, such as class labels, enables the generation of specific categories of data, enhancing control over the generated content.

Implementing these advanced techniques requires a deeper understanding of GAN architectures and training methodologies but can significantly improve the quality and diversity of generated samples.
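As an illustration of the first item, spectral normalization divides a weight matrix by its largest singular value, which is usually estimated with a few steps of power iteration. The NumPy sketch below shows only that core computation on a random matrix. The function name `spectral_normalize` and the iteration count are assumptions made for this example. In PyTorch, the built-in `torch.nn.utils.spectral_norm` wrapper applies the same idea to a layer.

```python
import numpy as np

def spectral_normalize(weight, n_iters=100, seed=0):
    """Return (weight / sigma, sigma), where sigma estimates the largest singular value."""
    rng = np.random.default_rng(seed)
    u = rng.normal(size=weight.shape[0])
    for _ in range(n_iters):
        # Alternate between right and left singular-vector estimates
        v = weight.T @ u
        v /= np.linalg.norm(v)
        u = weight @ v
        u /= np.linalg.norm(u)
    sigma = u @ weight @ v  # estimate of the top singular value
    return weight / sigma, sigma

rng = np.random.default_rng(42)
W = rng.normal(size=(64, 128))  # stand-in for a layer's weight matrix
W_sn, sigma = spectral_normalize(W)

exact = np.linalg.norm(W, 2)  # exact largest singular value, for comparison
print("estimated sigma:", sigma)
print("exact sigma:    ", exact)
print("spectral norm after normalization:", np.linalg.norm(W_sn, 2))
```

After normalization the matrix has a spectral norm close to 1, which bounds how much the layer can stretch its input and is what keeps the Discriminator's Lipschitz constant under control.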

The Impact of Generative Modeling Across Industries

The advancements in generative modeling have not only demonstrated the creative potential of AI but have also introduced transformative changes across various industries. In the entertainment and media sectors, generative models automate the creation of scripts, storyboards, and promotional materials, enabling faster production cycles and personalized content tailored to individual preferences. This automation enhances engagement and allows creators to focus on more nuanced aspects of storytelling and production.

In healthcare, generative models play a pivotal role in drug discovery by generating molecular structures with desired properties, accelerating the identification of promising pharmaceuticals. They also aid in medical imaging by synthesizing realistic medical images for training and diagnostic purposes, improving the accuracy and efficiency of medical professionals without compromising patient privacy.

The financial industry benefits from generative models through the generation of synthetic financial data for testing algorithms, ensuring robustness without exposing sensitive information. Additionally, these models assist in risk assessment by modeling complex financial scenarios to predict and mitigate potential risks, enhancing decision-making processes.

In the automotive sector, generative models revolutionize design and prototyping by generating design variations for vehicles, facilitating rapid iteration and innovation. They also enhance simulation processes by creating realistic driving scenarios for testing autonomous vehicles, improving safety and reliability.

The fashion and retail industries leverage generative models to automate the creation of clothing designs, enabling brands to explore a vast array of styles and trends with minimal manual input. Virtual try-ons powered by generative models generate realistic images of products on virtual models, enhancing the online shopping experience by allowing customers to visualize products in various settings and on different body types.

Ethical Considerations and Challenges

While generative modeling offers immense benefits, it also raises critical ethical considerations that must be diligently addressed. The ability to create highly realistic images and videos, often referred to as deepfakes, poses significant risks to privacy, security, and trust. Deepfakes can be exploited to create misleading or harmful content, potentially undermining public trust and facilitating the spread of misinformation.

Intellectual property concerns arise when generative models are trained on existing works, as they may inadvertently replicate or infringe upon copyrighted material. Ensuring that generative models do not violate intellectual property rights is essential to prevent legal and ethical violations.

Bias and fairness are additional concerns, as generative models trained on biased datasets can perpetuate or amplify existing biases, leading to unfair or discriminatory outputs. It is imperative to curate training datasets carefully and implement techniques to mitigate biases to ensure that generated content is fair and inclusive.

The dual-use nature of generative AI necessitates thoughtful regulation and oversight to prevent misuse while fostering innovation. Collaborative efforts between researchers, policymakers, and industry stakeholders are essential to establish guidelines, develop detection tools, and promote responsible AI practices that harness the full potential of generative modeling without compromising ethical standards.

Section 4: Generative Modeling and the Future of AI

As artificial intelligence (AI) continues to evolve, the role of generative modeling becomes increasingly pivotal in shaping the future landscape of intelligent systems. While discriminative models have laid the groundwork for AI’s capabilities in classification and prediction, generative models are poised to drive the next wave of advancements by enabling machines to understand, simulate, and create data in ways that mirror human intelligence. This section delves into why generative modeling is essential for the evolution of AI, its theoretical significance, its role in reinforcement learning, and how it aligns with the generative capacities inherent in human cognition.

Generative Modeling: The Cornerstone of AI Evolution

Generative modeling represents a fundamental shift in how AI systems perceive and interact with the world. Unlike discriminative models, which focus on drawing boundaries between different classes of data, generative models aim to capture the underlying distribution of data, enabling them to generate new, plausible instances that resemble the training data. This capability is not merely an extension of classification tasks but signifies a deeper level of understanding and interaction with data. By modeling the full data distribution, generative models can perform a wide array of tasks, including data augmentation, anomaly detection, and creative content generation, which are beyond the scope of traditional discriminative approaches.

The evolution of AI hinges on its ability to move beyond passive analysis and into active creation and simulation. Generative models empower AI systems to not only recognize patterns and make predictions but also to generate new data that can be used for training, testing, and interacting with environments in a meaningful way. This transformation is crucial for developing AI that can adapt to new situations, generate novel solutions, and exhibit a form of creativity that is essential for tackling complex, real-world problems.

Theoretical Importance: Beyond Classification to Comprehensive Data Understanding

From a theoretical standpoint, the significance of generative modeling lies in its capacity to provide a more holistic understanding of data. Classification tasks, while important, represent only a slice of the broader spectrum of data interactions. By focusing solely on predicting labels, discriminative models inherently limit their scope to what is observable and measurable within predefined categories. In contrast, generative models strive to comprehend the entirety of the data’s structure and variability, capturing intricate relationships and dependencies that define the data’s essence.

This comprehensive understanding is foundational for AI systems that aspire to achieve human-like intelligence. Humans do not merely categorize objects and events; they imagine variations, predict future scenarios, and simulate different realities based on their experiences and knowledge. Similarly, for AI to reach advanced levels of intelligence, it must develop the ability to generate and manipulate data in a manner that reflects a deep comprehension of the underlying distributions and patterns. Generative models provide the theoretical framework necessary for this level of sophistication, enabling AI to engage in tasks that require creativity, adaptability, and nuanced decision-making.

Generative Modeling in Reinforcement Learning: Training Robots with World Models

One of the most compelling applications of generative modeling lies in the realm of reinforcement learning (RL), particularly in training autonomous agents and robots. Traditional reinforcement learning approaches involve agents interacting with real-world environments or highly detailed simulations, learning optimal behaviors through trial and error. However, this process can be computationally expensive, time-consuming, and sometimes impractical, especially when real-world trials pose safety risks or require substantial resources.

Generative models address these challenges by enabling the creation of world models, which are simplified, abstracted representations of the environment. These world models simulate the dynamics of the environment, allowing agents to predict the outcomes of their actions without the need for continuous interaction with the actual environment. By training robots using these world models, RL agents can explore a vast array of scenarios, learn from diverse experiences, and optimize their strategies in a controlled and efficient manner.

For example, consider a robot tasked with navigating complex terrain. Training this robot solely in the real world would involve numerous trials, each potentially risking damage to the robot or the environment. Instead, a generative world model can simulate various terrains, enabling the robot to practice and refine its navigation strategies in a virtual setting. This approach not only accelerates the training process but also enhances the robot’s ability to generalize its learned behaviors to real-world scenarios.

Generative Models Mimicking Human Intelligence

Human intelligence is inherently generative. Humans possess the remarkable ability to imagine variations of existing concepts, predict future events, and simulate different realities in their minds. This generative capacity underpins our creativity, problem-solving skills, and adaptability. To achieve a comparable level of intelligence, AI systems must develop similar generative abilities, allowing them to conceive novel ideas, anticipate outcomes, and navigate complex, dynamic environments.

Generative models in AI aim to replicate these human-like generative processes by learning to create and manipulate data in ways that reflect a deep understanding of the underlying structures and patterns. For instance, in creative fields such as art and music, generative models can produce original pieces that exhibit stylistic coherence and innovation, akin to human artists. In predictive analytics, these models can simulate future trends based on historical data, providing valuable insights for decision-making.

Moreover, the ability to generate and manipulate data is crucial for developing AI systems that can interact seamlessly with humans and adapt to ever-changing circumstances. By embodying generative capabilities, AI can enhance its role as a collaborative tool, augmenting human creativity and intelligence rather than merely serving as a reactive system.

Advanced Code Example: Integrating a Generative World Model with Reinforcement Learning

To illustrate the practical integration of generative modeling within reinforcement learning, let’s explore a detailed implementation that combines a Variational Autoencoder (VAE) as a world model with a reinforcement learning agent using Proximal Policy Optimization (PPO). This example demonstrates how a generative model can simulate an environment, allowing an RL agent to train efficiently.

Importing Necessary Libraries

First, we import the essential libraries required for building the VAE, PPO agent, and managing the training process.

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torch.distributions import Categorical
    import gym
    import numpy as np
    from torch.utils.data import DataLoader, TensorDataset
    import matplotlib.pyplot as plt

Defining the Variational Autoencoder (VAE) as the World Model

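The VAE will learn to encode observations into a latent space and decode latent vectors back into observations. On its own, this models the distribution of observations rather than the environment's transition dynamics; a full world model would add a component that predicts the next latent state from the current latent state and the chosen action.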

    class VAE(nn.Module):
        def __init__(self, input_dim, latent_dim):
            super(VAE, self).__init__()
            # Encoder
            self.encoder = nn.Sequential(
                nn.Linear(input_dim, 512),
                nn.ReLU(),
                nn.Linear(512, 256),
                nn.ReLU(),
            )
            self.fc_mu = nn.Linear(256, latent_dim)
            self.fc_logvar = nn.Linear(256, latent_dim)
            # Decoder
            self.decoder = nn.Sequential(
                nn.Linear(latent_dim, 256),
                nn.ReLU(),
                nn.Linear(256, 512),
                nn.ReLU(),
                nn.Linear(512, input_dim),
                # Sigmoid assumes inputs are scaled to [0, 1]. Raw CartPole
                # observations are not, so rescale them or remove this layer.
                nn.Sigmoid(),
            )

        def encode(self, x):
            h = self.encoder(x)
            mu = self.fc_mu(h)
            logvar = self.fc_logvar(h)
            return mu, logvar

        def reparameterize(self, mu, logvar):
            # Sample z = mu + sigma * eps so gradients can flow through mu and logvar
            std = torch.exp(0.5 * logvar)
            eps = torch.randn_like(std)
            return mu + eps * std

        def decode(self, z):
            return self.decoder(z)

        def forward(self, x):
            mu, logvar = self.encode(x)
            z = self.reparameterize(mu, logvar)
            reconstructed = self.decode(z)
            return reconstructed, mu, logvar

Training the VAE

We train the VAE using observations collected from the real environment. The VAE learns to compress and reconstruct observations, capturing the essential features of the environment.
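The VAE loss combines a reconstruction term with the KL divergence between the encoder's Gaussian N(mu, sigma^2) and a standard normal prior. That divergence has the closed form 0.5 * sum(mu^2 + sigma^2 - log(sigma^2) - 1), which is the same quantity as the `-0.5 * sum(1 + logvar - mu^2 - exp(logvar))` expression used in the training code. The sketch below evaluates it for a few hand-picked values; the helper name `kl_to_standard_normal` is illustrative.

```python
import math

def kl_to_standard_normal(mu, logvar):
    """KL( N(mu, exp(logvar)) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(m ** 2 + math.exp(lv) - lv - 1 for m, lv in zip(mu, logvar))

# A posterior that already matches the prior incurs no penalty
kl_zero = kl_to_standard_normal([0.0, 0.0], [0.0, 0.0])

# Shifting the mean away from zero is penalized
kl_shifted = kl_to_standard_normal([1.0, 0.0], [0.0, 0.0])

# Shrinking the variance (here sigma^2 = 0.25 in the first dimension) is penalized too
kl_narrow = kl_to_standard_normal([0.0, 0.0], [math.log(0.25), 0.0])

print(kl_zero, kl_shifted, kl_narrow)
```

The KL term therefore pulls every encoded distribution toward the prior, while the reconstruction term pulls in the opposite direction by rewarding latents that retain information about the input.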

    def train_vae(env, vae, epochs=10, batch_size=128, learning_rate=1e-3):
        optimizer = optim.Adam(vae.parameters(), lr=learning_rate)
        # MSE is used for reconstruction because CartPole observations are
        # real-valued and not confined to [0, 1], which BCELoss would require.
        # If the decoder ends in a Sigmoid, rescale the observations to [0, 1]
        # (or drop that layer) so the targets are reachable.
        criterion = nn.MSELoss(reduction='sum')

        # Collect data from the environment.
        # Assumes the classic Gym API (gym < 0.26): reset() returns the
        # observation and step() returns (obs, reward, done, info).
        data = []
        state = env.reset()
        for _ in range(10000):  # Collect 10,000 observations
            action = env.action_space.sample()
            next_state, reward, done, _ = env.step(action)
            data.append(state)
            state = next_state
            if done:
                state = env.reset()

        # Stack into one array first; building a tensor from a list of arrays is slow
        data = torch.tensor(np.array(data), dtype=torch.float32)
        dataset = TensorDataset(data)
        dataloader = DataLoader(dataset, batch_size=batch_size, shuffle=True)

        vae.train()
        for epoch in range(epochs):
            total_loss = 0
            for batch in dataloader:
                inputs = batch[0]
                optimizer.zero_grad()
                reconstructed, mu, logvar = vae(inputs)
                # Reconstruction loss
                recon_loss = criterion(reconstructed, inputs)
                # KL divergence
                kl_loss = -0.5 * torch.sum(1 + logvar - mu.pow(2) - logvar.exp())
                loss = recon_loss + kl_loss
                loss.backward()
                optimizer.step()
                total_loss += loss.item()
            print(f"Epoch {epoch+1}, Loss: {total_loss/len(dataloader.dataset):.4f}")
        return vae

Defining the Proximal Policy Optimization (PPO) Agent

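The PPO agent selects its actions from the VAE's reconstructed observations rather than from the raw ones, learning to optimize its policy through the lens of the generative model. Note that in this simplified example the transitions and rewards still come from the real environment.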

    class PPOAgent(nn.Module):
        def __init__(self, state_dim, action_dim, hidden_dim=128):
            super(PPOAgent, self).__init__()
            # Policy network
            self.policy = nn.Sequential(
                nn.Linear(state_dim, hidden_dim),
                nn.ReLU(),
                nn.Linear(hidden_dim, action_dim),
                nn.Softmax(dim=-1),
            )
            # Value network
            self.value = nn.Sequential(
                nn.Linear(state_dim, hidden_dim),
                nn.ReLU(),
                nn.Linear(hidden_dim, 1),
            )

        def forward(self, x):
            policy_dist = self.policy(x)
            value = self.value(x)
            return policy_dist, value

Implementing the PPO Algorithm

The PPO algorithm optimizes the policy by maximizing a clipped surrogate objective, ensuring stable and efficient learning.
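The effect of clipping is easiest to see on single numbers. For a probability ratio r and an advantage A, the clipped surrogate is min(r * A, clip(r, 1 - eps, 1 + eps) * A). The sketch below uses eps = 0.2 and hand-picked ratios; the function name `clipped_surrogate` is illustrative.

```python
def clipped_surrogate(ratio, advantage, eps_clip=0.2):
    """PPO clipped surrogate objective for a single (ratio, advantage) pair."""
    clipped_ratio = max(1 - eps_clip, min(ratio, 1 + eps_clip))
    return min(ratio * advantage, clipped_ratio * advantage)

# Positive advantage: the gain from raising the action's probability is capped at 1 + eps
capped_gain = clipped_surrogate(ratio=1.5, advantage=1.0)

# Negative advantage: pushing the probability below 1 - eps earns no extra credit
capped_cut = clipped_surrogate(ratio=0.5, advantage=-1.0)

# Moving in the wrong direction is never clipped, so the penalty is felt in full
full_penalty = clipped_surrogate(ratio=1.5, advantage=-1.0)

print(capped_gain, capped_cut, full_penalty)
```

Because the objective stops improving once the ratio leaves the interval [1 - eps, 1 + eps] in the favorable direction, a single batch cannot push the policy arbitrarily far from the one that collected the data.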

    def ppo_update(agent, optimizer, states, actions, rewards, dones, next_states,
                   gamma=0.99, eps_clip=0.2, K_epochs=4):
        # Compute one-step TD targets, advantages, and the behavior policy's
        # log-probabilities once, before any update, and treat them as constants.
        with torch.no_grad():
            values = agent.value(states).reshape(-1)
            next_values = agent.value(next_states).reshape(-1)
            returns = rewards.reshape(-1) + gamma * next_values * (1 - dones.reshape(-1))
            advantages = returns - values
            old_policy_dist, _ = agent(states)
            old_log_probs = Categorical(old_policy_dist).log_prob(actions)

        for _ in range(K_epochs):
            # Recompute policy distribution and value estimates
            policy_dist, value = agent(states)
            dist = Categorical(policy_dist)
            log_probs = dist.log_prob(actions)
            entropy = dist.entropy().mean()

            # Compute the ratio (pi_theta / pi_theta_old). Taking old_log_probs
            # inside this loop would pin the ratio at 1 and disable clipping.
            ratio = torch.exp(log_probs - old_log_probs)

            # Compute surrogate loss
            surr1 = ratio * advantages
            surr2 = torch.clamp(ratio, 1 - eps_clip, 1 + eps_clip) * advantages
            policy_loss = -torch.min(surr1, surr2).mean()
            value_loss = nn.MSELoss()(value.reshape(-1), returns)
            loss = policy_loss + 0.5 * value_loss - 0.01 * entropy

            # Take gradient step
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

Integrating the VAE World Model with the PPO Agent

We combine the VAE and PPO agent to create a simulated environment where the agent can train using the generative world model.

    def train_agent_with_world_model(env, vae, agent, epochs=50, batch_size=64, learning_rate=1e-4):
        optimizer = optim.Adam(agent.parameters(), lr=learning_rate)
        for epoch in range(epochs):
            state = env.reset()
            done = False
            while not done:
                # Encode the current state to the latent space
                with torch.no_grad():
                    state_tensor = torch.tensor(state, dtype=torch.float32).unsqueeze(0)
                    mu, logvar = vae.encode(state_tensor)
                    z = vae.reparameterize(mu, logvar)
                    simulated_state = vae.decode(z).squeeze().numpy()

                # Select action based on the agent's policy
                state_sim = torch.tensor(simulated_state, dtype=torch.float32).unsqueeze(0)
                policy_dist, value = agent(state_sim)
                dist = Categorical(policy_dist)
                action = dist.sample()

                # Interact with the real environment to get the next state
                next_state, reward, done, _ = env.step(action.item())

                # Encode the next state
                with torch.no_grad():
                    next_state_tensor = torch.tensor(next_state, dtype=torch.float32).unsqueeze(0)
                    mu_next, logvar_next = vae.encode(next_state_tensor)
                    z_next = vae.reparameterize(mu_next, logvar_next)
                    simulated_next_state = vae.decode(z_next).squeeze().numpy()

                # Store transitions
                transitions = {
                    'states': state_sim,
                    'actions': action,
                    'rewards': torch.tensor(reward, dtype=torch.float32),
                    'dones': torch.tensor(done, dtype=torch.float32),
                    'next_states': torch.tensor(simulated_next_state, dtype=torch.float32)
                }

                # Perform PPO update
                ppo_update(agent, optimizer,
                           transitions['states'], transitions['actions'],
                           transitions['rewards'], transitions['dones'],
                           transitions['next_states'])

                state = next_state
            print(f"Epoch {epoch+1} completed.")

Training Pipeline

We bring everything together by initializing the environment, training the VAE, and then training the PPO agent using the trained VAE as the world model.

    if __name__ == "__main__":
        # Initialize environment
        env = gym.make('CartPole-v1')
        state_dim = env.observation_space.shape[0]
        action_dim = env.action_space.n

        # Initialize VAE
        vae = VAE(input_dim=state_dim, latent_dim=32)
        print("Training VAE...")
        train_vae(env, vae, epochs=20, batch_size=128, learning_rate=1e-3)

        # Initialize PPO Agent. The agent receives the VAE's decoded observations,
        # which have state_dim features (not the 32-dimensional latent vector),
        # so its input size must be state_dim.
        agent = PPOAgent(state_dim=state_dim, action_dim=action_dim)
        print("Training PPO Agent with World Model...")
        train_agent_with_world_model(env, vae, agent, epochs=50, batch_size=64, learning_rate=1e-4)

        # Save models
        torch.save(vae.state_dict(), "vae_world_model.pth")
        torch.save(agent.state_dict(), "ppo_agent.pth")
        env.close()

Evaluating the Trained Agent

After training, we evaluate the PPO agent by running it in the environment while feeding it the VAE's reconstructed observations, to assess its ability to perform the task.

    def evaluate_agent(env, vae, agent, episodes=10):
        agent.eval()
        total_rewards = []
        for episode in range(episodes):
            state = env.reset()
            done = False
            episode_reward = 0
            while not done:
                # Encode state to latent space
                with torch.no_grad():
                    state_tensor = torch.tensor(state, dtype=torch.float32).unsqueeze(0)
                    mu, logvar = vae.encode(state_tensor)
                    z = vae.reparameterize(mu, logvar)
                    simulated_state = vae.decode(z).squeeze().numpy()

                # Select action
                state_sim = torch.tensor(simulated_state, dtype=torch.float32).unsqueeze(0)
                policy_dist, _ = agent(state_sim)
                dist = Categorical(policy_dist)
                action = dist.sample().item()

                # Interact with the real environment
                next_state, reward, done, _ = env.step(action)
                episode_reward += reward
                state = next_state
            total_rewards.append(episode_reward)
            print(f"Episode {episode+1}: Reward = {episode_reward}")
        average_reward = np.mean(total_rewards)
        print(f"Average Reward over {episodes} episodes: {average_reward}")

    # Evaluate the trained agent
    print("Evaluating the trained PPO Agent...")
    evaluate_agent(env, vae, agent, episodes=10)

Discussion of the Implementation

In this implementation, the VAE serves as a simplified stand-in for a generative world model. It learns a compressed representation of the environment's observations, and the PPO agent acts on the VAE's reconstructions, but the code above still steps the real environment to obtain transitions and rewards. A full world model would also learn the transition dynamics and rewards, so that the agent could train on simulated rollouts without the need for real-world trials. That fuller approach offers several advantages:

Efficiency: Training within a simulated environment significantly reduces the computational and temporal resources required compared to interacting with the real environment.

Safety: Especially in scenarios where real-world trials could be hazardous (e.g., training robots in dangerous terrains), using a generative world model ensures that learning occurs in a safe, controlled setting.

Scalability: The generative model can simulate a wide variety of scenarios, providing the agent with diverse experiences that enhance its ability to generalize and adapt to new situations.

Data Augmentation: The VAE can generate additional data samples, enriching the training dataset and improving the agent’s performance by exposing it to a broader range of experiences.

However, this integration also introduces challenges. The quality of the generative world model directly impacts the agent’s learning efficacy. If the VAE fails to accurately capture the environment’s dynamics, the agent may learn suboptimal or even detrimental behaviors. Therefore, ensuring that the generative model is sufficiently robust and representative of the real environment is paramount for the success of this approach.
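To make the idea of training on simulated experience concrete, here is a minimal sketch of a dynamics model that predicts the next state from the current state and action, is fitted by least squares, and is then rolled forward without touching the environment. It deliberately swaps the VAE for a linear model to keep the example short, and the toy system (the matrices `A_true` and `B_true`) is invented purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "real" environment: a noisy linear system s' = A s + B a
A_true = np.array([[1.0, 0.1], [0.0, 0.9]])
B_true = np.array([[0.0], [0.5]])

def real_step(state, action):
    return A_true @ state + B_true @ action + rng.normal(0, 0.01, size=2)

# 1) Collect transitions from the real environment with random actions
states, actions, next_states = [], [], []
state = np.zeros(2)
for _ in range(500):
    action = rng.uniform(-1, 1, size=1)
    nxt = real_step(state, action)
    states.append(state)
    actions.append(action)
    next_states.append(nxt)
    state = nxt

# 2) Fit the world model: next_state ~ [state, action] @ theta
X = np.hstack([np.array(states), np.array(actions)])  # shape (500, 3)
Y = np.array(next_states)                             # shape (500, 2)
theta, *_ = np.linalg.lstsq(X, Y, rcond=None)         # shape (3, 2)

def model_step(state, action):
    return np.concatenate([state, action]) @ theta

# 3) Imagined rollout: ten steps using only the learned model
imagined = np.array([1.0, 0.0])
actual = np.array([1.0, 0.0])
for _ in range(10):
    action = np.array([0.5])
    imagined = model_step(imagined, action)
    actual = A_true @ actual + B_true @ action  # noise-free reference trajectory

print("imagined:", imagined)
print("actual:  ", actual)
```

An agent could be trained against `model_step` instead of `real_step`. As the paragraph above warns, any error in the fitted model compounds over the rollout, so the imagined trajectory is only as trustworthy as the model behind it.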

Generative Models Mimicking Human Intelligence

Humans possess an innate ability to imagine, predict, and simulate various scenarios based on their experiences and knowledge. This generative capacity allows us to anticipate future events, devise creative solutions, and adapt to new challenges seamlessly. For AI systems to achieve a comparable level of intelligence, they must develop similar generative abilities that enable them to perform these complex cognitive tasks.

Generative models in AI emulate this aspect of human intelligence by learning to create and manipulate data in ways that reflect a deep understanding of the underlying patterns and structures. For instance, in creative endeavors such as art and music, generative models can produce original works that exhibit stylistic coherence and innovation, paralleling human creativity. In predictive analytics, these models can simulate future trends and outcomes based on historical data, providing valuable insights for decision-making processes.

Moreover, generative models enhance AI’s adaptability by allowing systems to simulate and plan for a multitude of potential scenarios. This capability is crucial for applications requiring foresight and strategic planning, such as autonomous driving, where anticipating and reacting to a variety of road conditions and unexpected events is essential for safety and efficiency.

The Imperative for AI to Develop Generative Abilities

To transcend the limitations of current AI systems and move towards advanced intelligence, it is imperative that AI develops robust generative capabilities. This involves not only the ability to generate realistic data but also the capacity to understand and manipulate complex environments, predict outcomes, and devise creative solutions. Generative modeling serves as the foundation for these capabilities, providing AI systems with the tools to engage in more sophisticated, human-like reasoning and problem-solving.

As AI continues to integrate into various facets of society, the demand for systems that can interact naturally, adapt dynamically, and exhibit creativity becomes increasingly pronounced. Generative models fulfill these requirements by enabling AI to generate and refine data in a manner that is both intelligent and contextually relevant. This advancement is crucial for developing AI that can serve as a true collaborator, augmenting human capabilities and contributing meaningfully to diverse domains such as healthcare, education, entertainment, and beyond.

Section 5: A Simple Example — Your First Generative Model

To truly grasp the essence of generative modeling, it is invaluable to start with a straightforward, illustrative example. This section presents a toy generative modeling example that encapsulates the fundamental principles of the field. Through this example, we will explore how to estimate an underlying distribution, employ a simple box model to generate new points, and understand the conceptual framework that defines a robust generative model in terms of accuracy, generation capability, and meaningful representation.

A Toy Generative Modeling Example: Points Generated by an Unknown Rule

Imagine you are presented with a set of points plotted on a two-dimensional plane. These points are the result of an unknown rule that governs their distribution. Your objective is to understand this rule and develop a model that can generate new points resembling those in the original set. This scenario is a quintessential example of generative modeling: given a dataset, infer the underlying distribution and use it to create new, plausible data points.

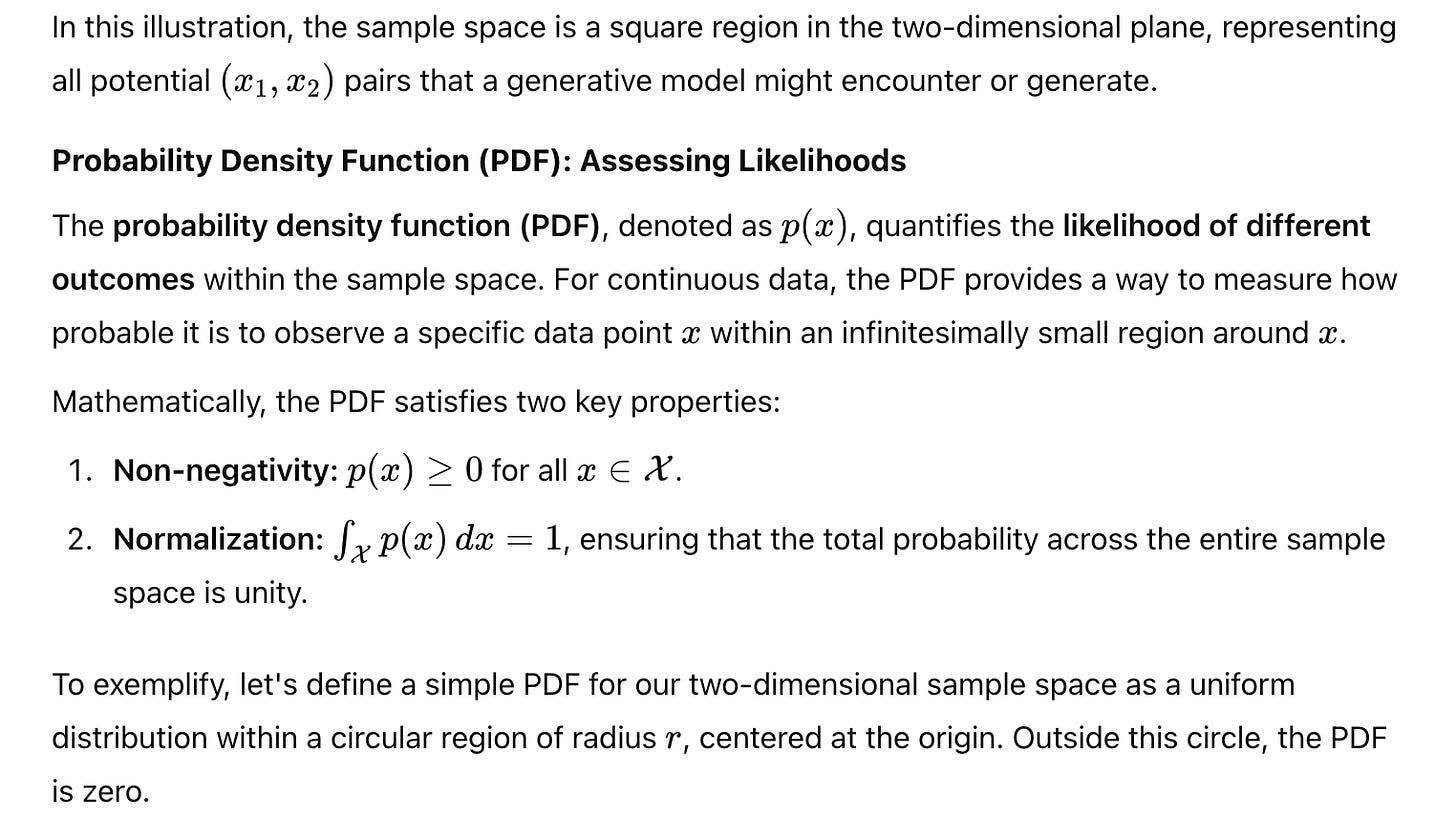

Consider the following illustration:

Figure 5–1: A set of points in two dimensions, generated by an unknown rule.

The distribution of these points is not immediately apparent, and discerning the pattern or rule that generated them requires careful analysis and modeling. The challenge lies in capturing the nuances of the data to generate new points that seamlessly integrate into the existing set.

Estimating the Underlying Distribution

The first step in generative modeling is to estimate the underlying distribution that governs the data. In our toy example, the points are scattered within a specific region of the plane, suggesting that there is a higher probability of finding points within this area and a lower probability elsewhere.

To estimate this distribution, we can employ various statistical and machine learning techniques. However, for simplicity and clarity, we will adopt a rudimentary approach: assuming that the data points are uniformly distributed within a bounded region. This assumption forms the basis of our simple box model, which we will explore in the next section.

Before proceeding, it’s essential to visualize the distribution of the data points to inform our modeling strategy. Using Python’s matplotlib and numpy libraries, we can plot the points and observe their spread:

```python
import numpy as np
import matplotlib.pyplot as plt

# Generate toy data: points within a circle with some noise
np.random.seed(42)
num_points = 500
radius = 10
angles = 2 * np.pi * np.random.rand(num_points)
radii = radius * np.sqrt(np.random.rand(num_points))
x = radii * np.cos(angles) + np.random.normal(0, 1, num_points)
y = radii * np.sin(angles) + np.random.normal(0, 1, num_points)

# Plot the original data points
plt.figure(figsize=(6,6))
plt.scatter(x, y, alpha=0.5, edgecolors='w', s=50)
plt.title('Original Data Points')
plt.xlabel('X-axis')
plt.ylabel('Y-axis')
plt.grid(True)
plt.show()
```

The plot reveals that the points are predominantly concentrated within a circular region, with occasional outliers. This visualization aids in formulating our initial hypothesis about the data’s distribution, guiding the development of our generative model.

Using a Simple Box Model to Generate New Points

Given the observed distribution, a logical starting point is to employ a box model, which assumes that the data points are uniformly distributed within a rectangular boundary. This model simplifies the complexity of the underlying distribution, allowing us to generate new points by sampling uniformly within the defined bounds.

To implement the box model, we first determine the minimum and maximum values along both the X and Y axes from the original data. These values define the boundaries of our box.

```python
# Determine the boundaries of the box
x_min, x_max = x.min(), x.max()
y_min, y_max = y.min(), y.max()
print(f"X-axis range: {x_min:.2f} to {x_max:.2f}")
print(f"Y-axis range: {y_min:.2f} to {y_max:.2f}")
```

Output:

```
X-axis range: -10.62 to 10.61
Y-axis range: -10.56 to 10.59
```

With these boundaries, we can define our box model and generate new points by uniformly sampling within these ranges:

```python
# Function to generate new points using the box model
def generate_box_model_points(n_points, x_min, x_max, y_min, y_max):
    new_x = np.random.uniform(x_min, x_max, n_points)
    new_y = np.random.uniform(y_min, y_max, n_points)
    return new_x, new_y

# Generate new points
new_x_box, new_y_box = generate_box_model_points(num_points, x_min, x_max, y_min, y_max)

# Plot the generated points
plt.figure(figsize=(6,6))
plt.scatter(new_x_box, new_y_box, alpha=0.5, color='orange', edgecolors='w', s=50)
plt.title('Generated Points using Box Model')
plt.xlabel('X-axis')
plt.ylabel('Y-axis')
plt.grid(True)
plt.show()
```

At first glance, the box model seems to capture the general spread of the original data. On closer inspection, however, it places a significant number of points where the original data has few or none, most visibly in the corners of the box, which lie outside the circular region. This discrepancy highlights the limitations of oversimplifying the underlying distribution, emphasizing the need for more nuanced models in generative tasks.
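The mismatch between the box and the circular region can also be put into numbers. The sketch below rebuilds the toy data with the same seed and counts how many points lie farther than `radius` from the origin, once for the original data and once for box-model samples. The helper `fraction_outside`, the second random generator and the sample size of 5000 are assumptions made for this illustration. As a rough reference, the corners of a square with an inscribed disc make up 1 − π/4, or about 21%, of the square's area; the box here is somewhat larger than that square because of the added noise.

```python
import numpy as np

# Rebuild the toy data as in the article (same seed, same call order).
np.random.seed(42)
num_points = 500
radius = 10
angles = 2 * np.pi * np.random.rand(num_points)
radii = radius * np.sqrt(np.random.rand(num_points))
x = radii * np.cos(angles) + np.random.normal(0, 1, num_points)
y = radii * np.sin(angles) + np.random.normal(0, 1, num_points)

# Box model: uniform samples inside the bounding rectangle of the data.
rng = np.random.default_rng(0)  # separate generator; the seed is arbitrary
n_samples = 5000                # more samples give a steadier estimate
box_x = rng.uniform(x.min(), x.max(), n_samples)
box_y = rng.uniform(y.min(), y.max(), n_samples)

def fraction_outside(px, py, r):
    """Share of points lying farther than r from the origin (hypothetical helper)."""
    return float(np.mean(np.hypot(px, py) > r))

frac_orig = fraction_outside(x, y, radius)
frac_box = fraction_outside(box_x, box_y, radius)
print(f"Original data outside the circle: {frac_orig:.1%}")
print(f"Box samples outside the circle:   {frac_box:.1%}")
```

Some original points fall outside the circle too, because of the Gaussian noise, so the useful comparison is between the two fractions rather than against zero.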

Conceptual Framework: Accuracy, Generation, and Representation

To evaluate the effectiveness of our generative model, we must consider three key aspects: accuracy, generation capability, and representation. These components form the conceptual framework that defines the quality and utility of any generative model.

Accuracy: The model’s ability to ensure that generated data points resemble the real data is paramount. High accuracy means that the synthetic data maintains the essential characteristics of the original dataset, minimizing the introduction of unrealistic or irrelevant points. In our box model example, while the generated points cover the entire range of the data, they fail to accurately reflect the denser regions and the circular pattern of the original data, resulting in decreased accuracy.

Generation Capability: This refers to the model’s efficiency and ease in producing new samples. A robust generative model should facilitate the seamless generation of new data points without excessive computational overhead or complexity. Our box model excels in this regard, as generating points within a defined rectangular boundary is computationally straightforward and scalable.

Representation: The model must capture meaningful patterns and structures inherent in the data. Effective representation learning enables the model to understand and replicate the intricate relationships between data features, leading to more realistic and coherent generated samples. The box model, by imposing a rectangular boundary, oversimplifies the data’s structure, failing to capture the circular distribution and resulting in a loss of meaningful representation.

Balancing these three aspects is crucial for developing generative models that are both practical and effective. Striving for high accuracy and meaningful representation often necessitates more sophisticated modeling techniques, albeit with increased complexity and computational demands.

Enhancing the Box Model: Introducing a Gaussian Mixture Model

Recognizing the limitations of the simple box model, we can explore a more refined approach to better capture the underlying distribution of the data. One such method is the Gaussian Mixture Model (GMM), which assumes that the data is generated from a mixture of several Gaussian distributions. This model offers a balance between simplicity and the ability to capture complex data structures, enhancing both accuracy and representation without significantly compromising generation capability.

Understanding Gaussian Mixture Models

A Gaussian Mixture Model represents the data distribution as a combination of multiple Gaussian distributions, each characterized by its mean and covariance. The model assumes that each data point is generated by one of these Gaussian components, allowing it to capture multimodal distributions and more intricate data patterns.
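In symbols, the density described above is a weighted sum of Gaussian densities. The notation here (K components, mixing weights π_k, means μ_k and covariances Σ_k) is the conventional one and is introduced for this illustration; the article itself does not fix a notation.

```latex
p(\mathbf{x}) = \sum_{k=1}^{K} \pi_k \, \mathcal{N}\!\left(\mathbf{x} \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k\right),
\qquad \pi_k \ge 0, \qquad \sum_{k=1}^{K} \pi_k = 1
```

Each weight π_k can be read as the probability that a point is drawn from component k, which is what makes sampling a two-step process: pick a component, then draw from its Gaussian.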

Implementing the Gaussian Mixture Model

Leveraging Python’s scikit-learn library, we can implement a GMM to better model the data distribution and generate more accurate synthetic points.

```python
from sklearn.mixture import GaussianMixture

# Prepare data for GMM
data = np.column_stack((x, y))

# Fit a Gaussian Mixture Model with 3 components
gmm = GaussianMixture(n_components=3, covariance_type='full', random_state=42)
gmm.fit(data)

# Generate new points from the GMM
new_data_gmm, _ = gmm.sample(num_points)

# Plot the generated points
plt.figure(figsize=(6,6))
plt.scatter(new_data_gmm[:,0], new_data_gmm[:,1], alpha=0.5, color='green', edgecolors='w', s=50)
plt.title('Generated Points using Gaussian Mixture Model')
plt.xlabel('X-axis')
plt.ylabel('Y-axis')
plt.grid(True)
plt.show()
```

The GMM-generated points follow the original data more closely than the box model's output. Our toy data forms a single noisy disc rather than several separate clusters, so the three components act as overlapping Gaussians that together approximate its shape. Because their density falls off away from the centre, far fewer samples tend to land in the empty corners that the box model filled, which improves both accuracy and representation. This improvement underscores the importance of selecting modeling techniques that align with the data’s inherent structure.
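The choice of three components is not justified in the text, so it is worth showing one common way to check it. The sketch below fits mixtures with one to six components and compares them with the Bayesian Information Criterion via scikit-learn's `GaussianMixture.bic`, where lower is better. The candidate range and the variable names are assumptions made for this illustration, and BIC is only one of several possible selection criteria.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

# Rebuild the toy data as in the article (same seed, same call order).
np.random.seed(42)
num_points = 500
radius = 10
angles = 2 * np.pi * np.random.rand(num_points)
radii = radius * np.sqrt(np.random.rand(num_points))
x = radii * np.cos(angles) + np.random.normal(0, 1, num_points)
y = radii * np.sin(angles) + np.random.normal(0, 1, num_points)
data = np.column_stack((x, y))

# Fit one mixture per candidate component count and record its BIC.
candidate_counts = list(range(1, 7))  # assumed search range
bics = []
for k in candidate_counts:
    model = GaussianMixture(n_components=k, covariance_type='full', random_state=42)
    model.fit(data)
    bics.append(model.bic(data))  # lower BIC = better fit/complexity trade-off

best_k = candidate_counts[int(np.argmin(bics))]
for k, bic in zip(candidate_counts, bics):
    print(f"n_components={k}: BIC={bic:.1f}")
print(f"Lowest BIC at n_components={best_k}")
```

Whatever count this selects, it should be read as a guide rather than a verdict: a uniform disc is not a mixture of Gaussians, so every candidate is an approximation.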

Visualizing the Gaussian Components

To further understand how the GMM models the data, we can visualize the individual Gaussian components and their influence on the overall distribution.

```python
from matplotlib.patches import Ellipse

# Function to plot GMM components
def plot_gmm_components(gmm, data):
    plt.figure(figsize=(6,6))
    plt.scatter(data[:,0], data[:,1], s=10, alpha=0.5, label='Original Data')
    ax = plt.gca()
    colors = ['red', 'blue', 'green']
    for i, (mean, covar, color) in enumerate(zip(gmm.means_, gmm.covariances_, colors)):
        # Principal axes of the component, sorted from largest to smallest variance
        eigenvalues, eigenvectors = np.linalg.eigh(covar)
        order = eigenvalues.argsort()[::-1]
        eigenvalues, eigenvectors = eigenvalues[order], eigenvectors[:, order]
        # Orientation of the major axis, in degrees
        vx, vy = eigenvectors[:, 0]
        angle = np.degrees(np.arctan2(vy, vx))
        # Full axis lengths of the one-standard-deviation ellipse
        width, height = 2 * np.sqrt(eigenvalues)
        # angle is passed by keyword; recent Matplotlib versions no longer accept it positionally
        ellipse = Ellipse(xy=mean, width=width, height=height, angle=angle,
                          edgecolor=color, facecolor='none', linewidth=2,
                          label=f'Component {i+1}')
        ax.add_patch(ellipse)
    plt.title('Gaussian Mixture Model Components')
    plt.xlabel('X-axis')
    plt.ylabel('Y-axis')
    plt.legend()
    plt.grid(True)
    plt.show()

# Plot GMM components
plot_gmm_components(gmm, data)
```

The plotted ellipses represent the mean and covariance of each Gaussian component within the GMM, drawn at one standard deviation. Together, the overlapping components approximate the disc-shaped distribution of the original data, allowing the model to generate new points that align more closely with it. This visualization shows how a GMM models a distribution by decomposing it into simpler, interpretable components.

Evaluating the Enhanced Generative Model

With the GMM in place, we can reassess the three pillars of our conceptual framework to evaluate the model’s effectiveness.

Accuracy: The GMM demonstrates improved accuracy by generating points that adhere more closely to the original data distribution. Fewer samples fall in regions where the original data has no points, and the density of the samples better matches the disc-shaped spread of the data, indicating higher fidelity in the synthetic data.

Generation Capability: The GMM maintains efficient generation capabilities, as sampling from a mixture of Gaussian distributions remains computationally straightforward. The model can effortlessly produce large batches of new points without significant computational overhead.

Representation: By decomposing the data into multiple Gaussian components, the GMM captures more of the structure in the data than a single rectangle can. Each component has an interpretable mean and covariance. On data with well-separated groups, a component often corresponds to a distinct cluster; on our single noisy disc, the components instead overlap to approximate its shape.
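To make the generation step concrete, the sketch below samples from a fitted mixture by hand: choose a component according to the mixture weights, then draw from that component's Gaussian. It is meant to illustrate the idea behind sampling from a mixture and is not a copy of scikit-learn's internal implementation; the function name `sample_from_gmm` and the generator seed are assumptions made for this illustration.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

# Rebuild the toy data and fit the same 3-component model as in the article.
np.random.seed(42)
num_points = 500
radius = 10
angles = 2 * np.pi * np.random.rand(num_points)
radii = radius * np.sqrt(np.random.rand(num_points))
x = radii * np.cos(angles) + np.random.normal(0, 1, num_points)
y = radii * np.sin(angles) + np.random.normal(0, 1, num_points)
data = np.column_stack((x, y))
gmm = GaussianMixture(n_components=3, covariance_type='full', random_state=42).fit(data)

def sample_from_gmm(gmm, n_samples, rng):
    """Two-step sampling from a fitted mixture (hypothetical helper)."""
    n_components, n_features = gmm.means_.shape
    # Step 1: pick a component for each sample, with probability equal to its weight.
    weights = gmm.weights_ / gmm.weights_.sum()  # guard against rounding drift
    component_ids = rng.choice(n_components, size=n_samples, p=weights)
    # Step 2: draw each sample from the Gaussian of its chosen component.
    samples = np.empty((n_samples, n_features))
    for k in range(n_components):
        mask = component_ids == k
        n_k = int(mask.sum())
        if n_k > 0:
            samples[mask] = rng.multivariate_normal(
                gmm.means_[k], gmm.covariances_[k], size=n_k)
    return samples, component_ids

rng = np.random.default_rng(0)  # arbitrary seed
samples, component_ids = sample_from_gmm(gmm, 1000, rng)
print(samples.shape, np.bincount(component_ids, minlength=3))
```

Both steps are cheap, which is why generation from a GMM scales easily to large batches.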

Overall, the Gaussian Mixture Model offers a more nuanced and effective approach to generative modeling compared to the simplistic box model, striking a balance between accuracy, generation efficiency, and meaningful representation.
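The comparison so far rests on looking at scatter plots. One common way to make it quantitative is to hold out part of the data and ask how much probability density each model assigns to points it has not seen. The sketch below does this under some assumptions introduced here: an 80/20 split, an arbitrary seed, and a box density of 1/area inside the box and zero outside. It uses `GaussianMixture.score`, which returns the average log-likelihood per sample. The article reports no such numbers, so none are shown as expected output, and the two scores may turn out closer than the plots suggest.

```python
import numpy as np
from sklearn.mixture import GaussianMixture

# Rebuild the toy data as in the article (same seed, same call order).
np.random.seed(42)
num_points = 500
radius = 10
angles = 2 * np.pi * np.random.rand(num_points)
radii = radius * np.sqrt(np.random.rand(num_points))
x = radii * np.cos(angles) + np.random.normal(0, 1, num_points)
y = radii * np.sin(angles) + np.random.normal(0, 1, num_points)
data = np.column_stack((x, y))

# Hold out 20% of the points for evaluation (assumed split).
rng = np.random.default_rng(0)
perm = rng.permutation(len(data))
n_test = len(data) // 5
test, train = data[perm[:n_test]], data[perm[n_test:]]

# Box model fitted on the training points: density 1/area inside, 0 outside.
lower, upper = train.min(axis=0), train.max(axis=0)
area = float(np.prod(upper - lower))
inside = np.all((test >= lower) & (test <= upper), axis=1)
frac_inside = float(inside.mean())
box_loglik_inside = -np.log(area)  # log-density for every point inside the box

# GMM fitted on the same training points.
gmm = GaussianMixture(n_components=3, covariance_type='full', random_state=42).fit(train)
gmm_loglik = gmm.score(test)  # average log-likelihood per held-out point

print(f"Box model: log-density {box_loglik_inside:.3f} inside the box, "
      f"{frac_inside:.1%} of held-out points inside")
print(f"GMM: average held-out log-likelihood {gmm_loglik:.3f}")
```

Note the asymmetry: any held-out point outside the training box has zero density under the box model, which corresponds to a log-likelihood of negative infinity, whereas the GMM assigns every point a finite value.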

Extending the Framework: Beyond Simple Models

While the GMM provides a substantial improvement over the box model, real-world generative modeling often requires handling more complex and high-dimensional data. In such scenarios, more sophisticated models like Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), and Normalizing Flows are employed to capture intricate data distributions and generate highly realistic samples.

These advanced models incorporate deep learning architectures that can learn hierarchical representations and non-linear relationships within the data, enabling them to handle complexities that simple statistical models cannot. For instance, VAEs use an encoder-decoder framework to learn a latent space that captures the essence of the data, while GANs use adversarial training, in which a generator and a discriminator compete, to produce samples that can be difficult to distinguish from real ones.

Moreover, training these models often involves techniques that improve stability, mitigate mode collapse (where a generator produces only a narrow range of outputs), and increase the diversity of generated samples, addressing the challenges that arise in high-dimensional and multimodal data distributions. As the field of generative modeling continues to evolve, these advanced models will play a crucial role in expanding the boundaries of what AI systems can achieve in terms of data generation and simulation.

Section 6: Representation Learning and Latent Space

In the realm of generative modeling, representation learning stands as a cornerstone, enabling artificial intelligence systems to comprehend and manipulate complex data with remarkable efficiency. Central to this concept is the notion of latent space, a powerful abstraction that transforms high-dimensional data into more manageable, lower-dimensional representations. This section delves into the intricacies of latent space, illustrating its significance through a tangible example, exploring how deep learning facilitates automatic representation learning, and elucidating the transformative potential of latent space manipulations in generating meaningful and coherent outputs.

Understanding Latent Space

At its core, latent space refers to a hidden, lower-dimensional space that encapsulates the essential features and structures of high-dimensional data. Imagine attempting to describe a vast, intricate landscape solely through its individual pixel values; the task is not only computationally intensive but also abstract and unwieldy. Latent space circumvents this complexity by providing a distilled representation that captures the underlying patterns and variations within the data.

Consider the example of images depicting biscuit tins. Each image, composed of thousands of pixels, embodies myriad details such as color gradients, textures, and shapes. However, the critical attributes that distinguish one biscuit tin from another might be as simple as height and width. By representing these images in a latent space defined by just two dimensions — height and width — we can significantly simplify the complexity without sacrificing the ability to generate or distinguish between different tins.

Example: Representing Biscuit Tins with Latent Variables

To concretize the concept, let us explore how images of biscuit tins can be represented using latent variables. Suppose we have a dataset of biscuit tin images, each varying in height and width. Instead of processing each image pixel-by-pixel, a generative model can learn to encode these images into a latent space where each point corresponds to specific height and width values.
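As a minimal sketch of this idea, the code below treats height and width as a two-dimensional latent point. A hand-written `decode_tin` function turns a latent point into a small binary image of a rectangle, and `encode_tin` recovers the latent point from the image. Both functions, the 32×32 canvas and the centred placement are assumptions made for this illustration; in a real generative model the encoder and decoder would be learned from data rather than written by hand.

```python
import numpy as np

def decode_tin(height, width, canvas=32):
    """Render a centred filled rectangle (a toy 'tin') from two latent values."""
    img = np.zeros((canvas, canvas), dtype=np.uint8)
    top = (canvas - height) // 2
    left = (canvas - width) // 2
    img[top:top + height, left:left + width] = 1
    return img

def encode_tin(img):
    """Recover (height, width) from an image by measuring the filled region."""
    rows = np.flatnonzero(img.any(axis=1))  # rows containing any filled pixel
    cols = np.flatnonzero(img.any(axis=0))  # columns containing any filled pixel
    return int(rows[-1] - rows[0] + 1), int(cols[-1] - cols[0] + 1)

image = decode_tin(20, 12)   # 1024 pixel values...
latent = encode_tin(image)   # ...summarized by two numbers
print(image.size, latent)    # 1024 (20, 12)

# Moving through the latent space yields new, valid tins.
taller_tin = decode_tin(latent[0] + 6, latent[1])
```

The point of the toy is the compression ratio: 1,024 pixel values are fully described by two latent numbers, and changing those numbers produces another plausible image.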