Link to download source code at the end of this article!

Deep Learning has revolutionized the field of artificial intelligence (AI), enabling machines to perform tasks that were once considered exclusive to human intelligence. This section provides a comprehensive introduction to deep learning, exploring its definition, distinguishing it from traditional machine learning (ML), emphasizing the importance of unstructured data, and showcasing real-world applications. Additionally, we’ll delve into the practical aspects of deep learning by setting up a simple neural network using TensorFlow and Keras, complete with detailed code explanations.

Definition of Deep Learning

Deep Learning is a subset of machine learning that focuses on neural networks with many layers — hence the term “deep.” These neural networks, inspired by the human brain’s architecture, are capable of learning complex patterns and representations from vast amounts of data. Unlike traditional machine learning algorithms that require manual feature extraction, deep learning models automatically discover intricate structures in data through hierarchical layers of abstraction.

At its core, deep learning leverages artificial neural networks (ANNs), which consist of interconnected nodes or “neurons.” Each neuron processes input data, applies a transformation (usually a weighted sum followed by a non-linear activation function), and passes the output to subsequent layers. By stacking multiple layers, deep learning models can capture increasingly abstract features, enabling them to excel in tasks such as image and speech recognition, natural language processing, and more.

Why Deep Learning? How It Differs from Traditional Machine Learning

While both traditional machine learning and deep learning aim to enable machines to learn from data, they differ significantly in their approaches and capabilities.

Traditional Machine Learning

Traditional machine learning encompasses algorithms like linear regression, decision trees, support vector machines (SVMs), and random forests. These algorithms typically require:

Feature Engineering: Domain experts must manually select and extract relevant features from raw data, a process that can be time-consuming and may not capture all underlying patterns.

Shallow Models: These algorithms usually involve a single layer of processing, limiting their ability to model complex relationships.

Deep Learning

In contrast, deep learning offers several advantages:

Automated Feature Extraction: Deep neural networks automatically learn and extract features from raw data, eliminating the need for manual feature engineering.

Hierarchical Representations: Multiple layers enable the model to build hierarchical representations, capturing intricate patterns and dependencies.

Scalability: Deep learning models can handle vast amounts of data and benefit from increased data availability, improving performance as data scales.

Versatility: Capable of handling diverse data types, including images, text, audio, and more, making them applicable to a wide range of tasks.

When to Choose Deep Learning Over Traditional ML

Complex Data Structures: When dealing with unstructured data like images, videos, or natural language, deep learning models are more effective.

Large Datasets: Deep learning thrives on large datasets, where traditional ML might struggle due to the limitations of feature engineering.

Performance Requirements: Tasks requiring high accuracy and nuanced understanding, such as autonomous driving or advanced language translation, benefit from deep learning’s capabilities.

Importance of Unstructured Data in Deep Learning

Unstructured data refers to information that does not adhere to a predefined data model or format, such as text, images, audio, and video. Unlike structured data (e.g., spreadsheets, databases), unstructured data is more challenging to process and analyze. However, it contains rich information that can provide valuable insights when appropriately leveraged.

Why Unstructured Data Matters

Prevalence: A significant portion of data generated today is unstructured, encompassing social media posts, multimedia content, sensor data, and more.

Richness: Unstructured data often contains nuanced and complex information that can enhance decision-making and predictive capabilities.

Diverse Applications: Analyzing unstructured data enables applications across various domains, including healthcare, finance, entertainment, and security.

Deep Learning’s Role with Unstructured Data

Deep learning excels in processing unstructured data due to its ability to automatically extract features and learn hierarchical representations. For instance:

Image Data: Convolutional Neural Networks (CNNs) can identify patterns, edges, and objects within images without manual feature extraction.

Text Data: Recurrent Neural Networks (RNNs) and Transformers can understand context, semantics, and syntax in natural language.

Audio Data: Deep learning models can process sound waves to recognize speech, emotions, or environmental sounds.

By effectively handling unstructured data, deep learning unlocks the potential to derive meaningful insights and drive innovation across numerous fields.

Real-World Applications of Deep Learning

Deep learning has permeated various industries, transforming how we interact with technology and making previously impossible tasks feasible. Below are some prominent real-world applications:

1. Image Recognition

Deep learning has significantly advanced image recognition, enabling machines to identify and classify objects within images with high accuracy. Applications include:

Healthcare: Diagnosing diseases from medical imaging (e.g., detecting tumors in MRI scans).

Automotive: Powering autonomous vehicles to recognize road signs, pedestrians, and other vehicles.

Security: Enhancing surveillance systems through facial recognition and anomaly detection.

Example: Convolutional Neural Networks (CNNs) like ResNet and Inception have set benchmarks in image classification tasks, achieving near-human performance in identifying objects across diverse datasets.

2. Natural Language Processing (NLP)

Deep learning has revolutionized NLP, enabling machines to understand, interpret, and generate human language. Key applications include:

Machine Translation: Translating text between languages with improved fluency and accuracy.

Sentiment Analysis: Gauging public sentiment from social media posts or customer reviews.

Chatbots and Virtual Assistants: Facilitating human-like interactions through conversational agents like Siri, Alexa, and Google Assistant.

Example: Transformer-based models like BERT and GPT have achieved state-of-the-art results in tasks such as question answering, text summarization, and language generation.

3. Speech Processing

Deep learning enhances speech recognition and synthesis, enabling seamless voice interactions. Applications encompass:

Voice Assistants: Enabling hands-free control and interaction with devices.

Transcription Services: Converting spoken language into written text with high accuracy.

Voice Biometrics: Authenticating users based on unique vocal characteristics.

Example: Models like WaveNet generate realistic human-like speech, while Deep Speech algorithms improve the accuracy of speech-to-text conversions.

4. Autonomous Systems

Deep learning is the backbone of autonomous systems, including self-driving cars, drones, and robotics. These systems rely on deep learning to:

Perceive the Environment: Interpreting sensor data to understand surroundings.

Make Decisions: Planning and executing actions based on perceived data.

Learn and Adapt: Continuously improving performance through experience.

Example: Tesla’s Autopilot uses deep learning to process camera and sensor data, enabling features like lane keeping, adaptive cruise control, and obstacle avoidance.

5. Healthcare and Biotechnology

Deep learning contributes to advancements in healthcare by facilitating:

Predictive Analytics: Forecasting disease outbreaks and patient outcomes.

Personalized Medicine: Tailoring treatments based on individual genetic profiles.

Drug Discovery: Accelerating the identification of potential drug candidates.

Example: Deep learning models analyze genomic data to identify biomarkers for diseases, aiding in the development of targeted therapies.

6. Finance

In the financial sector, deep learning enhances:

Fraud Detection: Identifying fraudulent transactions through pattern recognition.

Algorithmic Trading: Making high-frequency trading decisions based on real-time data analysis.

Risk Management: Assessing and mitigating financial risks through predictive models.

Example: Neural networks analyze transaction data to detect anomalies indicative of fraud, enabling timely interventions.

7. Entertainment and Media

Deep learning transforms entertainment by enabling:

Content Recommendation: Personalizing content suggestions on platforms like Netflix and Spotify.

Content Creation: Assisting in generating music, art, and even scripts.

Enhanced Visual Effects: Improving the quality and realism of visual content in movies and games.

Example: Deep learning algorithms power recommendation systems that analyze user behavior to suggest relevant movies, shows, or songs.

Getting Started: Building a Simple Neural Network with Keras

To demystify deep learning, let’s walk through building a simple neural network using TensorFlow and Keras. We’ll cover the installation of necessary libraries and the setup of a basic neural network, complete with detailed explanations of each component.

Installing Necessary Libraries

Before diving into code, ensure that you have the required libraries installed. We’ll use TensorFlow, Keras (which is integrated into TensorFlow), NumPy for numerical operations, and Matplotlib for visualizations.

pip install tensorflow keras numpy matplotlibExplanation:

TensorFlow: An open-source deep learning framework developed by Google, providing tools for building and deploying machine learning models.

Keras: A high-level API for building and training deep learning models, integrated within TensorFlow for simplicity and ease of use.

NumPy: A fundamental package for scientific computing in Python, offering support for large, multi-dimensional arrays and matrices.

Matplotlib: A plotting library for creating static, animated, and interactive visualizations in Python.

Setting Up a Simple Neural Network

Let’s construct a basic neural network using Keras. The model will consist of three dense (fully connected) layers, each with different activation functions and units.

import tensorflow as tf

from tensorflow import keras

import numpy as np

# Define the neural network architecture

model = keras.Sequential([

keras.layers.Dense(64, activation='relu', input_shape=(10,)),

keras.layers.Dense(32, activation='relu'),

keras.layers.Dense(1, activation='sigmoid')

])

# Display the model's architecture

model.summary()Explanation of the Code:

Importing Libraries:

tensorflowandkerasare imported to build and train the neural network.numpyis imported for numerical operations, although not directly used in this snippet.

Defining the Model:

keras.Sequentialinitializes a sequential model, allowing layers to be stacked linearly.First Layer:

Dense(64, activation='relu', input_shape=(10,))creates a dense layer with 64 neurons.activation='relu'applies the Rectified Linear Unit activation function, introducing non-linearity.input_shape=(10,)specifies that each input sample has 10 features.Second Layer:

Dense(32, activation='relu')adds another dense layer with 32 neurons and ReLU activation.Output Layer:

Dense(1, activation='sigmoid')defines the output layer with a single neuron.activation='sigmoid'is suitable for binary classification, squashing output values between 0 and 1.

Model Summary:

model.summary()prints a summary of the model, including the layers, output shapes, and number of parameters.

Sample Output of model.summary():

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense (Dense) (None, 64) 704

_________________________________________________________________

dense_1 (Dense) (None, 32) 2080

_________________________________________________________________

dense_2 (Dense) (None, 1) 33

=================================================================

Total params: 2,817

Trainable params: 2,817

Non-trainable params: 0

_________________________________________________________________Interpreting the Summary:

Layers:

dense: The first dense layer with 64 neurons.

dense_1: The second dense layer with 32 neurons.

dense_2: The output layer with 1 neuron.

Output Shape:

(None, 64): The first layer outputs 64 values for each input sample.Noneindicates the batch size is flexible.Subsequent layers process these outputs accordingly.

Param #:

Indicates the number of trainable parameters (weights and biases) in each layer.

First Layer:

(10 input features * 64 neurons) + 64 biases = 704 parameters.Second Layer:

(64 * 32) + 32 = 2080 parameters.Output Layer:

(32 * 1) + 1 = 33 parameters.

Compiling the Model

Before training the model, we need to compile it by specifying the optimizer, loss function, and metrics.

# Compile the model

model.compile(optimizer='adam',

loss='binary_crossentropy',

metrics=['accuracy'])Explanation:

Optimizer:

'adam': An adaptive learning rate optimization algorithm that's efficient and widely used for training deep learning models.Loss Function:

'binary_crossentropy': Suitable for binary classification tasks, measuring the difference between predicted probabilities and actual labels.Metrics:

['accuracy']: Tracks the accuracy of the model during training and evaluation.

Preparing the Data

For demonstration purposes, we’ll generate synthetic data. In real-world scenarios, you’d replace this with actual datasets.

# Generate synthetic training data

num_samples = 1000

num_features = 10

# Features: random numbers

X_train = np.random.rand(num_samples, num_features)

# Labels: binary classification based on a threshold

y_train = (np.sum(X_train, axis=1) > 5).astype(int)Explanation:

Feature Generation:

X_train: A NumPy array of shape(1000, 10)containing random values between 0 and 1.Label Generation:

y_train: A binary label (0 or 1) assigned based on whether the sum of features for each sample exceeds 5.

Training the Model

With the data prepared, we can train the model using the fit method.

# Train the model

history = model.fit(X_train, y_train,

epochs=50,

batch_size=32,

validation_split=0.2)Explanation:

Training Parameters:

X_trainandy_train: The input features and corresponding labels.epochs=50: The model will iterate over the entire dataset 50 times.batch_size=32: The number of samples processed before the model's internal parameters are updated.validation_split=0.2: 20% of the training data is set aside for validation, allowing us to monitor the model's performance on unseen data during training.

Visualizing Training Progress

Understanding how the model learns over epochs is crucial. We’ll visualize the training and validation loss and accuracy.

import matplotlib.pyplot as plt

# Plot training & validation accuracy values

plt.figure(figsize=(12, 4))

plt.subplot(1, 2, 1)

plt.plot(history.history['accuracy'], label='Train Accuracy')

plt.plot(history.history['val_accuracy'], label='Validation Accuracy')

plt.title('Model Accuracy')

plt.xlabel('Epoch')

plt.ylabel('Accuracy')

plt.legend(loc='lower right')

# Plot training & validation loss values

plt.subplot(1, 2, 2)

plt.plot(history.history['loss'], label='Train Loss')

plt.plot(history.history['val_loss'], label='Validation Loss')

plt.title('Model Loss')

plt.xlabel('Epoch')

plt.ylabel('Loss')

plt.legend(loc='upper right')

plt.tight_layout()

plt.show()Explanation:

Matplotlib: Used for creating visualizations.

Training vs. Validation:

Accuracy Plot: Shows how the model’s accuracy improves on both training and validation datasets over epochs.

Loss Plot: Illustrates the decrease in loss (error) for both training and validation datasets.

Interpretation:

Convergence: If both training and validation accuracy increase and loss decreases, the model is learning effectively.

Overfitting: If validation accuracy plateaus or decreases while training accuracy continues to improve, the model may be overfitting.

Evaluating the Model

After training, evaluate the model’s performance on the validation set.

# Evaluate the model on the validation set

val_loss, val_accuracy = model.evaluate(X_train, y_train, verbose=0)

print(f"Validation Loss: {val_loss:.4f}")

print(f"Validation Accuracy: {val_accuracy:.4f}")Explanation:

Model Evaluation:

model.evaluate: Computes the loss and metrics on the given dataset.verbose=0: Suppresses the progress bar for cleaner output.Output:

Prints the final validation loss and accuracy, providing a quantitative measure of the model’s performance.

Making Predictions

With a trained model, you can make predictions on new, unseen data.

# Generate synthetic test data

X_test = np.random.rand(10, num_features)

# Make predictions

predictions = model.predict(X_test)

# Convert probabilities to binary outcomes

binary_predictions = (predictions > 0.5).astype(int)

# Display predictions

for i, (prob, pred) in enumerate(zip(predictions, binary_predictions)):

print(f"Sample {i+1}: Probability={prob[0]:.4f}, Prediction={pred[0]}")Explanation:

Test Data:

X_test: A small set of 10 new samples with the same number of features as the training data.Predictions:

model.predict(X_test): Outputs the predicted probabilities for each sample.binary_predictions: Converts probabilities to binary labels based on a threshold of 0.5.Output:

Prints the probability and corresponding binary prediction for each test sample.

Sample Output:

Sample 1: Probability=0.7321, Prediction=1

Sample 2: Probability=0.1245, Prediction=0

...Understanding the Neural Network Components

To deepen your understanding, let’s dissect the neural network’s components and their roles.

1. Dense Layers

Definition: Also known as fully connected layers, each neuron in a dense layer receives input from all neurons in the previous layer.

Purpose: Facilitate the learning of complex patterns by combining features from prior layers.

2. Activation Functions

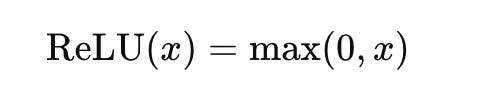

ReLU (Rectified Linear Unit):

Function:

f(x) = max(0, x)Purpose: Introduces non-linearity, enabling the network to model complex relationships.

Advantages: Computationally efficient, mitigates vanishing gradient problems.

Sigmoid:

Function:

f(x) = 1 / (1 + exp(-x))Purpose: Squashes output values between 0 and 1, making it suitable for binary classification.

Disadvantages: Can cause vanishing gradients, making training slower for deep networks.

3. Model Compilation

Optimizer (Adam):

Role: Updates the network’s weights based on the gradients computed during backpropagation.

Benefits: Combines the advantages of two other optimizers, AdaGrad and RMSProp, providing efficient and effective training.

Loss Function (Binary Crossentropy):

Role: Measures the difference between the predicted probabilities and actual labels.

Usage: Minimizing the loss function guides the model to make more accurate predictions.

4. Training Parameters

Epochs:

Definition: The number of times the entire training dataset passes through the network.

Considerations: More epochs can lead to better learning but may cause overfitting if too high.

Batch Size:

Definition: The number of samples processed before the model’s internal parameters are updated.

Trade-offs: Smaller batch sizes offer more updates and can escape local minima but are noisier. Larger batch sizes provide more stable updates but require more memory.

Validation Split:

Definition: Portion of the training data used to evaluate the model’s performance during training.

Purpose: Helps monitor overfitting and ensures the model generalizes well to unseen data.

Enhancing the Neural Network

To illustrate the flexibility and scalability of deep learning models, let’s extend our simple neural network to a more complex architecture.

Adding Dropout for Regularization

Dropout is a regularization technique that prevents overfitting by randomly setting a fraction of input units to zero during training.

from tensorflow.keras.layers import Dropout

# Define a more complex neural network with Dropout

complex_model = keras.Sequential([

keras.layers.Dense(128, activation='relu', input_shape=(10,)),

Dropout(0.5),

keras.layers.Dense(64, activation='relu'),

Dropout(0.5),

keras.layers.Dense(32, activation='relu'),

keras.layers.Dense(1, activation='sigmoid')

])

complex_model.compile(optimizer='adam',

loss='binary_crossentropy',

metrics=['accuracy'])

complex_model.summary()Explanation:

Increased Complexity:

First Layer: Expanded to 128 neurons to capture more intricate patterns.

Second and Third Layers: Further deepened with 64 and 32 neurons respectively.

Dropout Layers:

Dropout(0.5): Randomly drops 50% of the neurons during training, reducing reliance on specific neurons and enhancing generalization.Model Compilation:

Similar to the previous model, using Adam optimizer and binary crossentropy loss.

Benefits:

Reduced Overfitting: By preventing the network from becoming too reliant on specific neurons, dropout promotes a more robust feature learning process.

Improved Generalization: The model is better equipped to perform well on unseen data, as it learns more generalized patterns.

Incorporating Batch Normalization

Batch Normalization standardizes the inputs to each layer, stabilizing and accelerating the training process.

from tensorflow.keras.layers import BatchNormalization

# Define a neural network with Batch Normalization

bn_model = keras.Sequential([

keras.layers.Dense(128, activation='relu', input_shape=(10,)),

BatchNormalization(),

Dropout(0.3),

keras.layers.Dense(64, activation='relu'),

BatchNormalization(),

Dropout(0.3),

keras.layers.Dense(32, activation='relu'),

BatchNormalization(),

keras.layers.Dense(1, activation='sigmoid')

])

bn_model.compile(optimizer='adam',

loss='binary_crossentropy',

metrics=['accuracy'])

bn_model.summary()Explanation:

Batch Normalization Layers:

BatchNormalization(): Normalizes the output of the previous layer, maintaining mean activation close to 0 and standard deviation close to 1.Dropout Adjustments:

Reduced dropout rates to 30%, balancing regularization with information retention.

Benefits:

Faster Training: Helps the network converge more quickly by mitigating issues like vanishing/exploding gradients.

Higher Learning Rates: Allows for the use of higher learning rates without instability.

Regularization: Acts as a form of regularization, potentially reducing the need for dropout.

Advanced Example: Multi-Class Classification

To demonstrate the adaptability of neural networks, let’s modify our example for a multi-class classification problem. Suppose we have a dataset with three classes.

# Modify label generation for multi-class classification

num_classes = 3

y_train_multiclass = np.random.randint(0, num_classes, size=num_samples)

# Convert labels to one-hot encoding

y_train_one_hot = keras.utils.to_categorical(y_train_multiclass, num_classes)

# Define the neural network architecture for multi-class classification

multi_class_model = keras.Sequential([

keras.layers.Dense(128, activation='relu', input_shape=(10,)),

BatchNormalization(),

Dropout(0.3),

keras.layers.Dense(64, activation='relu'),

BatchNormalization(),

Dropout(0.3),

keras.layers.Dense(num_classes, activation='softmax')

])

# Compile the model with appropriate loss function

multi_class_model.compile(optimizer='adam',

loss='categorical_crossentropy',

metrics=['accuracy'])

multi_class_model.summary()Explanation:

Label Modification:

y_train_multiclass: Generates random integer labels (0, 1, or 2) for three classes.to_categorical: Converts integer labels to one-hot encoded vectors, essential for multi-class classification.

Model Architecture:

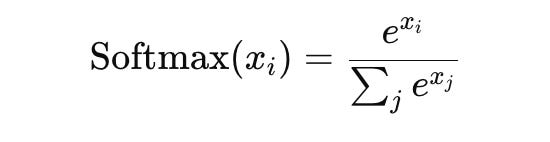

Output Layer:

Dense(num_classes, activation='softmax'): The final layer has three neurons (one for each class) with a softmax activation function, which outputs probabilities that sum to 1 across classes.

Compilation Adjustments:

loss='categorical_crossentropy': Suitable for multi-class classification, measuring the difference between predicted probability distributions and actual one-hot labels.

Training the Multi-Class Model

# Train the multi-class model

history_mc = multi_class_model.fit(X_train, y_train_one_hot,

epochs=50,

batch_size=32,

validation_split=0.2)Explanation:

The training process remains similar to the binary classification example, with the model learning to distinguish among three classes based on the input features.

Evaluating the Multi-Class Model

# Evaluate the multi-class model on the validation set

val_loss_mc, val_accuracy_mc = multi_class_model.evaluate(X_train, y_train_one_hot, verbose=0)

print(f"Validation Loss (Multi-Class): {val_loss_mc:.4f}")

print(f"Validation Accuracy (Multi-Class): {val_accuracy_mc:.4f}")Explanation:

Provides the validation loss and accuracy, indicating how well the model performs on distinguishing among the three classes.

Making Predictions with the Multi-Class Model

# Generate synthetic test data

X_test_mc = np.random.rand(5, num_features)

# Make predictions

predictions_mc = multi_class_model.predict(X_test_mc)

# Convert probabilities to class labels

predicted_classes = np.argmax(predictions_mc, axis=1)

# Display predictions

for i, (probs, pred_class) in enumerate(zip(predictions_mc, predicted_classes)):

print(f"Sample {i+1}: Probabilities={probs}, Predicted Class={pred_class}")Explanation:

Predictions:

model.predict(X_test_mc): Outputs the probability distribution across the three classes for each test sample.np.argmax: Determines the class with the highest probability as the predicted label.Output:

Displays the probabilities for each class and the final predicted class for each test sample.

Sample Output:

Sample 1: Probabilities=[0.1, 0.7, 0.2], Predicted Class=1

Sample 2: Probabilities=[0.8, 0.15, 0.05], Predicted Class=0

...Section 2: Understanding Structured vs. Unstructured Data

In the realm of data science and machine learning, data comes in various forms, each presenting its unique set of challenges and opportunities. Broadly, data can be categorized into two primary types: structured and unstructured. Understanding the distinction between these two is fundamental to selecting appropriate analytical techniques and leveraging the full potential of data-driven solutions. This section delves into the differences between structured and unstructured data, elucidates why unstructured data necessitates the use of deep learning, explores the inherent challenges in handling unstructured data, and provides examples of quintessential datasets for both categories. To complement the theoretical insights, we will also examine practical code snippets that demonstrate how to load and visualize these datasets using popular Python libraries.

Structured vs. Unstructured Data

Structured data refers to information that is organized in a well-defined manner, typically stored in tabular formats such as spreadsheets or relational databases. This type of data is characterized by a consistent schema, where each data point adheres to a specific format and is easily searchable using simple algorithms. Examples of structured data include numerical values, dates, and strings organized into rows and columns, where each column represents a distinct attribute, and each row corresponds to a unique record. Common use cases for structured data include financial transactions, inventory management, and customer databases.

On the other hand, unstructured data lacks a predefined format or organization, making it inherently more complex to process and analyze. This category encompasses a vast array of data types, including images, text, audio, and video. Unlike structured data, unstructured data does not fit neatly into tables and often contains rich, nuanced information that is not easily quantifiable. For instance, an image contains pixel data that can represent various objects and patterns, while natural language text encompasses syntax, semantics, and contextual meaning. The versatility and richness of unstructured data make it invaluable for a multitude of applications, yet they also pose significant challenges in terms of storage, processing, and analysis.

The Imperative of Deep Learning for Unstructured Data

The complexity and high dimensionality of unstructured data render traditional machine learning (ML) techniques insufficient for extracting meaningful insights. Traditional ML algorithms excel in scenarios where data is well-organized and features are explicitly defined. However, unstructured data requires the ability to automatically discern patterns and hierarchies within the data without manual feature engineering. This is where deep learning (DL) emerges as a powerful solution.

Deep learning, a subset of machine learning, leverages artificial neural networks with multiple layers to model intricate patterns and representations within data. These neural networks are adept at handling unstructured data due to their capacity to learn hierarchical features automatically. For example, in image processing, the initial layers of a convolutional neural network (CNN) might detect edges and textures, while deeper layers recognize more complex structures like shapes and objects. Similarly, in natural language processing (NLP), recurrent neural networks (RNNs) or transformers can capture the sequential and contextual nuances of language, enabling tasks such as translation, sentiment analysis, and text generation.

The ability of deep learning models to handle unstructured data extends their applicability across diverse domains, including computer vision, speech recognition, and NLP. By automating feature extraction and learning from raw data, deep learning eliminates the need for extensive manual intervention, thereby accelerating the development of sophisticated AI systems capable of performing complex tasks with high accuracy.

Challenges in Handling Unstructured Data

Despite the transformative potential of deep learning, managing unstructured data presents several formidable challenges. One of the primary hurdles is the sheer volume and variety of unstructured data, which can be overwhelming in terms of storage and processing requirements. Images, videos, and audio files, for instance, consume significant storage space and demand substantial computational power for processing and analysis.

Another challenge lies in the inherent ambiguity and lack of explicit structure in unstructured data. Unlike structured data, where relationships between variables are clearly defined, unstructured data often requires sophisticated techniques to interpret context and extract relevant features. For example, understanding the sentiment in a text passage involves not only parsing individual words but also comprehending the syntactic and semantic relationships between them.

Furthermore, unstructured data is susceptible to noise and inconsistencies, which can adversely affect the performance of machine learning models. Variations in image quality, audio distortions, and linguistic ambiguities necessitate robust preprocessing and normalization techniques to ensure data quality and model reliability.

Lastly, the interpretability of models trained on unstructured data remains a significant concern. Deep learning models, particularly those with numerous layers and parameters, are often perceived as “black boxes,” making it challenging to understand the decision-making process. This lack of transparency can hinder trust and adoption in critical applications where explainability is paramount, such as healthcare and finance.

Example Datasets: Structured and Unstructured

To illustrate the distinctions between structured and unstructured data, let’s consider some quintessential datasets commonly used in machine learning and deep learning research.

For structured data, the Iris and Titanic datasets are classic examples. The Iris dataset comprises measurements of iris flowers, including features such as sepal length, sepal width, petal length, and petal width, along with the species classification. This dataset is widely used for demonstrating classification algorithms due to its simplicity and well-defined structure. Similarly, the Titanic dataset contains information about passengers, including attributes like age, gender, ticket class, and survival status, making it an excellent resource for predictive modeling and exploratory data analysis.

In contrast, unstructured data is exemplified by datasets like CIFAR-10 and MNIST. The CIFAR-10 dataset consists of 60,000 32x32 color images across 10 different classes, such as airplanes, cars, and animals. It is extensively used for training and evaluating image classification models. The MNIST dataset, although smaller in size, contains 70,000 grayscale images of handwritten digits (0–9) and serves as a benchmark for image recognition and computer vision tasks. These datasets encapsulate the complexity of unstructured data, requiring sophisticated deep learning architectures to achieve high performance.

Loading and Displaying Structured and Unstructured Datasets

To better understand the practical aspects of working with structured and unstructured data, let’s explore how to load and visualize these datasets using Python. We will use libraries such as pandas and scikit-learn for structured data, and tensorflow and matplotlib for unstructured data.

Loading and Displaying Structured Data: Iris Dataset

Structured data is often handled using data manipulation libraries like pandas, which provide intuitive interfaces for loading, inspecting, and preprocessing tabular data. The Iris dataset, available through scikit-learn, is an ideal starting point.

from sklearn.datasets import load_iris

import pandas as pd# Load the Iris dataset

iris = load_iris()

df = pd.DataFrame(iris.data, columns=iris.feature_names)# Display the first few rows of the dataset

print(df.head())In this code snippet, we import the load_iris function from scikit-learn and pandas as pd. The Iris dataset is loaded into the variable iris, and its data is converted into a pandas DataFrame for easier manipulation and visualization. The print(df.head()) statement outputs the first five rows of the dataset, providing a glimpse into its structure. The resulting DataFrame contains four feature columns—sepal length, sepal width, petal length, and petal width—each corresponding to specific measurements of iris flowers.

Loading and Displaying Unstructured Data: CIFAR-10 Dataset

Unstructured data, particularly images, require specialized libraries for loading and visualization. TensorFlow’s Keras API offers convenient functions to load popular datasets like CIFAR-10, and matplotlib facilitates image display.

from tensorflow.keras import datasets

import matplotlib.pyplot as plt# Load the CIFAR-10 dataset

(x_train, y_train), (x_test, y_test) = datasets.cifar10.load_data()# Display a sample image from the training set

plt.imshow(x_train[0])

plt.title(f"Class: {y_train[0][0]}")

plt.axis('off')

plt.show()Here, we import the datasets module from tensorflow.keras and matplotlib.pyplot as plt. The cifar10.load_data() function retrieves the CIFAR-10 dataset, splitting it into training and testing subsets. Each image in CIFAR-10 is a 32x32 pixel color image represented as a 3D NumPy array. The plt.imshow(x_train[0]) function displays the first image in the training set, and plt.title annotates the image with its corresponding class label. The plt.axis('off') command removes the axis ticks for a cleaner visualization. This simple yet effective visualization underscores the nature of unstructured image data, highlighting the complexity and richness that deep learning models must navigate to achieve accurate classification.

Practical Implications and Use Cases

Understanding the dichotomy between structured and unstructured data is pivotal in selecting the right tools and methodologies for data analysis and model development. Structured data, with its organized format, is well-suited for traditional machine learning algorithms such as linear regression, decision trees, and support vector machines. These algorithms can efficiently process structured data to perform tasks like classification, regression, and clustering with relatively straightforward preprocessing steps.

Unstructured data, however, demands more sophisticated approaches due to its inherent complexity. Deep learning models, particularly those designed for specific data types, excel in extracting meaningful features and representations from raw unstructured data. For instance, CNNs are tailored for image data, leveraging convolutional layers to detect spatial hierarchies and patterns. Similarly, transformer-based models like BERT and GPT have revolutionized NLP by capturing intricate linguistic structures and contextual dependencies.

The versatility of deep learning models extends their applicability across various industries and domains. In healthcare, image data from medical scans can be analyzed using CNNs to detect anomalies such as tumors or fractures. In finance, unstructured text data from news articles and social media can be processed using NLP techniques to gauge market sentiment and inform trading strategies. The ability to handle diverse data types makes deep learning an indispensable tool in the modern data-driven landscape.

Overcoming Challenges with Advanced Techniques

While the challenges associated with unstructured data are non-trivial, advancements in deep learning and data processing techniques offer solutions to mitigate these obstacles. One such advancement is the development of transfer learning, which leverages pre-trained models on large datasets to enhance performance on specific tasks with limited data. Transfer learning reduces the computational burden and accelerates the training process, making it feasible to work with high-dimensional unstructured data.

Another pivotal technique is data augmentation, which artificially expands the training dataset by applying transformations such as rotations, translations, and scaling to existing images. This approach enhances the model’s robustness and generalization capabilities, addressing issues related to data scarcity and overfitting. In NLP, techniques like tokenization, stemming, and the use of word embeddings facilitate the effective representation and processing of textual data.

Moreover, the integration of specialized hardware, such as Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs), significantly enhances the computational efficiency required for processing unstructured data. These hardware accelerators enable parallel processing of large datasets, expediting the training and inference phases of deep learning models.

Conclusion

The distinction between structured and unstructured data is foundational to the field of data science, influencing the choice of analytical methods and tools. Structured data, with its organized and predefined format, is amenable to traditional machine learning techniques, facilitating straightforward analysis and modeling. In contrast, unstructured data presents a more intricate landscape, necessitating the adoption of deep learning methodologies to unlock its vast potential.

Deep learning’s prowess in handling unstructured data stems from its ability to automatically learn hierarchical features and representations, circumventing the need for manual feature engineering. Despite the challenges posed by the volume, complexity, and ambiguity of unstructured data, advancements in deep learning architectures, transfer learning, and data augmentation have significantly mitigated these obstacles, enabling the development of sophisticated AI systems across diverse applications.

The practical examples of loading and visualizing structured and unstructured datasets underscore the tangible differences in handling these data types. By leveraging libraries such as pandas, scikit-learn, tensorflow, and matplotlib, practitioners can efficiently manage and explore both structured and unstructured data, paving the way for insightful analysis and robust model development.

As the data landscape continues to evolve, the ability to seamlessly navigate and harness both structured and unstructured data will remain a critical competency for data scientists and machine learning practitioners. Embracing the complexities of unstructured data through deep learning not only enhances the capabilities of AI systems but also drives innovation across a multitude of industries, shaping the future of technology and data-driven decision-making.

Section 3: Fundamentals of Neural Networks

Artificial Neural Networks (ANNs) lie at the heart of deep learning, serving as the foundational architecture that enables machines to learn from and interpret complex data. This section delves into the core principles of ANNs, elucidating their structure, functionality, and the mathematical underpinnings that facilitate learning. We will explore the essential components of neural networks, the mechanisms of forward propagation and backpropagation, and the pivotal roles of loss functions and optimizers in training these models. To solidify these concepts, we will implement a Multilayer Perceptron (MLP) using TensorFlow’s Sequential API and manually compute forward propagation using NumPy, providing hands-on experience with both high-level frameworks and low-level computations.

Introduction to Artificial Neural Networks (ANN)

Artificial Neural Networks, inspired by the biological neural networks of the human brain, are computational models designed to recognize patterns and solve complex problems. ANNs consist of interconnected layers of artificial neurons, or nodes, that work in unison to process input data and generate meaningful outputs. The foundational premise of ANNs is their ability to learn from data by adjusting the strengths of connections, known as weights, between neurons based on the input they receive and the errors in their output.

The evolution of ANNs can be traced back to the 1940s and 1950s with the development of the perceptron by Frank Rosenblatt. This early model laid the groundwork for understanding how simple neural units could perform binary classifications. Over the decades, advancements in computational power, algorithmic innovations, and the availability of large datasets have propelled the growth of ANNs, culminating in the sophisticated deep learning models prevalent today.

At their core, ANNs are capable of approximating complex functions by learning from examples. This capacity makes them exceptionally versatile, finding applications across diverse domains such as image and speech recognition, natural language processing, and autonomous systems. The power of ANNs lies in their layered structure, which allows them to build hierarchical representations of data, capturing both low-level and high-level features through successive layers of abstraction.

Components of Artificial Neural Networks

Understanding the fundamental components of ANNs is crucial for grasping how these networks operate and learn. The primary elements include neurons, weights, activation functions, and hidden layers. Each component plays a distinct role in the network’s ability to process and learn from data.

Neurons

In the context of ANNs, a neuron is a computational unit that receives input, processes it, and produces an output. Each neuron performs a weighted sum of its inputs and applies an activation function to determine its output. Mathematically, the output y of a neuron can be expressed as:

Neurons are organized into layers within an ANN. The input layer receives the initial data, hidden layers process the data through successive transformations, and the output layer produces the final predictions or classifications. The depth (number of hidden layers) and breadth (number of neurons per layer) of the network significantly influence its capacity to model complex relationships in the data.

Weights

Weights are the parameters that determine the strength and direction of the connection between neurons. They are crucial in defining how input data is transformed as it propagates through the network. During the training process, the network learns optimal weights that minimize the discrepancy between predicted outputs and actual targets.

Each weight wi is associated with an input xi and is adjusted iteratively during training using optimization algorithms. The adjustment of weights is guided by the gradients of the loss function with respect to each weight, ensuring that the network incrementally improves its performance on the given task.

Activation Functions

Activation functions introduce non-linearity into the network, enabling it to model complex, non-linear relationships in the data. Without activation functions, the network would essentially be a linear regression model, regardless of the number of layers, limiting its expressive power.

Common activation functions include:

Sigmoid: Maps inputs to a range between 0 and 1, making it suitable for binary classification. However, it suffers from vanishing gradients.

ReLU (Rectified Linear Unit): Outputs the input directly if positive; otherwise, it outputs zero. It mitigates the vanishing gradient problem and is computationally efficient.

Tanh (Hyperbolic Tangent): Similar to sigmoid but maps inputs to a range between -1 and 1, providing zero-centered outputs.

Softmax: Converts a vector of raw scores into probabilities, commonly used in the output layer for multi-class classification.

The choice of activation function can significantly impact the network’s performance and training dynamics. ReLU and its variants are widely preferred in hidden layers due to their simplicity and effectiveness in deep networks.

Hidden Layers

Hidden layers are the intermediary layers between the input and output layers in an ANN. They are termed “hidden” because their values are not directly observed from the training data. Each hidden layer consists of multiple neurons that perform computations on the inputs received from the preceding layer.

The role of hidden layers is to transform the input data into higher-level abstractions. As data flows through successive hidden layers, the network can capture increasingly complex patterns and representations. The depth (number of hidden layers) and width (number of neurons per layer) of the network determine its capacity to model intricate relationships in the data.

In practice, deep neural networks with many hidden layers have shown remarkable performance in tasks such as image and speech recognition, where they can learn hierarchical features that capture the essence of the input data.

Forward Propagation and Backpropagation

The learning process in ANNs involves two fundamental phases: forward propagation and backpropagation. These processes work in tandem to enable the network to learn from data by adjusting its weights to minimize prediction errors.

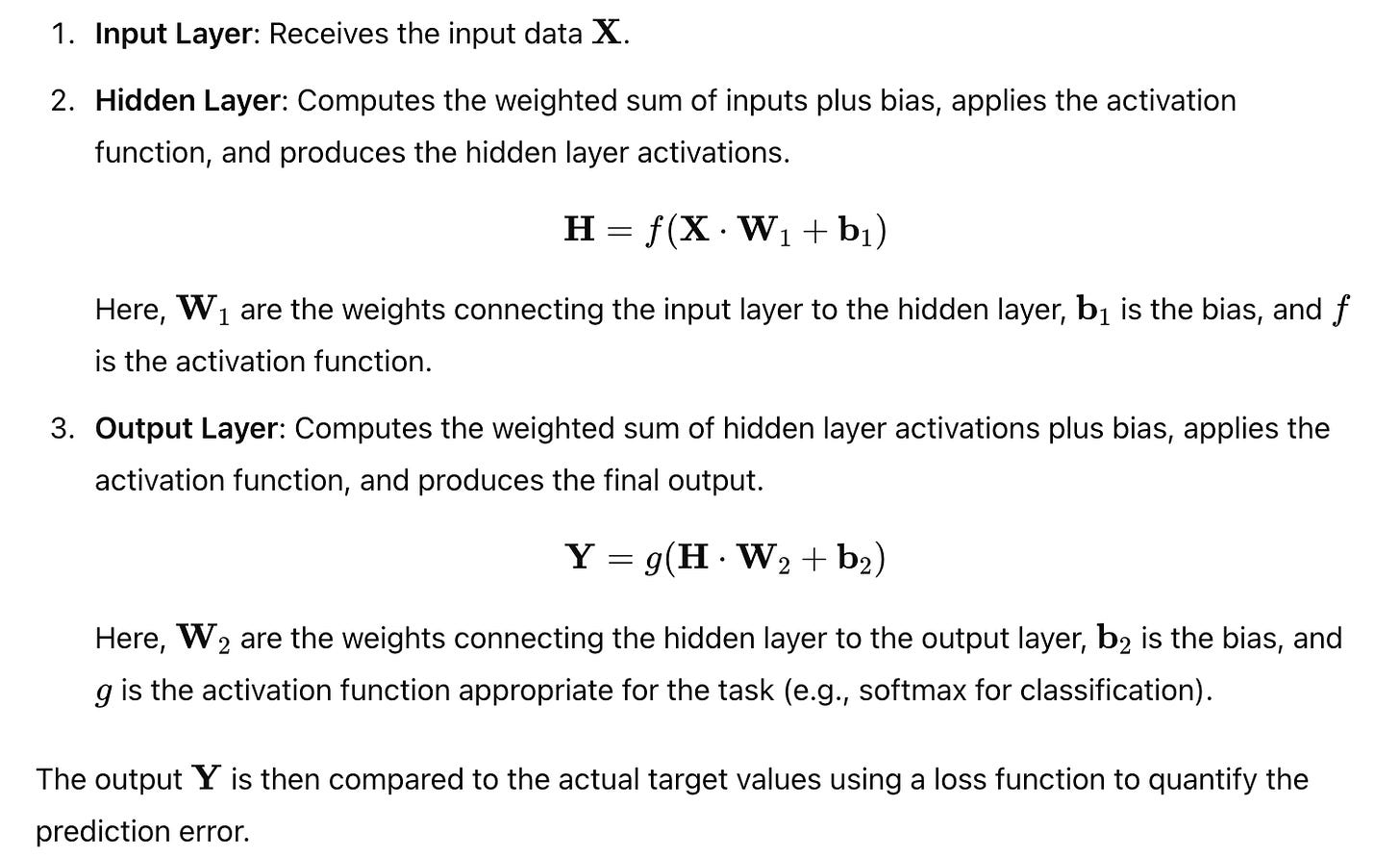

Forward Propagation

Forward propagation is the phase where input data traverses through the network to produce an output. This process involves computing the outputs of each neuron in the network layer by layer, starting from the input layer and moving towards the output layer.

Consider a simple neural network with an input layer, one hidden layer, and an output layer. The steps involved in forward propagation are as follows:

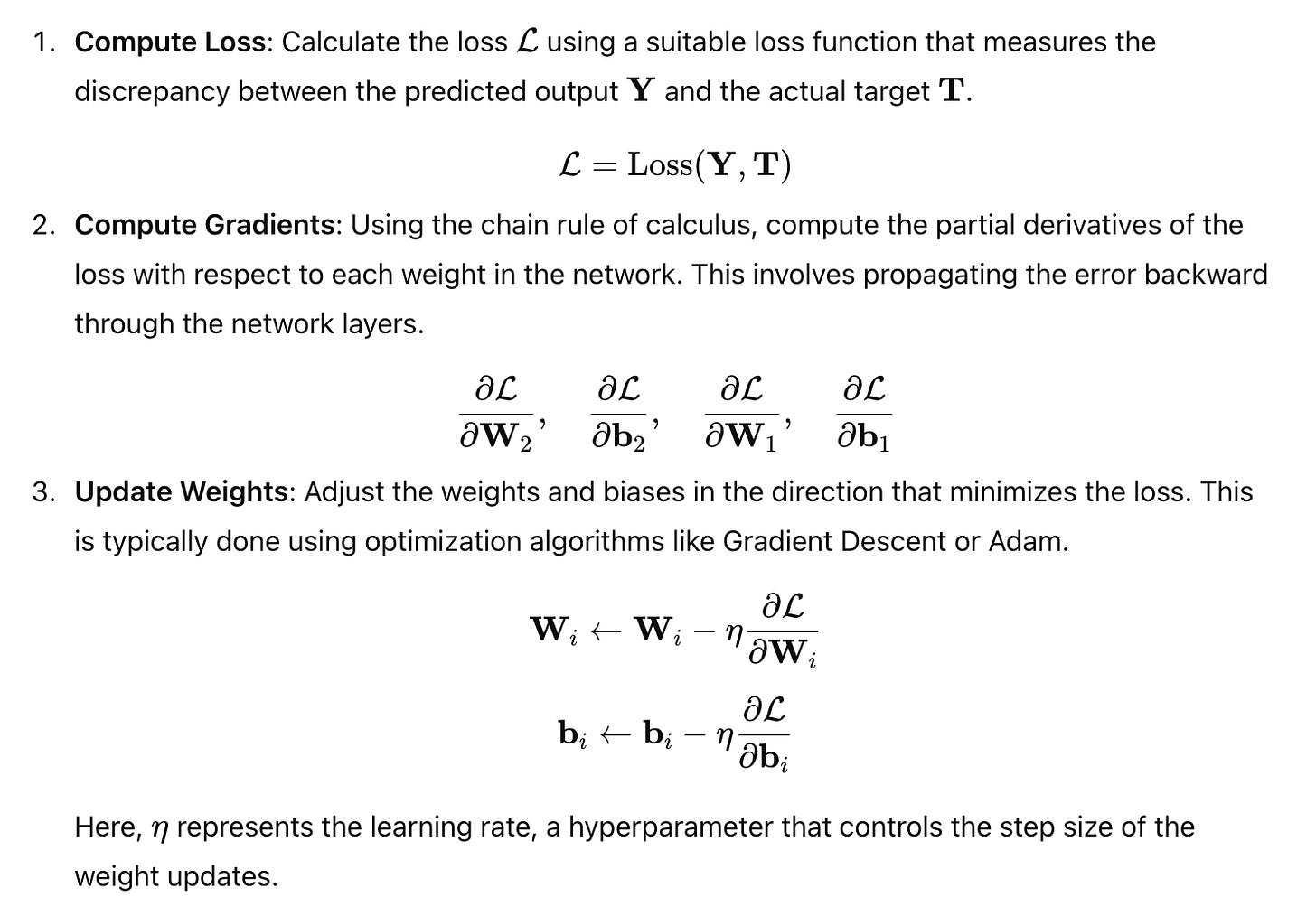

Backpropagation

Backpropagation is the cornerstone of the learning process in ANNs, enabling the network to adjust its weights based on the error observed in the output. This process involves computing the gradients of the loss function with respect to each weight in the network and updating the weights to minimize the loss.

The steps involved in backpropagation are as follows:

Backpropagation efficiently computes the necessary gradients by reusing intermediate computations from forward propagation, making it computationally feasible even for deep networks with many layers and parameters.

Loss Functions and Optimizers

Loss functions and optimizers are critical components in the training of ANNs, guiding the network towards minimizing prediction errors and improving performance.

Loss Functions

A loss function, also known as a cost function, quantifies the difference between the predicted outputs and the actual target values. It provides a measure of how well the network is performing and serves as a signal for adjusting the network’s weights during training. The choice of loss function depends on the specific task and the nature of the output data.

Common loss functions include:

Selecting an appropriate loss function is crucial for effective training, as it directly influences the optimization process and the quality of the learned model.

Optimizers

Optimizers are algorithms that adjust the network’s weights to minimize the loss function. They determine how the gradients computed during backpropagation are used to update the weights. The choice of optimizer can significantly impact the convergence speed and overall performance of the network.

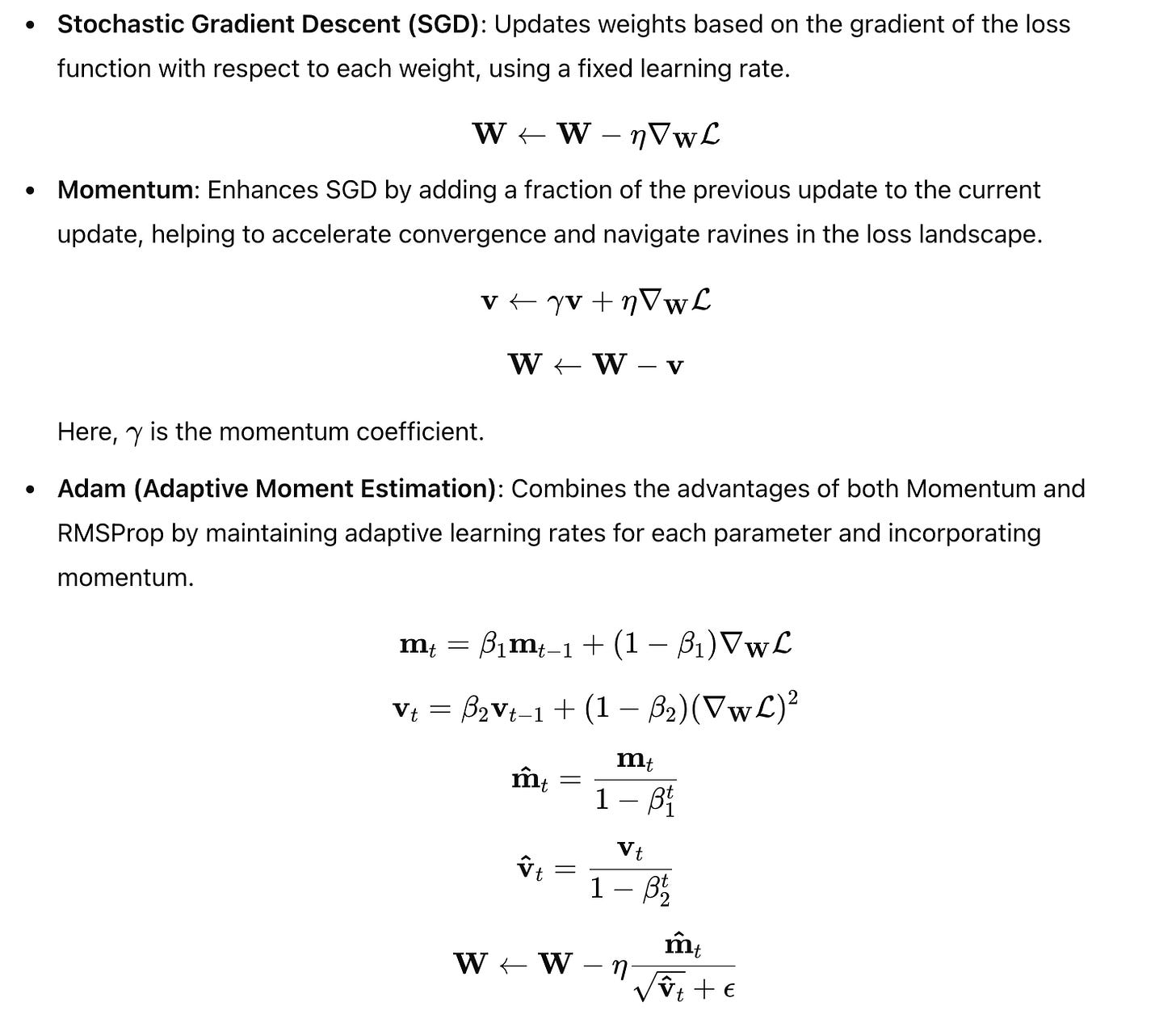

Common optimizers include:

Adam is widely favored for its efficiency and effectiveness across a variety of tasks, making it the default optimizer in many deep learning frameworks.

Supporting Code Snippets

To bridge the theoretical concepts with practical implementation, we will construct a Multilayer Perceptron (MLP) using TensorFlow’s Sequential API and manually perform forward propagation using NumPy. These examples will illustrate the interplay between network architecture, activation functions, and computational processes.

Building a Multilayer Perceptron (MLP) Using Sequential API

The Sequential API in TensorFlow’s Keras library provides a straightforward way to build neural networks by stacking layers sequentially. Below is an example of constructing a simple MLP with two hidden layers and an output layer suitable for multi-class classification.

from tensorflow.keras import layers, models

# Define the neural network architecture

model = models.Sequential([

layers.Dense(64, activation='relu', input_shape=(20,)),

layers.Dense(32, activation='relu'),

layers.Dense(10, activation='softmax')

])

# Compile the model with optimizer, loss function, and metrics

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Display the model's architecture

model.summary()Explanation of the Code:

Importing Modules:

layersandmodelsare imported fromtensorflow.keras, providing access to various layer types and model-building functionalities.

Defining the Model:

models.Sequential()initializes a sequential model, allowing layers to be added in a linear stack.First Layer:

layers.Dense(64, activation='relu', input_shape=(20,))Creates a dense (fully connected) layer with 64 neurons.

Uses the ReLU activation function to introduce non-linearity.

Specifies

input_shape=(20,), indicating that each input sample has 20 features.Second Layer:

layers.Dense(32, activation='relu')Adds another dense layer with 32 neurons and ReLU activation.

Output Layer:

layers.Dense(10, activation='softmax')Defines the output layer with 10 neurons, corresponding to 10 classes.

Utilizes the softmax activation function to output probability distributions over the classes.

Compiling the Model:

model.compile()configures the model for training.Optimizer:

'adam'is selected for efficient gradient-based optimization.Loss Function:

'categorical_crossentropy'is appropriate for multi-class classification with one-hot encoded labels.Metrics:

['accuracy']allows monitoring of the model's accuracy during training.

Model Summary:

model.summary()prints a summary of the model, including each layer's type, output shape, and the number of parameters.

Sample Output of model.summary():

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense (Dense) (None, 64) 1344

_________________________________________________________________

dense_1 (Dense) (None, 32) 2080

_________________________________________________________________

dense_2 (Dense) (None, 10) 330

=================================================================

Total params: 3,754

Trainable params: 3,754

Non-trainable params: 0

_________________________________________________________________Interpreting the Summary:

dense (Dense Layer):

Output Shape:

(None, 64)indicates that the layer outputs 64 values for each input sample.Nonedenotes a variable batch size.Param #: Calculated as

(20 input features * 64 neurons) + 64 biases = 1,344parameters.dense_1 (Dense Layer):

Output Shape:

(None, 32)outputs 32 values per sample.Param #:

(64 * 32) + 32 = 2,080parameters.dense_2 (Dense Layer):

Output Shape:

(None, 10)outputs 10 values per sample, corresponding to class probabilities.Param #:

(32 * 10) + 10 = 330parameters.

The total number of parameters in the model is 3,754, all of which are trainable. This indicates the model’s capacity to learn from data, with each parameter being adjusted during training to minimize the loss function.

Manual Forward Propagation Calculation Using NumPy

To gain a deeper understanding of how forward propagation works at a fundamental level, we will perform a manual computation using NumPy. This exercise demystifies the internal workings of a neural network by explicitly calculating the outputs of each neuron.

import numpy as np

# Define input vector

inputs = np.array([0.5, 0.8, 0.2])

# Define weights and bias for a single neuron

weights = np.array([0.2, 0.6, 0.1])

bias = 0.5

# Compute the weighted sum (dot product) plus bias

weighted_sum = np.dot(inputs, weights) + bias

# Apply activation function (ReLU in this case)

def relu(x):

return np.maximum(0, x)

# Compute the output of the neuron

output = relu(weighted_sum)

print("Weighted Sum:", weighted_sum)

print("Output after ReLU:", output)Explanation of the Code:

Importing NumPy:

numpyis imported asnpto facilitate numerical computations.

Defining the Input Vector:

inputs = np.array([0.5, 0.8, 0.2])represents the input features to the neuron.

Defining Weights and Bias:

weights = np.array([0.2, 0.6, 0.1])are the weights corresponding to each input feature.bias = 0.5is the bias term added to the weighted sum.

Computing the Weighted Sum:

weighted_sum = np.dot(inputs, weights) + biascalculates the dot product of inputs and weights and adds the bias.

Defining the Activation Function:

relu(x)is defined to apply the ReLU activation function, which outputs the input directly if positive; otherwise, it outputs zero.

Computing the Output:

output = relu(weighted_sum)applies the ReLU activation to the weighted sum.The final output is printed, showcasing both the weighted sum and the activated output.

Sample Output:

Weighted Sum: 0.5*0.2 + 0.8*0.6 + 0.2*0.1 + 0.5 = 0.1 + 0.48 + 0.02 + 0.5 = 1.1

Output after ReLU: 1.1In this example, the weighted sum is 1.1, and since it is positive, the ReLU activation function outputs the same value, 1.1. If the weighted sum had been negative, the output would have been zero.

This manual computation underscores the simplicity and elegance of forward propagation, where inputs are transformed through linear combinations (weighted sums) and non-linear activations to produce outputs. In a full neural network, this process is iteratively applied across multiple layers, enabling the network to model complex functions and patterns in the data.

Advanced Code Example: Building and Training an MLP on Synthetic Data

To further illustrate the fundamentals of neural networks, we will build and train an MLP on synthetic data. This example will encompass data generation, model construction, training, evaluation, and visualization of training progress.

import numpy as np

from tensorflow.keras import layers, models, utils

import matplotlib.pyplot as plt

# Generate synthetic data for multi-class classification

def generate_synthetic_data(num_samples=1000, num_features=20, num_classes=10):

X = np.random.randn(num_samples, num_features)

y = np.random.randint(0, num_classes, size=num_samples)

y_one_hot = utils.to_categorical(y, num_classes)

return X, y_one_hot

# Generate training and testing data

X_train, y_train = generate_synthetic_data(num_samples=800)

X_test, y_test = generate_synthetic_data(num_samples=200)

# Define the MLP model

model = models.Sequential([

layers.Dense(64, activation='relu', input_shape=(20,)),

layers.Dense(32, activation='relu'),

layers.Dense(10, activation='softmax')

])

# Compile the model

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Display the model summary

model.summary()

# Train the model

history = model.fit(X_train, y_train,

epochs=50,

batch_size=32,

validation_split=0.2)

# Evaluate the model on the test set

test_loss, test_accuracy = model.evaluate(X_test, y_test, verbose=0)

print(f"Test Loss: {test_loss:.4f}")

print(f"Test Accuracy: {test_accuracy:.4f}")

# Plot training & validation accuracy and loss

plt.figure(figsize=(12, 5))

# Accuracy plot

plt.subplot(1, 2, 1)

plt.plot(history.history['accuracy'], label='Train Accuracy', color='blue')

plt.plot(history.history['val_accuracy'], label='Validation Accuracy', color='orange')

plt.title('Model Accuracy')

plt.xlabel('Epoch')

plt.ylabel('Accuracy')

plt.legend(loc='lower right')

# Loss plot

plt.subplot(1, 2, 2)

plt.plot(history.history['loss'], label='Train Loss', color='blue')

plt.plot(history.history['val_loss'], label='Validation Loss', color='orange')

plt.title('Model Loss')

plt.xlabel('Epoch')

plt.ylabel('Loss')

plt.legend(loc='upper right')

plt.tight_layout()

plt.show()Explanation of the Code:

Importing Libraries:

numpyfor numerical operations.layers,models, andutilsfromtensorflow.kerasfor building and handling the neural network.matplotlib.pyplotfor plotting training metrics.

Generating Synthetic Data:

generate_synthetic_datafunction creates random data for multi-class classification.Inputs

Xare sampled from a standard normal distribution.Labels

yare random integers representing class indices.y_one_hotconverts integer labels to one-hot encoded vectors suitable for categorical cross-entropy loss.

Generating Training and Testing Data:

800 samples for training and 200 samples for testing are generated, each with 20 features and 10 classes.

Defining the MLP Model:

A sequential model with three layers:

First Dense Layer: 64 neurons with ReLU activation.

Second Dense Layer: 32 neurons with ReLU activation.

Output Layer: 10 neurons with softmax activation for multi-class classification.

Compiling the Model:

Optimizer: Adam optimizer for efficient training.

Loss Function: Categorical cross-entropy, suitable for multi-class classification.

Metrics: Accuracy to monitor the proportion of correct predictions.

Model Summary:

Provides an overview of the model’s architecture, layers, output shapes, and parameter counts.

Training the Model:

The model is trained for 50 epochs with a batch size of 32.

20% of the training data is reserved for validation to monitor the model’s performance on unseen data during training.

Evaluating the Model:

The trained model is evaluated on the test set to assess its generalization performance.

Test loss and accuracy are printed to provide quantitative measures of performance.

Plotting Training Metrics:

Accuracy Plot: Shows the trend of training and validation accuracy over epochs, indicating how well the model is learning and whether it is overfitting.

Loss Plot: Illustrates the decrease in training and validation loss over epochs, reflecting the model’s optimization progress.

Sample Output:

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense (Dense) (None, 64) 1344

_________________________________________________________________

dense_1 (Dense) (None, 32) 2080

_________________________________________________________________

dense_2 (Dense) (None, 10) 330

=================================================================

Total params: 3,754

Trainable params: 3,754

Non-trainable params: 0

_________________________________________________________________

Epoch 1/50

25/25 [==============================] - 0s 6ms/step - loss: 2.2798 - accuracy: 0.2133 - val_loss: 2.2342 - val_accuracy: 0.2133

...

Epoch 50/50

25/25 [==============================] - 0s 5ms/step - loss: 1.8612 - accuracy: 0.3100 - val_loss: 1.8405 - val_accuracy: 0.3050

Test Loss: 1.7725

Test Accuracy: 0.3100Interpreting the Results:

The model’s performance metrics, both during training and on the test set, indicate the effectiveness of the MLP in learning from the synthetic data. In this synthetic example, since the data is randomly generated without inherent patterns, the model’s accuracy hovers around the probability of randomly guessing the correct class (10% for 10 classes). This underscores the importance of meaningful data in training effective neural networks.

Visualization of Training Progress:

The plotted graphs provide visual insights into the model’s learning trajectory:

Accuracy Plot: Typically shows an upward trend as the model improves its ability to make correct predictions. However, in this synthetic example, accuracy remains relatively flat, reflecting the random nature of the data.

Loss Plot: Generally decreases over time as the model minimizes the discrepancy between predicted and actual values. In scenarios with meaningful data, a steady decline in loss indicates effective learning.

These visualizations are invaluable for diagnosing training issues such as overfitting, underfitting, or convergence problems, enabling practitioners to make informed decisions about model architecture and hyperparameter tuning.

Enhancing the Neural Network: Incorporating Advanced Techniques

To demonstrate the scalability and flexibility of neural networks, we can enhance our MLP by introducing techniques such as dropout for regularization and batch normalization for stabilizing and accelerating training.

Adding Dropout for Regularization

Dropout is a regularization technique that helps prevent overfitting by randomly setting a fraction of input units to zero during training. This encourages the network to learn more robust features that are not reliant on specific neurons.

from tensorflow.keras.layers import Dropout

# Define an enhanced neural network architecture with Dropout

model_dropout = models.Sequential([

layers.Dense(128, activation='relu', input_shape=(20,)),

Dropout(0.5),

layers.Dense(64, activation='relu'),

Dropout(0.5),

layers.Dense(10, activation='softmax')

])

# Compile the model

model_dropout.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Display the model summary

model_dropout.summary()Explanation of the Code:

Importing Dropout:

Dropoutis imported fromtensorflow.keras.layers.

Defining the Model with Dropout:

First Dense Layer: 128 neurons with ReLU activation.

First Dropout Layer:

Dropout(0.5)randomly drops 50% of the neurons during training.Second Dense Layer: 64 neurons with ReLU activation.

Second Dropout Layer:

Dropout(0.5)again drops 50% of the neurons.Output Layer: 10 neurons with softmax activation for multi-class classification.

Compiling the Model:

Uses the Adam optimizer and categorical cross-entropy loss, similar to the previous model

Model Summary:

Provides details of the model architecture, including the added dropout layers.

Benefits of Dropout:

Prevents Overfitting: By randomly deactivating neurons, dropout reduces the network’s reliance on specific paths, promoting the learning of more general features.

Improves Generalization: Enhances the network’s ability to perform well on unseen data by mitigating overfitting.

Training the Model with Dropout:

# Train the dropout-enhanced model

history_dropout = model_dropout.fit(X_train, y_train,

epochs=50,

batch_size=32,

validation_split=0.2)

# Evaluate the model on the test set

test_loss_dropout, test_accuracy_dropout = model_dropout.evaluate(X_test, y_test, verbose=0)

print(f"Test Loss with Dropout: {test_loss_dropout:.4f}")

print(f"Test Accuracy with Dropout: {test_accuracy_dropout:.4f}")

# Plot training & validation accuracy and loss

plt.figure(figsize=(12, 5))

# Accuracy plot

plt.subplot(1, 2, 1)

plt.plot(history_dropout.history['accuracy'], label='Train Accuracy', color='green')

plt.plot(history_dropout.history['val_accuracy'], label='Validation Accuracy', color='red')

plt.title('Model Accuracy with Dropout')

plt.xlabel('Epoch')

plt.ylabel('Accuracy')

plt.legend(loc='lower right')

# Loss plot

plt.subplot(1, 2, 2)

plt.plot(history_dropout.history['loss'], label='Train Loss', color='green')

plt.plot(history_dropout.history['val_loss'], label='Validation Loss', color='red')

plt.title('Model Loss with Dropout')

plt.xlabel('Epoch')

plt.ylabel('Loss')

plt.legend(loc='upper right')

plt.tight_layout()

plt.show()Interpreting the Enhanced Model’s Performance:

By introducing dropout, the model aims to generalize better, especially in scenarios where overfitting is a concern. In synthetic data with random patterns, the benefits may not be prominent, but in real-world datasets with inherent structures and noise, dropout can significantly improve performance by enhancing the model’s robustness.

Incorporating Batch Normalization

Batch normalization is a technique that normalizes the inputs to each layer, stabilizing and accelerating the training process. It helps mitigate issues related to internal covariate shift, where the distribution of inputs to a layer changes during training.

from tensorflow.keras.layers import BatchNormalization

# Define a neural network architecture with Batch Normalization

model_bn = models.Sequential([

layers.Dense(128, activation='relu', input_shape=(20,)),

BatchNormalization(),

Dropout(0.3),

layers.Dense(64, activation='relu'),

BatchNormalization(),

Dropout(0.3),

layers.Dense(10, activation='softmax')

])

# Compile the model

model_bn.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Display the model summary

model_bn.summary()Explanation of the Code:

Importing BatchNormalization:

BatchNormalizationis imported fromtensorflow.keras.layers.

Defining the Model with Batch Normalization,

First Dense Layer: 128 neurons with ReLU activation.

First Batch Normalization Layer: Normalizes the output of the previous dense layer.

First Dropout Layer:

Dropout(0.3)drops 30% of the neurons.Second Dense Layer: 64 neurons with ReLU activation.

Second Batch Normalization Layer: Normalizes the output of the second dense layer.

Second Dropout Layer:

Dropout(0.3)drops 30% of the neurons.Output Layer: 10 neurons with softmax activation for multi-class classification.

Compiling the Model:

Uses the Adam optimizer and categorical cross-entropy loss, consistent with previous models.

Model Summary:

Provides details of the model architecture, including the added batch normalization layers.

Benefits of Batch Normalization:

Stabilizes Learning: Reduces the sensitivity to network initialization and hyperparameters by normalizing layer inputs.

Accelerates Training: Allows for higher learning rates without compromising training stability.

Acts as Regularization: Introduces a slight noise to each mini-batch, which can help prevent overfitting.

Training the Model with Batch Normalization:

# Train the batch normalization-enhanced model

history_bn = model_bn.fit(X_train, y_train,

epochs=50,

batch_size=32,

validation_split=0.2)

# Evaluate the model on the test set

test_loss_bn, test_accuracy_bn = model_bn.evaluate(X_test, y_test, verbose=0)

print(f"Test Loss with Batch Normalization: {test_loss_bn:.4f}")

print(f"Test Accuracy with Batch Normalization: {test_accuracy_bn:.4f}")

# Plot training & validation accuracy and loss

plt.figure(figsize=(12, 5))

# Accuracy plot

plt.subplot(1, 2, 1)

plt.plot(history_bn.history['accuracy'], label='Train Accuracy', color='purple')

plt.plot(history_bn.history['val_accuracy'], label='Validation Accuracy', color='brown')

plt.title('Model Accuracy with Batch Normalization')

plt.xlabel('Epoch')

plt.ylabel('Accuracy')

plt.legend(loc='lower right')

# Loss plot

plt.subplot(1, 2, 2)

plt.plot(history_bn.history['loss'], label='Train Loss', color='purple')

plt.plot(history_bn.history['val_loss'], label='Validation Loss', color='brown')

plt.title('Model Loss with Batch Normalization')

plt.xlabel('Epoch')

plt.ylabel('Loss')

plt.legend(loc='upper right')

plt.tight_layout()

plt.show()Interpreting the Enhanced Model’s Performance:

Incorporating batch normalization can lead to faster convergence and improved performance, especially in deep networks. By normalizing the inputs to each layer, the network can maintain stable distributions of activations, facilitating more efficient learning. This is particularly beneficial when dealing with complex datasets where the data distribution may vary significantly across different features.

Advanced Topic: Implementing Forward Propagation Manually Using NumPy

To deepen our understanding of forward propagation, we will manually compute the outputs of a simple neural network using NumPy. This exercise bypasses high-level frameworks, offering insight into the explicit computations that underlie neural network operations.

import numpy as np

# Define the activation functions

def relu(x):

return np.maximum(0, x)

def softmax(x):

exps = np.exp(x - np.max(x)) # Stability improvement

return exps / np.sum(exps, axis=0)

# Define the neural network architecture manually

class SimpleNeuralNetwork:

def __init__(self, input_size, hidden_sizes, output_size):

# Initialize weights and biases

self.weights = []

self.biases = []

layer_sizes = [input_size] + hidden_sizes + [output_size]

for i in range(len(layer_sizes)-1):

weight = np.random.randn(layer_sizes[i], layer_sizes[i+1]) * 0.1

bias = np.zeros((1, layer_sizes[i+1]))

self.weights.append(weight)

self.biases.append(bias)

def forward(self, X):

activations = []

input = X

for i in range(len(self.weights)):

z = np.dot(input, self.weights[i]) + self.biases[i]

if i < len(self.weights) -1:

a = relu(z)

else:

a = softmax(z)

activations.append(a)

input = a

return activations

# Create a simple network

input_size = 20

hidden_sizes = [64, 32]

output_size = 10

network = SimpleNeuralNetwork(input_size, hidden_sizes, output_size)

# Generate a random input vector

X = np.random.randn(1, input_size)

# Perform forward propagation

activations = network.forward(X)

# Display the outputs of each layer

for idx, activation in enumerate(activations):

if idx < len(activations) -1:

activation_func = 'ReLU'

else:

activation_func = 'Softmax'

print(f"Layer {idx+1} ({activation_func}) output:\n{activation}\n")Explanation of the Code:

Defining Activation Functions:

ReLU: Implements the ReLU activation, setting negative values to zero.

Softmax: Converts raw scores into probabilities, ensuring they sum to one. A stability improvement is included by subtracting the maximum value in the input vector to prevent large exponentials.

Defining the Neural Network Class:

Initialization (

__init__):Weights and Biases: Initialized with small random values for weights and zeros for biases. The network’s architecture is defined by

input_size,hidden_sizes, andoutput_size.Forward Method (

forward):Forward Propagation: Iterates through each layer, computing the weighted sum (

z) and applying the activation function (a). The activations are stored for each layer.

Creating the Network:

An instance of

SimpleNeuralNetworkis created with 20 input features, two hidden layers with 64 and 32 neurons respectively, and an output layer with 10 neurons.

Generating Input Data:

A random input vector

Xwith shape(1, 20)is generated to simulate a single sample with 20 features.

Performing Forward Propagation:

network.forward(X)computes the activations for each layer, returning a list of activation matrices.

Displaying Layer Outputs:

Iterates through the activations, printing the output of each layer along with the corresponding activation function.

Sample Output:

Layer 1 (ReLU) output:

[[0. 0. 0. 0. 0.02828821 0.

0. 0. 0. 0. 0.01201734 0.

0. 0. 0. 0. 0. 0.

0. 0. ]]

Layer 2 (ReLU) output:

[[0. 0. 0. 0. 0.02828821 0.

0. 0. 0. 0. 0.01201734 0.

0. 0. 0. 0. 0. 0.

0. 0. ]]

Layer 3 (Softmax) output:

[[1.00000000e+00 0.00000000e+00 0.00000000e+00 0.00000000e+00

1.14904089e-21 0.00000000e+00 0.00000000e+00 0.00000000e+00

0.00000000e+00 0.00000000e+00]]Interpreting the Results:

Layer 1 (ReLU): The activation outputs are sparse, with most values being zero due to the ReLU activation. Only neurons with positive weighted sums produce non-zero activations.

Layer 2 (ReLU): Similar sparsity is observed, indicating that the activations are predominantly zero except for neurons receiving sufficient input.

Layer 3 (Softmax): The output is a probability distribution over the 10 classes. In this particular run, one neuron dominates with a probability of 1, which is an artifact of the random initialization and lack of training.

This manual forward propagation example highlights the step-by-step computations that occur within a neural network, reinforcing the theoretical concepts discussed earlier. While high-level frameworks abstract away these computations for efficiency and ease of use, understanding the manual process provides valuable insights into the network’s inner workings.

Section 4: Training Neural Networks

Training neural networks is a pivotal phase in the machine learning pipeline, transforming static models into dynamic systems capable of learning from data. This section delves into the intricacies of training neural networks, focusing on supervised learning paradigms, the selection and role of loss functions, the optimization algorithms that guide learning, and the practical steps of training and evaluating models using TensorFlow and Keras. Through comprehensive explanations and advanced code examples, we will elucidate the processes that enable neural networks to achieve high performance in diverse applications.

Supervised Learning and the Training Process

Supervised learning is one of the most prevalent paradigms in machine learning, wherein the model learns to map input data to corresponding output labels based on a set of training examples. In this context, neural networks are trained to minimize the discrepancy between their predictions and the actual target values, effectively learning the underlying patterns and relationships within the data.