Algorithmic Trading: The Power of Data

Mastering Financial Data Acquisition, Storage, and Manipulation with Python

Data is the lifeblood of algorithmic trading. As Rob Thomas rightly observed, “Data beats algorithms.” While sophisticated algorithms are crucial, their effectiveness hinges on the quality, quantity, and relevance of the data they consume. Without comprehensive and accurate data, even the most complex models will struggle to generate reliable predictions. In the fast-paced world of finance, where milliseconds can translate into significant profits or losses, understanding and leveraging data effectively is paramount. This article will delve into the practical aspects of acquiring, managing, and storing financial data, specifically focusing on the structured data essential for backtesting trading strategies.

Four primary categories of financial data are typically encountered in algorithmic trading:

Price and Volume Data: This includes the core time series data that forms the foundation of most trading strategies. Examples include opening, high, low, and closing (OHLC) prices, as well as the volume traded for a specific asset over a specific period.

Order Book Data: This represents the state of the market in terms of buy and sell orders at various price levels. It provides insights into market liquidity and order imbalances.

Fundamental Data: This encompasses financial statements, such as balance sheets, income statements, and cash flow statements, as well as macroeconomic indicators, industry reports, and other factors that can influence asset prices.

News and Sentiment Data: This includes news articles, social media posts, analyst reports, and other textual data sources that can be used to gauge market sentiment and predict price movements.

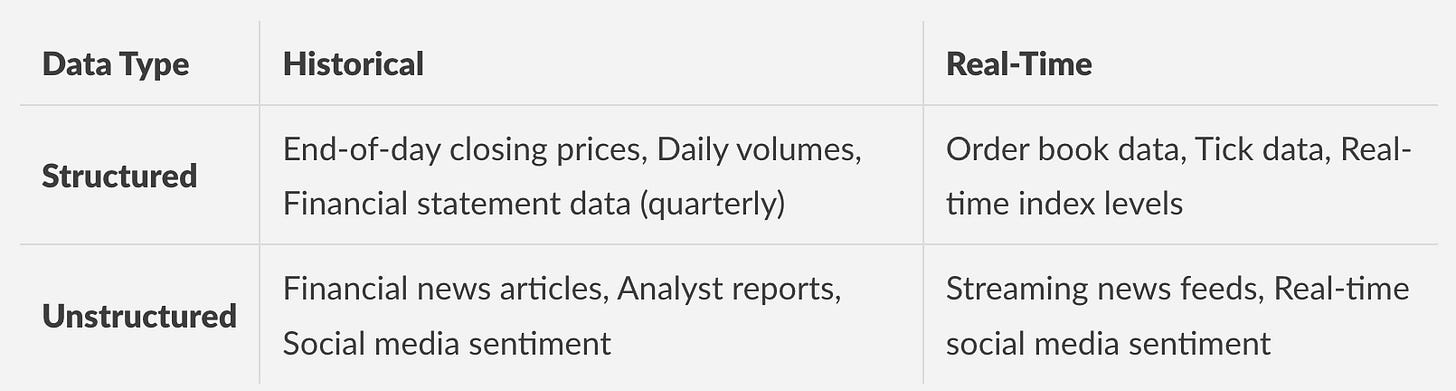

To effectively manage and utilize these diverse data types, it’s helpful to classify them along two key dimensions: historical vs. real-time and structured vs. unstructured.

The table above provides a basic classification. The distinction between historical and real-time data is straightforward: historical data reflects past events, while real-time data provides a continuous stream of current information. The structured/unstructured distinction is a bit more nuanced. Structured data is organized in a predefined format, such as tables or spreadsheets, making it readily accessible for analysis. Think of end-of-day closing prices, where each row represents a specific date and contains columns for the open, high, low, close, and volume. Unstructured data, on the other hand, lacks a predefined format and often requires more complex processing to extract meaningful information. Financial news articles, social media feeds, and analyst reports fall into this category.

This article will primarily focus on structured data, encompassing both historical and real-time types. Specifically, we’ll concentrate on acquiring and working with historical, structured data, using end-of-day closing values as a primary example. However, the techniques and concepts discussed are readily adaptable to other types of structured data, including intraday data like 1-minute bar data, and even real-time data streams (which will be addressed in more detail later). The fundamental goal is to provide the necessary financial data in a format that is suitable for the effective backtesting of trading ideas.

Reading Financial Data From Different Sources Using Pandas

The cornerstone of working with financial data in Python is the pandas library. pandas provides powerful data structures, such as DataFrame, and a comprehensive set of tools for data manipulation and analysis. We’ll explore how to read financial data from various sources, starting with simple CSV files and expanding to more complex data sources like APIs.

First, let’s consider a common scenario: reading historical price data stored in a CSV file. Assume we have a CSV file named AAPL.csv containing daily closing prices for Apple stock. The file might look something like this:

Date,Open,High,Low,Close,Volume

2023-01-03,130.28,130.89,124.17,125.07,112117700

2023-01-04,126.89,128.66,125.08,126.36,89186000

2023-01-05,127.13,127.76,125.86,126.74,85762300

2023-01-06,127.26,130.28,126.30,129.30,87754700

2023-01-09,130.48,133.42,129.89,130.19,70790800

The following Python code demonstrates how to read this data into a pandas DataFrame:

import pandas as pd

# Specify the path to your CSV file

file_path = 'AAPL.csv'

# Read the CSV file into a pandas DataFrame

try:

df = pd.read_csv(file_path)

print("Data successfully loaded from CSV.")

except FileNotFoundError:

print(f"Error: The file '{file_path}' was not found.")

except Exception as e:

print(f"An error occurred: {e}")

# Display the first few rows of the DataFrame

if 'df' in locals():

print(df.head())

# Example output (first 5 rows)

# Date Open High Low Close Volume

# 0 2023-01-03 130.28 130.89 124.17 125.07 112117700

# 1 2023-01-04 126.89 128.66 125.08 126.36 89186000

# 2 2023-01-05 127.13 127.76 125.86 126.74 85762300

# 3 2023-01-06 127.26 130.28 126.30 129.30 87754700

# 4 2023-01-09 130.48 133.42 129.89 130.19 70790800

This code snippet utilizes pd.read_csv() to load the CSV data into a DataFrame. The try...except block handles potential errors, such as the file not being found. The .head() method then displays the first few rows of the DataFrame, allowing us to verify that the data has been loaded correctly.

A crucial step is converting the ‘Date’ column to the correct datetime format and setting it as the index for easier time-series analysis. This can be achieved as follows:

import pandas as pd

file_path = 'AAPL.csv'

try:

df = pd.read_csv(file_path)

# Convert the 'Date' column to datetime objects

df['Date'] = pd.to_datetime(df['Date'])

# Set the 'Date' column as the index

df.set_index('Date', inplace=True)

print("Data successfully loaded from CSV and processed.")

except FileNotFoundError:

print(f"Error: The file '{file_path}' was not found.")

except Exception as e:

print(f"An error occurred: {e}")

if 'df' in locals():

print(df.head())

print(df.index) # Verify the index

# Example output (first 5 rows with date as index)

# Open High Low Close Volume

# Date

# 2023-01-03 130.28 130.89 124.17 125.07 112117700

# 2023-01-04 126.89 128.66 125.08 126.36 89186000

# 2023-01-05 127.13 127.76 125.86 126.74 85762300

# 2023-01-06 127.26 130.28 126.30 129.30 87754700

# 2023-01-09 130.48 133.42 129.89 130.19 70790800

# DatetimeIndex(['2023-01-03', '2023-01-04', '2023-01-05', '2023-01-06',

# '2023-01-09', '2023-01-10', '2023-01-11', '2023-01-12',

# '2023-01-13', '2023-01-17', '2023-01-18', '2023-01-19',

# '2023-01-20', '2023-01-23', '2023-01-24', '2023-01-25',

# '2023-01-26', '2023-01-27', '2023-01-30', '2023-01-31',

# '2023-02-01', '2023-02-02', '2023-02-03', '2023-02-06',

# '2023-02-07', '2023-02-08', '2023-02-09', '2023-02-10',

# '2023-02-13', '2023-02-14', '2023-02-15', '2023-02-16',

# '2023-02-17', '2023-02-21', '2023-02-22', '2023-02-23',

# '2023-02-24', '2023-02-27', '2023-02-28', '2023-03-01',

# '2023-03-02', '2023-03-03', '2023-03-06', '2023-03-07',

# '2023-03-08', '2023-03-09', '2023-03-10', '2023-03-13',

# '2023-03-14', '2023-03-15', '2023-03-16', '2023-03-17',

# '2023-03-20', '2023-03-21', '2023-03-22', '2023-03-23',

# '2023-03-24', '2023-03-27', '2023-03-28', '2023-03-29',

# '2023-03-30', '2023-03-31', '2023-04-03', '2023-04-04',

# '2023-04-05', '2023-04-06', '2023-04-10', '2023-04-11',

# '2023-04-12', '2023-04-13', '2023-04-14', '2023-04-17',

# '2023-04-18', '2023-04-19', '2023-04-20', '2023-04-21',

# '2023-04-24', '2023-04-25', '2023-04-26', '2023-04-27',

# '2023-04-28', '2023-05-01', '2023-05-02', '2023-05-03',

# '2023-05-04', '2023-05-05', '2023-05-08', '2023-05-09',

# '2023-05-10', '2023-05-11', '2023-05-12', '2023-05-15',

# '2023-05-16', '2023-05-17', '2023-05-18', '2023-05-19',

# '2023-05-22', '2023-05-23', '2023-05-24', '2023-05-25',

# '2023-05-26', '2023-05-30', '2023-05-31', '2023-06-01',

# '2023-06-02', '2023-06-05', '2023-06-06', '2023-06-07',

# '2023-06-08', '2023-06-09', '2023-06-12', '2023-06-13',

# '2023-06-14', '2023-06-15', '2023-06-16', '2023-06-19',

# '2023-06-20', '2023-06-21', '2023-06-22', '2023-06-23',

# '2023-06-26', '2023-06-27', '2023-06-28', '2023-06-29',

# '2023-06-30', '2023-07-03', '2023-07-05', '2023-07-06',

# '2023-07-07', '2023-07-10', '2023-07-11', '2023-07-12',

# '2023-07-13', '2023-07-14', '2023-07-17', '2023-07-18',

# '2023-07-19', '2023-07-20', '2023-07-21', '2023-07-24',

# '2023-07-25', '2023-07-26', '2023-07-27', '2023-07-28',

# '2023-07-31', '2023-08-01', '2023-08-02', '2023-08-03',

# '2023-08-04', '2023-08-07', '2023-08-08', '2023-08-09',

# '2023-08-10', '2023-08-11', '2023-08-14', '2023-08-15',

# '2023-08-16', '2023-08-17', '2023-08-18', '2023-08-21',

# '2023-08-22', '2023-08-23', '2023-08-24', '2023-08-25',

# '2023-08-28', '2023-08-29', '2023-08-30', '2023-08-31',

# '2023-09-01', '2023-09-05', '2023-09-06', '2023-09-07',

# '2023-09-08', '2023-09-11', '2023-09-12', '2023-09-13',

# '2023-09-14', '2023-09-15', '2023-09-18', '2023-09-19',

# '2023-09-20', '2023-09-21', '2023-09-22', '2023-09-25',

# '2023-09-26', '2023-09-27', '2023-09-28', '2023-09-29',

# '2023-10-02', '2023-10-03', '2023-10-04', '2023-10-05',

# '2023-10-06', '2023-10-09', '2023-10-10', '2023-10-11',

# '2023-10-12', '2023-10-13', '2023-10-16', '2023-10-17',

# '2023-10-18', '2023-10-19', '2023-10-20', '2023-10-23',

# '2023-10-24', '2023-10-25', '2023-10-26', '2023-10-27',

# '2023-10-30', '2023-10-31', '2023-11-01', '2023-11-02',

# '2023-11-03', '2023-11-06', '2023-11-07', '2023-11-08',

# '2023-11-09', '2023-11-10', '2023-11-13', '2023-11-14',

# '2023-11-15', '2023-11-16', '2023-11-17', '2023-11-20',

# '2023-11-21', '2023-11-22', '2023-11-24', '2023-11-27',

# '2023-11-28', '2023-11-29', '2023-11-30', '2023-12-01',

# '2023-12-04', '2023-12-05', '2023-12-06', '2023-12-07',

# '2023-12-08', '2023-12-11', '2023-12-12', '2023-12-13',

# '2023-12-14', '2023-12-15', '2023-12-18', '2023-12-19',

# '2023-12-20', '2023-12-21', '2023-12-22', '2023-12-26',

# '2023-12-27', '2023-12-28', '2023-12-29']

Here, pd.to_datetime() converts the date strings into datetime objects, enabling time-series specific operations. The set_index() function sets the ‘Date’ column as the index. This is critical for time-series analysis, as it allows efficient indexing, slicing, and resampling based on dates.

After loading the data, you can perform basic data exploration and cleaning. For instance, you might check for missing values:

import pandas as pd

file_path = 'AAPL.csv'

try:

df = pd.read_csv(file_path)

df['Date'] = pd.to_datetime(df['Date'])

df.set_index('Date', inplace=True)

# Check for missing values

print(df.isnull().sum())

except FileNotFoundError:

print(f"Error: The file '{file_path}' was not found.")

except Exception as e:

print(f"An error occurred: {e}")

# Example output (after removing unnecessary columns)

# Open 0

# High 0

# Low 0

# Close 0

# Volume 0

# dtype: int64

The .isnull().sum() method returns the number of missing values for each column. In this example, if there are no missing values, the output will be all zeros. If there are missing values, you’ll need to decide how to handle them, such as by imputing values (e.g., using the mean or a more sophisticated method) or removing the rows containing missing data.

Working with Open Data Sources (Quandl)

While CSV files are useful for local data, accessing data directly from reliable online sources is often more convenient and efficient. Quandl (now part of Nasdaq Data Link) provides an API for accessing a vast array of financial and economic data. To access Quandl data, you’ll need an API key, which you can obtain by creating a free account on the Nasdaq Data Link website.

First, install the quandl package:

pip install quandl

Here’s an example of how to retrieve historical data for Apple (AAPL) from Quandl using the quandl library:

import quandl

import pandas as pd

# Replace 'YOUR_API_KEY' with your actual API key

quandl.ApiConfig.api_key = 'YOUR_API_KEY'

try:

# Retrieve data for Apple (AAPL) from the WIKI dataset

data = quandl.get('WIKI/AAPL', start_date='2023-01-01', end_date='2023-12-31')

print("Quandl data successfully retrieved.")

# Display the first few rows of the DataFrame

print(data.head())

except quandl.errors.quandl_error.QuandlError as e:

print(f"Quandl API Error: {e}")

except Exception as e:

print(f"An unexpected error occurred: {e}")

# Example output (first 5 rows) - note that the exact data will vary

# Open High Low Close Volume Ex-Dividend Split Ratio Adj. Open Adj. High Adj. Low Adj. Close Adj. Volume

# Date

# 2023-01-03 130.28 130.89 124.17 125.07 112117700 0.0 1.0 130.28 130.89 124.17 125.07 112117700

# 2023-01-04 126.89 128.66 125.08 126.36 89186000 0.0 1.0 126.89 128.66 125.08 126.36 89186000

# 2023-01-05 127.13 127.76 125.86 126.74 85762300 0.0 1.0 127.13 127.76 125.86 126.74 85762300

# 2023-01-06 127.26 130.28 126.30 129.30 87754700 0.0 1.0 127.26 130.28 126.30 129.30 87754700

# 2023-01-09 130.48 133.42 129.89 130.19 70790800 0.0 1.0 130.48 133.42 129.89 130.19 70790800

This code retrieves data for Apple (AAPL) from the “WIKI” dataset on Quandl. The quandl.get() function fetches the data, and you specify the start and end dates to limit the data range. The .head() method displays the first few rows of the DataFrame.

Notice that the Quandl data often includes more columns than a simple CSV file. You can select specific columns or rename them as needed:

import quandl

import pandas as pd

quandl.ApiConfig.api_key = 'YOUR_API_KEY'

try:

data = quandl.get('WIKI/AAPL', start_date='2023-01-01', end_date='2023-12-31')

# Select specific columns and rename them

df = data[['Open', 'High', 'Low', 'Close', 'Volume']].copy() # Use .copy() to avoid SettingWithCopyWarning

df.rename(columns={'Open': 'Open', 'High': 'High', 'Low': 'Low', 'Close': 'Close', 'Volume': 'Volume'}, inplace=True)

print("Quandl data successfully retrieved and processed.")

print(df.head())

except quandl.errors.quandl_error.QuandlError as e:

print(f"Quandl API Error: {e}")

except Exception as e:

print(f"An unexpected error occurred: {e}")

# Example output (first 5 rows) - with selected and renamed columns

# Open High Low Close Volume

# Date

# 2023-01-03 130.28 130.89 124.17 125.07 112117700

# 2023-01-04 126.89 128.66 125.08 126.36 89186000

# 2023-01-05 127.13 127.76 125.86 126.74 85762300

# 2023-01-06 127.26 130.28 126.30 129.30 87754700

# 2023-01-09 130.48 133.42 129.89 130.19 70790800

This code snippet selects the ‘Open’, ‘High’, ‘Low’, ‘Close’, and ‘Volume’ columns and renames them using the .rename() method. The .copy() method is used to create a copy of the selected columns to avoid the SettingWithCopyWarning. This is a good practice to ensure that the modifications are not affecting the original DataFrame. The inplace=True argument ensures that the changes are applied directly to the DataFrame.

Quandl offers various datasets. To find the correct dataset code, you can search on the Nasdaq Data Link website. For example, you might use the “WIKI” dataset for historical stock prices, “FRED” for macroeconomic data, or “EOD” for end-of-day data from Refinitiv.

Eikon Data API (Refinitiv Eikon Data API wrapper)

For more comprehensive data, particularly for advanced analysis and real-time data feeds, consider using the Refinitiv Eikon Data API. This API provides access to a vast range of financial data, including real-time market data, news, and fundamental data. To use the Eikon Data API, you’ll need an Eikon terminal or Refinitiv Workspace subscription and the necessary API credentials.

The Eikon Data API is typically accessed through a Python wrapper. This allows you to interact with the API using familiar Python syntax. We will use the eikon package, which provides a convenient way to interact with the Eikon API.

First, install the eikon package:

pip install eikon

Before you can use the eikon package, you need to set up your API credentials. This typically involves configuring the location of your Eikon terminal or Refinitiv Workspace. Refer to the Refinitiv documentation for specific instructions on setting up your API credentials.

Here’s a basic example demonstrating how to retrieve historical price data using the eikon package:

import eikon as ek

import pandas as pd

# Initialize the Eikon API

ek.set_app_key('YOUR_EIKON_APP_KEY') # Replace with your Eikon app key

try:

# Define the RIC (Refinitiv Instrument Code) for Apple

ric = 'AAPL.O'

# Retrieve historical price data

data = ek.get_timeseries(ric, start_period='2023-01-01', end_period='2023-12-31', fields=['OPEN', 'HIGH', 'LOW', 'CLOSE', 'VOLUME'])

print("Eikon data successfully retrieved.")

print(data.head())

except Exception as e:

print(f"An error occurred: {e}")

# Example output (first 5 rows) - with Eikon data

# OPEN HIGH LOW CLOSE VOLUME

# 2023-01-03 130.2800 130.8899 124.1700 125.0700 112117700

# 2023-01-04 126.8899 128.6600 125.0800 126.3600 89186000

# 2023-01-05 127.1300 127.7600 125.8600 126.7399 85762300

# 2023-01-06 127.2600 130.2799 126.3000 129.2999 87754700

# 2023-01-09 130.4799 133.4200 129.8900 130.1900 70790800

The ek.get_timeseries() function retrieves the historical price data for the specified instrument (using its RIC). The fields parameter allows you to specify which data fields to retrieve. The output is a pandas DataFrame with the date as the index and the requested data fields as columns.

The Eikon API offers a wealth of other data. You can retrieve fundamental data, news, and more. The ek.get_data() function is another versatile function in the eikon library for retrieving data, providing flexibility in requesting different types of information. For example, to retrieve fundamental data such as revenue and net

Having established a foundational understanding of financial data and the Python environment, we now turn our attention to a powerful and versatile tool that will become indispensable throughout this article: the pandas library. Pandas is a cornerstone of data analysis in Python, and its capabilities are particularly well-suited to the complexities and demands of financial data analysis. This introduction serves as a bridge, smoothly transitioning from the preliminary concepts to the practical application of pandas in reading, handling, and storing financial data. Its versatility allows us to work with a wide array of data sources, making it an ideal tool for tackling real-world financial challenges. As we move forward, we will see how pandas simplifies the process of data manipulation, enabling us to focus on the analytical insights rather than getting bogged down in the intricacies of data wrangling.

Unveiling the Power of Pandas in Finance

Pandas provides robust support for the diverse range of data formats commonly encountered in the financial world. Whether it’s historical stock prices, economic indicators, or portfolio performance data, pandas offers a consistent and efficient way to handle and manipulate these datasets. The library simplifies the often complex process of importing and preparing financial data for analysis. This simplification stems from its two primary data structures: the DataFrame and the Series. A Series is essentially a one-dimensional labeled array capable of holding any data type, such as integers, strings, floating-point numbers, Python objects, and more. A DataFrame, on the other hand, is a two-dimensional labeled data structure with columns of potentially different types. Think of a DataFrame as a spreadsheet or a SQL table, where each column represents a variable, and each row represents an observation. These structures are designed for efficient data storage and manipulation, providing functionalities such as data alignment, missing data handling, and flexible reshaping.

Pandas also offers a rich set of functions for data cleaning, transformation, and analysis. Functions for handling missing data, such as fillna() and dropna(), are crucial in financial analysis where data gaps are common. Data transformation techniques like creating new columns based on existing ones, or applying custom functions to entire datasets, are made easy. Furthermore, pandas integrates seamlessly with other Python libraries like NumPy, allowing for efficient numerical computations, and Matplotlib, enabling the creation of insightful visualizations. This combination of features makes pandas an invaluable tool for financial analysts, researchers, and anyone working with financial data.

As we will see in the following sections, pandas’ ability to read data from various sources is particularly important. From simple CSV files to complex databases, pandas provides the tools needed to access and prepare data for analysis.

Acquiring Financial Data: A Journey Through Diverse Sources

The ability to acquire data from various sources is a fundamental skill in financial analysis. The remainder of this section will explore how pandas empowers us to read financial data from a variety of sources. We will delve into practical examples and code snippets, demonstrating how to import data from different file formats and data repositories. We will cover the most common sources you will encounter in financial analysis, including CSV files, Excel spreadsheets, and databases. We will also briefly touch on importing data from APIs and other online sources. Each example will provide a clear understanding of the process, allowing you to adapt these techniques to your specific needs. Let’s begin our exploration of data acquisition using pandas.

Reading Data from CSV Files: The Foundation of Data Acquisition

Comma-separated value (CSV) files are a ubiquitous format for storing and exchanging data. They are simple, text-based files that can be easily created and manipulated. In finance, CSV files are frequently used to store historical price data, financial statements, and other types of information. Pandas provides the read_csv() function to read data from CSV files. This function is highly versatile and can handle a wide range of CSV file formats.

Let’s begin with a simple example. Imagine you have a CSV file named “AAPL.csv” containing historical stock prices for Apple. The file might look something like this (although the exact format can vary):

Date,Open,High,Low,Close,Adj Close,Volume

2023-01-03,130.27,130.89,124.17,125.07,124.35,112117500

2023-01-04,128.87,129.34,125.08,126.36,125.63,89148700

2023-01-05,127.77,128.04,124.27,126.04,125.31,83768600

...

Now, let’s read this CSV file into a pandas DataFrame.

import pandas as pd

# Read the CSV file into a DataFrame

try:

df = pd.read_csv("AAPL.csv")

print(df.head()) # Print the first 5 rows

except FileNotFoundError:

print("Error: AAPL.csv not found. Please ensure the file exists in the current directory or specify the correct path.")

except Exception as e:

print(f"An error occurred: {e}")

In this code, we first import the pandas library, aliasing it as pd. The pd.read_csv() function is then used to read the CSV file. The head() method is used to display the first five rows of the DataFrame, which allows us to quickly inspect the data and verify that it has been read correctly. The try...except block handles potential errors, such as the file not being found, making the code more robust. This is crucial since the file path can vary depending on the environment.

The output of the print(df.head()) command will display the first few rows of the DataFrame, showing the “Date”, “Open”, “High”, “Low”, “Close”, “Adj Close”, and “Volume” columns, along with their corresponding data. This allows us to quickly verify that the file was read successfully.

Now, let’s consider some common customizations for read_csv():

Specifying the delimiter: By default,

read_csv()assumes that the delimiter (the character separating the values) is a comma (,). If the file uses a different delimiter, such as a semicolon (;), you can specify it using thesepparameter.

df = pd.read_csv("data.csv", sep=";") # Assuming the delimiter is a semicolon

Skipping rows: The

skiprowsparameter allows you to skip rows at the beginning of the file. This is useful if the file contains header information or comments that you don’t want to include in your data.

df = pd.read_csv("data.csv", skiprows=1) # Skip the first row

Specifying the header: Sometimes, the header row might not be at the very beginning. You can specify the row number containing the column headers using the

headerparameter.

df = pd.read_csv("data.csv", header=1) # Use the second row as the header

Handling missing values: Missing data is common in financial datasets. The

na_valuesparameter lets you specify which values should be treated as missing. The default missing value indicators areNaN,#N/A,#NA,NULL,null,None.

df = pd.read_csv("data.csv", na_values=["-999", "?"]) # Treat -999 and ? as missing

Parsing dates: The

parse_datesparameter allows you to automatically parse date columns.

df = pd.read_csv("AAPL.csv", parse_dates=['Date']) # Parse the 'Date' column

print(df.dtypes) # Verify the data types

After parsing dates, you can perform time-series operations like resampling and calculating rolling statistics. The print(df.dtypes) statement confirms that the ‘Date’ column has the ‘datetime64[ns]’ data type. This is a crucial step for any time-series analysis.

These are just a few examples of the many options available with the read_csv() function. By understanding and utilizing these options, you can efficiently import and prepare a wide range of CSV files for financial analysis.

Reading Data from Excel Spreadsheets

Excel spreadsheets are another common format for storing and sharing financial data. Pandas provides the read_excel() function to read data from Excel files. This function can handle both .xls and .xlsx file formats.

Let’s consider an example. Suppose you have an Excel file named “Financials.xlsx” containing financial statements. This file might have multiple sheets, each containing different data, such as the income statement, balance sheet, and cash flow statement.

import pandas as pd

try:

# Read the first sheet of the Excel file

df = pd.read_excel("Financials.xlsx")

print(df.head())

# Read a specific sheet by name

df_income_statement = pd.read_excel("Financials.xlsx", sheet_name="Income Statement")

print(df_income_statement.head())

# Read a specific sheet by index

df_balance_sheet = pd.read_excel("Financials.xlsx", sheet_name=1) # sheet_name is the index, starting from 0

print(df_balance_sheet.head())

except FileNotFoundError:

print("Error: Financials.xlsx not found. Please ensure the file exists.")

except Exception as e:

print(f"An error occurred: {e}")

In this code, we use pd.read_excel() to read data from the Excel file. By default, it reads the first sheet in the file. The sheet_name parameter can be used to specify which sheet to read. You can specify the sheet name as a string (e.g., “Income Statement”) or its index (starting from 0).

The read_excel() function also supports a variety of options similar to read_csv(), including:

Specifying the header row: You can use the

headerparameter to indicate which row contains the column headers.Skipping rows: The

skiprowsparameter allows you to skip rows at the beginning of the sheet.Handling missing values: The

na_valuesparameter lets you specify values to be treated as missing.Parsing dates: The

parse_datesparameter allows you to automatically parse date columns.

Here’s an example:

try:

df_income_statement = pd.read_excel("Financials.xlsx", sheet_name="Income Statement", header=1, na_values=["N/A"])

print(df_income_statement.head())

except FileNotFoundError:

print("Error: Financials.xlsx not found.")

except Exception as e:

print(f"An error occurred: {e}")

This code reads the “Income Statement” sheet, uses the second row (index 1) as the header, and treats “N/A” values as missing. Excel files can have complex formatting, so you may need to experiment with the parameters to get the data in the format you need.

Interacting with Databases: Accessing Structured Financial Data

Many financial institutions and data providers store their data in databases. Databases provide a structured and efficient way to store, manage, and retrieve large volumes of data. Pandas provides the read_sql() function to read data from SQL databases. This function requires a database connection and a SQL query. To connect to a database, you’ll typically need a database driver, such as psycopg2 for PostgreSQL, pymysql for MySQL, or sqlite3 for SQLite.

Let’s consider an example using SQLite, a simple, file-based database that doesn’t require a separate server. First, let’s create a SQLite database and a table within it.

import sqlite3

# Create a connection to the database

conn = sqlite3.connect('financial_data.db')

# Create a cursor object to execute SQL commands

cursor = conn.cursor()

# Create a table

cursor.execute('''

CREATE TABLE IF NOT EXISTS stock_prices (

Date TEXT,

Open REAL,

High REAL,

Low REAL,

Close REAL,

Volume INTEGER

)

''')

# Insert some sample data

data = [

('2023-01-03', 130.27, 130.89, 124.17, 125.07, 112117500),

('2023-01-04', 128.87, 129.34, 125.08, 126.36, 89148700),

('2023-01-05', 127.77, 128.04, 124.27, 126.04, 83768600)

]

cursor.executemany("INSERT INTO stock_prices VALUES (?, ?, ?, ?, ?, ?)", data)

# Commit the changes

conn.commit()

# Close the connection

conn.close()

This code creates a SQLite database named “financial_data.db” and a table named “stock_prices”. It then inserts some sample stock price data. Now, let’s read this data into a pandas DataFrame.

import pandas as pd

import sqlite3

# Create a connection to the database

conn = sqlite3.connect('financial_data.db')

try:

# Define the SQL query

query = "SELECT * FROM stock_prices"

# Read the data into a DataFrame

df = pd.read_sql_query(query, conn)

# Print the DataFrame

print(df.head())

except sqlite3.Error as e:

print(f"An error occurred: {e}")

finally:

# Close the connection

if 'conn' in locals():

conn.close()

In this code, we first establish a connection to the SQLite database. Then, we define a SQL query to select all columns from the “stock_prices” table. The pd.read_sql_query() function is used to execute the query and read the results into a DataFrame. Finally, we print the first five rows of the DataFrame. The finally block ensures that the database connection is closed, even if an error occurs. This is essential to avoid resource leaks.

For other database systems, you’ll need to install the appropriate database driver (e.g., psycopg2 for PostgreSQL, pymysql for MySQL) and modify the connection string accordingly. The SQL query will also need to be adjusted based on the database schema.

Here’s an example using a PostgreSQL database (assuming you have psycopg2 installed and a database connection configured):

import pandas as pd

import psycopg2

# Replace with your database credentials

db_host = "localhost"

db_name = "your_database_name"

db_user = "your_username"

db_password = "your_password"

try:

# Create a connection to the PostgreSQL database

conn = psycopg2.connect(host=db_host, database=db_name, user=db_user, password=db_password)

# Define the SQL query

query = "SELECT * FROM stock_prices" # Assuming a similar table exists

# Read the data into a DataFrame

df = pd.read_sql_query(query, conn)

# Print the DataFrame

print(df.head())

except psycopg2.Error as e:

print(f"An error occurred: {e}")

finally:

# Close the connection

if 'conn' in locals():

conn.close()

This example demonstrates how to connect to a PostgreSQL database and read data using pandas. Remember to replace the placeholder database credentials with your actual database details.

Reading Data from APIs and Online Sources (Brief Overview)

Financial data is often available through APIs (Application Programming Interfaces) and online sources. APIs provide a programmatic way to access data, and many financial data providers offer APIs to their customers. Pandas, in conjunction with other libraries like requests, can be used to read data from these sources.

Here’s a conceptual example of how you might use the requests library to fetch data from a hypothetical API and then load it into a pandas DataFrame. This is a simplified illustration, and the exact implementation will vary depending on the API.

import pandas as pd

import requests

import json

# Replace with your API endpoint and API key

api_endpoint = "https://api.example.com/stock_prices"

api_key = "YOUR_API_KEY"

try:

# Make the API request

headers = {"Authorization": f"Bearer {api_key}"}

response = requests.get(api_endpoint, headers=headers)

# Check for successful response

response.raise_for_status() # Raise an exception for bad status codes

# Parse the JSON response

data = response.json()

# Create a DataFrame from the JSON data (adjust based on the API's response structure)

df = pd.DataFrame(data)

# Print the DataFrame

print(df.head())

except requests.exceptions.RequestException as e:

print(f"An error occurred during the API request: {e}")

except json.JSONDecodeError as e:

print(f"An error occurred while decoding the JSON response: {e}")

except Exception as e:

print(f"An unexpected error occurred: {e}")

In this example, we first make a request to the API endpoint using the requests.get() function. We handle potential errors during the request using a try...except block. If the request is successful, we parse the JSON response using response.json() and then create a pandas DataFrame from the parsed data. The specific structure of the data (e.g., the keys and values) will depend on the API’s response format.

Reading data from online sources often involves dealing with API authentication, rate limits, and data formatting. You’ll need to consult the API documentation to understand how to authenticate, how to construct requests, and how the data is structured.

Conclusion of Data Acquisition

This section has provided a comprehensive overview of how to read financial data from various sources using pandas. We’ve explored techniques for reading data from CSV files, Excel spreadsheets, and databases. We’ve also briefly touched on reading data from APIs and online sources. Each example included practical code snippets, demonstrating the core functionalities and common customizations.

By mastering these techniques, you have equipped yourself with the fundamental skills needed to acquire and prepare financial data for analysis. The ability to read data from different sources is a critical first step in any financial analysis project. The flexibility and power of pandas make it a perfect tool for this task.

Having established the foundational principles of financial data analysis and the importance of data quality, we now turn our attention to the practical application of these concepts. This section will focus on working with a specific financial dataset: Apple Inc.'s (AAPL) stock price data. We will be using historical data obtained from the Eikon Data API, specifically covering the month of April 2020. This period provides a relevant context for examining market fluctuations and understanding the dynamics of a major technology company during a time of considerable economic uncertainty.

Accessing and Examining the Raw Data

The initial step in any data analysis project involves acquiring and understanding the data. In this case, the historical financial data is stored in a comma-separated values (CSV) file. CSV files are a common format for storing tabular data, making them relatively easy to work with. Before we delve into more advanced techniques, let’s start by examining the data using pure Python to get a feel for its structure and content. This initial inspection will help us identify potential challenges and inform our subsequent data processing steps.

We will use Python’s built-in file handling capabilities to read and display the contents of the CSV file. This approach allows us to understand the fundamental structure of the data without relying on external libraries. The following code snippet demonstrates how to open, read, and print the first few lines of the CSV file:

# Define the file path to the CSV data

file_path = "AAPL_April_2020.csv"

# Open the CSV file in read mode using a 'with' statement

# This ensures the file is automatically closed after use

with open(file_path, 'r') as file:

# Loop through the first 5 lines of the file, including the header

for i in range(5):

# Read a single line from the file

line = file.readline()

# Print the line to the console, using 'end=' to prevent extra newlines

print(line, end='')

Let’s break down this code line by line:

file_path = "AAPL_April_2020.csv": This line defines the file path of the CSV file. Replace"AAPL_April_2020.csv"with the actual file name and location if your data file is located elsewhere.with open(file_path, 'r') as file:: This is the core of the file-handling operation. Thewith open()statement is a context manager. It opens the file specified byfile_pathin read mode ('r'). Theas filepart assigns the opened file object to the variablefile. The advantage of usingwithis that it automatically closes the file, even if errors occur. This is crucial for preventing resource leaks.for i in range(5):: This loop iterates five times, allowing us to read and display the first five lines of the file. Adjust therange()value to see more or fewer lines.line = file.readline(): Inside the loop,file.readline()reads a single line from the file, including the newline character (\n) at the end of the line.print(line, end=''): This line prints thelineto the console. Theend=''argument prevents theprint()function from adding an extra newline character after each line, ensuring that the output is displayed in a cleaner format. Withoutend='', each line would be followed by an extra blank line.

Assuming the CSV file is named AAPL_April_2020.csv and contains the stock price data, running this code will produce the following output (or something very similar, depending on minor variations in the data):

Date,HIGH,CLOSE,LOW,OPEN,COUNT,VOLUME

2020-04-01,61.455,60.35,59.71,59.95,368598,20140872

2020-04-02,61.14,60.36,59.44,60.04,291599,19821312

2020-04-03,60.73,59.75,58.22,59.03,356719,34764680

2020-04-06,62.79,62.3,60.7,60.7,417305,35677648

Understanding the Data Structure

The output from the previous code snippet provides us with a glimpse into the structure of the data. The first line, commonly referred to as the header row, labels each column. The subsequent lines contain the actual financial data for each trading day. Let’s examine the columns:

Date: The date of the trading day (e.g., 2020-04-01).

HIGH: The highest price at which the stock traded during the day.

CLOSE: The closing price of the stock at the end of the trading day.

LOW: The lowest price at which the stock traded during the day.

OPEN: The opening price of the stock at the beginning of the trading day.

COUNT: The number of trades that occurred during the day.

VOLUME: The total number of shares traded during the day.

Understanding these columns is crucial for any subsequent analysis. For example, we can use the HIGH and LOW prices to calculate the daily range, or the OPEN and CLOSE prices to determine the daily price movement. The VOLUME provides insights into the trading activity, indicating the level of investor interest.

However, it is important to note that this initial output is simply a text-based representation of the data. The data is not yet in a format that allows us to perform mathematical operations or other data manipulations directly within Python. Each line is a string, and the individual values (prices, volume, etc.) are also strings, separated by commas. Before we can begin any meaningful analysis, we need to transform this raw data into a more usable structure.

Limitations of this Approach and Next Steps

While the above method provides a quick and easy way to inspect the contents of the CSV file, it has significant limitations. The primary drawback is that the data is not loaded into memory in a format that’s readily accessible for further processing and analysis within Python. We can’t, for instance, easily calculate the average closing price for the month, plot the stock price over time, or perform any other quantitative analysis directly on this data. We would need to parse each line, convert the string values to numerical data types, and organize the data into a more appropriate data structure, such as a list of lists or a dictionary.

The next step, therefore, is to load the data into a more suitable data structure. Specifically, we will explore how to use Python’s built-in data structures and then introduce the csv module to parse the CSV file and store the data in a more organized manner. This will allow us to perform more complex data manipulation and analysis tasks. This transition is vital as we move from simple inspection to more advanced financial analysis techniques. We’ll then move into the use of specialized libraries like pandas to further streamline the process, significantly improving efficiency and analysis capabilities.

Having previously discussed the acquisition and storage of financial data in CSV format, the next crucial step is to bring this data into a Python environment for analysis. Python, with its rich ecosystem of libraries, offers a powerful platform for various financial computations, from simple statistical analysis to complex algorithmic trading strategies. The initial task involves importing the CSV data into a suitable data structure that Python can easily manipulate.

Python’s built-in csv module provides the fundamental tools for handling CSV files. This module allows us to read, write, and process data stored in the CSV format. The primary goal here is to transform the raw data from the CSV file into a usable Python data structure. This transformation is a prerequisite for any subsequent data analysis, allowing us to perform calculations, generate visualizations, and build predictive models.

There are two primary methods for reading CSV files using the csv module: csv.reader and csv.DictReader. Each method offers a different way of representing the CSV data within Python, and the choice between them often depends on the specific needs of the analysis. The first approach uses csv.reader to read the file, and the second approach utilizes csv.DictReader.

Reading CSV Data with csv.reader

The csv.reader function is the most basic way to read a CSV file. It treats the CSV data as a sequence of rows, where each row is a list of strings. Let’s delve into how this works.

The csv.reader function takes a file object as input. It returns an iterator that yields a list for each row in the CSV file. To fully utilize the data, we need to convert this iterator into a concrete data structure, typically a list of lists.

Here’s a code snippet illustrating how to use csv.reader:

import csv

# Replace 'financial_data.csv' with the actual file name

fn = 'financial_data.csv'

# Open the CSV file in read mode ('r')

with open(fn, 'r') as file:

# Create a csv reader object

csv_reader = csv.reader(file)

# Convert the csv reader object into a list of lists

data = list(csv_reader)

# Print the first five rows

print(data[:5])

Let’s dissect this code. First, we import the csv module. Then, we specify the filename. The with open(fn, 'r') as file: statement opens the CSV file. The 'r' mode signifies read mode, indicating that we intend to read data from the file. The csv.reader(file) function then creates a reader object, an iterator that will process the CSV file row by row. Finally, we convert this iterator into a list of lists using list(csv_reader). This creates a data structure where each inner list represents a row in the CSV file.

The output of data[:5] might look something like this, depending on the contents of your financial_data.csv file:

[['Date', 'Open', 'High', 'Low', 'Close', 'Volume'],

['2023-01-03', '175.88', '177.88', '175.00', '177.57', '5197300'],

['2023-01-04', '177.71', '178.99', '175.94', '178.76', '4845400'],

['2023-01-05', '178.86', '180.00', '177.99', '179.92', '5282000'],

['2023-01-06', '179.92', '180.88', '178.78', '180.58', '4654700']]

Notice the structure: the first inner list generally represents the header row, containing the column names. Subsequent lists represent the actual data rows. Each element within these inner lists is a string.

While this list-of-lists structure provides a way to access the data, its usability has limitations. Accessing specific data points requires knowing the index of the column. For instance, to access the closing price for the first data entry, you would need to remember that the ‘Close’ column is, say, at index 4 (assuming the header row is at index 0). This isn’t inherently intuitive and can lead to errors, especially when dealing with CSV files containing a large number of columns.

Consider the task of calculating the average closing price. With the list-of-lists structure, you’d have to iterate through each row, extract the closing price (converting it from a string to a float), and then calculate the average. While feasible, this process is not particularly efficient or readable. The need for more streamlined data access becomes apparent, which is why we turn to the next approach.

Reading CSV Data with csv.DictReader

The csv.DictReader class provides a more convenient way to access data within a CSV file. Instead of a list of lists, csv.DictReader treats each row as a dictionary. This means each data element can be accessed using a key, which is derived from the header row of the CSV file.

Let’s see how this works in practice:

import csv

# Replace 'financial_data.csv' with the actual file name

fn = 'financial_data.csv'

# Open the CSV file in read mode ('r')

with open(fn, 'r') as file:

# Create a csv DictReader object

csv_reader = csv.DictReader(file)

# Convert the csv reader object into a list of dictionaries

data = list(csv_reader)

# Print the first three rows

print(data[:3])

In this modified code, we’re still using the csv module, but we’re now using csv.DictReader instead of csv.reader. The csv.DictReader function takes the file object as input and automatically uses the first row of the CSV file as the keys for the dictionaries. The rest of the rows are then read as dictionaries, where the keys are the column headers, and the values are the corresponding data points.

The output of data[:3] would look something like this:

[{'Date': '2023-01-03', 'Open': '175.88', 'High': '177.88', 'Low': '175.00', 'Close': '177.57', 'Volume': '5197300'},

{'Date': '2023-01-04', 'Open': '177.71', 'High': '178.99', 'Low': '175.94', 'Close': '178.76', 'Volume': '4845400'},

{'Date': '2023-01-05', 'Open': '178.86', 'High': '180.00', 'Low': '177.99', 'Close': '179.92', 'Volume': '5282000'}]

Notice how each element of the list is now a dictionary. Each dictionary represents a row from the CSV file. The keys of each dictionary are the column headers (e.g., ‘Date’, ‘Open’, ‘High’, etc.), and the values are the corresponding data points for that row. This structure makes accessing specific data points much more straightforward.

Comparing and Contrasting the Approaches

The csv.DictReader approach provides significant advantages over the list-of-lists approach when working with CSV files. The primary benefit is improved data access. Instead of relying on integer indices to retrieve data, you can now use descriptive column names as keys. This makes the code more readable, less prone to errors, and easier to maintain.

For example, let’s revisit the calculation of the average closing price. With csv.DictReader, accessing the closing price is as simple as accessing the value associated with the ‘Close’ key in each dictionary.

Here’s how to calculate the average closing price using a list comprehension with the csv.DictReader data structure:

import csv

fn = 'financial_data.csv'

with open(fn, 'r') as file:

csv_reader = csv.DictReader(file)

data = list(csv_reader)

# Calculate the average closing price

average_closing_price = sum([float(row['Close']) for row in data]) / len(data)

print(f"Average Closing Price: {average_closing_price:.2f}")

In this code, row['Close'] directly accesses the closing price for each row. We then convert the ‘Close’ value to a float using float() before summing it. The result is then divided by the number of rows to calculate the average. This approach is significantly more readable and less prone to errors compared to accessing the closing price using an index.

Here’s a more comprehensive example demonstrating other calculations using csv.DictReader:

import csv

fn = 'financial_data.csv'

with open(fn, 'r') as file:

csv_reader = csv.DictReader(file)

data = list(csv_reader)

# Calculate the average opening price

average_opening_price = sum([float(row['Open']) for row in data]) / len(data)

print(f"Average Opening Price: {average_opening_price:.2f}")

# Calculate the highest closing price

highest_closing_price = max([float(row['Close']) for row in data])

print(f"Highest Closing Price: {highest_closing_price:.2f}")

# Calculate the lowest closing price

lowest_closing_price = min([float(row['Close']) for row in data])

print(f"Lowest Closing Price: {lowest_closing_price:.2f}")

# Calculate the daily price range (High - Low) for each day

daily_price_ranges = [float(row['High']) - float(row['Low']) for row in data]

print(f"Daily Price Ranges (first 5): {daily_price_ranges[:5]}")

# Calculate the average daily price range

average_daily_price_range = sum(daily_price_ranges) / len(daily_price_ranges)

print(f"Average Daily Price Range: {average_daily_price_range:.2f}")

# Example of filtering data - finding days where the closing price was above a certain threshold

threshold = 180

above_threshold_days = [row for row in data if float(row['Close']) > threshold]

print(f"\nDays with Closing Price Above {threshold}:")

for day in above_threshold_days:

print(f"Date: {day['Date']}, Close: {day['Close']}")

# Example of calculating the total volume traded

total_volume = sum([int(row['Volume']) for row in data])

print(f"\nTotal Volume Traded: {total_volume}")

This extended example showcases how csv.DictReader enables easy access to different data points and makes performing various calculations relatively straightforward. The code calculates the average opening price, the highest and lowest closing prices, the daily price ranges, and the total trading volume. It also includes an example of filtering the data based on a closing price threshold.

However, even with csv.DictReader, these calculations still involve writing code to iterate through the data and perform the necessary operations. While more readable and accessible than the list-of-lists approach, it might still require a significant amount of code for more complex financial analyses. The csv module is useful for basic CSV file handling, but it can become cumbersome for more sophisticated tasks, especially when dealing with large datasets or complex calculations.

The limitations of the csv module, particularly in the context of financial data analysis, become more apparent when we consider the need to perform more advanced operations, such as time series analysis, data cleaning, and integration with other financial data sources. This is where more powerful libraries come into play. As such, the next logical step is to explore more specialized tools designed for financial data manipulation, such as the pandas library. Pandas provides more convenient and often faster methods for importing, handling, and analyzing financial time series data, as we shall see.

Having explored the fundamental principles of data handling, we now turn our attention to a specific, yet ubiquitous, application: financial data analysis. The techniques and tools developed here will serve as a foundation for understanding more complex analytical methods. To illustrate the process, we’ll leverage the powerful pandas library to work with a financial dataset, specifically Apple (AAPL) stock price data. Pandas offers a streamlined and efficient approach to working with tabular data, vastly simplifying and accelerating the process of reading CSV files compared to using Python’s standard library alone. This efficiency is crucial in the fast-paced world of financial analysis, where timely access and manipulation of data are paramount.

Importing Data with read_csv()

The cornerstone of data import in pandas is the read_csv() function. This versatile tool provides a comprehensive set of options for reading data from CSV (Comma Separated Values) files, a common format for storing and exchanging data. The flexibility of read_csv() lies in its numerous parameters, which allow for extensive customization of the import process, handling everything from simple data files to complex ones with missing values, different delimiters, and varied data types.

The read_csv() function returns a DataFrame object. The DataFrame is the core data structure in pandas, designed to store and manipulate tabular data, much like a spreadsheet or SQL table. It provides labeled axes (rows and columns), enabling efficient data selection, filtering, and transformation. The DataFrame is the workhorse of pandas and is especially well-suited for financial analysis, where data often comes in a structured, tabular format. Its ability to handle time series data, perform statistical calculations, and integrate with other libraries makes it an indispensable tool for any financial analyst.

Let’s dive into a practical example. Suppose we have a CSV file named AAPL.csv containing historical Apple stock data. This file might have columns like “Date”, “Open”, “High”, “Low”, “Close”, “Volume”, and “Adj Close”. Our goal is to load this data into a pandas DataFrame for analysis. The following code demonstrates how to accomplish this:

import pandas as pd

# Define the filename for the CSV file. Replace with the actual path if needed.

fn = 'AAPL.csv'

# Use read_csv to load the data, specifying the index column and date parsing.

try:

data = pd.read_csv(fn, index_col=0, parse_dates=True)

print(f"Successfully read {fn} into a pandas DataFrame.")

except FileNotFoundError:

print(f"Error: The file '{fn}' was not found. Ensure the file exists and the path is correct.")

except Exception as e:

print(f"An unexpected error occurred: {e}")

In this code:

We begin by importing the pandas library using

import pandas as pd. This line makes all the pandas functionality accessible through the aliaspd, which is the standard and widely accepted convention. This is much easier than writingpandas.read_csv()every time we call a pandas function.We define the filename of our CSV file using the

fnvariable. It’s good practice to store this as a variable for easier maintenance and modification.The core of the code is the

pd.read_csv()function call. We pass the filename (fn) as the first argument, telling pandas which file to load. We also include two crucial parameters:index_col=0: This parameter tells pandas to use the first column (index 0) of the CSV file as the index for theDataFrame. In our example, the first column is likely the “Date” column, which is ideal for time series analysis. The index is critical for efficient data access and alignment.parse_dates=True: This parameter instructs pandas to attempt to parse the index column (the “Date” column in our case) as dates. If successful, the index will be aDatetimeIndex, which unlocks powerful time-based functionalities.

We wrap the

read_csvfunction in atry...exceptblock to handle potential errors, such as the file not being found or other issues during the reading process. This is crucial for writing robust and user-friendly code. This is important because it prevents our program from crashing and provides informative error messages to the user if something goes wrong.

After running this code, the data variable will hold a pandas DataFrame containing the Apple stock price data.

Understanding the DataFrame with .info()

Once the data is loaded into a DataFrame, it’s essential to understand its structure and contents. The info() method provides a concise summary of the DataFrame, giving a quick overview of its characteristics. It’s a vital step in any data analysis workflow. Let’s explore how to use and interpret the output of data.info():

import pandas as pd

# Assuming the 'AAPL.csv' file is in the same directory or the correct path is provided

fn = 'AAPL.csv'

try:

data = pd.read_csv(fn, index_col=0, parse_dates=True)

print(f"Successfully read {fn} into a pandas DataFrame.\n")

data.info() # Call the info() method to get a summary of the DataFrame

except FileNotFoundError:

print(f"Error: The file '{fn}' was not found. Ensure the file exists and the path is correct.")

except Exception as e:

print(f"An unexpected error occurred: {e}")

When you execute data.info(), the output will resemble something like this (the exact numbers will depend on the specific data in your AAPL.csv file):

<class 'pandas.core.frame.DataFrame'>

DatetimeIndex: 2517 entries, 2013-01-02 to 2023-12-29

Data columns (total 6 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Open 2517 non-null float64

1 High 2517 non-null float64

2 Low 2517 non-null float64

3 Close 2517 non-null float64

4 Adj Close 2517 non-null float64

5 Volume 2517 non-null int64

dtypes: float64(5), int64(1)

memory usage: 132.4 KB

Let’s break down this output:

<class 'pandas.core.frame.DataFrame'>: This confirms that thedatavariable is indeed a pandasDataFrameobject.DatetimeIndex: 2517 entries, 2013-01-02 to 2023-12-29: This indicates that the index of theDataFrameis aDatetimeIndex, meaning the index consists of date and time values. It also tells us the range of dates covered by the data (from January 2, 2013, to December 29, 2023, in this example) and the total number of entries (2517, representing the number of trading days in the given period).Data columns (total 6 columns):: This line shows that theDataFramehas 6 columns.The numbered list of columns and their details: This is the core of the information. Each row in this list provides details about a specific column:

#: The column number (starting from 0).Column: The name of the column (e.g., “Open”, “High”, “Low”, “Close”, “Adj Close”, “Volume”).Non-Null Count: The number of non-null (i.e., not missing) values in the column. A value of 2517 for all columns in this example suggests that there are no missing data points. If there were missing values, this number would be less than the total number of entries.Dtype: The data type of the values in the column. Common data types include:float64: Floating-point numbers (used for prices).int64: Integer numbers (used for volume).object: Usually represents strings (though not in this example).datetime64: Date and time values (for the index).

dtypes: float64(5), int64(1): This summarizes the data types used in the DataFrame, listing the number of columns for each data type.memory usage: 132.4 KB: This indicates the amount of memory (in kilobytes) used by theDataFrame. This can be useful for understanding the size of your dataset and potentially optimizing memory usage if dealing with very large datasets.

The info() method is invaluable for:

Data Quality Assessment: Checking for missing values (indicated by

Non-Null Countnot matching the total number of entries).Data Type Verification: Ensuring that columns have the correct data types for subsequent analysis (e.g., prices are floats, dates are datetime objects).

Understanding Data Structure: Getting a quick overview of the number of rows, columns, and the nature of the data.

Memory Usage Awareness: Monitoring memory usage, particularly when working with large datasets.

Previewing the Data with .tail()

After loading and inspecting the data’s structure using info(), it’s often helpful to view a sample of the data to understand its contents and format. The tail() method provides a quick and easy way to display the last few rows of the DataFrame. By default, it shows the last five rows, but you can specify a different number of rows as an argument (e.g., data.tail(10) for the last ten rows). This is useful for verifying that the data has been loaded correctly and for getting a feel for the data’s values.

import pandas as pd

# Assuming the 'AAPL.csv' file is in the same directory or the correct path is provided

fn = 'AAPL.csv'

try:

data = pd.read_csv(fn, index_col=0, parse_dates=True)

print(f"Successfully read {fn} into a pandas DataFrame.\n")

print("DataFrame Info:")

data.info()

print("\nLast 5 rows of the DataFrame:")

print(data.tail()) # Display the last 5 rows

except FileNotFoundError:

print(f"Error: The file '{fn}' was not found. Ensure the file exists and the path is correct.")

except Exception as e:

print(f"An unexpected error occurred: {e}")

The output of data.tail() will display the last five rows of the DataFrame, which will look something like this (the values will vary depending on the AAPL.csv data):

Last 5 rows of the DataFrame:

Open High Low Close Adj Close Volume

Date

2023-12-22 195.100006 195.419998 193.500000 195.029999 194.412292 20554100

2023-12-26 195.699997 197.669998 195.170000 197.570007 196.945938 28991500

2023-12-27 198.339996 198.669998 197.229996 198.080002 197.454132 21254100

2023-12-28 199.020004 199.669998 197.750000 198.479996 197.852844 24184000

2023-12-29 197.940002 199.089996 197.490005 198.970001 198.341370 34247000

This output provides a clear view of the data:

Index (Date): The dates are displayed in the first column (the index).

Column Values: The values for each column (Open, High, Low, Close, Adj Close, and Volume) are shown for each date.

By examining the output of tail(), you can quickly verify that:

The data has been loaded correctly, as the dates and values align with your expectations.

The data is in the expected format.

There are no obvious data entry errors or inconsistencies.

The tail() method is a simple but essential tool for exploratory data analysis, giving you a quick glimpse of your data and confirming that the import process has been successful.

Basic Data Analysis: Calculating the Mean Closing Price

Now that we’ve successfully loaded the data and examined its structure, let’s perform a simple data analysis task to demonstrate the power of pandas. We’ll calculate the mean (average) of the closing stock prices. This single calculation provides a key statistical insight into the stock’s performance over the period covered by the data.

import pandas as pd

# Assuming the 'AAPL.csv' file is in the same directory or the correct path is provided

fn = 'AAPL.csv'

try:

data = pd.read_csv(fn, index_col=0, parse_dates=True)

print(f"Successfully read {fn} into a pandas DataFrame.\n")

# Calculate the mean of the 'CLOSE' column

mean_closing_price = data['Close'].mean()

print(f"The mean closing price of Apple stock is: ${mean_closing_price:.2f}")

except FileNotFoundError:

print(f"Error: The file '{fn}' was not found. Ensure the file exists and the path is correct.")

except Exception as e:

print(f"An unexpected error occurred: {e}")

In this code:

We load the Apple stock data into a pandas DataFrame, as we did before.

We use the

data['Close']syntax to select the “Close” column from the DataFrame. This selects the column containing the closing stock prices.We then apply the

mean()method to the selected column (data['Close'].mean()). This calculates the average of all the values in the “Close” column.Finally, we print the result, formatted to two decimal places for readability.

The output will be a single number representing the mean closing price of Apple stock during the period covered by the AAPL.csv file. This mean value provides a valuable summary statistic of the stock’s performance, indicating the average price at which the stock closed during the period. This is the most basic of many metrics that can be calculated using the pandas library.

Beyond the Basics

This section has demonstrated the fundamental steps involved in reading CSV data with pandas, understanding the DataFrame structure, and performing basic calculations. We’ve covered the read_csv() function, the info() and tail() methods for data inspection, and a simple example of calculating the mean. This provides a solid foundation for more advanced financial data analysis.

The pandas library offers a vast array of functionalities for data manipulation, analysis, and visualization. In the subsequent sections, we will delve into more advanced techniques, including data cleaning, time series analysis, and more sophisticated statistical calculations. We’ll explore how to:

Handle missing data and outliers.

Resample time series data (e.g., from daily to monthly).

Calculate rolling statistics (e.g., moving averages).

Perform more complex statistical analyses, such as correlation and regression.

For a comprehensive understanding of pandas and the DataFrame class, refer to the official pandas documentation (https://pandas.pydata.org/docs/) and the book “Python for Data Analysis” by Wes McKinney (2017). These resources provide in-depth explanations, numerous examples, and detailed coverage of all the features and capabilities of pandas. The benefits of using pandas for CSV file reading are immediate: the simplicity and efficiency of the read_csv() function and its seamless integration with data analysis capabilities. As we move forward, you will discover the power and flexibility of pandas for financial data analysis.

Having effectively harnessed the power of Python and the pandas library for data manipulation and analysis, the next crucial step in any financial workflow often involves sharing the processed data with others. This might entail collaborating with colleagues, presenting findings to stakeholders, or integrating data into other systems that may not have direct access to the Python environment. This is where data exchange formats become invaluable.

The Importance of Data Exchange Formats

In the fast-paced world of finance, where data is the lifeblood of decision-making, seamless data exchange is paramount. Data exchange formats are standardized ways of structuring data so that it can be easily transferred and interpreted by different software applications, systems, and individuals. This is particularly crucial in the financial industry, where collaboration and data sharing are commonplace across various platforms and geographical locations.

Consider a scenario where you’ve developed a sophisticated trading strategy analysis using Python. You’ve cleaned, transformed, and aggregated historical stock data into a DataFrame. Now, you need to share these results with your team, who might use Excel for their day-to-day analysis, or with a compliance department that needs to review the data in a specific format. Or perhaps, you need to feed the results into a reporting system. Without a reliable means of exporting and importing the data, these tasks become needlessly complex and time-consuming. The ability to export data in a readily accessible format allows for broader accessibility and collaboration, ensuring everyone involved can leverage the insights gained from your analysis.

Two of the most widely adopted data exchange formats in the financial industry are Excel and JSON. Excel is ubiquitous, especially within the business world, offering a familiar interface for viewing, manipulating, and presenting data. JSON (JavaScript Object Notation), on the other hand, is a lightweight, human-readable format often used for data transmission between systems and applications. Both formats provide a bridge between the Python environment and a wider ecosystem of tools and stakeholders.

Leveraging pandas for Data Export

Fortunately, the pandas library simplifies the process of exporting DataFrames to these popular formats. pandas provides straightforward methods that allow you to convert your DataFrame objects into Excel spreadsheets and JSON files with just a few lines of code. This efficiency is a significant advantage, saving you time and effort while ensuring that your data is readily available for use outside of your Python environment.

Let’s delve into how pandas facilitates the export of DataFrames to Excel and JSON formats. The key methods we’ll be using are .to_excel() and .to_json(). These methods are designed to convert your DataFrame data into the respective formats.

Exporting Data to Excel

The .to_excel() method allows you to export your DataFrame to an Excel file. It’s a versatile tool that provides a number of parameters to customize the output. Let’s look at a basic example:

import pandas as pd

# Sample data (replace with your actual DataFrame)

data = pd.DataFrame({

'Date': pd.to_datetime(['2023-01-01', '2023-01-02', '2023-01-03', '2023-01-04', '2023-01-05']),

'AAPL': [170.00, 172.50, 175.00, 176.00, 177.00],

'MSFT': [250.00, 252.00, 253.50, 254.00, 255.00]

})

# Export the DataFrame to an Excel file

data.to_excel('data/stock_prices.xlsx', sheet_name='Stock Data', index=False)

In this code snippet, we first import the pandas library. Then, we create a sample DataFrame containing stock prices for Apple (AAPL) and Microsoft (MSFT) over a series of dates. The core of the example lies in the .to_excel() method. The first argument, 'data/stock_prices.xlsx', specifies the file path and name for the Excel file. The sheet_name='Stock Data' parameter sets the name of the sheet within the Excel file. The index=False argument prevents the DataFrame index from being written to the Excel file. Without this, the DataFrame’s index would be included as a column in the Excel file.

Let’s dissect this further and explore some of the customizable parameters available to enhance the export process.

import pandas as pd

# Sample DataFrame (simulating financial data)

data = pd.DataFrame({

'Date': pd.to_datetime(['2023-01-01', '2023-01-02', '2023-01-03', '2023-01-04', '2023-01-05']),

'AAPL_Open': [170.00, 172.50, 175.00, 176.00, 177.00],

'AAPL_High': [171.00, 173.00, 176.50, 177.00, 178.00],

'AAPL_Low': [169.00, 172.00, 174.50, 175.50, 176.00],

'AAPL_Close': [170.50, 172.75, 175.50, 176.50, 177.50],

'MSFT_Open': [250.00, 252.00, 253.50, 254.00, 255.00],

'MSFT_High': [251.00, 253.00, 254.50, 255.00, 256.00],

'MSFT_Low': [249.00, 251.00, 253.00, 253.50, 254.00],

'MSFT_Close': [250.50, 252.50, 254.00, 254.50, 255.50]

})

# Export to Excel with multiple parameters

try:

data.to_excel(

'data/stock_prices_detailed.xlsx', # File path

sheet_name='Detailed Data', # Sheet name

index=False, # Don't write the index

startrow=2, # Start writing data from row 2

startcol=1, # Start writing data from column 1

header=True, # Write column headers

freeze_panes=(1, 1) # Freeze the first row and first column

)

print("Data successfully exported to 'data/stock_prices_detailed.xlsx'")

except Exception as e:

print(f"An error occurred: {e}")

In this more advanced example, we’ve expanded the sample DataFrame to include more data points (Open, High, Low, Close) for both AAPL and MSFT. The .to_excel() method now utilizes several more parameters:

'data/stock_prices_detailed.xlsx': Specifies the file path for the Excel file, saving it in a directory named “data”.sheet_name='Detailed Data': Sets the sheet name to “Detailed Data.”index=False: Prevents the DataFrame index from being included in the output.startrow=2: Starts writing the data from the third row (index 2) of the Excel sheet.startcol=1: Starts writing the data from the second column (index 1) of the Excel sheet. This can be useful for creating space for titles or other information above or to the left of your data.header=True: Writes the column headers to the Excel file. This is the default behavior, but explicitly setting it improves code clarity.freeze_panes=(1, 1): Freezes the first row and the first column in the Excel sheet. This is helpful for keeping headers and the index visible while scrolling through large datasets.

To ensure that the code executes without issue, we wrap the export operation within a try...except block. This will gracefully handle any potential exceptions during the file writing process, providing informative error messages if something goes wrong. This is good practice when dealing with file I/O operations.

Exporting Data to JSON

JSON (JavaScript Object Notation) has become a standard format for data exchange due to its lightweight nature and readability. The .to_json() method in pandas is designed to export DataFrames to JSON format. The primary advantage of JSON is its structure, which allows for hierarchical data representation. This makes it ideal for representing complex datasets and exchanging data between different systems.

Here’s how you can export the same data to JSON:

import pandas as pd

import os # Import the os module

# Sample data (replace with your actual DataFrame)

data = pd.DataFrame({

'Date': pd.to_datetime(['2023-01-01', '2023-01-02', '2023-01-03', '2023-01-04', '2023-01-05']),

'AAPL': [170.00, 172.50, 175.00, 176.00, 177.00],

'MSFT': [250.00, 252.00, 253.50, 254.00, 255.00]

})

# Create the 'data' directory if it doesn't exist

if not os.path.exists('data'):

os.makedirs('data')

# Export the DataFrame to a JSON file

try:

data.to_json('data/stock_prices.json', orient='records', indent=4)

print("Data successfully exported to 'data/stock_prices.json'")

except Exception as e:

print(f"An error occurred: {e}")

In this example, the .to_json() method is used. The first argument, 'data/stock_prices.json', specifies the file path and name for the JSON file. The orient='records' parameter is crucial, as it defines the JSON structure. Setting orient='records' represents each row of the DataFrame as a separate JSON object, which is often a desirable format for data exchange. The indent=4 parameter adds indentation to the JSON output, making it more readable.

Before attempting to save the JSON file, the code checks if the ‘data’ directory exists using os.path.exists('data'). If the directory doesn’t exist, it creates it using os.makedirs('data'). This is important as it ensures that your code doesn’t produce an error due to the target directory not being present.

Let’s expand on the JSON export capabilities:

import pandas as pd

import os

# Sample financial data

data = pd.DataFrame({

'Date': pd.to_datetime(['2023-01-01', '2023-01-02', '2023-01-03', '2023-01-04', '2023-01-05']),

'AAPL_Open': [170.00, 172.50, 175.00, 176.00, 177.00],

'AAPL_High': [171.00, 173.00, 176.50, 177.00, 178.00],

'AAPL_Low': [169.00, 172.00, 174.50, 175.50, 176.00],

'AAPL_Close': [170.50, 172.75, 175.50, 176.50, 177.50],

'MSFT_Open': [250.00, 252.00, 253.50, 254.00, 255.00],

'MSFT_High': [251.00, 253.00, 254.50, 255.00, 256.00],

'MSFT_Low': [249.00, 251.00, 253.00, 253.50, 254.00],

'MSFT_Close': [250.50, 252.50, 254.00, 254.50, 255.50]

})

# Create the 'data' directory if it doesn't exist

if not os.path.exists('data'):

os.makedirs('data')

# Export to JSON with different orientations

try:

# Export with 'records' orientation

data.to_json('data/stock_prices_records.json', orient='records', indent=4)

print("Data successfully exported to 'data/stock_prices_records.json' with 'records' orientation")

# Export with 'index' orientation

data.to_json('data/stock_prices_index.json', orient='index', indent=4)

print("Data successfully exported to 'data/stock_prices_index.json' with 'index' orientation")

# Export with 'columns' orientation

data.to_json('data/stock_prices_columns.json', orient='columns', indent=4)