Chapter 3: Going on a random walk

A fundamental concept in time series analysis, particularly relevant in financial markets, is the random walk process.

Understanding random walks is crucial because many real-world series, including stock prices, often exhibit this behavior, making them challenging to forecast beyond very short horizons.

Link To Download Source Code at end!

Defining a Random Walk

Conceptually, a random walk is a time series where the current value is equal to the previous value plus a random shock. Imagine a drunkard stumbling through a field: their next position is their current position plus a random step in some direction. This “random step” is the key.

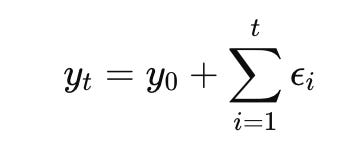

Formally, a random walk process, denoted as Yt, can be defined by the equation:

Yt = Yt − 1 + ϵt

Here: * Yt represents the value of the series at time t. * Yt − 1 represents the value of the series at the previous time step, t − 1. * ϵt (epsilon) represents a white noise error term at time t.

The ϵt term is what makes the walk “random.” It signifies an unpredictable, random fluctuation. For a series to be considered a true random walk, the ϵt term must adhere to the properties of white noise.

Understanding White Noise

White noise is a critical concept in time series. It’s a sequence of random variables that are independent and identically distributed (i.i.d.) with a mean of zero and a constant variance. In simpler terms, for a white noise series: * Mean is zero: On average, the random shocks don’t push the series consistently up or down. * Constant variance: The magnitude of the random shocks doesn’t systematically increase or decrease over time. * No autocorrelation: The current shock ϵt is not correlated with any past shock ϵt − k (where k ≠ 0). This is the most important property for time series analysis: past errors provide no information about future errors.

For a random walk, each new step is entirely independent of the previous steps, influenced only by the current random shock. This means that the best prediction for the next value is simply the current value, as past movements offer no predictive power for the direction or magnitude of the next random step.

Simulating a Simple Random Walk

To solidify our understanding, let’s simulate a basic random walk using Python. We’ll start with a given initial value and then add random steps (our white noise) iteratively.

First, we need to import the necessary libraries: numpy for numerical operations (especially generating random numbers) and matplotlib.pyplot for plotting.

import numpy as np

import matplotlib.pyplot as plt

# Set a random seed for reproducibility

np.random.seed(42)Here, np.random.seed(42) ensures that if you run this code multiple times, you’ll get the exact same “random” sequence, which is very helpful for debugging and ensuring consistent examples.

Next, we define the starting point for our random walk and how many steps it will take.

# Define the starting point and number of steps

start_value = 100

num_steps = 250We’ve set our initial value to 100 and decided to simulate 250 time steps.

Now, we generate the random shocks (our white noise) and then compute the random walk by cumulatively summing these shocks from the start_value.

# Generate random steps (white noise)

# These steps are drawn from a normal distribution with mean 0 and standard deviation 1

random_steps = np.random.normal(loc=0, scale=1, size=num_steps)

# Initialize the random walk array with the start value

random_walk = np.zeros(num_steps)

random_walk[0] = start_value

# Compute the random walk by cumulatively adding the steps

for i in range(1, num_steps):

random_walk[i] = random_walk[i-1] + random_steps[i]In this block: * np.random.normal(loc=0, scale=1, size=num_steps) creates an array of num_steps random numbers. loc=0 means the average step size is zero, and scale=1 means the typical deviation of a step is 1 unit. This effectively simulates our ϵt white noise. * We initialize random_walk with thestart_value. * The for loop then implements the core random walk equation: Y_t = Y_{t-1} + epsilon_t. Each new value is the previous value plus a new random step.

Finally, let’s visualize our simulated random walk.

# Plot the simulated random walk

plt.figure(figsize=(10, 6))

plt.plot(random_walk, label='Simulated Random Walk')

plt.title('Simulated Random Walk Process')

plt.xlabel('Time Step')

plt.ylabel('Value')

plt.grid(True)

plt.legend()

plt.show()This code plots the random_walk array over time. When you observe this plot, you’ll notice key visual characteristics: * No clear mean reversion: The series doesn’t tend to return to a particular average value. It wanders without a central tendency. * Persistent trends: While random, the series might appear to trend up or down for periods, but these trends are not deterministic; they are just accumulated random steps. * Unpredictable fluctuations: It’s hard to predict the next value based on previous patterns. Each step seems to be a new, independent shock.

The Importance of Stationarity

One of the most critical concepts in time series analysis is stationarity. Many powerful time series models, such as ARIMA models, assume that the underlying data generating process is stationary.

A time series is considered stationary if its statistical properties — like its mean, variance, and autocorrelation structure — do not change over time. In essence, a stationary series looks roughly the same at any point in time, regardless of when you observe it.

Why is stationarity desirable? 1. Predictability: If the statistical properties of a series are constant over time, we can use past data to make reliable forecasts about future values. If the mean or variance is constantly changing, any model trained on past data might not be relevant for future predictions. 2. Model Simplicity: Many econometric and statistical models are built upon the assumption of stationarity. Non-stationary series often require complex modeling or transformations before standard techniques can be applied. 3. Inference: Statistical inference (e.g., hypothesis testing, confidence intervals) is more straightforward and reliable with stationary data.

A random walk, by its very definition, is a non-stationary process. Its mean is not constant (it wanders), and its variance increases over time (the longer the walk, the further it can deviate from its starting point). This non-stationarity is a significant challenge for forecasting.

Differencing: Achieving Stationarity

Since many models require stationarity, how do we handle non-stationary series like random walks? One common and effective technique is differencing.

Differencing involves computing the difference between consecutive observations in a time series. The goal is to remove trends, seasonality, or other non-stationary components, thereby making the series stationary.

For a simple first-order differencing, we calculate: ΔYt = Yt − Yt − 1

Let’s consider our random walk equation again: Yt = Yt − 1 + ϵt

If we apply first-order differencing to this random walk, we get: Yt − Yt − 1 = (Yt − 1+ϵt) − Yt − 1 Yt − Yt − 1 = ϵt

This result is profound: differencing a random walk yields a white noise series! Since white noise is by definition stationary (constant mean of zero, constant variance, no autocorrelation), differencing successfully transforms a non-stationary random walk into a stationary series.

Let’s apply differencing to our simulated random walk and observe the result.

# Calculate the first-order difference of the simulated random walk

differenced_random_walk = np.diff(random_walk)

# Plot the differenced random walk

plt.figure(figsize=(10, 6))

plt.plot(differenced_random_walk, label='Differenced Random Walk (White Noise)')

plt.title('Differenced Simulated Random Walk')

plt.xlabel('Time Step')

plt.ylabel('Change in Value')

plt.grid(True)

plt.axhline(0, color='red', linestyle='--', linewidth=0.8, label='Mean = 0') # Add a line at y=0

plt.legend()

plt.show()The np.diff() function calculates the difference between consecutive elements in an array. When you plot differenced_random_walk, you’ll see a series that fluctuates randomly around zero, with no apparent trend or changing variance. This visually confirms that we’ve transformed the non-stationary random walk into a stationary white noise series.

The Autocorrelation Function (ACF)

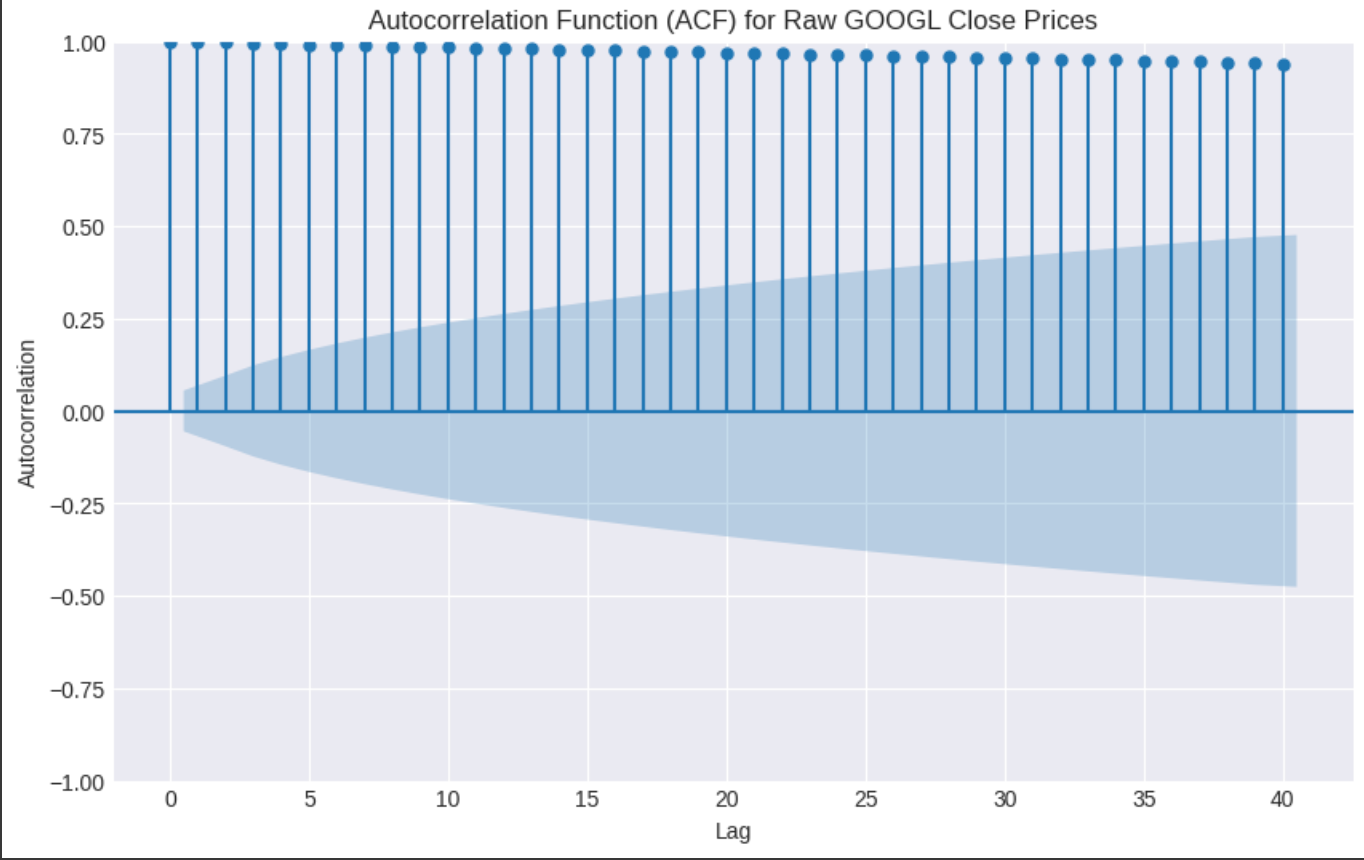

Beyond visual inspection, a powerful statistical tool for identifying the properties of a time series, including its stationarity and the presence of random walk behavior, is the Autocorrelation Function (ACF).

The ACF measures the correlation between a time series and its lagged values. For example: * ACF at lag 1: Correlation between Yt and Yt − 1. * ACF at lag 2: Correlation between Yt and Yt − 2. * And so on.

By plotting the ACF values for various lags, we get an autocorrelation plot (often called a correlogram). * For a stationary series, the ACF typically drops off quickly to zero. This indicates that past values have little to no linear relationship with current values beyond a few lags. * For a random walk (non-stationary series), the ACF plot shows a very distinct pattern: it decays very slowly. This slow decay indicates strong, persistent correlation between current values and past values, which is characteristic of a non-stationary series where past values heavily influence current values without mean reversion. * For white noise, the ACF values are close to zero for all lags (except lag 0, which is always 1). This confirms that there is no linear relationship between a value and its past values.

Understanding the ACF is crucial for identifying the underlying process of a time series and for selecting appropriate forecasting models.

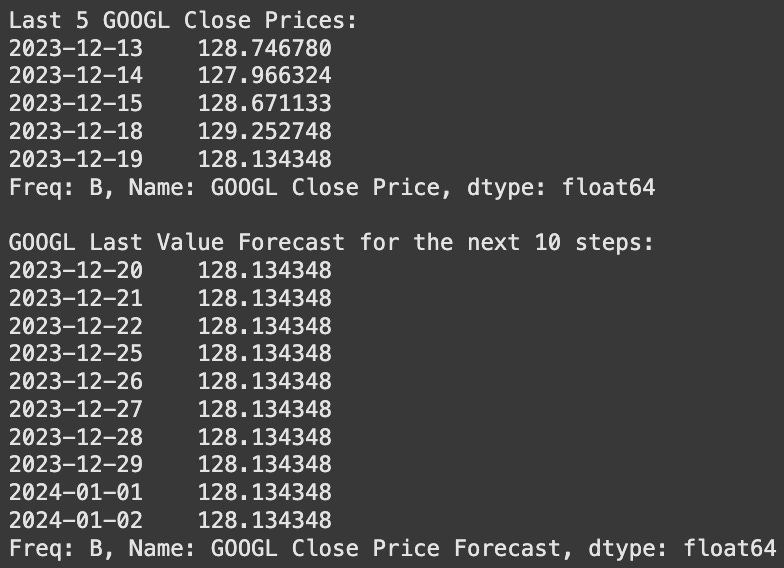

Random Walks in the Real World: GOOGL Stock Prices

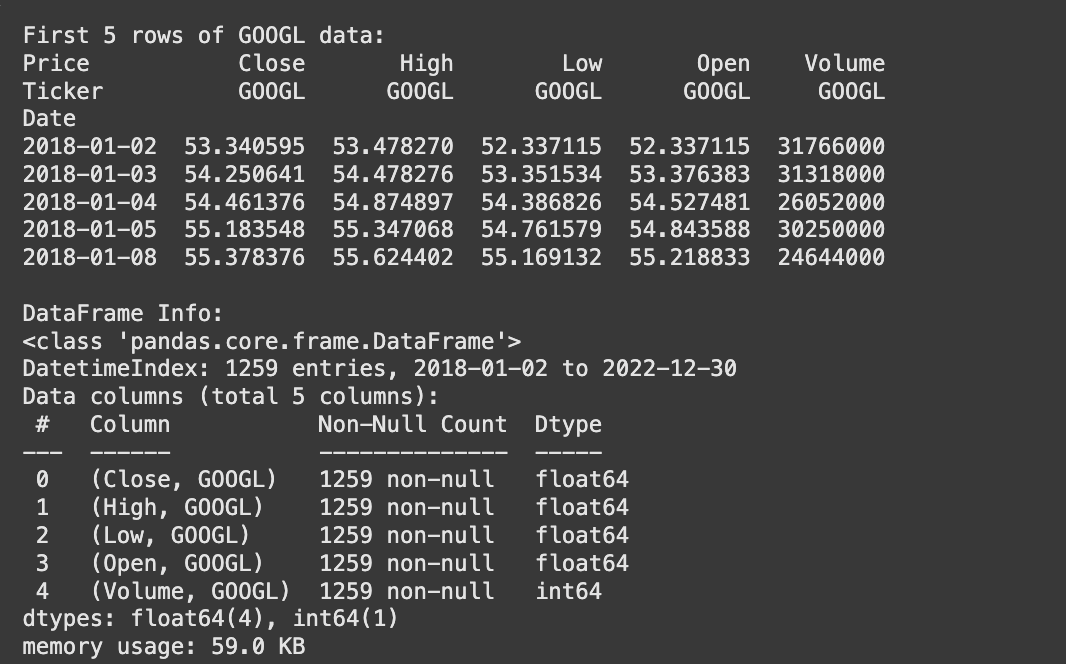

One of the most prominent real-world examples of time series that often behave like random walks are financial asset prices, particularly stock prices. Let’s consider the closing price of Google stock (GOOGL).

The reason stock prices often resemble random walks is tied to the Efficient Market Hypothesis (EMH). In its strong form, EMH suggests that all available information is immediately and fully reflected in asset prices. This implies that future price movements are unpredictable because any predictable patterns would have already been exploited by traders, driving the price to reflect that information instantly. Therefore, the only thing that can move the price is new, unpredictable information, which effectively acts like the “random shock” (ϵt) in our random walk equation.

Let’s load and plot some historical GOOGL stock data to see this in action. We’ll use pandas for data handling and matplotlib for plotting.

import pandas as pd

import matplotlib.pyplot as plt

# Load the GOOGL dataset (assuming it's in a CSV file named 'GOOGL.csv'

# in the same directory, with a 'Date' column and a 'Close' column)

# For demonstration, we'll create a dummy DataFrame if the file isn't present.

try:

googl_df = pd.read_csv('GOOGL.csv', parse_dates=['Date'], index_col='Date')

except FileNotFoundError:

print("GOOGL.csv not found. Creating a dummy dataset for demonstration.")

# Create a dummy random walk-like dataset for demonstration purposes

np.random.seed(43) # Different seed for dummy data

dummy_start_value = 1500

dummy_num_steps = 500

dummy_random_steps = np.random.normal(loc=0.5, scale=5, size=dummy_num_steps) # A slight positive drift

dummy_random_walk_data = np.cumsum(np.insert(dummy_random_steps, 0, dummy_start_value))[:-1] # Correct length

dummy_dates = pd.date_range(start='2020-01-01', periods=dummy_num_steps, freq='B') # Business days

googl_df = pd.DataFrame({'Close': dummy_random_walk_data}, index=dummy_dates)

# Display the first few rows of the DataFrame

print("GOOGL Data Head:")

print(googl_df.head())

# Display basic information about the DataFrame

print("\nGOOGL Data Info:")

googl_df.info()This code snippet attempts to load a GOOGL.csv file. If the file is not found (which is likely for a generic example), it creates a dummy DataFrame that simulates random walk behavior, ensuring the code runs and demonstrates the plotting. The parse_dates=['Date'] and index_col='Date' arguments are crucial for treating the ‘Date’ column as a proper datetime index, which is standard practice for time series data.

Now, let’s plot the closing prices of GOOGL.

# Plot the GOOGL closing prices

plt.figure(figsize=(12, 7))

plt.plot(googl_df['Close'], label='GOOGL Closing Price')

plt.title('GOOGL Stock Closing Prices (Example of a Random Walk)')

plt.xlabel('Date')

plt.ylabel('Price (USD)')

plt.grid(True)

plt.legend()

plt.tight_layout()

plt.show()When you examine this plot, you’ll see characteristics very similar to our simulated random walk: * No clear mean reversion: The price doesn’t consistently return to a fixed average. * Apparent trends: There might be periods of sustained upward or downward movement, but these are generally not deterministic and can reverse unpredictably. * Unpredictability: It’s very difficult to forecast the exact next day’s price movement from the chart alone.

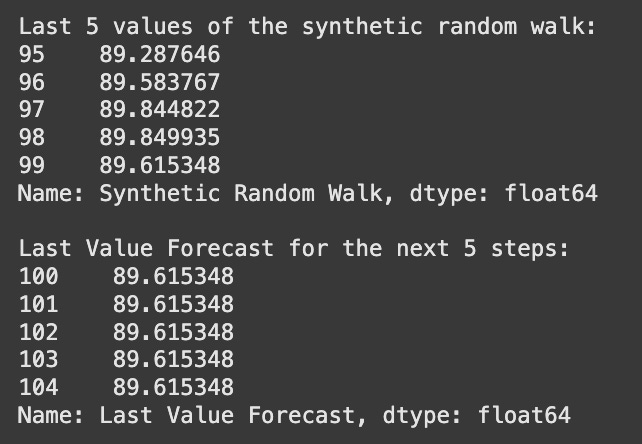

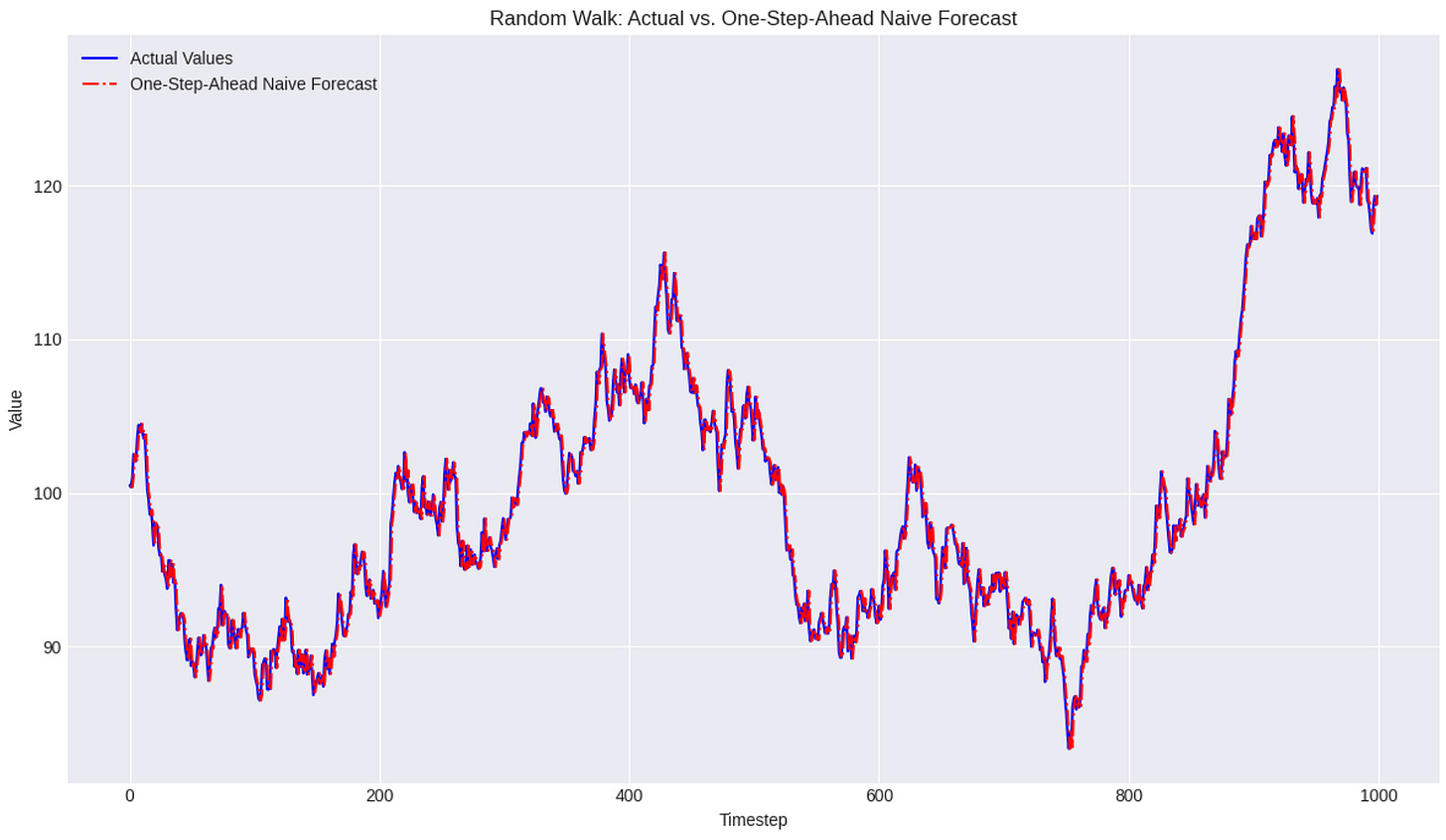

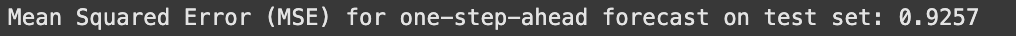

For a series that behaves like a random walk, the most effective “forecast” is often simply the last observed value. This is precisely the naive forecast we discussed in the previous chapter. If today’s GOOGL price is $X, the best forecast for tomorrow’s price, given a random walk assumption, is also $X. This highlights why understanding random walks is so important: it tells us when simple baseline models might be the most appropriate forecasting approach.

The Random Walk Process

Understanding the behavior of time series data is fundamental to effective forecasting. While some series exhibit clear patterns or stationarity, others appear to move unpredictably. Among these, the random walk process stands out as a critical concept, particularly prevalent in financial markets and economic data. A random walk describes a process where the current value is derived from the previous value plus a random shock. This seemingly simple definition has profound implications for how we model and forecast such series.

Defining the Random Walk

At its core, a random walk is a mathematical model for a sequence of random steps. Imagine a person taking steps, where each step’s direction and size are random. The person’s position at any given time is the sum of all previous steps. In time series, this translates to the current observation being the sum of the previous observation and a random, unpredictable change.

The mathematical expression of a random walk process is given by:

y_t = C + y_{t-1} + ε_tLet’s break down each component of this equation:

y_t: This represents the value of the time series at the current time pointt. It’s what we are trying to model or predict.y_{t-1}: This is the value of the time series at the previous time point,t-1. The equation clearly shows a strong dependence on the immediate past value, which is a defining characteristic of a random walk.C: This is a constant term, often referred to as the drift. It represents the average step size or the systematic tendency of the series to increase or decrease over time.ε_t(epsilon_t): This is the white noise error term or “random shock” at timet. This term is the unpredictable part of the random walk, representing the random fluctuations that occur from one period to the next.

Deconstructing White Noise (ε_t)

The ε_t term is the engine of randomness in a random walk. It’s what makes the series unpredictable in the short term and drives its erratic long-term behavior. To truly understand a random walk, we must understand the properties of white noise.

White noise is a sequence of random variables with the following key properties:

Zero Mean: The expected value (average) of

ε_tis zero, i.e.,E[ε_t] = 0. This means that, on average, the random shocks do not systematically push the series up or down. They are equally likely to be positive or negative.Constant Variance: The variance of

ε_tis constant over time, i.e.,Var[ε_t] = σ²(sigma squared). This implies that the magnitude of the random shock is consistent and does not grow or shrink over time.No Autocorrelation: The white noise terms are independent of each other. The covariance between

ε_tandε_sis zero for anyt ≠ s. This means that a shock at one point in time provides no information about a shock at any other point in time. There are no patterns or memory in the error terms themselves.

Often, for simplicity and analytical convenience, ε_t is assumed to be a realization of a standard normal distribution. This means that ε_t values are drawn from a normal distribution with a mean of 0 and a variance (and thus standard deviation) of 1, denoted as N(0, 1).

Why N(0, 1)? * Symmetry: The normal distribution is symmetric around its mean (0), reinforcing the idea that positive and negative shocks are equally likely. * Mathematical Tractability: It simplifies many statistical analyses and theoretical derivations. * Central Limit Theorem: In many real-world scenarios, random errors or sums of many small, independent random effects tend to converge to a normal distribution.

The choice of N(0, 1) specifically for white noise implies that the random number has an “equal chance of going up or down by a random number” because the distribution is centered at zero. While the standard normal distribution is common, white noise can technically come from any distribution that satisfies the three properties above (zero mean, constant variance, no autocorrelation). However, the implications of using other distributions (e.g., a uniform distribution or a t-distribution) would primarily affect the shape of the distribution of the shocks, potentially leading to more extreme values (fat tails for t-distribution) or bounded values (uniform distribution). For most introductory purposes, the standard normal assumption is sufficient and widely used.

The Role of Drift (C)

The constant C plays a crucial role in determining the long-term behavior of a random walk.

Pure Random Walk (when

C = 0): IfC = 0, the equation simplifies toy_t = y_{t-1} + ε_t. In this case, the series has no inherent tendency to increase or decrease. It simply wanders randomly around its starting point. While it can deviate significantly from its initial value, it does so without a systematic direction. Think of a drunkard stumbling around a lamp post – they might wander far, but there’s no overall direction to their movement.Random Walk with Drift (when

C ≠ 0): IfCis a non-zero value, it introduces a systematic trend into the series.If

C > 0, the series will tend to increase over time, on average, byCunits per period, in addition to the random shock.If

C < 0, the series will tend to decrease over time. The drift term ensures that, in the long run, the series will move predictably in the direction ofC, even with the superimposed random fluctuations. Consider the drunkard now walking on a slight incline – they still stumble randomly, but there’s an underlying tendency to move uphill or downhill.

Observed Characteristics and the Equation

The mathematical formulation of a random walk directly explains its observed characteristics:

Long periods of apparent trend: Even in a pure random walk (

C=0), the cumulative effect of theε_tterms can lead to extended periods where the series appears to trend upwards or downwards. This is purely coincidental, as there’s no underlying systematic trend. With drift (C ≠ 0), these trends become even more pronounced and systematic.Sudden changes in direction: Large positive or negative

ε_tvalues can cause the series to abruptly change its direction or significantly accelerate its movement in a particular direction.Non-stationarity: A key implication of the random walk equation is that the series is non-stationary. This means its statistical properties (like mean and variance) change over time.

The mean of a random walk with drift will change over time (it will tend towards

C*t).The variance of a random walk grows with time (

Var[y_t] = t * σ²). This property means that the further out you go in time, the wider the possible range of values fory_tbecomes, making long-term forecasting very uncertain.

Numerical Step-by-Step Example

Let’s illustrate how a random walk sequence is generated with a simple numerical example.

Assume: * Initial value y_0 = 100 * Drift C = 1 * A sequence of white noise values ε_t: [0.5, -1.2, 0.8, -0.3, 1.5]

Let’s calculate the first few steps:

Time t=1:

y_1 = C + y_0 + ε_1y_1 = 1 + 100 + 0.5 = 101.5Time t=2:

y_2 = C + y_1 + ε_2y_2 = 1 + 101.5 + (-1.2) = 101.3Time t=3:

y_3 = C + y_2 + ε_3y_3 = 1 + 101.3 + 0.8 = 103.1Time t=4:

y_4 = C + y_3 + ε_4y_4 = 1 + 103.1 + (-0.3) = 103.8Time t=5:

y_5 = C + y_4 + ε_5y_5 = 1 + 103.8 + 1.5 = 106.3

This step-by-step process shows how the series evolves, with each new value building on the previous one, influenced by the constant drift and the random shock.

Simulating Random Walks in Python

To truly grasp the dynamics of a random walk, simulating one is invaluable. We’ll use Python’s numpy library for numerical operations and matplotlib for plotting.

First, let’s import the necessary libraries.

import numpy as np

import matplotlib.pyplot as plt

# Set a random seed for reproducibility

np.random.seed(42)We import numpy for numerical operations, especially for generating random numbers, and matplotlib.pyplot for plotting. Setting a random seed ensures that our simulations are reproducible; running the code multiple times will yield the same random walk path.

Simulating a Pure Random Walk (C = 0)

A pure random walk has no drift, meaning C = 0. The series simply accumulates random shocks.

# Define parameters for the pure random walk

n_steps = 200 # Number of time steps to simulate

initial_value = 0 # y_0, starting point of the walk

mean_epsilon = 0 # Mean of the white noise

std_epsilon = 1 # Standard deviation of the white noise (for N(0,1))Here, we define the simulation parameters. n_steps determines the length of our time series. We start initial_value at 0 for simplicity, and define the properties of our white noise (epsilon_t) as a standard normal distribution (mean_epsilon=0, std_epsilon=1).

# Generate white noise (epsilon_t)

# These are the random shocks at each step

epsilon_values = np.random.normal(loc=mean_epsilon, scale=std_epsilon, size=n_steps)We use np.random.normal to generate n_steps random numbers that follow a normal distribution with the specified mean and standard deviation. These are our epsilon_t values for each time step.

# Initialize the random walk series

pure_rw_series = np.zeros(n_steps + 1) # +1 for y_0

pure_rw_series[0] = initial_value

# Simulate the random walk

for t in range(n_steps):

# y_t = y_{t-1} + epsilon_t (since C=0)

pure_rw_series[t+1] = pure_rw_series[t] + epsilon_values[t]We create an array pure_rw_series to store the values of our random walk, initializing the first element with initial_value. Then, we loop through each time step, applying the random walk formula: the current value is the previous value plus the corresponding random shock.

# Plot the pure random walk

plt.figure(figsize=(12, 6))

plt.plot(pure_rw_series, label='Pure Random Walk (C=0)', color='blue')

plt.title('Simulation of a Pure Random Walk')

plt.xlabel('Time Step')

plt.ylabel('Value')

plt.grid(True)

plt.legend()

plt.show()Finally, we plot the generated series. You will observe that the path appears to wander without a clear direction, often exhibiting what looks like trends for periods, only to reverse. This visual behavior is a direct consequence of the cumulative random shocks.

Simulating a Random Walk with Drift (C ≠ 0)

Now, let’s introduce a non-zero drift term C to see its effect.

# Define parameters for the random walk with drift

drift_constant = 0.5 # Our constant CWe define drift_constant as our C value. A positive value means the series will tend to increase over time.

# Initialize the random walk with drift series

rw_drift_series = np.zeros(n_steps + 1)

rw_drift_series[0] = initial_value # Start from the same initial value

# Simulate the random walk with drift

for t in range(n_steps):

# y_t = C + y_{t-1} + epsilon_t

rw_drift_series[t+1] = drift_constant + rw_drift_series[t] + epsilon_values[t]Similar to the pure random walk, we initialize the series and loop through the steps. The key difference is the addition of drift_constant in each iteration, systematically pushing the series in one direction. We reuse the same epsilon_values for direct comparison with the pure random walk.

# Plot both random walks for comparison

plt.figure(figsize=(12, 6))

plt.plot(pure_rw_series, label='Pure Random Walk (C=0)', color='blue', alpha=0.7)

plt.plot(rw_drift_series, label=f'Random Walk with Drift (C={drift_constant})', color='red', alpha=0.7)

plt.title('Comparison of Pure Random Walk vs. Random Walk with Drift')

plt.xlabel('Time Step')

plt.ylabel('Value')

plt.grid(True)

plt.legend()

plt.show()By plotting both series together, the impact of the drift term becomes immediately apparent. The random walk with drift will show a clear upward (or downward, if C were negative) trajectory, even with the random fluctuations superimposed. This illustrates how a constant drift can fundamentally change the long-term behavior of a series.

Practical Applications

The random walk model, especially the concept of a random walk with drift, is widely used in finance to model asset prices, such as stock prices or exchange rates. The Efficient Market Hypothesis in its weak form suggests that stock prices follow a random walk, meaning past price movements cannot be used to predict future movements, as all available information is already reflected in the current price. While real-world financial series are more complex, the random walk serves as a powerful baseline and a crucial concept for understanding non-stationary data. Beyond finance, random walks are used in physics (Brownian motion), biology (population dynamics), and other fields where cumulative random changes occur.

Simulating a Random Walk Process

Building upon the theoretical understanding of random walk processes, this section transitions into their practical simulation and visualization using Python. Simulating these processes is crucial for developing intuition about their behavior, understanding their properties, and preparing for more complex time series modeling tasks.

The Building Block: White Noise

At the heart of a simple random walk is a concept known as white noise. Conceptually, white noise represents a series of purely random, unpredictable shocks or innovations that drive the changes in the random walk process.

Formally, a series of random variables ϵt is considered white noise if it satisfies the following conditions: * Zero Mean: The expected value of each ϵt is zero, i.e., E[ϵt] = 0. This means, on average, the shocks do not systematically push the series up or down. * Constant Variance: The variance of each ϵt is constant and finite, i.e., Var(ϵt) = σ2 < ∞. This implies that the magnitude of the shocks does not change over time. * No Autocorrelation: The covariance between any two different shock terms is zero, i.e., Cov(ϵt,ϵs) = 0 for t ≠ s. This is a critical property, meaning that past shocks provide no information about future shocks; they are independent.

In many practical simulations, especially for pedagogical purposes, white noise is often generated from a standard normal distribution, where ϵt ∼ N(0,1). This means each shock has a mean of 0 and a variance of 1.

The Random Walk as a Cumulative Sum

Recall the mathematical formulation of a simple random walk:

yt = yt − 1 + ϵt

where yt is the value of the random walk at time t, yt − 1 is the value at the previous time step, and ϵt is a white noise term.

Let’s expand this equation step-by-step to see why a random walk is essentially a cumulative sum of white noise. Assume an initial value y0 = 0 for simplicity:

y1 = y0 + ϵ1 = 0 + ϵ1 = ϵ1 y2 = y1 + ϵ2 = (ϵ1) + ϵ2 y3 = y2 + ϵ3 = (ϵ1+ϵ2) + ϵ3

Following this pattern, for any time t, the value of the random walk yt is the sum of all white noise terms up to time t:

$y_t = \sum_{i=1}^{t} \epsilon_i$

This direct relationship to a cumulative sum is fundamental for understanding and simulating random walks.

To illustrate this with a small, concrete example, consider a random walk over 5 steps starting at y0 = 0, with the following generated white noise values: ϵ1 = 0.5 ϵ2 = − 0.2 ϵ3 = 0.8 ϵ4 = 0.1 ϵ5 = − 0.4

The random walk values would be: y0 = 0 (Initial value) y1 = y0 + ϵ1 = 0 + 0.5 = 0.5 y2 = y1 + ϵ2 = 0.5 + (−0.2) = 0.3 y3 = y2 + ϵ3 = 0.3 + 0.8 = 1.1 y4 = y3 + ϵ4 = 1.1 + 0.1 = 1.2 y5 = y4 + ϵ5 = 1.2 + (−0.4) = 0.8

As you can see, each step is simply the previous value plus a new random shock.

Simulating a Basic Random Walk in Python

We will now simulate a basic random walk using Python. Our goal is to create a random walk series that starts at zero and is driven by standard normal white noise.

First, we need to import the necessary libraries: numpy for numerical operations (especially random number generation and cumulative sums) and matplotlib.pyplot for plotting.

import numpy as np

import matplotlib.pyplot as plt

# Set a random seed for reproducibility

# This ensures that every time you run the code, you get the same random numbers,

# making your simulations consistent and verifiable.

np.random.seed(42)Setting a random seed is a crucial best practice in any simulation. It allows you to reproduce the exact sequence of “random” numbers generated by the computer. Without it, each run of the code would produce a different random walk, making it challenging to debug or compare results. The number 42 is arbitrary; any integer can be used.

Next, we generate the white noise terms, which will serve as the “steps” or “innovations” for our random walk. We’ll simulate 1000 time steps.

# Define the number of steps for our random walk

num_steps = 1000

# Generate 'num_steps' random numbers from a standard normal distribution (mean=0, std=1)

# These represent the 'epsilon_t' (white noise) terms for each time step.

epsilon_steps = np.random.standard_normal(num_steps)

# To ensure our random walk starts at 0 (y_0 = 0), we explicitly set the first 'step' to 0.

# This makes the first value of the cumulative sum also 0, aligning with y_0=0.

epsilon_steps[0] = 0.0

print(f"First 5 epsilon_steps (innovations): {epsilon_steps[:5]}")The num_steps variable determines the length of our simulated time series. A value of 1000 steps is typically sufficient to observe the characteristic behaviors of a random walk. np.random.standard_normal(num_steps) generates an array of 1000 random numbers drawn from a standard normal distribution. These are our ϵt values. Setting epsilon_steps[0] = 0.0 ensures that when we compute the cumulative sum, the random walk series effectively starts at a value of zero, as its first “change” from an implicit y0 will be zero.

Now, we compute the random walk by applying the cumulative sum to our epsilon_steps.

# Calculate the cumulative sum of the epsilon_steps to generate the random walk series.

# This directly implements y_t = sum(epsilon_i from i=1 to t).

random_walk = np.cumsum(epsilon_steps)

print(f"First 5 random_walk values: {random_walk[:5]}")The np.cumsum() function is perfectly suited for this task. It takes an array and returns an array where each element is the cumulative sum of the elements up to that position in the input array. For example, [a, b, c] becomes [a, a+b, a+b+c]. Since we set epsilon_steps[0] to 0, random_walk[0] will also be 0, correctly representing our starting point y0.

Finally, we visualize the simulated random walk using matplotlib.

# Create a figure and an axes object for plotting

fig, ax = plt.subplots(figsize=(10, 6))

# Plot the simulated random walk

ax.plot(random_walk, label='Simulated Random Walk')

# Set labels for the x and y axes

ax.set_xlabel('Time Step')

ax.set_ylabel('Value')

# Set the title of the plot

ax.set_title('Simulated Random Walk Process (No Drift, y_0=0)')

# Add a grid for better readability

ax.grid(True)

# Display the legend

ax.legend()

# Adjust plot layout to prevent labels from overlapping

plt.tight_layout()

# Show the plot

plt.show()The plot will reveal the characteristic behaviors of a random walk: * Non-Stationarity: Unlike stationary series that tend to revert to a mean, random walks can wander significantly from their starting point. * No Mean Reversion: There’s no inherent tendency for the series to return to a central value. * Long-Term Trends: Even without an explicit “drift” term, random walks can exhibit apparent trends (upward or downward) over long periods due to the accumulation of random shocks. These trends are not deterministic but are merely the result of the random process. * Sudden Changes: The series can experience abrupt shifts in direction.

This visual representation is crucial for recognizing random walk behavior in real-world data, such as financial asset prices.

Variations of the Random Walk

The basic random walk simulation demonstrates the core concept. However, random walks can have additional components that influence their behavior.

Random Walk with Drift

A random walk with drift includes a constant term C that systematically pushes the series in one direction (upwards if C > 0, downwards if C < 0). This is represented as:

yt = C + yt − 1 + ϵt

Expanding this, we get:

$y_t = y_0 + C \cdot t + \sum_{i=1}^{t} \epsilon_i$

The C * t term clearly shows the linear trend introduced by the drift.

Let’s simulate a random walk with a positive drift and compare it to our original simulation.

# Define a drift constant

drift = 0.1

# Generate new epsilon_steps for clarity, though you could reuse the previous ones

# For a more direct comparison, we'll reuse the original epsilon_steps and add drift.

# (Ensure epsilon_steps[0] is still 0 if you want y_0=0 for the drift walk too)

# Calculate the random walk with drift

# Each step now includes the constant drift term

drift_random_walk = np.cumsum(epsilon_steps + drift)

# Plot both the original and the drift random walk for comparison

fig, ax = plt.subplots(figsize=(10, 6))

ax.plot(random_walk, label='Simulated Random Walk (No Drift)')

ax.plot(drift_random_walk, label=f'Simulated Random Walk (Drift = {drift})', linestyle='--')

ax.set_xlabel('Time Step')

ax.set_ylabel('Value')

ax.set_title('Simulated Random Walks: No Drift vs. With Drift')

ax.grid(True)

ax.legend()

plt.tight_layout()

plt.show()You will observe that the random walk with drift tends to move consistently in the direction of the drift over the long term, creating a more pronounced upward or downward trend compared to the no-drift version, which wanders aimlessly. This drift term is particularly relevant in financial modeling, where assets might have an expected positive return over time.

Random Walk with a Non-Zero Initial Value

Another common variation is a random walk that starts at a value other than zero. This simply shifts the entire series up or down by a constant amount.

Here, y0 is the initial value.

# Define a non-zero initial value

initial_value = 50.0

# Calculate the random walk with a non-zero initial value

# We add the initial value to the entire cumulative sum of errors.

initial_value_random_walk = initial_value + np.cumsum(epsilon_steps)

# Plot this new random walk

fig, ax = plt.subplots(figsize=(10, 6))

ax.plot(initial_value_random_walk, label=f'Simulated Random Walk (y_0 = {initial_value})')

ax.set_xlabel('Time Step')

ax.set_ylabel('Value')

ax.set_title(f'Simulated Random Walk Process (Starting at {initial_value})')

ax.grid(True)

ax.legend()

plt.tight_layout()

plt.show()This simulation will look identical in shape to the original zero-starting random walk, but it will be vertically shifted so that it begins at the specified initial_value. This demonstrates that the starting point primarily affects the level of the series, not its fundamental random walk behavior.

Practical Applications and Implications

Simulating random walks provides critical insights into real-world phenomena:

Financial Markets: Stock prices, exchange rates, and commodity prices are often modeled as random walks (or variations thereof) in the short term. The Efficient Market Hypothesis suggests that asset prices follow a random walk, meaning future price movements are unpredictable based on past movements. Understanding this helps traders and analysts recognize that apparent patterns in price charts might just be random fluctuations.

Brownian Motion: In physics, the random movement of particles suspended in a fluid (Brownian motion) is a classic example of a random walk.

Sensor Readings: Certain types of sensor data, especially in noisy environments, can exhibit random walk characteristics.

Identifying Non-Stationarity: Random walks are prime examples of non-stationary time series. Their mean and variance are not constant over time (e.g., the variance grows with time, and the “mean” can drift). Recognizing this non-stationarity is a crucial first step in time series analysis, as many traditional forecasting models (like ARIMA) assume stationarity. Often, differencing a random walk (i.e., taking yt − yt − 1 = ϵt) transforms it into a stationary white noise process.

Saving Your Plots

For reports, presentations, or future reference, it’s often useful to save your generated plots to a file. matplotlib makes this straightforward.

# Re-create the plot from the basic random walk simulation for saving

fig, ax = plt.subplots(figsize=(10, 6))

ax.plot(random_walk, label='Simulated Random Walk')

ax.set_xlabel('Time Step')

ax.set_ylabel('Value')

ax.set_title('Simulated Random Walk Process (For Saving)')

ax.grid(True)

ax.legend()

plt.tight_layout()

# Save the figure to a file

# You can specify different formats like .png, .jpg, .pdf, .svg

# 'dpi' (dots per inch) controls the resolution of the saved image.

fig.savefig('random_walk_simulation.png', dpi=300)

print("Plot saved as random_walk_simulation.png")

# Close the plot to free up memory (optional, especially if not showing)

plt.close(fig)Saving plots ensures that your results are reproducible and easily shareable without needing to rerun the entire simulation.

Identifying a Random Walk

Understanding whether a time series exhibits a random walk behavior is a critical step in time series analysis and forecasting. A random walk process has unique characteristics that dictate the appropriate modeling approach.

Defining a Random Walk Through Its Properties

At its core, a time series is considered a random walk if its first difference is a stationary and uncorrelated process. Let’s break down these crucial components.

Recall from previous sections that a random walk is defined by the equation: Yt = Yt − 1 + ϵt

Where Yt is the value at time t, Yt − 1 is the value at the previous time step, and ϵt is a white noise error term.

If we rearrange this equation, we get: Yt − Yt − 1 = ϵt

The term Yt − Yt − 1 is what we call the first difference of the series Yt. This simple rearrangement reveals the fundamental characteristic: the first difference of a random walk is simply the white noise error term, ϵt.

Since ϵt is by definition a white noise process, it is both stationary and uncorrelated. Therefore, the defining features of a random walk are:

Its first difference is stationary.

Its first difference is uncorrelated (i.e., it behaves like white noise).

Let’s explore these concepts in more detail.

The First Difference: Capturing Change

The first difference of a time series, denoted as ΔYt or Y′t, is simply the change in the value of the series from one time step to the next. It is calculated as Yt − Yt − 1. This operation is incredibly powerful because it transforms a series of absolute values into a series of changes.

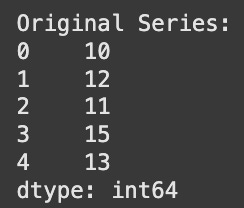

Consider a simple numerical example to illustrate the first difference:

Time (t)Original Series (Yt)First Difference (ΔYt = Yt − Yt − 1)110–21212 − 10 = 231111 − 12 = − 141313 − 11 = 251414 − 13 = 1

Notice that the first difference series starts from the second data point, as the first difference requires a preceding value.

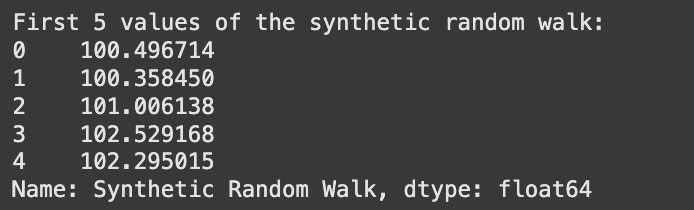

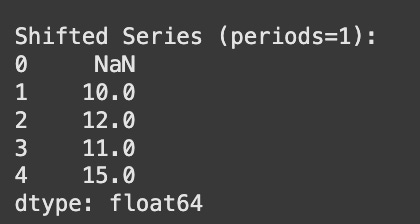

In Python, calculating the first difference is straightforward using the diff() method available for pandas Series or DataFrames. Let’s simulate a simple random walk and observe its first difference.

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

# Set a seed for reproducibility

np.random.seed(42)

# Generate a synthetic random walk

# Start at 100 and add random steps (white noise)

steps = np.random.normal(loc=0, scale=1, size=100) # White noise errors

random_walk = np.cumsum(steps) + 100 # Cumulative sum of steps starting at 100

# Convert to a pandas Series for easy differencing

rw_series = pd.Series(random_walk)

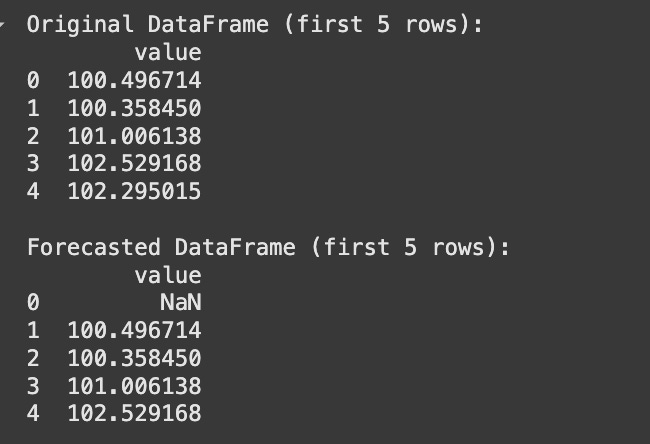

print("Original Random Walk (first 5 values):\n", rw_series.head())This code snippet first imports necessary libraries and sets a random seed for consistent results. It then generates a sequence of random steps, representing the white noise errors (ϵt), and cumulatively sums them to create a random walk series. We convert this numpy array into a pandas Series, which provides convenient time series functionalities.

Now, let’s compute its first difference:

# Calculate the first difference

rw_diff = rw_series.diff().dropna() # .dropna() removes the first NaN value

print("\nFirst Difference of Random Walk (first 5 values):\n", rw_diff.head())

print(f"\nOriginal series length: {len(rw_series)}")

print(f"Differenced series length: {len(rw_diff)}")Here, rw_series.diff() computes the difference between consecutive elements. The first element of the differenced series will be NaN because there is no preceding value to subtract from. We use .dropna() to remove this NaN value, resulting in a differenced series that is one element shorter than the original.

Visualizing both the original random walk and its first difference highlights the transformation:

# Plot the original random walk and its first difference

plt.figure(figsize=(12, 6))

# Plot original random walk

plt.subplot(2, 1, 1) # 2 rows, 1 column, first plot

plt.plot(rw_series)

plt.title('Simulated Random Walk')

plt.xlabel('Time')

plt.ylabel('Value')

plt.grid(True)

# Plot first difference

plt.subplot(2, 1, 2) # 2 rows, 1 column, second plot

plt.plot(rw_diff, color='orange')

plt.title('First Difference of Random Walk')

plt.xlabel('Time')

plt.ylabel('Change in Value')

plt.grid(True)

plt.tight_layout() # Adjust layout to prevent overlapping titles/labels

plt.show()The top plot shows a typical random walk: it drifts without a clear mean and its variance appears to increase over time. The bottom plot, however, shows the first difference, which fluctuates around zero with a relatively constant variance. This visual difference is a strong hint about the underlying properties we’re about to discuss.

Stationarity: A Stable Baseline

A time series is considered stationary if its statistical properties (mean, variance, and autocorrelation structure) remain constant over time. In simpler terms, a stationary series looks roughly the same regardless of when you observe it.

Random walks are inherently non-stationary. They typically exhibit a trend (even if it’s just a random drift) and their variance tends to increase over time, meaning they spread out more as time progresses. This non-stationarity makes them difficult to model with traditional time series techniques that assume stationarity.

Why apply differencing? The primary reason we apply differencing to a non-stationary series is to make it stationary. By looking at the changes rather than the absolute values, we often remove the trend and stabilize the variance, transforming a non-stationary series into one that is more amenable to modeling. For a random walk, differencing perfectly “undoes” the random walk component, leaving only the white noise error, which is by definition stationary.

Autocorrelation: The Memory of a Series

Autocorrelation measures the correlation of a time series with its own lagged values. In essence, it tells us how much the current value of a series is related to its past values. For example, a high positive autocorrelation at lag 1 means that if today’s value is high, yesterday’s value was likely also high.

For a random walk, the original series typically exhibits strong positive autocorrelation that decays very slowly. This is because each value is heavily dependent on the previous value (Yt = Yt − 1 + ϵt). If Yt − 1 was high, Yt is also likely to be high, unless ϵt is a very large negative shock.

However, the first difference of a random walk, which is simply the white noise error term (ϵt), should have no significant autocorrelation at any lag other than lag 0 (the correlation of the series with itself, which is always 1). This is the definition of a white noise process: uncorrelated, zero mean, constant variance. The absence of autocorrelation in the first differenced series is a key diagnostic indicator that the original series was a random walk.

Let’s visualize the autocorrelation using an Autocorrelation Function (ACF) plot for both our simulated random walk and its first difference.

from statsmodels.graphics.tsa.plot_acf import plot_acf

# Plot ACF for the original random walk

fig, axes = plt.subplots(2, 1, figsize=(10, 8))

plot_acf(rw_series, lags=20, ax=axes[0], title='ACF of Original Random Walk')

axes[0].set_xlabel('Lag')

axes[0].set_ylabel('Autocorrelation')

axes[0].grid(True)

# Plot ACF for the first difference

plot_acf(rw_diff, lags=20, ax=axes[1], title='ACF of First Difference (White Noise)')

axes[1].set_xlabel('Lag')

axes[1].set_ylabel('Autocorrelation')

axes[1].grid(True)

plt.tight_layout()

plt.show()The plot_acf function from statsmodels is a powerful tool to visualize autocorrelation. The blue shaded area represents the confidence interval; if a bar extends beyond this area, the autocorrelation at that lag is considered statistically significant.

In the first ACF plot (original random walk), you should observe that the autocorrelation decays very slowly, remaining significant for many lags. This slow decay is a hallmark of non-stationary series, particularly random walks. In contrast, the second ACF plot (first difference) should show that only the bar at lag 0 is significant (as any series is perfectly correlated with itself at lag 0). All other lags should fall within the blue confidence band, indicating no statistically significant autocorrelation. This pattern is characteristic of a white noise process.

The Diagnostic Workflow: Identifying a Random Walk

To identify if a given time series is a random walk, you can follow a systematic diagnostic workflow:

Visualize the Time Series: Plot the raw time series data. A random walk will typically show a wandering pattern, no clear mean reversion, and potentially increasing variance over time. It will not appear stationary.

Calculate the First Difference: Compute the first difference of the original series.

Visualize the First Difference: Plot the differenced series. If the original series was a random walk, this differenced series should now appear stationary (fluctuating around a constant mean, typically zero, with constant variance).

Analyze Autocorrelation of the First Difference: Generate an ACF plot for the first differenced series.

If the original series was a random walk, its first difference should resemble white noise. This means the ACF plot of the first difference should show no significant spikes at any lag (other than lag 0). All autocorrelation coefficients should fall within the confidence interval.

If all these conditions are met, particularly the last point regarding the ACF of the first difference, it provides strong evidence that the original time series is a random walk.

Implications for Forecasting

Identifying a time series as a random walk has profound implications for forecasting:

Optimal Forecast is the Last Observed Value: For a random walk, the best possible forecast for the next period is simply the current period’s value. That is, E[Yt + 1|Yt] = Yt. Any more complex model will likely not yield better results than this simple “naive” forecast. This is because the future movement is entirely unpredictable, driven solely by the random shock ϵt.

Market Efficiency: In financial markets, if asset prices (like stock prices) follow a random walk, it supports the Efficient Market Hypothesis (EMH). The EMH suggests that all available information is already reflected in the current price, making it impossible to consistently “beat the market” by using past price patterns. Future price movements are essentially random.

Simpler Models Suffice: Recognizing a random walk prevents analysts from over-complicating their forecasting efforts. Instead of trying to fit complex ARIMA or other sophisticated models, a simple persistence model (forecasting the last value) is the most appropriate and often the most accurate.

In summary, the process of identifying a random walk is crucial for correctly interpreting time series behavior and selecting the most effective forecasting strategy. It moves us from theoretical understanding to practical diagnostic skills essential for real-world data analysis.

Identifying a random walk

Stationarity

Stationarity is a fundamental concept in time series analysis, serving as a cornerstone for building robust forecasting models. A stationary time series is one whose statistical properties — such as mean, variance, and autocorrelation — are constant over time. This stability is crucial because many classical time series models, like Autoregressive (AR), Moving Average (MA), Autoregressive Moving Average (ARMA), and Autoregressive Integrated Moving Average (ARIMA), assume that the underlying data generating process is stationary. Without this assumption, it becomes challenging to make reliable predictions, as the patterns observed in the past may not hold true in the future.

While there are different definitions of stationarity, in practical time series analysis, we primarily focus on weak-sense stationarity (also known as covariance stationarity). A time series yt is weak-sense stationary if it satisfies three key conditions:

Constant Mean: The expected value of the series is constant over time: E[yt] = μ for all t. This means there is no overall trend in the data.

Constant Variance: The variance of the series is constant over time: Var(yt) = σ2 for all t. This implies that the fluctuations around the mean do not change in magnitude over time.

Constant Autocovariance (or Autocorrelation): The covariance between any two observations depends only on the lag (the time difference between them), not on the specific time points: Cov(yt,yt − k) = γk for all t and k. This means the relationship between an observation and its past values remains consistent over time.

In contrast, a strictly stationary series is one where the joint probability distribution of any set of observations remains the same regardless of when the observations are taken. Strict stationarity is a stronger condition that is rarely met in real-world data and is often too restrictive for practical modeling. Weak-sense stationarity is usually sufficient for most analytical purposes.

Why is Stationarity Crucial?

The importance of stationarity stems from the very nature of forecasting. If the statistical properties of a time series change over time, then any model trained on historical data will become unreliable when applied to future data. Imagine trying to predict stock prices if their average growth rate, volatility, or the way they relate to past prices kept changing drastically every week. Such a scenario would make accurate prediction virtually impossible.

Many forecasting models rely on the assumption that the underlying process generating the data is stable. For instance, classical regression models assume that the relationship between dependent and independent variables is constant. Similarly, in time series, if the mean or variance is drifting, or if the autocorrelation structure is evolving, then the estimated model parameters will not be good representations of the future process. A stationary series allows us to use past observations to reliably infer future behavior because the statistical characteristics remain consistent.

Common examples of non-stationary time series include: * Series with a trend: Like the steadily increasing GOOGL stock price or GDP growth. * Series with seasonality: Like monthly retail sales that peak every December. * Series with increasing or decreasing variance: Often seen in financial markets where volatility might increase during periods of economic uncertainty.

Transformations to Achieve Stationarity

When a time series is identified as non-stationary, various transformations can be applied to stabilize its properties. The most common transformations address trends, seasonality, and varying variance.

Differencing for Mean Stabilization

Differencing is a powerful technique used to remove trends and seasonality from a time series, thereby stabilizing its mean. It involves computing the difference between consecutive observations.

The first-order differencing operation is defined as:

y′t = yt − yt − 1

where y′t is the differenced series at time t, and yt is the original series at time t.

Let’s illustrate how differencing removes a simple linear trend with a numerical example.

Consider a series with a constant linear trend: y = [10,12,14,16,18]

Applying first-order differencing: y′2 = y2 − y1 = 12 − 10 = 2 y′3 = y3 − y2 = 14 − 12 = 2 y′4 = y4 − y3 = 16 − 14 = 2 y′5 = y5 − y4 = 18 − 16 = 2

The differenced series becomes [2,2,2,2]. This new series has a constant mean (2) and no trend, demonstrating how differencing effectively removes the linear trend.

For seasonal patterns, seasonal differencing is used. This involves subtracting an observation from the observation at the same period in the previous season. For example, with monthly data and an annual seasonality, you would use a lag of 12:

y′t = yt − yt − 12

Let’s demonstrate differencing using Python and NumPy. We’ll generate a synthetic time series with a clear linear trend and some noise, then apply differencing.

import numpy as np

import matplotlib.pyplot as plt

# Set a random seed for reproducibility to ensure consistent results

np.random.seed(42)

# Generate a synthetic time series with a linear trend and some noise

time_points = np.arange(1, 101) # Create 100 time points (1 to 100)

trend = 0.5 * time_points # Define a linear trend component

noise = np.random.normal(0, 5, 100) # Add random noise from a normal distribution

original_series = trend + noise # Combine trend and noise to form the original seriesHere, we initialize our environment by importing numpy for efficient numerical operations on arrays and matplotlib.pyplot for data visualization. We then construct a synthetic time series, original_series, specifically designed to exhibit a clear upward linear trend, which represents a common form of non-stationarity in real-world data. Random noise is added to simulate realistic data fluctuations.

# Plot the original series to visually identify the trend

plt.figure(figsize=(12, 6))

plt.plot(time_points, original_series, label='Original Series')

plt.title('Original Series with Linear Trend')

plt.xlabel('Time')

plt.ylabel('Value')

plt.grid(True)

plt.legend()

plt.show()Visual inspection is the first and often most intuitive step in diagnosing stationarity. This plot clearly shows the prominent upward trend in our original_series, confirming that its mean is not constant over time, thus indicating non-stationarity.

# Apply first-order differencing using numpy's diff function

differenced_series = np.diff(original_series, n=1)

# Differencing reduces the number of data points by 'n' (here, 1).

# The first element of the differenced series corresponds to the second element of the original series (y_2 - y_1).

# Therefore, the time points for the differenced series start from the second time point onwards.

differenced_time_points = time_points[1:]The np.diff() function from NumPy is an efficient way to compute the differences between consecutive elements in an array. By setting n=1, we perform first-order differencing, which calculates yt − yt − 1. It’s crucial to understand that this operation results in a series with one fewer data point for each order of differencing, as the first difference (y_1 - y_0) cannot be computed without a preceding value.

# Plot the differenced series to observe the effect of trend removal

plt.figure(figsize=(12, 6))

plt.plot(differenced_time_points, differenced_series, label='Differenced Series (Order 1)', color='orange')

plt.title('Differenced Series: Trend Removed')

plt.xlabel('Time')

plt.ylabel('Value')

plt.grid(True)

plt.legend()

plt.show()This plot displays the differenced_series. Observe how the prominent linear trend seen in the original series has now been removed. The series now fluctuates around a relatively constant mean (close to zero in this case, as the original trend was linear), and its values appear more stable. This visual confirms that differencing has successfully stabilized the mean of the series, making it more amenable to stationary time series models.

A key implication of differencing is that it introduces a NaN (Not a Number) or loses the first observation, as y1 − y0 cannot be computed if y0 is not available. When working with real datasets, be mindful of how your chosen differencing method handles these boundary conditions.

While differencing is effective, it’s important to avoid over-differencing. Applying differencing more times than necessary can introduce new patterns (like moving average components) or make the series appear more random than it truly is, potentially leading to less accurate forecasts or unnecessarily complex models. The goal is to achieve stationarity, not to completely eliminate all structure.

Log Transformation for Variance Stabilization

The log transformation is particularly useful when the variance of a time series increases with its mean. This phenomenon, known as heteroscedasticity, is common in financial data, where larger values tend to exhibit larger fluctuations. For example, a stock price of $100 might fluctuate by $1-$2, while a stock price of $1000 might fluctuate by $10-$20. In such cases, the absolute magnitude of variability grows with the level of the series.

The log transformation, typically the natural logarithm (ln or log), compresses larger values more than smaller values, thereby stabilizing the variance. This is because the difference between log(100) and log(101) is smaller than the difference between log(1000) and log(1001), reflecting a proportional change rather than an absolute one.

The transformation is applied as:

y′t = log (yt)

For this transformation to be valid, all values in the series must be positive. If your series contains zero or negative values, you might need to add a constant to shift all values to be positive before applying the log transformation.

Let’s illustrate the effect of a log transformation using Python. We’ll generate a synthetic series where the variance clearly increases over time.

# Generate a synthetic time series with increasing variance

time_points_var = np.arange(1, 101) # Time points for the series

# Base value increasing over time, simulating a growing series

base_values = 10 * time_points_var

# Noise component where variance increases proportionally with time

# This creates the "fanning out" effect typical of heteroscedasticity

variance_noise = np.random.normal(0, 0.1 * time_points_var, 100)

# Combine to create a series where fluctuations grow with the base value

series_increasing_variance = base_values + variance_noiseHere, we create another synthetic series, series_increasing_variance. The base_values component ensures an increasing trend, and crucially, thevariance_noise component is scaled by 0.1 * time_points_var. This scaling ensures that the magnitude of the random fluctuations (noise) increases as thetime_points_var (and thus base_values) increases, visually demonstrating heteroscedasticity.

# Plot the series to visually confirm the increasing variance

plt.figure(figsize=(12, 6))

plt.plot(time_points_var, series_increasing_variance, label='Series with Increasing Variance')

plt.title('Original Series with Increasing Variance (Heteroscedastic)')

plt.xlabel('Time')

plt.ylabel('Value')

plt.grid(True)

plt.legend()

plt.show()This plot clearly shows the “fanning out” effect, where the amplitude of the oscillations increases as the series progresses, indicating increasing variance. This is a classic visual cue for heteroscedasticity, a form of non-stationarity in the variance.

# Apply natural logarithm transformation to the series

# np.log computes the natural logarithm (base e) element-wise.

log_transformed_series = np.log(series_increasing_variance)The np.log() function applies the natural logarithm element-wise to our series. This operation aims to compress the larger values and proportionally expand the smaller values, thereby making the spread of the data more uniform across the range of values. This effectively stabilizes the variance.

# Plot the log-transformed series to observe the effect on variance

plt.figure(figsize=(12, 6))

plt.plot(time_points_var, log_transformed_series, label='Log Transformed Series', color='green')

plt.title('Log Transformed Series: Variance Stabilized')

plt.xlabel('Time')

plt.ylabel('Log(Value)')

plt.grid(True)

plt.legend()

plt.show()After the log transformation, the plot of log_transformed_series shows that the variance of the fluctuations has been significantly stabilized. The “fanning out” effect is largely gone, and the spread of the data around its trend appears much more consistent, demonstrating the effectiveness of the log transformation in addressing heteroscedasticity.

It’s important to remember that log transformation only works for positive values. If your data contains zeros or negative numbers, you might need to apply a shift (e.g., np.log(y_t + C) where C is a constant that makes all values positive) or consider other transformations like the Box-Cox transformation, which can handle a wider range of data types.

Inverse Transformations

After applying transformations like differencing or log transformations to achieve stationarity for modeling, the forecasts generated by the model will be in the transformed scale. To make these forecasts interpretable and useful in the original scale, we must apply the inverse transformation.

Inverse of Differencing: To revert a differenced series back to its original scale, you perform an inverse differencing operation, which is essentially a cumulative sum. If y′t = yt − yt − 1, then yt = y′t + yt − 1. This requires an initial value from the original series to “rebuild” the series. The

np.cumsum()function can be used for this, often combined with the first value of the original series to correctly anchor the rebuilt series.Inverse of Log Transformation: To revert a log-transformed series back to its original scale, you apply the exponential function. If y′t = log (yt), then yt = exp (y′t). The

np.exp()function is used for this purpose.

The order of inverse operations matters if multiple transformations were applied. For example, if you first log-transformed and then differenced the series, you would first apply the inverse differencing (cumulative sum) and then the inverse log transformation (exponential) to get back to the original scale.

Diagnosing Stationarity

While visual inspection of plots (like those shown above) can provide strong clues about stationarity, it is often insufficient for definitive diagnosis, especially for subtle forms of non-stationarity. More rigorous methods are available:

Autocorrelation Function (ACF) and Partial Autocorrelation Function (PACF) Plots: These plots are invaluable diagnostic tools. For a non-stationary series (especially one with a trend), the ACF typically decays very slowly, often remaining significantly high for many lags. This indicates that past values have a strong, persistent influence on current values. For a stationary series, the ACF generally drops off quickly to zero after a few lags, and the PACF usually shows a sharp cut-off. These patterns help distinguish between different types of non-stationarity and guide the choice of transformation.

Statistical Tests for Stationarity: Formal statistical tests provide a more objective assessment. The most common ones include:

Augmented Dickey-Fuller (ADF) Test: This test checks for the presence of a unit root, which is a characteristic of non-stationary series. The null hypothesis of the ADF test is that a unit root is present (i.e., the series is non-stationary). A low p-value (typically less than 0.05) suggests that we can reject the null hypothesis, implying the series is stationary.

Kwiatkowski-Phillips-Schmidt-Shin (KPSS) Test: This test is an alternative to ADF, with the null hypothesis that the series is stationary around a deterministic trend (or mean). A high p-value suggests stationarity, while a low p-value indicates non-stationarity.

We will delve deeper into the practical application and interpretation of ACF/PACF plots and statistical tests for stationarity in subsequent sections, as they are critical steps in the time series modeling workflow.

Identifying a random walk

Testing for Stationarity

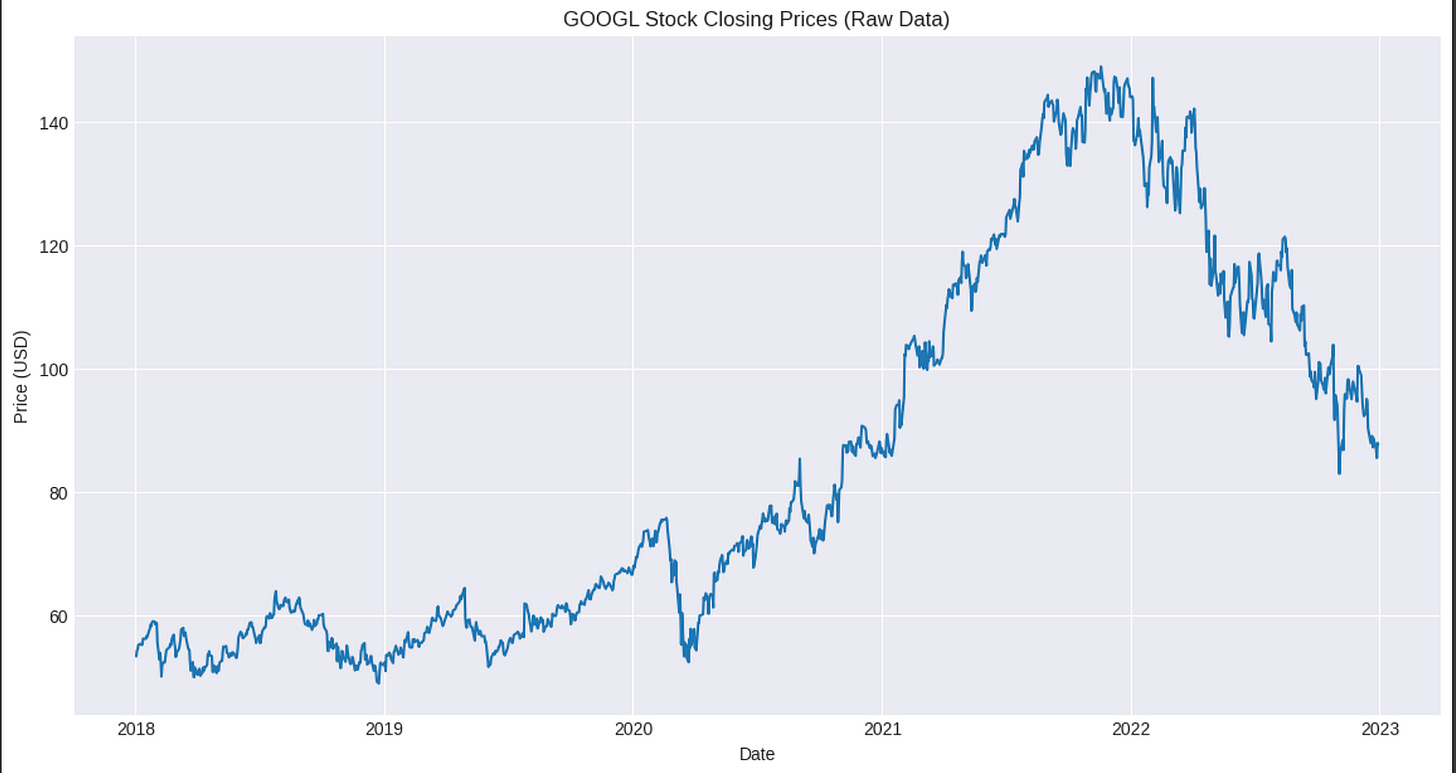

Stationarity is a cornerstone concept in classical time series analysis. While visual inspection of a time series plot, along with its rolling mean and variance, can provide strong qualitative evidence, a rigorous statistical test is often required to formally determine if a series is stationary. The Augmented Dickey-Fuller (ADF) test is one of the most widely used statistical tests for this purpose. It helps us determine if a unit root is present in a time series, which is a key indicator of non-stationarity.

Understanding the Augmented Dickey-Fuller (ADF) Test

The ADF test is a type of unit root test. A unit root is a characteristic of some stochastic processes that can cause problems in statistical inference involving time series models. Essentially, if a time series has a unit root, it means that a shock to the system will persist indefinitely, causing the series to wander randomly rather than reverting to a mean. This “wandering” behavior is precisely what we observe in a random walk.

The ADF test works by testing the null hypothesis that a unit root is present in the time series against the alternative hypothesis that it is not.

Null Hypothesis (H0): The time series has a unit root (i.e., it is non-stationary).

Alternative Hypothesis (H1): The time series does not have a unit root (i.e., it is stationary).

The test calculates a test statistic (the ADF statistic), which is then compared to critical values. It also provides a p-value. The interpretation is standard for hypothesis testing:

If the p-value is less than or equal to a chosen significance level (e.g., 0.05), we reject the null hypothesis. This implies that the series is likely stationary.

If the p-value is greater than the significance level, we fail to reject the null hypothesis. This suggests that the series is non-stationary and has a unit root.

The Intuition Behind a Unit Root

Consider a simple autoregressive process of order 1, denoted as AR(1):

yt = ϕyt − 1 + ϵt

Here, yt is the value of the series at time t, yt − 1 is the value at the previous time step, ϕ (phi) is the autoregressive coefficient, and ϵt (epsilon) is a white noise error term.

Stationary Case (|ϕ| < 1): If the absolute value of ϕ is less than 1 (e.g., ϕ = 0.5), any shock ϵt will have a diminishing impact over time. The series will tend to revert to its mean. This is analogous to a stable system where disturbances eventually die out. In terms of the “unit circle” concept often used in signal processing, for stationarity, the roots of the characteristic equation of the AR process must lie outside the unit circle. For an AR(1) process, this simply means |ϕ| < 1.

Non-Stationary Case (Unit Root, ϕ = 1): If ϕ = 1, the equation becomes:

yt = yt − 1 + ϵt

This is precisely the definition of a random walk. In this scenario, a shock ϵt has a permanent effect on the series. The series does not revert to a mean, and its variance grows over time. This is what we call a unit root. The term “unit root” comes from the fact that the root of the characteristic equation for this process is exactly 1.

Simulating Time Series for Stationarity Analysis

To understand the ADF test practically, let’s simulate both a stationary and a non-stationary time series and observe their characteristics. We will use NumPy for numerical operations and Matplotlib for plotting.

First, we import the necessary libraries and set a random seed for reproducibility.

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

from statsmodels.tsa.stattools import adfuller # Important for ADF test

# Set a random seed for reproducibility

np.random.seed(42)We import statsmodels.tsa.stattools.adfuller here because it’s central to this section. pandas is also imported as it’s useful for time series data, especially for rolling calculations and handling NaN values.

Simulating a Stationary AR(1) Process

Let’s simulate a stationary AR(1) process defined by the equation yt = 0.5 ⋅ yt − 1 + ϵt. Here, ϕ = 0.5, which is less than 1 in magnitude, indicating stationarity.

# Parameters for simulation

n_points = 500

phi_stationary = 0.5 # Autoregressive coefficient (phi)

initial_value_stationary = 0.0

# Generate white noise (epsilon_t)

# np.random.standard_normal generates random samples from a standard normal distribution (mean=0, std=1)

white_noise_stationary = np.random.standard_normal(n_points)

# Initialize the time series array

stationary_series = np.zeros(n_points)

stationary_series[0] = initial_value_stationary

# Generate the stationary AR(1) series iteratively

for i in range(1, n_points):

stationary_series[i] = phi_stationary * stationary_series[i-1] + white_noise_stationary[i]In this code block, we first define the number of data points and the autoregressive coefficient phi_stationary. We then generate n_points of white noise, which represents the random shocks to our system. The core of the simulation is the for loop, where each stationary_series[i] is calculated based on the previous value and the current white noise term, following the AR(1) equation.

Now, let’s visualize this stationary series and its rolling mean and variance to confirm our understanding.

# Convert to pandas Series for rolling calculations

stationary_series_pd = pd.Series(stationary_series)

# Calculate rolling mean and standard deviation (for variance, we'd square std)

window_size = 30 # A common window size for rolling statistics

rolling_mean_stationary = stationary_series_pd.rolling(window=window_size).mean()

rolling_std_stationary = stationary_series_pd.rolling(window=window_size).std()

# Plot the stationary series

fig, axes = plt.subplots(3, 1, figsize=(12, 10), sharex=True)

axes[0].plot(stationary_series, label='Stationary AR(1) Series')

axes[0].set_title('Simulated Stationary AR(1) Process')

axes[0].set_ylabel('Value')

axes[0].grid(True)

# Plot rolling mean

axes[1].plot(rolling_mean_stationary, label=f'Rolling Mean (Window={window_size})', color='orange')

axes[1].axhline(y=stationary_series.mean(), color='r', linestyle='--', label='Overall Mean')

axes[1].set_title('Rolling Mean of Stationary Series')

axes[1].set_ylabel('Mean')

axes[1].grid(True)

# Plot rolling variance (squared rolling standard deviation)

axes[2].plot(rolling_std_stationary**2, label=f'Rolling Variance (Window={window_size})', color='green')

axes[2].axhline(y=stationary_series.var(), color='r', linestyle='--', label='Overall Variance')

axes[2].set_title('Rolling Variance of Stationary Series')

axes[2].set_xlabel('Time Step')

axes[2].set_ylabel('Variance')

axes[2].grid(True)

plt.tight_layout()

plt.show()Here, we convert our NumPy array to a Pandas Series to conveniently use its rolling() method for calculating rolling statistics. The window_size determines the number of observations included in each rolling calculation. We plot the series itself, its rolling mean, and its rolling variance. Notice how both the rolling mean and rolling variance for a stationary series tend to hover around a constant value, confirming our conceptual understanding.

Simulating a Non-Stationary Random Walk (Unit Root) Process

Now, let’s simulate a non-stationary random walk process, which is an AR(1) process where ϕ = 1. The equation is yt = yt − 1 + ϵt.

# Parameters for simulation

n_points_rw = 500

initial_value_rw = 0.0

# Generate white noise (epsilon_t)

white_noise_rw = np.random.standard_normal(n_points_rw)

# Generate the random walk (cumulative sum of white noise)

# np.cumsum calculates the cumulative sum along an axis.

random_walk_series = initial_value_rw + np.cumsum(white_noise_rw)This is the standard way to simulate a random walk: by taking the cumulative sum of a series of independent random shocks (white noise). This is equivalent to an AR(1) process with ϕ = 1.

Next, we visualize this random walk and its rolling mean and variance.

# Convert to pandas Series for rolling calculations

random_walk_series_pd = pd.Series(random_walk_series)

# Calculate rolling mean and standard deviation (for variance)

rolling_mean_rw = random_walk_series_pd.rolling(window=window_size).mean()

rolling_std_rw = random_walk_series_pd.rolling(window=window_size).std()

# Plot the random walk series

fig, axes = plt.subplots(3, 1, figsize=(12, 10), sharex=True)

axes[0].plot(random_walk_series, label='Random Walk Series')

axes[0].set_title('Simulated Random Walk (Non-Stationary)')

axes[0].set_ylabel('Value')

axes[0].grid(True)

# Plot rolling mean

axes[1].plot(rolling_mean_rw, label=f'Rolling Mean (Window={window_size})', color='orange')

axes[1].set_title('Rolling Mean of Random Walk')

axes[1].set_ylabel('Mean')

axes[1].grid(True)

# Plot rolling variance (squared rolling standard deviation)

axes[2].plot(rolling_std_rw**2, label=f'Rolling Variance (Window={window_size})', color='green')

axes[2].set_title('Rolling Variance of Random Walk')

axes[2].set_xlabel('Time Step')

axes[2].set_ylabel('Variance')

axes[2].grid(True)

plt.tight_layout()

plt.show()Observe the plots for the random walk. The series itself wanders without a clear mean. Crucially, the rolling mean shows a clear trend, and the rolling variance tends to increase over time. This dynamic behavior of mean and variance is characteristic of non-stationary processes.

Performing the Augmented Dickey-Fuller Test in Python

The statsmodels library provides a convenient function, adfuller, to perform the Augmented Dickey-Fuller test.

# Function to print ADF test results in a readable format

def print_adfuller_results(test_statistic, p_value, critical_values, series_name):

print(f"--- ADF Test Results for {series_name} ---")

print(f"ADF Statistic: {test_statistic:.4f}")

print(f"P-value: {p_value:.4f}")

print("Critical Values:")

for key, value in critical_values.items():

print(f" {key}: {value:.4f}")

# Decision based on p-value

if p_value <= 0.05:

print("\nConclusion: Reject the Null Hypothesis (H0). The series is likely stationary.")

else:

print("\nConclusion: Fail to Reject the Null Hypothesis (H0). The series is likely non-stationary (has a unit root).")

print("-" * 50)This helper function print_adfuller_results will make it easier to interpret the output of the adfuller function, providing a clear conclusion based on the p-value.

ADF Test on the Simulated Stationary Series

Let’s apply the ADF test to our stationary_series.

# Perform ADF test on the stationary series

adfuller_results_stationary = adfuller(stationary_series)

# Extract results

test_statistic_s, p_value_s, _, _, critical_values_s, _ = adfuller_results_stationary

# Print formatted results