Chapter 5: . Modeling an autoregressive process

In the previous chapter, we explored Moving Average (MA) processes, where the current value of a time series is modeled as a linear combination of past errorterms.

We observed that MA models exhibit a “finite memory,” meaning their influence on future values diminishes rapidly, typically cutting off after a specific number of lags in the Autocorrelation Function (ACF) plot. While effective for certain types of time series, many real-world phenomena exhibit a different kind of dependency: their current values are directly influenced by their own past values. This is the realm of Autoregressive (AR) processes.

Download Link at the end of this article!

Understanding the Autoregressive Concept

An Autoregressive (AR) process is a time series model where the current observation, Yt, is expressed as a linear combination of its past observations and a white noise error term. The term “autoregressive” literally means “regressing on itself,” emphasizing that the series’ future values are predicted using its own past values.

Consider a simple example: predicting tomorrow’s stock price based on today’s and yesterday’s prices. This is distinct from an MA model, where you might predict based on the magnitude of past prediction errors. In an AR model, the direct history of the series itself is the primary driver.

The AR(p) Notation

Autoregressive models are denoted as AR(p), where p signifies the order of the autoregressive process. The order p indicates the number of past observations (or “lags”) that are included in the model to predict the current value.

An

AR(1)process means that the current valueYtis primarily influenced by the immediately preceding valueYt-1.An

AR(2)process meansYtis influenced byYt-1andYt-2.Generally, an

AR(p)process implies thatYtdepends onYt-1, Yt-2, ..., Yt-p.

The general mathematical form of an AR(p) process is:

Yt = c + φ1 * Yt-1 + φ2 * Yt-2 + ... + φp * Yt-p + εt

Here: * Yt is the current observation. * c is a constant term (intercept). * φ1, φ2, ..., φp are the autoregressive coefficients, which quantify the strength and direction of the influence of past observations. * Yt-1, Yt-2, ..., Yt-p are the past observations at lags 1 to p. * εt is the white noise error term, representing random fluctuations not explained by past values.

Distinguishing AR from MA using ACF and PACF Plots

One of the most crucial steps in identifying the appropriate time series model is analyzing the Autocorrelation Function (ACF) and Partial Autocorrelation Function (PACF) plots. These plots reveal the underlying correlation structure of the series.

Recap: MA Model Signatures

ACF for MA(q): The ACF plot for a Moving Average process of order

qwill show significant spikes (correlations) at lags up toq, and then cut offabruptly (i.e., correlations become non-significant) at lags greater thanq. This reflects the finite memory of MA processes.PACF for MA(q): The PACF plot for an MA process will typically decay slowly (either exponentially or sinusoidally).

AR Model Signatures: The Decaying ACF

ACF for AR(p): In stark contrast to MA processes, the ACF plot for an Autoregressive process of order

pwill typically show a slowly decaying pattern. This decay can be exponential (smoothly tapering off) or sinusoidal (oscillating before tapering off).Why does the ACF decay for an AR process? Consider an

AR(1)process:Yt = c + φ1 * Yt-1 + εt.

Ytis directly correlated withYt-1(due toφ1). This strong correlation shows up at lag 1 in the ACF.Yt-1is also correlated withYt-2(sinceYt-1itself depends onYt-2).Because

Ytdepends onYt-1, andYt-1depends onYt-2,Ytalso has an indirect correlation withYt-2. This indirect correlation is weaker than the direct one.This chain of dependencies continues:

Ytis indirectly correlated withYt-3throughYt-1andYt-2, and so on. Each step further back in time weakens the overall correlation, causing the ACF values to gradually diminish rather than abruptly cut off. This creates the characteristic decaying pattern.

If the

φcoefficients are negative or if there are multiple AR terms, this decay might be oscillatory or sinusoidal, indicating alternating positive and negative correlations that gradually die out.PACF for AR(p): The PACF plot for an Autoregressive process of order

pwill show significant spikes at lags up top, and then cut off abruptly (i.e., correlations become non-significant) at lags greater thanp.Why does the PACF cut off for an AR process? The Partial Autocorrelation Function measures the direct correlation between an observation and a lagged observation, after removing the effects of the intermediate lags. For an

AR(p)process, the current valueYtis directly dependent only on the previouspvalues (Yt-1, ..., Yt-p). Once the influence of thesepdirect lags is accounted for, any further lags (Yt-p-1, etc.) have no additional direct correlation withYt. Therefore, the PACF effectively isolates the orderpby showing a sharp cutoff.

This distinct behavior of ACF (decaying) and PACF (cutting off) is the primary visual cue for identifying an AR process and determining its order p.

What’s Next?

In the following sections, we will delve deeper into the practical aspects of modeling autoregressive processes:

Formal Definition and Properties: We will mathematically define AR processes, discuss their stationarity conditions, and explore their theoretical properties.

Identifying the Order

p: We will learn how to systematically use the PACF plot to determine the appropriate orderpfor an AR model based on empirical data.Fitting AR Models: We will walk through the process of estimating the coefficients (

φvalues) of an AR model using Python libraries likestatsmodels. This will involve preparing time series data and interpreting model output.Forecasting with AR Models: Finally, we will apply our fitted AR models to generate future forecasts, evaluate their accuracy, and understand their limitations in real-world scenarios.

Predicting the Average Weekly Foot Traffic in a Retail Store

Understanding and predicting future trends is paramount for businesses to optimize operations, manage resources, and make informed strategic decisions. For a retail store, forecasting average weekly foot traffic is a critical application of time series analysis. Accurate predictions allow store managers to optimize staff scheduling, ensuring adequate coverage during peak hours while minimizing unnecessary labor costs during quieter periods. It also aids in inventory management, marketing campaign planning, and overall store layout optimization.

This section delves into a practical case study: forecasting weekly foot traffic. We will follow the standard workflow for time series model identification, emphasizing the steps necessary to prepare data for Autoregressive (AR) modeling and introduce a new crucial tool: the Partial Autocorrelation Function (PACF).

Understanding the Problem and Initial Data Exploration

Our objective is to forecast the average weekly foot traffic for a retail store. We’ll start by examining historical data. For educational purposes, we will generate a synthetic dataset that mimics realistic foot traffic patterns, including a clear upward trend, which is common in growing businesses or expanding markets.

Generating Synthetic Foot Traffic Data

Real-world datasets can be complex and sometimes proprietary. For learning purposes, generating synthetic data allows us to create specific patterns (like trends or seasonality) that illustrate key concepts effectively. Here, we’ll create a dataset with a clear upward trend and some random noise, representing weekly foot traffic over several years.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

# Set a seed for reproducibility

np.random.seed(42)

# Generate weekly dates for 5 years

dates = pd.date_range(start='2019-01-01', periods=5*52, freq='W')

# Generate a base foot traffic with an upward trend

# Start low and gradually increase

base_traffic = np.linspace(1500, 3000, len(dates))

# Add some weekly noise

noise = np.random.normal(loc=0, scale=150, size=len(dates))

# Add a subtle seasonal component (e.g., higher traffic towards year-end)

# This is a simplified seasonality, more complex patterns exist in real data

seasonal_component = 100 * np.sin(np.arange(len(dates)) * (2 * np.pi / 52))

# Combine components to create the final foot traffic series

foot_traffic = base_traffic + noise + seasonal_component

# Create a Pandas Series with dates as index

df = pd.DataFrame({'Foot_Traffic': foot_traffic}, index=dates)

# Display the first few rows

print("First 5 rows of the synthetic foot traffic data:")

print(df.head())The code above initializes our time series by creating a Pandas Series named df. We generate a sequence of weekly dates for five years. The base_trafficcomponent creates a linearly increasing trend, simulating growth. We then add noise using a normal distribution to mimic random fluctuations and a seasonal_component to introduce annual patterns, such as higher traffic during holiday seasons. Combining these elements results in our synthetic Foot_Traffic time series.

Visualizing the Time Series

Visualizing the raw time series is the crucial first step in any time series analysis. It allows us to observe patterns such as trends, seasonality, and unusual spikes or drops, which are key indicators for subsequent modeling steps.

# Plotting the raw time series

plt.figure(figsize=(14, 7))

sns.lineplot(data=df, x=df.index, y='Foot_Traffic', color='skyblue')

plt.title('Average Weekly Foot Traffic Over 5 Years', fontsize=16)

plt.xlabel('Date', fontsize=12)

plt.ylabel('Foot Traffic', fontsize=12)

plt.grid(True, linestyle='--', alpha=0.7)

plt.show()This plot (similar to what might be presented as “Figure 5.1” in a textbook) immediately reveals key characteristics of our data. We can clearly observe an upward trend, indicating that foot traffic is generally increasing over time. This visual cue is vital for assessing stationarity, a fundamental concept in time series analysis.

Assessing Stationarity

Most classical time series models, including Autoregressive (AR) models, assume that the underlying process generating the data is stationary. A stationary time series is one whose statistical properties (like mean, variance, and autocorrelation) do not change over time.

Why is Stationarity Important?

Model Simplification: Stationary series are easier to model because their behavior is consistent over time.

Reliable Forecasting: If a series is non-stationary, statistical models fitted to it might produce unreliable forecasts as the relationships between observations change over time.

Parameter Stability: The parameters of stationary models are constant, making them more interpretable and stable.

Visually, a series with a clear trend or varying variance (heteroscedasticity) is non-stationary. Our foot traffic plot clearly shows an upward trend, suggesting non-stationarity. While visual inspection is a good starting point, formal statistical tests are necessary for a more rigorous assessment.

The Augmented Dickey-Fuller (ADF) Test

The Augmented Dickey-Fuller (ADF) test is a widely used statistical test to check for stationarity in a time series. It’s a type of unit root test.

Null Hypothesis (H0): The time series has a unit root, meaning it is non-stationary.

Alternative Hypothesis (H1): The time series does not have a unit root, meaning it is stationary.

To interpret the test, we look at the p-value. * If the p-value is greater than a chosen significance level (e.g., 0.05), we fail to reject the null hypothesis, concluding that the series is non-stationary. * If the p-value is less than or equal to the significance level, we reject the null hypothesis, concluding that the series is stationary.

from statsmodels.tsa.stattools import adfuller

# Perform the ADF test on the raw foot traffic data

adf_result = adfuller(df['Foot_Traffic'])

# Extract and print the results

print(f'ADF Statistic: {adf_result[0]:.2f}')

print(f'p-value: {adf_result[1]:.3f}')

print('Critical Values:')

for key, value in adf_result[4].items():

print(f' {key}: {value:.2f}')

# Interpret the results

if adf_result[1] > 0.05:

print("\nConclusion: The series is likely non-stationary (p-value > 0.05).")

else:

print("\nConclusion: The series is likely stationary (p-value <= 0.05).")The output of the ADF test confirms our visual observation: the p-value is high (e.g., 0.999), indicating that the Foot_Traffic series is indeed non-stationary. This means we need to transform the data to achieve stationarity before applying most AR models.

Achieving Stationarity: Transformations (Differencing)

Since our series is non-stationary due to a trend, the most common and effective transformation is differencing. Differencing involves computing the difference between consecutive observations. This operation helps remove trend and seasonality, making the series stationary.

What is Differencing?

A first-order difference is calculated as: Y′t = Yt − Yt − 1. This effectively removes a linear trend. If the series still exhibits non-stationarity after first differencing (e.g., if it has a quadratic trend), a second-order difference might be applied: Y″t = Y′t − Y′t − 1.

For our foot traffic data, which shows a clear linear trend, a first-order differencing is typically sufficient.

# Apply first-order differencing

df['Foot_Traffic_Diff'] = df['Foot_Traffic'].diff().dropna()

# Display the first few rows of the differenced series

print("\nFirst 5 rows of the differenced foot traffic data:")

print(df['Foot_Traffic_Diff'].head())

# Plot the differenced time series

plt.figure(figsize=(14, 7))

sns.lineplot(data=df, x=df.index, y='Foot_Traffic_Diff', color='indianred')

plt.title('Differenced Average Weekly Foot Traffic', fontsize=16)

plt.xlabel('Date', fontsize=12)

plt.ylabel('Differenced Foot Traffic', fontsize=12)

plt.grid(True, linestyle='--', alpha=0.7)

plt.show()After differencing, the plot of Foot_Traffic_Diff should appear much more stable, without a clear upward or downward trend. The mean should hover around zero, and the variance should appear more constant.

Re-testing Stationarity after Differencing

It’s crucial to re-run the ADF test on the differenced series to confirm that stationarity has been achieved.

# Perform the ADF test on the differenced foot traffic data

adf_result_diff = adfuller(df['Foot_Traffic_Diff'])

# Extract and print the results

print(f'ADF Statistic (Differenced): {adf_result_diff[0]:.2f}')

print(f'p-value (Differenced): {adf_result_diff[1]:.3f}')

print('Critical Values:')

for key, value in adf_result_diff[4].items():

print(f' {key}: {value:.2f}')

# Interpret the results

if adf_result_diff[1] > 0.05:

print("\nConclusion: The differenced series is likely non-stationary (p-value > 0.05).")

else:

print("\nConclusion: The differenced series is likely stationary (p-value <= 0.05).")Now, the p-value for the differenced series should be significantly lower (e.g., less than 0.05), allowing us to reject the null hypothesis and conclude that the series is stationary. This confirms that differencing has successfully removed the trend.

Once our time series is stationary, we can proceed to identify the order of the Autoregressive (AR) process. For this, we rely on two powerful tools: the Autocorrelation Function (ACF) and the Partial Autocorrelation Function (PACF).

Autocorrelation Function (ACF)

The ACF measures the correlation between a time series and its lagged values. For a stationary series, the ACF helps us understand the extent to which past values influence current values.

For an Autoregressive (AR) process, the ACF typically exhibits an exponential decay or a sinusoidal pattern. This decay indicates that the correlation gradually diminishes as the lag increases. It does not cut off abruptly, unlike the PACF for an AR process.

A common point of confusion: A slowly decaying ACF in a non-stationary series is often the visual cue that differencing is needed. Once differenced and stationary, an AR process’s ACF will still decay, but it’s the PACF that provides direct information about the AR order.

Partial Autocorrelation Function (PACF)

The PACF measures the correlation between an observation and a lagged observation, after removing the effects of the intermediate observations. For example, the partial autocorrelation at lag 3 is the correlation between Yt and Yt − 3 that is not explained by the linear dependence on Yt − 1 and Yt − 2.

The PACF is particularly useful for identifying the order of an Autoregressive (AR) model. For an AR(p) process, the PACF will show significant spikes at lags 1 through p and then “cut off” (become non-significant) at lags greater than p.

Plotting ACF and PACF

Let’s plot the ACF and PACF for our differenced (stationary) foot traffic data.

from statsmodels.graphics.tsaplots import plot_acf, plot_pacf

# Plot ACF

plt.figure(figsize=(14, 6))

plot_acf(df['Foot_Traffic_Diff'], lags=40, ax=plt.gca(), title='Autocorrelation Function (ACF) of Differenced Foot Traffic')

plt.xlabel('Lag', fontsize=12)

plt.ylabel('Autocorrelation', fontsize=12)

plt.grid(True, linestyle='--', alpha=0.7)

plt.show()

# Plot PACF

plt.figure(figsize=(14, 6))

plot_pacf(df['Foot_Traffic_Diff'], lags=40, ax=plt.gca(), title='Partial Autocorrelation Function (PACF) of Differenced Foot Traffic')

plt.xlabel('Lag', fontsize=12)

plt.ylabel('Partial Autocorrelation', fontsize=12)

plt.grid(True, linestyle='--', alpha=0.7)

plt.show()When interpreting these plots: * ACF Plot: Look for the pattern of decay. For a purely AR process, it should decay exponentially. * PACF Plot: Look for the “cutoff” point. The lag at which the PACF values become non-significant (fall within the blue shaded confidence interval) suggests the order p for an AR(p) model. For our synthetic data, you might observe significant spikes at the first few lags (e.g., lag 1, 2) and then a drop into the confidence interval, indicating the likely AR order. For instance, if only lag 1 is significant, it suggests an AR(1) process. If lags 1 and 2 are significant, it suggests an AR(2) process.

Moving Towards AR Model Building

Based on the interpretation of the PACF plot, we can determine the appropriate order p for our Autoregressive model, denoted as AR(p). An AR(p) model predicts the current value of the series based on a linear combination of its past p values.

The general form of an AR(p) model is:

Yt = c + ϕ1Yt − 1 + ϕ2Yt − 2 + … + ϕpYt − p + ϵt

Where: * Yt is the value of the series at time t. * c is a constant. * ϕ1, ..., ϕp are the model parameters (coefficients) that quantify the influence of past values. * ϵtis the white noise error term, representing random fluctuations not explained by the model.

In the subsequent sections, we will use libraries like statsmodels in Python to fit AR models based on the identified order p and then evaluate their forecasting performance. The process of understanding the data, ensuring stationarity, and identifying the model order using ACF and PACF is foundational to building robust time series forecasting models.

Defining the Autoregressive Process

In our journey through time series modeling, we’ve explored how past error terms can influence current observations through Moving Average (MA) processes. Now, we shift our focus to a different, yet equally powerful, mechanism: how past values of the time series itself can predict current values. This concept forms the foundation of Autoregressive (AR) processes.

The Core Idea: Regression of a Variable on Itself

The term “autoregressive” might sound complex, but it simply means “regressing a variable on its own past values.” Think of it like this: if you want to predict how much coffee you’ll drink today, a very strong predictor might be how much coffee you drank yesterday, or even the day before. You are using your own past coffee consumption to predict your current consumption.

In time series analysis, this translates to using a series’s own lagged values (values from previous time periods) as predictors in a regression equation. Unlike Moving Average models, which look at the influence of past random shocks or error terms, Autoregressive models directly examine the linear relationship between the current observation and a certain number of its preceding observations.

The General Autoregressive Model: AR(p)

An Autoregressive process of order p, denoted as AR(p), models the current value of a time series, Yt, as a linear combination of its p previous values (Yt − 1, Yt − 2, …, Yt − p) plus a constant and a white noise error term.

The general mathematical expression for an AR(p) model is:

Yt = C + ϕ1Yt − 1 + ϕ2Yt − 2 + … + ϕpYt − p + ϵt

Let’s break down each component of this equation to fully understand its meaning and significance:

Yt: This represents the current observation of the time series at time

t. It is the value we are trying to model or predict. For example, if we’re forecasting retail foot traffic, Yt would be the foot traffic this week.C: This is the constant term, also known as the intercept or drift. It represents the baseline value of the series when all past values are zero, or more generally, it accounts for a non-zero mean in the series. If C is non-zero, it suggests a systematic upward or downward trend or a long-term average that the series tends towards. We’ll explore its implications further when discussing the random walk.

ϕ1, ϕ2, …, ϕp: These are the autoregressive coefficients. Each ϕi measures the linear impact of the

i-th lagged observation (Yt − i) on the current observation (Yt).For instance, ϕ1 quantifies how much of Yt − 1 (the value from the previous period) contributes to Yt.

These coefficients are crucial because they dictate the persistence and pattern of the time series. Positive coefficients suggest that a high value in the past leads to a high value now, while negative coefficients suggest an oscillatory or mean-reverting behavior.

Yt − 1, Yt − 2, …, Yt − p: These are the lagged values of the time series. They represent the observations from

1period ago,2periods ago, up topperiods ago. These are the “regressors” in our “autoregression.”p: This is the order of the autoregressive process. It indicates the number of past observations that are included in the model to predict the current value.

An AR(1) model uses only the immediately preceding value (Yt − 1).

An AR(2) model uses the two preceding values (Yt − 1 and Yt − 2).

The choice of

pis critical and often determined by analyzing the autocorrelation function (ACF) and partial autocorrelation function (PACF) of the time series, which we will cover in subsequent chapters.ϵt: This is the error term, also known as the residual or innovation at time

t. It represents the portion of Yt that cannot be explained by the linear combination of its past values. It captures all the random, unpredictable fluctuations in the series. For a valid AR model, this error term is assumed to be “white noise.”

Special Cases: AR(1) and AR(2) Models

Understanding the general AR(p) model is key, but it’s often helpful to look at its simplest forms to build intuition.

The AR(1) Process

An Autoregressive process of order 1, or AR(1), is the simplest form of an AR model. It states that the current value of the series depends only on its immediately preceding value and a random error.

The mathematical formulation for an AR(1) model is:

Yt = C + ϕ1Yt − 1 + ϵt

Here, the current value Yt is a linear function of the previous value Yt − 1, plus a constant C and a white noise error ϵt. The coefficient ϕ1 determines the strength and direction of the relationship between consecutive observations.

The AR(2) Process

An Autoregressive process of order 2, or AR(2), extends the AR(1) by including the second lag as a predictor.

The mathematical formulation for an AR(2) model is:

Yt = C + ϕ1Yt − 1 + ϕ2Yt − 2 + ϵt

In this model, Yt depends on both Yt − 1 and Yt − 2. The coefficients ϕ1 and ϕ2 capture the influence of these two immediate past values. An AR(2) model can capture more complex patterns than an AR(1), such as oscillations or cycles that might not be apparent from just the previous period.

AR(1) and the Random Walk: A Special Relationship

One of the most important insights for understanding AR(1) processes is their direct link to the random walk model, which we briefly touched upon previously.

A Random Walk model is defined as:

Yt = Yt − 1 + ϵt

Comparing this to the AR(1) equation: Yt = C + ϕ1Yt − 1 + ϵt

We can see that a random walk is a special case of an AR(1) model where: * The constant term C is equal to 0. * The autoregressive coefficient ϕ1 is exactly 1.

This means that if ϕ1 = 1, the current value is simply the previous value plus a random shock. The series has no tendency to return to a mean; it can wander indefinitely.

Implications of the Constant C (Drift)

If we consider an AR(1) model where ϕ1 = 1 but the constant C is non-zero:

Yt = C + Yt − 1 + ϵt

This is known as a Random Walk with Drift. In this scenario, the series still wanders randomly, but on average, it drifts upwards or downwards by the value of C in each period. For example, if C represents an average daily increase in stock price, the stock price would tend to rise by C each day, on top of its random fluctuations. This is particularly relevant for modeling phenomena that exhibit a sustained trend, such as economic growth or population increase.

The Error Term: White Noise Assumption

A fundamental assumption for the error term ϵt in an AR(p) model (and indeed in many time series models) is that it must be white noise. This means that the error term satisfies the following properties:

Zero Mean: E[ϵt] = 0. On average, the errors should not systematically push the series up or down.

Constant Variance: Var[ϵt] = σ2. The variability of the errors should be consistent over time, meaning there are no periods of unusually high or low volatility. This is also known as homoscedasticity.

No Autocorrelation: Cov[ϵt,ϵt − k] = 0 for all k ≠ 0. The error at any given time

tshould not be correlated with errors from any other time period. This ensures that all the predictable information from past values has been captured by the AR terms, and only random, unpredictable noise remains in the error term.

If the error term is not white noise, it implies that there is still some systematic pattern or information left in the residuals that could be modeled, indicating that the AR model is not fully capturing the underlying process.

A Critical Property: Stationarity

For an AR(p) process to be stable and predictable in the long run, it must be stationary. A stationary time series is one whose statistical properties (like mean, variance, and autocorrelation) do not change over time. This is a crucial concept because many statistical techniques for time series analysis assume stationarity. Non-stationary series often exhibit trends or changing variance, making them difficult to model and forecast reliably.

For an AR(1) process, Yt = C + ϕ1Yt − 1 + ϵt, the condition for stationarity is that the absolute value of the autoregressive coefficient ϕ1 must be less than 1 (i.e., |ϕ1| < 1). * If |ϕ1| < 1, any shock to the system will eventually die out, and the series will tend to revert to its mean. * If ϕ1 = 1 (as in a random walk), the series is non-stationary, and shocks have a permanent effect. * If |ϕ1| > 1, the series is explosive; any shock will be amplified over time, leading to an ever-increasing or decreasing trend.

For an AR(2) process, Yt = C + ϕ1Yt − 1 + ϕ2Yt − 2 + ϵt, the stationarity conditions are slightly more complex, involving the roots of a characteristic polynomial. Specifically, the roots of the polynomial 1 − ϕ1L − ϕ2L2 = 0 (where L is the lag operator) must lie outside the unit circle (or equivalently, for the inverse roots, inside the unit circle). This ensures that the influence of past values diminishes over time.

Understanding stationarity is vital because it determines whether an AR model is appropriate for a given series and how its forecasts will behave. We will delve deeper into testing for and addressing non-stationarity in later chapters.

Looking Ahead

This section has laid the theoretical groundwork for understanding Autoregressive processes. In subsequent sections, we will move from definition to application. We will explore how to identify the appropriate order p for an AR model, how to estimate its coefficients, and critically, how to simulate AR processes in Python to visualize their behavior. Simulating AR(1) and AR(2) processes with different ϕ coefficients will provide concrete examples of how these parameters influence the time series patterns, reinforcing the concepts introduced here. We will also revisit our retail foot traffic example to apply these concepts in a practical forecasting scenario.

Preparing and Inspecting the Time Series Data

Before we can determine the order of an autoregressive (AR) process, we must first ensure our time series data is properly loaded and understood. This initial step involves importing necessary libraries, loading the dataset, and performing a preliminary inspection to confirm its structure and content.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from statsmodels.tsa.stattools import adfuller

from statsmodels.graphics.tsaplots import plot_acf, plot_pacf # Import plot_pacf here

import warnings

# Suppress warnings for cleaner output in a book context

warnings.filterwarnings('ignore')We begin by importing pandas for data manipulation, numpy for numerical operations (specifically for differencing), and matplotlib.pyplot for plotting. Crucially, we import adfuller from statsmodels.tsa.stattools for stationarity testing, and plot_acf and plot_pacf fromstatsmodels.graphics.tsaplots for visualizing autocorrelation functions. We also include a line to suppress warnings, which is often useful for a cleaner presentation in educational material.

# Define the path to our dataset

file_path = 'foot_traffic.csv'

# Load the dataset into a pandas DataFrame

# The 'Date' column is parsed as dates and set as the DataFrame index

df = pd.read_csv(file_path, parse_dates=['Date'], index_col='Date')

# Display the first few rows of the DataFrame to inspect the data structure

print("First 5 rows of the foot traffic dataset:")

print(df.head())Here, we load our foot_traffic.csv dataset. It’s essential to parse the ‘Date’ column as actual datetime objects and set it as the DataFrame’s index. This step is critical for time series analysis, as it allows pandas to recognize the temporal ordering of our data. Inspecting the head() of the DataFrame provides a quick overview of the data, confirming column names, data types, and the range of values. This ensures the data has loaded correctly before proceeding with more complex analysis.

Visualizing the Time Series

A fundamental step in any time series analysis is to visualize the data. This allows us to quickly identify obvious patterns such as trends (long-term increases or decreases), seasonality (repeating patterns at fixed intervals), and any significant outliers. These visual cues are often the first indicators of whether a series is stationary or non-stationary.

# Create a figure and a set of subplots for the plot

fig, ax = plt.subplots(figsize=(12, 6))

# Plot the 'foot_traffic' column

ax.plot(df['foot_traffic'])

# Set plot titles and labels for clarity

ax.set_title('Average Weekly Foot Traffic in a Retail Store', fontsize=16)

ax.set_xlabel('Date', fontsize=12)

ax.set_ylabel('Foot Traffic', fontsize=12)

# Rotate x-axis labels for better readability, especially with dates

plt.xticks(rotation=45)

# Automatically format the x-axis to prevent overlapping date labels

fig.autofmt_xdate()

# Adjust layout to prevent labels from overlapping

plt.tight_layout()

# Display the plot

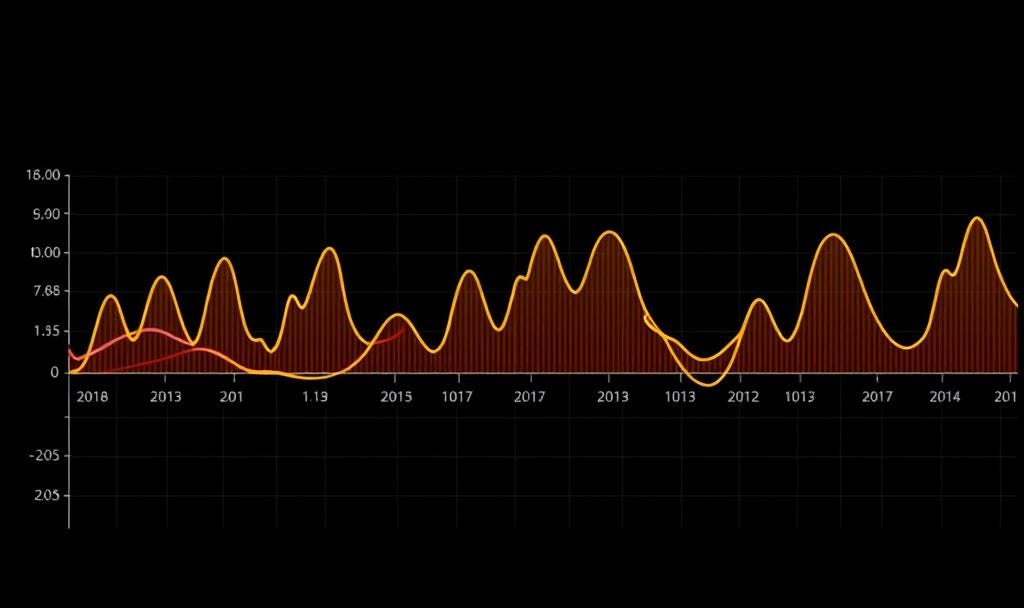

plt.show()This code block generates a line plot of the foot_traffic data over time. We use matplotlib.pyplot to create a figure and an axes object, then plot the series. Setting appropriate titles and labels is crucial for interpretability. The plt.xticks(rotation=45) and fig.autofmt_xdate() commands are particularly useful for time series plots, as they prevent date labels on the x-axis from overlapping, making the plot much more readable. From this visual inspection, we can observe if there’s an upward or downward trend, or if there are recurring spikes or dips that suggest seasonality. For our foot_traffic data, we typically observe an upward trend, indicating non-stationarity.

Testing for Stationarity: The Augmented Dickey-Fuller (ADF) Test

Stationarity is a core assumption for many time series models, including AR models. A stationary series has statistical properties (mean, variance, autocorrelation) that do not change over time. Non-stationary series often exhibit trends, seasonality, or a changing variance. The Augmented Dickey-Fuller (ADF) test is a formal statistical test used to check for stationarity.

The ADF test has the following hypotheses: * Null Hypothesis (H0): The time series has a unit root (i.e., it is non-stationary). * Alternative Hypothesis (H1): The time series does not have a unit root (i.e., it is stationary).

We reject the null hypothesis if the p-value is less than our chosen significance level (e.g., 0.05). If we reject H0, it means the series is likely stationary.

def run_adf_test(series, name='Series'):

"""

Performs the Augmented Dickey-Fuller test on a given series

and prints the results in a user-friendly format.

"""

print(f"\n--- ADF Test Results for {name} ---")

# Perform the ADF test

result = adfuller(series.dropna()) # .dropna() handles potential NaN values from differencing

# Extract and print key statistics

print(f'ADF Statistic: {result[0]:.4f}')

print(f'P-value: {result[1]:.4f}')

print('Critical Values:')

for key, value in result[4].items():

print(f' {key}: {value:.4f}')

# Interpret the p-value

if result[1] <= 0.05:

print("Conclusion: Reject the null hypothesis (H0). The series is likely stationary.")

else:

print("Conclusion: Fail to reject the null hypothesis (H0). The series is non-stationary.")

# Run ADF test on the original 'foot_traffic' series

run_adf_test(df['foot_traffic'], 'Original Foot Traffic')We define a helper function run_adf_test to encapsulate the ADF test execution and result interpretation. This makes our code cleaner and more reusable. We then apply this function to our original foot_traffic series. When running adfuller, we pass series.dropna() to ensure any potential NaN values (which can arise from operations like differencing later) are handled, although for the original series, this is not strictly necessary. The output provides the ADF statistic, the p-value, and critical values at different confidence levels. For the foot_traffic data, the p-value for the original series will typically be high (e.g., > 0.05), leading us to conclude that the series is non-stationary, consistent with our visual inspection.

Achieving Stationarity Through Differencing

If the ADF test indicates non-stationarity, we need to transform the series to make it stationary. A common and effective technique is differencing. First-order differencing calculates the difference between consecutive observations (Yt − Yt − 1). This effectively removes a linear trend. If seasonality is present, seasonal differencing can also be applied.

# Apply first-order differencing to the 'foot_traffic' series

# np.diff returns a NumPy array, so we convert it back to a pandas Series

# The index needs to be aligned, so we start from the second element of the original index

foot_traffic_diff = pd.Series(np.diff(df['foot_traffic']),

index=df.index[1:],

name='foot_traffic_differenced')

print("\nFirst 5 rows of the differenced foot traffic dataset:")

print(foot_traffic_diff.head())Here, we use np.diff() to perform first-order differencing. This operation calculates Yt − Yt − 1 for each point. Note that np.diff() returns a NumPy array, so we convert it back into a pandas Series and align its index with the original DataFrame’s index, starting from the second element since the first difference results in one less data point. The resulting foot_traffic_diff series represents the week-over-week change in foot traffic.

Visualizing the Differenced Series

After differencing, it’s good practice to visualize the transformed series to see if the trend has been removed and if the series now appears more stable around a constant mean.

# Create a figure and a set of subplots for the differenced series plot

fig, ax = plt.subplots(figsize=(12, 6))

# Plot the differenced 'foot_traffic' series

ax.plot(foot_traffic_diff)

# Set plot titles and labels

ax.set_title('Differenced Weekly Foot Traffic', fontsize=16)

ax.set_xlabel('Date', fontsize=12)

ax.set_ylabel('Change in Foot Traffic', fontsize=12)

# Rotate x-axis labels and format dates for readability

plt.xticks(rotation=45)

fig.autofmt_xdate()

plt.tight_layout()

# Display the plot

plt.show()This code plots the foot_traffic_diff series. Visually, this plot should appear much more stable, fluctuating around zero, without a clear upward or downward trend. This visual confirmation is a good preliminary indicator that differencing has been effective in achieving stationarity.

Re-testing for Stationarity (ADF Test on Differenced Data)

After differencing, we must formally re-test the series for stationarity using the ADF test to confirm that the transformation was successful.

# Run ADF test on the differenced 'foot_traffic' series

run_adf_test(foot_traffic_diff, 'Differenced Foot Traffic')We re-run our run_adf_test function, this time on the foot_traffic_diff series. For a successfully differenced series, we expect the p-value to be low (e.g., < 0.05), leading us to reject the null hypothesis and conclude that the differenced series is indeed stationary. If the series were still non-stationary, we might consider applying a second differencing step, or seasonal differencing if seasonality is suspected.

Autocorrelation Function (ACF) Analysis for AR Processes

Once a time series is stationary, the next step in identifying its underlying process (AR, MA, or ARMA) involves analyzing its autocorrelation structure. The Autocorrelation Function (ACF) measures the linear correlation of a time series with its lagged values. For an Autoregressive (AR) process, the ACF typically exhibits a slow, exponential decay or a sinusoidal pattern. This is because an AR process directly depends on its past values, which in turn depend on theirpast values, leading to a ripple effect of correlations across many lags.

# Create a figure and axes for the ACF plot

fig, ax = plt.subplots(figsize=(12, 6))

# Generate and plot the ACF for the differenced series

# lags='auto' lets the function choose an appropriate number of lags

# alpha=0.05 sets the confidence interval to 95% (alpha is significance level)

plot_acf(foot_traffic_diff, lags=40, ax=ax, alpha=0.05)

# Set plot titles and labels

ax.set_title('Autocorrelation Function (ACF) of Differenced Foot Traffic', fontsize=16)

ax.set_xlabel('Lag', fontsize=12)

ax.set_ylabel('Autocorrelation', fontsize=12)

# Add a horizontal line at y=0 for reference

ax.axhline(0, linestyle='--', color='gray', linewidth=0.7)

# Display the plot

plt.show()This code generates and displays the ACF plot for our stationary foot_traffic_diff series. The plot_acf function from statsmodels handles the calculations and plotting. The blue shaded area represents the confidence interval; any bars extending beyond this area are considered statistically significant. For an AR process, we look for a pattern where the autocorrelation values gradually decrease, either smoothly or with oscillations, rather than abruptly cutting off after a few lags (which would be characteristic of an MA process). Observing this exponential decay in the ACF plot for our differenced foot traffic data strongly suggests that an Autoregressive component is present in the series.

Partial Autocorrelation Function (PACF) Analysis for AR Order

While the ACF helps us identify the presence of an AR component (through its exponential decay), it does not directly tell us the order p of the AR process. For this, we turn to the Partial Autocorrelation Function (PACF).

The PACF measures the correlation between an observation Yt and an observation at a previous lag Yt − k, after removing the linear dependence of Yt on all intermediate observations (Yt − 1, Yt − 2, …, Yt − k + 1). In simpler terms, PACF tells us the direct correlation between Yt and Yt − k, isolating the influence of the specific lag.

For an Autoregressive process of order p, denoted AR(p): * The PACF will show significant spikes at lags up to p. * The PACF will cut off to zero (or become non-significant) after lag p.

This is the inverse behavior compared to MA processes, where the ACF cuts off and the PACF decays. Understanding this distinction is crucial for correctly identifying model orders.

# Create a figure and axes for the PACF plot

fig, ax = plt.subplots(figsize=(12, 6))

# Generate and plot the PACF for the differenced series

# lags='auto' lets the function choose an appropriate number of lags

# alpha=0.05 sets the confidence interval to 95%

plot_pacf(foot_traffic_diff, lags=40, ax=ax, alpha=0.05)

# Set plot titles and labels

ax.set_title('Partial Autocorrelation Function (PACF) of Differenced Foot Traffic', fontsize=16)

ax.set_xlabel('Lag', fontsize=12)

ax.set_ylabel('Partial Autocorrelation', fontsize=12)

# Add a horizontal line at y=0 for reference

ax.axhline(0, linestyle='--', color='gray', linewidth=0.7)

# Display the plot

plt.show()This crucial code block generates the PACF plot for our stationary foot_traffic_diff series. Similar to plot_acf, plot_pacf calculates and plots the partial autocorrelations. The interpretation for determining the AR order p is straightforward: identify the last significant spike (the last bar extending beyond the blue confidence interval) before the PACF values drop to non-significant levels.

For example, if the PACF shows significant spikes at lag 1 and lag 2, but then drops into the confidence interval from lag 3 onwards, this suggests an AR(2) process. The p value determined from the PACF plot will be used as the p parameter when fitting an AR model (e.g., AR(p) or ARIMA(p, d, 0)), where d is the order of differencing already applied.

Conclusion: Finding the AR Order

To summarize the process of finding the order p of a stationary autoregressive process:

Ensure Stationarity: Visualize the time series and perform the Augmented Dickey-Fuller (ADF) test. If non-stationary (e.g., high p-value for ADF), apply differencing (e.g.,

np.diff) until the series becomes stationary, then re-test with ADF. The number of differencing steps becomes thedparameter in an ARIMA model.ACF Analysis: Plot the Autocorrelation Function (ACF) of the stationary series. If the ACF exhibits a slow, exponential decay or sinusoidal pattern, it indicates the presence of an Autoregressive component.

PACF Analysis: Plot the Partial Autocorrelation Function (PACF) of the stationary series. The order

pof the AR process is determined by the lag where the PACF plot cuts off sharply to non-significant values (i.e., the last significant spike before values fall within the confidence interval).

This systematic approach, combining visual inspection with formal statistical tests and the distinct patterns of ACF and PACF plots, provides a robust methodology for identifying the appropriate order of an AR model for forecasting.

The Partial Autocorrelation Function (PACF)

In the previous section, we established the mathematical definition of an Autoregressive (AR) process, where the current value of a time series is a linear combination of its past values and a white noise error term. A critical step in building an AR model is determining its order, p, which represents the number of past observations that directly influence the current observation. While the Autocorrelation Function (ACF) helps identify Moving Average (MA) processes by showing a sharp cutoff, it does not exhibit the same clear behavior for AR processes. For AR models, the ACF tends to decay gradually, making it difficult to pinpoint the exact order p.

This is where the Partial Autocorrelation Function (PACF) becomes indispensable. The PACF is specifically designed to help us identify the order of an AR process.

Understanding What the PACF Measures

The Partial Autocorrelation Function (PACF) measures the linear relationship between an observation and a lagged observation in a time series, after removing the linear influence of the observations at intermediate lags.

To illustrate this distinction from the ACF: * ACF at lag k: Measures the correlation between Y_t and Y_{t-k}. This correlation includes both the direct effect of Y_{t-k} on Y_t and any indirect effects that pass through the intermediate observations Y_{t-1}, Y_{t-2}, ..., Y_{t-k+1}. * PACF at lag k: Measures the direct correlation between Y_t and Y_{t-k}, effectively “partialing out” or removing the influence of Y_{t-1}, Y_{t-2}, ..., Y_{t-k+1}.

Consider an AR(1) process: Y_t = \phi_1 Y_{t-1} + \epsilon_t. * The ACF at lag 1 will be strong, reflecting the direct relationship. * The ACF at lag 2 will also be non-zero, but this is because Y_t is influenced by Y_{t-1}, and Y_{t-1} is influenced by Y_{t-2}. So, Y_{t-2} has an indirect effect on Y_t through Y_{t-1}. * The PACF at lag 1 will show a strong correlation, as expected. * The PACF at lag 2, however, will be close to zero (non-significant). This is because once the effect of Y_{t-1} is removed, Y_{t-2} has no direct influence on Y_t in an AR(1) process.

This “partialing out” is crucial because for a pure AR(p) process, only the first p lags have a direct influence on the current value. Lags beyond p have only indirect influence, which the PACF removes, leading to a clear “cutoff” behavior.

Simulating AR(p) Processes and Their PACF

To solidify our understanding, let’s simulate some known AR(p) processes and observe their PACF plots. We’ll use the statsmodels library in Python, which provides powerful tools for time series analysis.

First, we need to import the necessary libraries:

import numpy as np

import matplotlib.pyplot as plt

from statsmodels.tsa.arima_process import ArmaProcess

from statsmodels.graphics.tsaplots import plot_pacf

import pandas as pd # For loading the foot traffic data laterHere, numpy is used for numerical operations and array creation, matplotlib.pyplot for plotting, ArmaProcess from statsmodels for simulating ARMA processes, and plot_pacf for generating PACF plots. pandas will be used for our real-world data example.

To ensure our simulations are reproducible, we’ll set a random seed:

# Set a random seed for reproducibility

np.random.seed(42)Setting np.random.seed() ensures that every time you run the code, the “random” numbers generated by numpy are the same, leading to identical simulation results. This is vital for debugging and sharing your analysis.

Simulating an AR(1) Process

An AR(1) process is defined by Y_t = c + \phi_1 Y_{t-1} + \epsilon_t. Let’s simulate one with a coefficient \phi_1 = 0.7.

# Define the AR(1) coefficients.

# IMPORTANT: statsmodels' ArmaProcess expects AR coefficients to be negated.

# If your model is Y_t = phi_1*Y_t-1 + error_t, then the AR part is (1 - phi_1*L)*Y_t.

# The 'ar' array should contain [phi_1, phi_2, ...].

# So, if phi_1 = 0.7, we pass [0.7].

ar_coeffs_ar1 = np.array([0.7]) # Phi_1 = 0.7

ma_coeffs_ar1 = np.array([1]) # No MA component, so it's just [1]

# Create an ArmaProcess object for AR(1)

# The 'ar' argument expects coefficients in the form [phi_1, phi_2, ...].

# The 'ma' argument expects coefficients for MA part (we set to [1] for no MA)

ar1_process = ArmaProcess(ar=ar_coeffs_ar1, ma=ma_coeffs_ar1)

# Generate a sample time series from the AR(1) process

n_samples = 1000

ar1_series = ar1_process.generate_sample(nsample=n_samples)Important Note on ArmaProcess Coefficients: A common point of confusion when using statsmodels.tsa.arima_process.ArmaProcess is how to specify the ar coefficients. The ArmaProcess class internally represents the AR polynomial as 1 - \phi_1 L - \phi_2 L^2 - .... Therefore, if your AR model is formulated as Y_t = \phi_1 Y_{t-1} + \phi_2 Y_{t-2} + \epsilon_t, you should pass the coefficients [\phi_1, \phi_2, ...] directly to the arargument. The class handles the negation internally. Our ar_coeffs_ar1 = np.array([0.7]) correctly represents \phi_1 = 0.7.

Now, let’s plot the PACF of our simulated AR(1) series:

# Plot the PACF for the simulated AR(1) series

fig, ax = plt.subplots(figsize=(10, 5))

plot_pacf(ar1_series, ax=ax, lags=20, method='ywm') # Using 'ywm' method for consistency

ax.set_title('PACF of a Simulated AR(1) Process')

ax.set_xlabel('Lag')

ax.set_ylabel('Partial Autocorrelation')

plt.grid(True)

plt.tight_layout()

plt.show()The plot_pacf function from statsmodels.graphics.tsaplots is used here. We specify lags=20 to view the PACF up to 20 lags. The method='ywm'specifies the Yule-Walker method for estimating PACF, which is a common choice.

Interpreting the PACF Plot’s Shaded Region: You’ll notice a shaded blue region around zero in the PACF plot. This region represents the confidence interval(typically 95%) for the partial autocorrelation coefficients. Any spike that extends beyond this shaded region is considered statistically significant at the chosen confidence level. If a spike falls within the shaded region, it means the partial autocorrelation at that lag is not significantly different from zero. For an AR(p) process, we expect p significant spikes, followed by a sharp drop into the non-significant region.

For the AR(1) process, you should observe a single significant spike at lag 1, and then all subsequent lags should fall within the confidence interval, indicating a sharp cutoff.

Simulating an AR(2) Process

An AR(2) process is defined as Y_t = c + \phi_1 Y_{t-1} + \phi_2 Y_{t-2} + \epsilon_t. Let’s simulate one with coefficients \phi_1 = 0.5 and\phi_2 = 0.3.

# Define the AR(2) coefficients

# Phi_1 = 0.5, Phi_2 = 0.3

ar_coeffs_ar2 = np.array([0.5, 0.3])

ma_coeffs_ar2 = np.array([1]) # No MA component

# Create an ArmaProcess object for AR(2)

ar2_process = ArmaProcess(ar=ar_coeffs_ar2, ma=ma_coeffs_ar2)

# Generate a sample time series from the AR(2) process

ar2_series = ar2_process.generate_sample(nsample=n_samples)Here, we simply provide an array with two coefficients to the ar argument, representing \phi_1 and \phi_2.

Now, let’s plot the PACF of our simulated AR(2) series:

# Plot the PACF for the simulated AR(2) series

fig, ax = plt.subplots(figsize=(10, 5))

plot_pacf(ar2_series, ax=ax, lags=20, method='ywm')

ax.set_title('PACF of a Simulated AR(2) Process')

ax.set_xlabel('Lag')

ax.set_ylabel('Partial Autocorrelation')

plt.grid(True)

plt.tight_layout()

plt.show()For the AR(2) process, you should see two significant spikes at lags 1 and 2, and then the PACF should cut off, with all subsequent lags falling within the confidence interval. This behavior confirms that the PACF is an effective tool for identifying the order of a pure AR process.

Confirming Stationarity of Simulated AR Processes

Before applying PACF, it’s crucial that our time series is stationary. For our simulated AR processes, we defined them with coefficients that ensure stationarity. However, it’s good practice to verify this. We can use the Augmented Dickey-Fuller (ADF) test, which we discussed in previous sections.

from statsmodels.tsa.stattools import adfuller

# Perform ADF test on the simulated AR(2) series

adf_result_ar2 = adfuller(ar2_series)

print("ADF Test Results for Simulated AR(2) Series:")

print(f"ADF Statistic: {adf_result_ar2[0]:.2f}")

print(f"p-value: {adf_result_ar2[1]:.3f}")

print("Critical Values:")

for key, value in adf_result_ar2[4].items():

print(f" {key}: {value:.2f}")

# Interpret the result

if adf_result_ar2[1] <= 0.05:

print("\nConclusion: The p-value is less than or equal to 0.05. We reject the null hypothesis.")

print("This suggests the simulated AR(2) series is stationary.")

else:

print("\nConclusion: The p-value is greater than 0.05. We fail to reject the null hypothesis.")

print("This suggests the simulated AR(2) series is non-stationary.")Running this code for the simulated AR(2) series should yield a very small p-value (typically much less than 0.05), confirming its stationarity. This reinforces the principle that PACF analysis is most reliable when applied to stationary data.

A special case worth noting is the random walk, which can be seen as an AR(1) process where \phi_1 = 1. In this specific scenario, the \phi_1 coefficient leads to a “unit root,” making the process non-stationary. A unit root indicates that shocks to the system persist indefinitely, causing the series to wander without a fixed mean or variance. This is why a random walk’s ACF decays very slowly and its PACF might also not show a clear cutoff, highlighting the importance of ensuring stationarity before applying these diagnostic tools.

Applying PACF to Real-World Data: Foot Traffic Example

Now, let’s apply our knowledge of PACF to the foot_traffic dataset. Recall from previous sections that we likely had to difference the foot_traffic series to achieve stationarity. We will assume that differenced_foot_traffic is already available from previous steps. If not, you would typically load your data and perform differencing as necessary.

# Load the foot_traffic data (assuming it's in a CSV or similar format)

# This part assumes you have 'foot_traffic.csv' or similar from previous sections.

# If your data is already loaded and differenced, you can skip this block.

try:

foot_traffic_df = pd.read_csv('foot_traffic.csv', index_col='Date', parse_dates=True)

# Perform first-order differencing if not already done

differenced_foot_traffic = foot_traffic_df['Foot_Traffic'].diff().dropna()

print("Foot traffic data loaded and differenced.")

except FileNotFoundError:

print("foot_traffic.csv not found. Please ensure the data file is in the correct directory.")

# Create dummy data for demonstration if file not found

np.random.seed(42)

dummy_data = np.cumsum(np.random.randn(200)) + 50 # Simulate non-stationary data

differenced_foot_traffic = pd.Series(dummy_data).diff().dropna()

print("Using dummy differenced data for demonstration.")

# Plot the PACF for the differenced foot traffic series

fig, ax = plt.subplots(figsize=(12, 6))

plot_pacf(differenced_foot_traffic, ax=ax, lags=20, method='ywm')

ax.set_title('PACF of Differenced Weekly Foot Traffic')

ax.set_xlabel('Lag')

ax.set_ylabel('Partial Autocorrelation')

plt.grid(True)

plt.tight_layout()

plt.show()After running this code, carefully examine the PACF plot for the differenced_foot_traffic series. Look for a lag p where the partial autocorrelations are significant (extend beyond the shaded region) for lags 1 through p, and then abruptly become non-significant (fall within the shaded region) for lags greater than p.

Based on the typical behavior of foot traffic data (which often shows dependence on recent past values), you might observe a significant spike at lag 1 and possibly lag 2, with subsequent lags falling into the non-significant region. For instance, if only the spike at lag 1 is significant, it suggests an AR(1) process. If spikes at both lag 1 and lag 2 are significant, followed by non-significant spikes, it suggests an AR(2) process.

This visual inspection is a crucial step in the Box-Jenkins methodology for time series model identification.

Broader Practical Applications

The identification of AR processes using PACF is a fundamental skill in time series analysis and has wide-ranging applications across various domains:

Economics: Modeling GDP growth, inflation rates, or unemployment, where current values are often influenced by values from previous quarters or years.

Finance: Analyzing stock returns or volatility, although financial time series often exhibit more complex behaviors (e.g., ARCH/GARCH effects).

Environmental Science: Forecasting temperature, rainfall, or pollution levels, which tend to have strong autoregressive components.

Engineering: Predicting sensor readings in control systems or analyzing signal processing data.

By mastering the interpretation of PACF plots, you gain a powerful tool for understanding the underlying structure of many real-world time series and building accurate forecasting models.

Forecasting an Autoregressive Process

Having defined what an autoregressive process is and explored methods for identifying its order, the next crucial step is to apply this knowledge to forecast future values of a time series. This section will guide you through a complete end-to-end workflow for forecasting with an AR(p) model, including data preparation, implementing a robust rolling forecast strategy, fitting the model, evaluating its performance, and transforming the forecasts back to their original scale for practical interpretability.

Data Preparation for Time Series Forecasting

Before any model fitting can occur, the data must be appropriately prepared. This involves ensuring stationarity (which we addressed in previous sections through differencing) and splitting the data into training and testing sets. A proper train-test split is paramount in time series forecasting to prevent data leakage and provide an honest evaluation of the model’s out-of-sample performance.

For our example, we will continue using the foot_traffic.csv dataset, which represents average weekly foot traffic. Recall that we previously determined this series was non-stationary and required first-order differencing to achieve stationarity.

First, let’s load the data and apply the differencing transformation. We will also set a random seed for reproducibility.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from statsmodels.tsa.statespace.sarimax import SARIMAX

from sklearn.metrics import mean_squared_error, mean_absolute_error

# Set a random seed for reproducibility across runs

np.random.seed(42)

# Load the dataset

file_path = 'foot_traffic.csv'

df = pd.read_csv(file_path, index_col='Date', parse_dates=True)

# Apply first-order differencing

df['Foot_traffic_diff'] = df['Foot_traffic'].diff(periods=1)

# Drop the first NaN value resulting from differencing

df_diff = df.dropna()

# Display the first few rows of the differenced data

print(df_diff.head())The code above initializes our environment by importing necessary libraries and setting a random seed to ensure that any stochastic processes (like model initialization or optimization) yield consistent results. We then load our foot_traffic.csv dataset, parsing the ‘Date’ column as the index. The core transformation here is applying a first-order difference using df['Foot_traffic'].diff(periods=1), which calculates the difference between consecutive observations. This is crucial because our previous analysis identified that the original series was non-stationary, and differencing helps stabilize the mean by removing trends. The resulting NaN from the first difference is dropped, leaving us with a stationary series df_diff ready for modeling.

Next, we split the differenced data into training and testing sets. A common practice in time series forecasting is to use a fixed number of recent observations for the test set, representing a specific period for which forecasts are needed. For weekly data, a 52-week test set is often chosen to represent a full year of forecasting, allowing for evaluation across an entire annual cycle.

# Define the size of the test set (e.g., 52 weeks for a year)

test_size = 52

# Split the differenced data into training and testing sets

train_diff = df_diff[:-test_size]

test_diff = df_diff[-test_size:]

# Also split the original data for later inverse transformation visualization

train_original = df['Foot_traffic'][:-test_size]

test_original = df['Foot_traffic'][-test_size:]

print(f"Differenced Training set size: {len(train_diff)}")

print(f"Differenced Test set size: {len(test_diff)}")

print(f"Original Training set size: {len(train_original)}")

print(f"Original Test set size: {len(test_original)}")Here, we define test_size as 52, signifying one year’s worth of weekly data for our test set. We then perform a chronological split: train_diff contains all data points except the last 52, and test_diff contains only the last 52. This ensures that our model is trained only on past data and evaluated on unseen future data. We also create corresponding train_original and test_original splits of the non-differenced data, which will be essential when we perform the inverse transformation of our forecasts.

Visualizing the train-test split helps confirm that the separation is correct and provides a visual context of the data used for training versus evaluation.

# Plotting the train and test sets for both original and differenced series

fig, axes = plt.subplots(2, 1, figsize=(14, 10))

# Plot for original series

axes[0].plot(train_original.index, train_original, label='Training Data (Original)', color='blue')

axes[0].plot(test_original.index, test_original, label='Test Data (Original)', color='orange')

axes[0].set_title('Original Foot Traffic Data: Train vs. Test Split')

axes[0].set_ylabel('Foot Traffic')

axes[0].legend()

axes[0].grid(True)

# Highlight the test period

axes[0].axvspan(test_original.index.min(), test_original.index.max(), color='gray', alpha=0.2, label='Test Period')

# Plot for differenced series

axes[1].plot(train_diff.index, train_diff['Foot_traffic_diff'], label='Training Data (Differenced)', color='green')

axes[1].plot(test_diff.index, test_diff['Foot_traffic_diff'], label='Test Data (Differenced)', color='red')

axes[1].set_title('Differenced Foot Traffic Data: Train vs. Test Split')

axes[1].set_xlabel('Date')

axes[1].set_ylabel('Differenced Foot Traffic')

axes[1].legend()

axes[1].grid(True)

# Highlight the test period

axes[1].axvspan(test_diff.index.min(), test_diff.index.max(), color='gray', alpha=0.2, label='Test Period')

plt.tight_layout()

plt.show()This visualization generates two subplots. The top subplot shows the original foot traffic data, clearly distinguishing between the blue training data and the orange test data. This helps us see the overall trend and seasonality (if any) in the full series. The bottom subplot displays the differenced data, where the series appears more stationary, with the green training data and red test data. The gray shaded area explicitly highlights the test period, making it immediately clear which part of the data will be used for forecasting evaluation.

Introducing the SARIMAX Model for Autoregressive Processes

For fitting autoregressive (AR) models in Python, the statsmodels library provides the SARIMAX class (Seasonal Autoregressive Integrated Moving-Average with eXogenous regressors). While its name suggests a more complex model, SARIMAX is highly versatile and can be configured to represent simpler models like a pure AR(p) process.

When using SARIMAX for an AR(p) model, we specify the order parameter as a tuple (p, d, q). * p: The order of the Autoregressive (AR) part. This is the number of lagged observations to include in the model. * d: The order of differencing (I for Integrated). This specifies the number of nonseasonal differences needed to make the series stationary. * q: The order of the Moving Average (MA) part. This is the number of lagged forecast errors in the prediction equation.

Since we have already performed first-order differencing on our data (meaning our input data df_diff is already stationary), we set d=0. Also, because we are specifically building a pure AR model and not including any Moving Average components, we set q=0. Therefore, for an AR(3) model on pre-differenced data, the order parameter will be (3, 0, 0).

The disp=False parameter in the model.fit() method is used to suppress the convergence messages that statsmodels prints during the optimization process. This keeps the output cleaner, especially within loops.

Forecasting Strategy: Rolling Window Prediction

Time series forecasting often employs a “rolling window” or “walk-forward” validation strategy. Instead of training a model once on a fixed training set and predicting the entire test set, a rolling forecast involves: 1. Training the model on an initial segment of the data. 2. Making a prediction for the next one or more steps. 3. Adding the actual observed value(s) to the training set. 4. Retraining the model and repeating the process.

This approach is crucial for several reasons: * Adaptability to Evolving Patterns: Economic conditions, consumer behavior, and other factors can change over time. Retraining the model with the latest available data allows it to adapt to recent trends and shifts, potentially improving forecast accuracy. * Realistic Evaluation: It mimics how forecasts are made in a real-world scenario where new data becomes available over time and models are periodically updated. * Robustness: It provides a more robust estimate of model performance than a single train-test split, as it evaluates the model across multiple points in time.

Computational Considerations: The main drawback of rolling forecasts is their computational cost. Retraining the model at each step can be very time-consuming, especially for large datasets, complex models, or long test periods. For practical applications, a balance must be struck between the desired adaptability and computational feasibility. This might involve retraining less frequently (e.g., weekly or monthly) or using simpler models for very frequent updates.

Implementing the Rolling Forecast Function

We will create a generalized rolling_forecast function that can apply different forecasting methods: a historical mean baseline, a last-value baseline, and our AR(3) model. This function will iterate through the test set, updating the training data and making predictions at each step.

Let’s define the function’s structure and the initial setup for handling different forecasting methods.

def rolling_forecast(

train_data: pd.Series,

test_data: pd.Series,

method: str,

order: tuple = (0, 0, 0),

window: int = 1

) -> np.ndarray:

"""

Performs rolling forecasts using specified method (mean, last, AR).Args:

train_data (pd.Series): Initial training data.

test_data (pd.Series): Test data for which to generate forecasts.

method (str): Forecasting method ('mean', 'last', 'AR').

order (tuple): ARIMA order (p, d, q) for AR method. Defaults to (0,0,0).

window (int): Number of steps to forecast at each iteration. Defaults to 1.

Returns:

np.ndarray: Array of generated forecasts.

"""

history = [x for x in train_data] # Convert initial training data to a list for easy appending

predictions = []

# Loop through the test data, making predictions one 'window' at a time

for i in range(0, len(test_data), window):

# Determine the current actual values for the window

actual_window = test_data.iloc[i : i + window]

# Ensure we don't try to predict beyond the test set

if len(actual_window) == 0:

break

# --- Forecasting Logic based on method ---

if method == 'mean':

# Predict the mean of the current history for each step in the window

forecast_values = [np.mean(history)] * len(actual_window)

elif method == 'last':

# Predict the last observed value in history for each step in the window

forecast_values = [history[-1]] * len(actual_window)

elif method == 'AR':

# AR model fitting and prediction

try:

# Fit SARIMAX model to the current history

# We use SARIMAX(order=(p,d,q)) where d=0, q=0 for a pure AR(p) model

model = SARIMAX(history, order=order, enforce_invertibility=False, enforce_stationarity=False)

model_fit = model.fit(disp=False) # disp=False suppresses convergence output

# Generate predictions for the next 'window' steps

# start and end define the range relative to the *full* dataset length

# Since history grows, 'start' is the current length of history

# and 'end' is 'history length + window - 1'

forecast_object = model_fit.get_prediction(start=len(history), end=len(history) + len(actual_window) - 1)

forecast_values = forecast_object.predicted_mean.values

except Exception as e:

print(f"Error fitting AR model at step {i}: {e}")

forecast_values = [np.nan] * len(actual_window) # Fill with NaN on error

else:

raise ValueError("Invalid method. Choose 'mean', 'last', or 'AR'.")

# Add the predicted values to our list of all predictions

predictions.extend(forecast_values)

# Update history with the actual values from the current window of the test set

history.extend(actual_window.tolist())

return np.array(predictions)

This rolling_forecast function is the heart of our forecasting strategy. It takes train_data, test_data, the method (e.g., ‘mean’, ‘last’, ‘AR’), the AR order, and the window size (how many steps to predict at once before updating history).

The function initializes history with the train_data and an empty predictions list. It then loops through the test_data in increments of window. Inside the loop, it branches based on the method: * If method == 'mean': It calculates the mean of the current history and uses it as the forecast for the entire window. This serves as a simple but effective baseline. * If method == 'last': It takes the last value from the current history and uses it as the forecast. This is another common baseline, particularly useful for random walk-like series. * If method == 'AR': This is where the SARIMAX model comes into play. It fits a SARIMAXmodel with the specified order (e.g., (3,0,0)) to the current history. enforce_invertibility=False and enforce_stationarity=False are set to provide more flexibility during model fitting, especially when dealing with smaller history windows in a rolling forecast. After fitting, model_fit.get_prediction() is used to generate forecasts. The start and end parameters for get_prediction are crucial: they specify the indices relative to the full data series (including current history). Since history grows, start is len(history) (the next point after the current history), and end is len(history) + len(actual_window) - 1 (the last point of the prediction window). disp=False keeps the console clean.

After generating forecasts for the current window, these forecasts are added to the predictions list. Crucially, the actual observed values from the test_datafor that window are then appended to the history. This “walk-forward” mechanism ensures that the model is always trained on the most up-to-date information available, mimicking a real-world forecasting scenario.

Executing the Rolling Forecasts

Now we can use our rolling_forecast function to generate predictions for our test set using the different methods.

# Generate rolling forecasts for different methods

ar_order = (3, 0, 0) # AR(3) model: p=3, d=0 (already differenced), q=0 (no MA component)

window_size = 1 # Forecast one step ahead at a time

# Forecast using Historical Mean baseline

forecasts_mean_diff = rolling_forecast(train_diff['Foot_traffic_diff'],

test_diff['Foot_traffic_diff'],

method='mean',

window=window_size)

# Forecast using Last Value baseline

forecasts_last_diff = rolling_forecast(train_diff['Foot_traffic_diff'],

test_diff['Foot_traffic_diff'],

method='last',

window=window_size)

# Forecast using AR(3) model

forecasts_ar_diff = rolling_forecast(train_diff['Foot_traffic_diff'],

test_diff['Foot_traffic_diff'],

method='AR',

order=ar_order,

window=window_size)

print(f"Number of AR(3) differenced forecasts generated: {len(forecasts_ar_diff)}")In this step, we define our ar_order as (3, 0, 0) for an AR(3) model on differenced data and set a window_size of 1, meaning we’ll predict one step ahead at each iteration. We then call rolling_forecast three times: once for the ‘mean’ baseline, once for the ‘last’ value baseline, and finally for our ‘AR’ method. The output confirms that the correct number of forecasts (equal to the test set size) has been generated for the AR(3) model.

Evaluating Forecasts on Differenced Data

Before undifferencing, it’s useful to visualize and evaluate the forecasts on the differenced scale. This directly assesses how well the model captures the short-term changes in the series.

# Plotting differenced actuals vs. differenced forecasts

plt.figure(figsize=(14, 6))

plt.plot(test_diff.index, test_diff['Foot_traffic_diff'], label='Actual Differenced Foot Traffic', color='blue')

plt.plot(test_diff.index, forecasts_mean_diff[:len(test_diff)], label='Mean Baseline Forecast (Differenced)', color='purple', linestyle='--')

plt.plot(test_diff.index, forecasts_last_diff[:len(test_diff)], label='Last Value Baseline Forecast (Differenced)', color='orange', linestyle='--')

plt.plot(test_diff.index, forecasts_ar_diff[:len(test_diff)], label='AR(3) Model Forecast (Differenced)', color='red')

plt.title('Differenced Foot Traffic: Actual vs. Forecasts (Test Set)')

plt.xlabel('Date')

plt.ylabel('Differenced Foot Traffic')

plt.legend()

plt.grid(True)

plt.show()

# Calculate Mean Squared Error (MSE) for differenced forecasts

mse_mean_diff = mean_squared_error(test_diff['Foot_traffic_diff'], forecasts_mean_diff[:len(test_diff)])

mse_last_diff = mean_squared_error(test_diff['Foot_traffic_diff'], forecasts_last_diff[:len(test_diff)])

mse_ar_diff = mean_squared_error(test_diff['Foot_traffic_diff'], forecasts_ar_diff[:len(test_diff)])

print(f"MSE (Differenced) - Mean Baseline: {mse_mean_diff:.3f}")

print(f"MSE (Differenced) - Last Value Baseline: {mse_last_diff:.3f}")

print(f"MSE (Differenced) - AR(3) Model: {mse_ar_diff:.3f}")The plot visually compares the actual differenced foot traffic against the forecasts generated by the mean baseline, last value baseline, and the AR(3) model. This allows for a qualitative assessment of how well each method tracks the fluctuations. Quantitatively, we calculate the Mean Squared Error (MSE) for each forecasting method on the differenced data. MSE penalizes larger errors more heavily and is useful for comparing model performance. A lower MSE indicates a better fit to the differenced series. From the output, we can observe whether the AR(3) model significantly outperforms the simple baselines in capturing the dynamics of the differenced series.

Inverse Transformation: Undifferencing Forecasts

For business interpretation and practical use, forecasts need to be on the original scale of the data. Since we differenced the data to achieve stationarity, we must now reverse this transformation, a process known as “undifferencing” or “inverse differencing.”

The inverse of first-order differencing is a cumulative sum, but it requires a starting point. The starting point for undifferencing a one-step-ahead forecast is the last known original value from the training set. For subsequent forecasts, the previous undifferenced forecast acts as the starting point.

Let’s create a helper function for this common operation.

def undifference_forecasts(

original_train_last_value: float,

differenced_forecasts: np.ndarray

) -> np.ndarray:

"""

Undifferences a series of one-step-ahead forecasts.Args:

original_train_last_value (float): The last value of the original (non-differenced)

training series. This serves as the starting point.

differenced_forecasts (np.ndarray): Array of forecasts made on the differenced series.

Returns:

np.ndarray: Array of forecasts transformed back to the original scale.

"""

# Create a list starting with the last known original value

undifferenced_series = [original_train_last_value]

# Iteratively add each differenced forecast to the previous undifferenced value

for diff_forecast in differenced_forecasts:

undifferenced_series.append(undifferenced_series[-1] + diff_forecast)

# Return the series, excluding the initial seed value

return np.array(undifferenced_series[1:])