Coding an RNN for Financial Time Series Analysis

Unlocking Insights from the S&P 500 with LSTM Neural Networks

Download the code by clicking the link at the end of this article.

In this comprehensive article, we delve into the fascinating world of Recurrent Neural Networks (RNNs), particularly focusing on Long Short-Term Memory (LSTM) models, as applied to financial time series data. Using Python’s robust libraries and TensorFlow’s powerful capabilities, we explore the process of analyzing and forecasting the S&P 500 Index. From data preprocessing to model training and evaluation, we provide a step-by-step guide on how to leverage these advanced techniques for practical financial analysis. Our journey encompasses everything from suppressing Python warnings for cleaner output, handling data with Pandas and NumPy, to the intricacies of building, training, and evaluating LSTM models. Whether you’re a data scientist, a financial analyst, or simply a tech enthusiast, this article offers valuable insights into the intersection of finance and machine learning.

import warnings
warnings.filterwarnings('ignore')

This code suppresses Python warnings. Python issues warnings to flag situations that do not stop execution but deserve attention: the program may keep running while producing results the user does not expect, or it may rely on a deprecated feature that a future version of the language or a library will drop. The call above disables all warnings. Developers do this for various reasons. When deploying applications, they may not want end users to see non-critical warnings. When a library emits frequent warnings that are irrelevant to the task at hand, ignoring them keeps the output clean.
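A blanket filter also hides warnings you may want to see later. As a minimal sketch of a narrower alternative, the standard library's warnings.catch_warnings context manager silences warnings only inside a block. The function noisy_sqrt below is a hypothetical stand-in for a library call that warns.

```python
import warnings


def noisy_sqrt(x):
    """Hypothetical helper that emits a DeprecationWarning before returning."""
    warnings.warn("noisy_sqrt is deprecated", DeprecationWarning)
    return x ** 0.5


# Suppress warnings only inside this block; the previous filters are restored on exit.
with warnings.catch_warnings():
    warnings.simplefilter("ignore")
    quiet_result = noisy_sqrt(16.0)

# In a second block, record warnings instead to confirm they are raised again.
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    loud_result = noisy_sqrt(9.0)

print(quiet_result, loud_result, len(caught))
```

The global filter used in the article is simpler for a notebook, while the scoped form keeps unrelated warnings visible.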

%matplotlib inline

from pathlib import Path

import numpy as np
import pandas as pd
import pandas_datareader.data as web
from scipy.stats import spearmanr
from sklearn.metrics import mean_squared_error
from sklearn.preprocessing import MinMaxScaler

import tensorflow as tf
from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, LSTM
from tensorflow import keras

import matplotlib.pyplot as plt
import seaborn as sns

This block sets up the notebook and imports the libraries used throughout. The %matplotlib inline magic makes plots render directly in the notebook. pathlib provides object-oriented filesystem paths, used here for saving results to local files. NumPy and pandas handle numerical computation and data analysis, and pandas_datareader fetches data from online sources, in this case financial market data.

The scipy.stats import provides spearmanr, a rank correlation measure for statistical evaluation. From scikit-learn come mean_squared_error for evaluation and MinMaxScaler for preprocessing; the scaler rescales data to a fixed range, which is often a prerequisite for training machine learning models. TensorFlow and Keras are used to build and train the neural network: a Sequential model with an LSTM layer, a type of recurrent layer suited to time series data.

The ModelCheckpoint and EarlyStopping callbacks save the best model and stop training once it stops improving. matplotlib and seaborn are imported to visualize the data and the results, which helps in understanding the data and communicating the findings. Together, these imports cover data fetching, preprocessing, modeling, and visualization for a time series machine learning task.

gpu_devices = tf.config.experimental.list_physical_devices('GPU')
if gpu_devices:
    print('Using GPU')
    tf.config.experimental.set_memory_growth(gpu_devices[0], True)
else:
    print('Using CPU')

This snippet configures the hardware the script will run on, GPU or CPU. It asks TensorFlow for the list of available GPU devices. If a GPU is found, it prints a confirmation message and enables "memory growth" on the first detected device. With memory growth enabled, TensorFlow allocates GPU memory only as it is needed at runtime instead of reserving all of it at once, which leaves resources free for other applications. If no GPU is found, the code falls back to the CPU. GPUs are optimized for the parallel computations that dominate neural network training, so a CPU will typically be slower for these tasks.

results_path = Path('results', 'univariate_time_series')
if not results_path.exists():
    results_path.mkdir(parents=True)

The variable results_path holds a Path object for the directory 'univariate_time_series' inside the directory 'results'. The code checks whether this path exists in the filesystem and, if it does not, creates it. Because parents=True is set, any missing parent directories (here 'results') are created as well. In short, the code creates the directory structure that will store the model and figures produced later.
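The existence check and the creation can also be combined into one call. The sketch below, which runs inside a temporary directory so it leaves nothing behind, uses the exist_ok argument of Path.mkdir; the temporary location is an assumption made only for this illustration.

```python
import tempfile
from pathlib import Path

# Work inside a throwaway directory so the sketch leaves no files behind.
with tempfile.TemporaryDirectory() as tmp:
    results_path = Path(tmp, 'results', 'univariate_time_series')

    # parents=True creates 'results' as well; exist_ok=True makes repeat calls harmless.
    results_path.mkdir(parents=True, exist_ok=True)
    results_path.mkdir(parents=True, exist_ok=True)  # second call does not raise

    created = results_path.is_dir()

print(created)
```

Either form works; the single call avoids a gap between checking for the directory and creating it.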

Get Data

sp500 = web.DataReader('SP500', 'fred', start='2010', end='2020').dropna()
ax = sp500.plot(title='S&P 500',
                legend=False,
                figsize=(14, 4),
                rot=0)
ax.set_xlabel('')
sns.despine()

This code downloads S&P 500 index values for 2010 to 2020 and plots them. The S&P 500 tracks the stock performance of 500 large companies listed on U.S. stock exchanges. The data request goes to an online source, FRED (Federal Reserve Economic Data), through pandas_datareader. Missing (NaN, "Not a Number") entries are then dropped to prevent errors in later steps, and the series is drawn as a line chart.

Preprocessing

scaler = MinMaxScaler()

MinMaxScaler from scikit-learn, a popular Python machine learning library, scales or normalizes data. It transforms each feature to a specified range, by default 0 to 1: the feature's minimum is subtracted from each value, and the result is divided by the range (maximum minus minimum). Scaling puts all inputs to a machine learning model on a uniform scale. Features on vastly different scales can cause problems during learning because the model may become biased towards the features with larger values. Normalized inputs generally help the learning algorithm converge and can improve overall model performance. Scaling matters for distance-based methods such as k-nearest neighbors (k-NN), variance-based methods such as principal component analysis (PCA), and gradient-based optimization. It is important to fit the scaler to the training set only and then use it to transform both the training set and the test set (or new data), so that the model has no prior knowledge of the test set.
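To make the min-max formula and the train-only fitting rule concrete, here is a small NumPy sketch. It does not use MinMaxScaler itself; the helper min_max_scale and the price values are made up for illustration.

```python
import numpy as np

# Toy price series (made-up numbers), split chronologically.
prices = np.array([100.0, 110.0, 120.0, 150.0, 140.0, 160.0])
train, test = prices[:4], prices[4:]

# "Fit": learn the minimum and maximum from the training portion only.
train_min, train_max = train.min(), train.max()


def min_max_scale(x, lo, hi):
    """Apply x' = (x - lo) / (hi - lo)."""
    return (x - lo) / (hi - lo)


train_scaled = min_max_scale(train, train_min, train_max)
test_scaled = min_max_scale(test, train_min, train_max)

print(train_scaled)
print(test_scaled)
```

Because the parameters come from the training data alone, test values above the training maximum scale to more than 1, which is expected and not an error.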

sp500_scaled = pd.Series(scaler.fit_transform(sp500).squeeze(),
                         index=sp500.index)
sp500_scaled.describe()

The single-column DataFrame sp500, which holds the S&P 500 index values, is passed to the scaler's fit_transform method. This method works in two steps: it first fits the scaler to the data, learning the scaling parameters (here the minimum and maximum), and then transforms the data with them. The result is a two-dimensional NumPy array with one column. squeeze() removes the length-one dimension so the array becomes one-dimensional, and pd.Series wraps it with the same index as the original sp500 data, so each scaled value stays aligned with its date. Finally, describe() summarizes the scaled Series with count, mean, standard deviation, minimum, quartiles, and maximum, giving a quick overview of its distribution and spread. Note that this code fits the scaler on the full series, including the period later used for testing, rather than on the training data only.

Generating Recurrent Sequences From Our Time Series

def create_univariate_rnn_data(data, window_size):
    n = len(data)
    y = data[window_size:]
    data = data.values.reshape(-1, 1)  # make 2D
    X = np.hstack(tuple([data[i: n-j, :] for i, j in enumerate(range(window_size, 0, -1))]))
    return pd.DataFrame(X, index=y.index), y

This function prepares the dataset for training a univariate Recurrent Neural Network (RNN). A univariate RNN predicts future values of a time series from its own past values. The function takes a time series and a window size, which sets how many past data points are used to predict the next value. It returns two structures: X, the feature matrix, and y, the target vector. Each row of X is a sequence of window_size consecutive data points from the series. The target vector y contains the data point that immediately follows each sequence; these are the values the RNN is trained to predict. The later code uses X, y, and window_size, so it assumes this function has been called on sp500_scaled with a window_size defined elsewhere; that call is not shown here.
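A tiny example makes the windowing easier to see. The sketch below uses a simplified, hypothetical helper make_windows that follows the same idea as the function above, applied to a made-up six-point series with a window of 3 (the article's actual window size is not shown).

```python
import numpy as np
import pandas as pd


def make_windows(series, window_size):
    """Return (X, y): each row of X holds window_size past values, y the next value."""
    values = series.to_numpy()
    n = len(values)
    y = series.iloc[window_size:]
    # Column k holds the value observed (window_size - k) steps before the target.
    X = np.column_stack([values[k: n - window_size + k] for k in range(window_size)])
    return pd.DataFrame(X, index=y.index), y


# Toy series: the values 0..5 on six consecutive business days (made-up data).
toy = pd.Series(np.arange(6.0), index=pd.bdate_range('2020-01-01', periods=6))
X_toy, y_toy = make_windows(toy, window_size=3)

print(X_toy.to_numpy())
print(y_toy.to_numpy())
```

The first row of X_toy is [0, 1, 2] with target 3, the second is [1, 2, 3] with target 4, and so on: each window slides forward by one step.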

Train-Test Split

To respect the time series nature of the data, we set aside the data at the end of the sample as the hold-out or test set. More specifically, we'll use the data for 2019.

ax = sp500_scaled.plot(lw=2, figsize=(14, 4), rot=0)
ax.set_xlabel('')
sns.despine()

This snippet plots the scaled S&P 500 series as a line graph. The line width is set to 2, the figure is wide and short (14 by 4 inches), which suits a time series, and rot=0 keeps the x-axis tick labels horizontal.

The x-axis label is then set to an empty string, which removes any label text for a cleaner look. Finally, seaborn's despine function removes the top and right spines of the plot. This is common practice for publication or presentation figures because it drops the box-like frame while keeping the essential axes.

X_train = X[:'2018'].values.reshape(-1, window_size, 1)
y_train = y[:'2018']

# keep the last year for testing
X_test = X.loc['2019'].values.reshape(-1, window_size, 1)
y_test = y.loc['2019']

The training and test sets are separated by date. The first line selects the part of the feature data X that runs through the end of 2018 and reshapes it into the three-dimensional form the LSTM expects: samples, time steps (window_size), and one feature per step. The target variable y, which holds the values to predict, is sliced the same way so that it lines up with the training features. The last two lines repeat these steps for the test set: the 2019 feature data is reshaped as test input, and the test target contains only the 2019 values. The test rows are selected with .loc because recent pandas versions no longer support selecting DataFrame rows with X['2019']. The model is therefore trained on data through 2018 and tested on the following year, so it is evaluated on unseen, later data, much as forecasting works in practice.
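The date-based split and the reshape can be checked on a small made-up dataset. In this sketch the window size of 3, the random features, and the date range are all assumptions chosen for illustration.

```python
import numpy as np
import pandas as pd

window_size = 3  # hypothetical value for this toy example

# Made-up feature matrix and target indexed by business days spanning two years.
dates = pd.bdate_range('2018-12-24', '2019-01-08')
X = pd.DataFrame(np.random.default_rng(0).random((len(dates), window_size)), index=dates)
y = pd.Series(np.arange(len(dates), dtype=float), index=dates)

# Partial-string indexing on the DatetimeIndex: everything through 2018 vs. all of 2019.
X_train = X.loc[:'2018'].to_numpy().reshape(-1, window_size, 1)
y_train = y.loc[:'2018']
X_test = X.loc['2019'].to_numpy().reshape(-1, window_size, 1)
y_test = y.loc['2019']

print(X_train.shape, X_test.shape)
```

Each array ends up with shape (samples, window_size, 1), and every training date precedes every test date.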

Keras LSTM Layer

```
LSTM(units,
     activation='tanh',
     recurrent_activation='hard_sigmoid',
     use_bias=True,
     kernel_initializer='glorot_uniform',
     recurrent_initializer='orthogonal',
     bias_initializer='zeros',
     unit_forget_bias=True,
     kernel_regularizer=None,
     recurrent_regularizer=None,
     bias_regularizer=None,
     activity_regularizer=None,
     kernel_constraint=None,
     recurrent_constraint=None,
     bias_constraint=None,
     dropout=0.0,
     recurrent_dropout=0.0,
     implementation=1,
     return_sequences=False,
     return_state=False,
     go_backwards=False,
     stateful=False,
     unroll=False)
```

Define the Model Architecture

After preparing input/output pairs from our time series data and dividing them into training and testing sets, we are ready to build our Recurrent Neural Network (RNN) using Keras. The architecture of our RNN consists of two hidden layers with the following configuration:

- The first layer is an LSTM (Long Short-Term Memory) module with 20 hidden units. Note that the input shape should be (window_size, 1).

- The second layer is a fully connected layer with one unit.

- For the loss function, use ‘mean_squared_error’, which is appropriate for regression tasks.

Building this model in Keras is straightforward and requires only a few lines of code. Refer to the general Keras documentation and the LSTM documentation for guidance on how to efficiently use Keras for constructing neural network models. Also, ensure you initialize your optimizer following the recommended approach for RNNs by Keras.

rnn = Sequential([
    LSTM(units=10,
         input_shape=(window_size, n_features), name='LSTM'),
    Dense(1, name='Output')
])

This RNN has two layers: an LSTM layer and a Dense layer. The LSTM layer comes first. It is a type of recurrent layer specifically designed to retain information over time, which is useful for sequences such as time series or text. Its units argument sets the number of memory cells, which determines the layer's capacity to learn patterns.

The layer's input_shape gives the window length and the number of features: the first is the number of time steps in each input sequence, and the second is the dimensionality of each step. n_features is not defined in the code shown; for this univariate series it should be 1, matching the reshape to (-1, window_size, 1) above. After the LSTM comes a Dense (fully connected) layer, which transforms the LSTM output into a single value. Dense layers are commonly used to produce a network's final output; here, the output is a continuous value predicted from the patterns the LSTM layer has learned.

In short, the LSTM layer processes the sequential input and the Dense layer maps its result to the prediction. The network is simple enough to serve for a variety of sequence prediction tasks, such as forecasting time series or other problems that depend on the relationship between sequence elements.

The summary shows the number of parameters. With units=10 and one feature, as in the code above, the model has 491 parameters (480 in the LSTM layer and 11 in the Dense layer); the figure of 1,781 corresponds to the 20-unit configuration described earlier.

rnn.summary()

Calling summary() on the model rnn prints a concise overview of its architecture. It lists the layers, the shape of the output each layer passes on, and the number of parameters (weights and biases) each layer has. Checking this before training helps confirm that the model's structure and configuration match what was intended for the problem. The summary describes structure only; it says nothing about the model's performance.
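The parameter counts reported by summary() can be reproduced by hand. The sketch below assumes a standard LSTM layer with bias and a single-output Dense layer; the function names are made up for illustration.

```python
def lstm_param_count(units, n_features):
    """Trainable parameters in a standard LSTM layer with bias.

    Each of the four gates has an input kernel (n_features x units),
    a recurrent kernel (units x units), and a bias vector (units).
    """
    return 4 * (units * n_features + units * units + units)


def dense_param_count(n_inputs, n_outputs):
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_outputs + n_outputs


for units in (10, 20):
    total = lstm_param_count(units, n_features=1) + dense_param_count(units, 1)
    print(units, total)
```

With one input feature, 10 units give 491 parameters and 20 units give 1,781.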

Train The Model

We train the model using the RMSProp optimizer recommended for RNN with default settings and compile the model with mean squared error for this regression problem:

optimizer = keras.optimizers.RMSprop(learning_rate=0.001,
                                     rho=0.9,
                                     epsilon=1e-08)
# decay=0.0 was also passed originally; recent TensorFlow releases no longer accept it here

An RMSprop optimizer is used here; it adapts the effective step size for each weight during training. learning_rate=0.001 (written as lr in older Keras versions) is a small positive value that sets the base size of the optimization steps, which governs how quickly or slowly the network learns. rho=0.9 is the decay rate of the moving average of squared gradients, a parameter specific to RMSprop. It smooths the gradient scaling by giving recent gradients more weight than older ones. epsilon=1e-08 is a small constant added to the denominator to avoid division by zero and keep the update numerically stable. The original code also passed decay=0.0, a parameter that can shrink the learning rate over time; with decay at 0.0, the learning rate stays constant throughout training. The code thus configures RMSprop with essentially default settings, which the optimizer then uses to minimize the loss function.
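The roles of the learning rate, rho, and epsilon are easier to see in the update rule itself. The following NumPy sketch implements a plain textbook form of RMSprop on a toy quadratic; it is a simplification for illustration, and the Keras implementation may differ in details such as where epsilon is applied.

```python
import numpy as np


def rmsprop_minimize(grad_fn, w0, learning_rate=0.001, rho=0.9, epsilon=1e-8, steps=5000):
    """Plain RMSprop: scale each step by a running RMS of recent gradients."""
    w = np.asarray(w0, dtype=float).copy()
    avg_sq_grad = np.zeros_like(w)
    for _ in range(steps):
        g = grad_fn(w)
        # Exponential moving average of squared gradients; rho sets how fast old ones fade.
        avg_sq_grad = rho * avg_sq_grad + (1.0 - rho) * g ** 2
        # epsilon keeps the denominator away from zero.
        w -= learning_rate * g / (np.sqrt(avg_sq_grad) + epsilon)
    return w


# Toy objective f(w) = sum((w - 3)^2), whose gradient is 2 * (w - 3).
w_final = rmsprop_minimize(lambda w: 2.0 * (w - 3.0), w0=[0.0, 5.0])
print(w_final)
```

Both coordinates end up close to the minimum at 3, even though they start at different distances from it, because each step is normalized by the recent gradient magnitude.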

rnn.compile(loss='mean_squared_error',
            optimizer=optimizer)

The compile method prepares the RNN for training. It is called on rnn, the recurrent neural network built above, and configures it with two settings: loss and optimizer. The loss is set to 'mean_squared_error', which quantifies how well the model performs by averaging the squared differences between predicted and actual values. Because the differences are squared, larger errors are penalized more heavily. This loss is common for regression tasks, where the goal is to predict continuous values. The optimizer argument receives the RMSprop instance assigned to the variable optimizer. Over many training iterations, the optimizer adjusts the network's weights to minimize the loss and so improve the predictions.

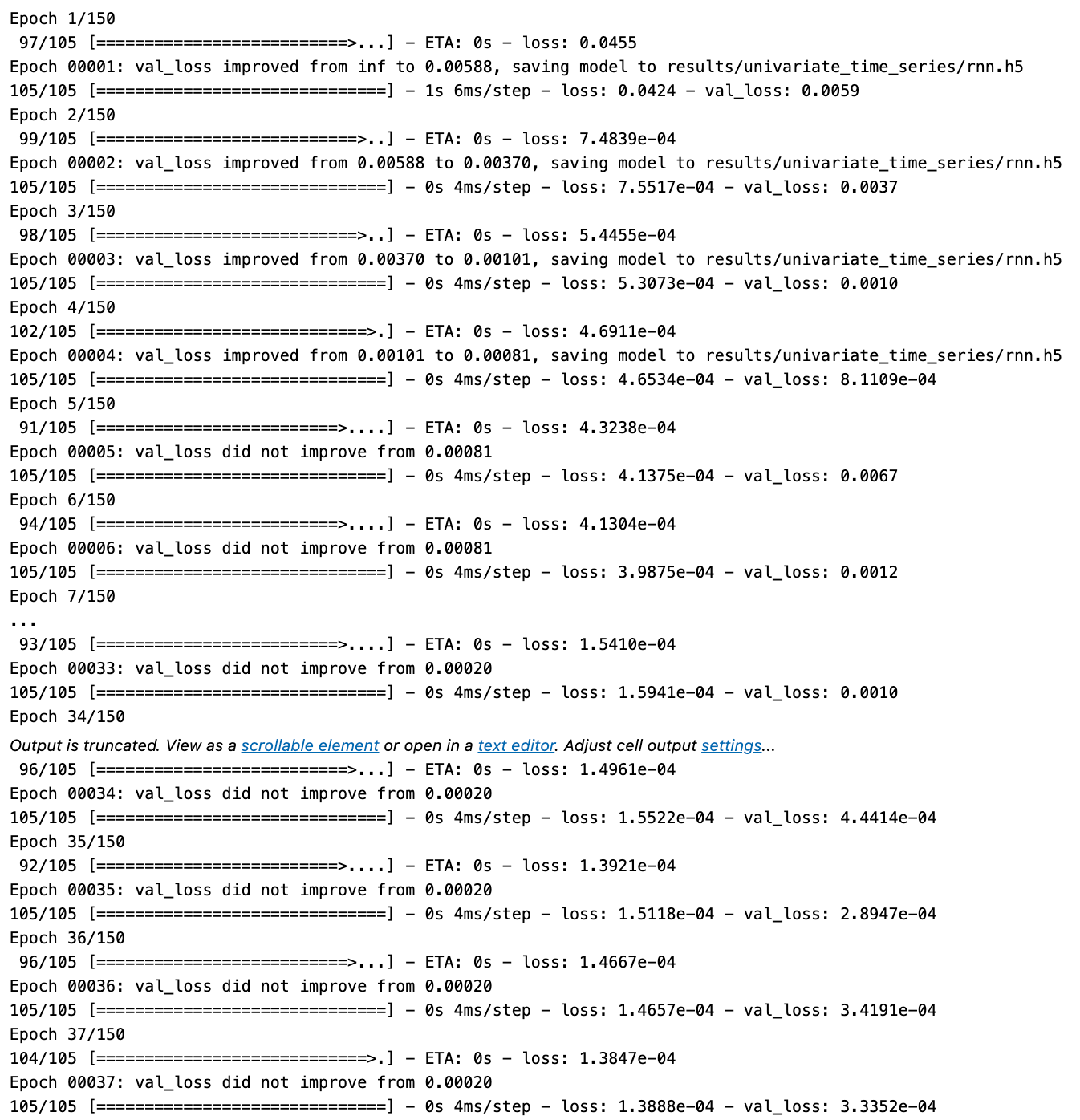

We define ModelCheckpoint and EarlyStopping callbacks and train the model for up to 150 epochs.

rnn_path = (results_path / 'rnn.h5').as_posix()
checkpointer = ModelCheckpoint(filepath=rnn_path,
                               verbose=1,
                               monitor='val_loss',
                               save_best_only=True)

The file path points to a file called 'rnn.h5' inside the directory stored in results_path. The as_posix() method returns the path as a string with forward slashes. A ModelCheckpoint callback is then created; Keras runs it during training. Its filepath is rnn_path, the location where the model is saved. With verbose=1, a message is printed each time the callback saves the model. monitor='val_loss' tells it to track the validation loss, a measure of how well the model does on data it has not seen during training. With save_best_only=True, the checkpoint saves the model only when the validation loss is the lowest so far. The saved model is therefore the one that performed best on the validation data, not simply the one from the last epoch. This helps guard against keeping an overfitted model and favors one that generalizes to new data.

early_stopping = EarlyStopping(monitor='val_loss',
                               patience=20,
                               restore_best_weights=True)

A model overfits when it learns not only the underlying patterns in the training data but also noise and details that are irrelevant for predicting new data. Early stopping monitors the model's performance on a validation dataset that is separate from the training data. The metric monitored here is the validation loss, the model's error on the validation data. As training goes on, a model may start to overfit, which shows up as a validation loss that stops decreasing or even starts to rise. The patience parameter sets how many epochs training continues after the last improvement in validation loss: here, training stops once 20 consecutive epochs pass without improvement. Because restore_best_weights is set to True, the model's weights are reset at that point to those that produced the lowest validation loss. The model therefore keeps its best weights even if it overfits in later epochs.
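The patience logic can be sketched in plain Python. This is a simplified illustration, not the Keras implementation (which has further options such as a minimum improvement threshold); the function name and the loss values are made up.

```python
def early_stopping_epoch(val_losses, patience):
    """Return (stop_epoch, best_epoch), both 1-based, for per-epoch validation losses.

    Training stops once `patience` consecutive epochs pass without a new best loss;
    if that never happens, stop_epoch is the final epoch.
    """
    best_loss = float('inf')
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_losses), best_epoch


# Made-up validation losses: improvement stalls after epoch 3.
losses = [0.50, 0.40, 0.30, 0.31, 0.32, 0.33, 0.29]
stop_epoch, best_epoch = early_stopping_epoch(losses, patience=3)
print(stop_epoch, best_epoch)
```

With a patience of 3, training stops at epoch 6 and the weights from epoch 3 would be the ones to restore; a larger patience would have let training reach the better epoch 7, which illustrates the trade-off behind the choice of 20.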

lstm_training = rnn.fit(X_train,
                        y_train,
                        epochs=150,
                        batch_size=20,
                        shuffle=True,
                        validation_data=(X_test, y_test),
                        callbacks=[early_stopping, checkpointer],
                        verbose=1)

The fit method trains the LSTM model stored in rnn on the input features and target values of the training set. Training runs for at most 150 epochs. Within each epoch, the data is processed in batches of 20 samples, as set by batch_size. With shuffle=True, the training samples are shuffled before each epoch so the model is not exposed to any ordering bias. The validation_data argument supplies X_test and y_test as a separate dataset for monitoring performance on data the model is not trained on, which is how overfitting is detected. Note that the validation data here is the 2019 test set, so the test error reported later is not fully independent of model selection. Two callbacks run during training: early_stopping, which halts training if validation performance does not improve for a number of epochs (reducing overfitting and saving time), and checkpointer, which saves the best version of the model by validation loss. With verbose=1, progress is printed epoch by epoch. The training history is stored in the variable lstm_training.

Evaluate Model Performance

fig, ax = plt.subplots(figsize=(12, 4))

loss_history = pd.DataFrame(lstm_training.history).pow(.5)
loss_history.index += 1
best_rmse = loss_history.val_loss.min()
best_epoch = loss_history.val_loss.idxmin()

title = f'5-Epoch Rolling RMSE (Best Validation RMSE: {best_rmse:.4%})'
loss_history.columns = ['Training RMSE', 'Validation RMSE']
loss_history.rolling(5).mean().plot(logy=True, lw=2, title=title, ax=ax)

ax.axvline(best_epoch, ls='--', lw=1, c='k')

sns.despine()
fig.tight_layout()
fig.savefig(results_path / 'rnn_sp500_error', dpi=300);

This snippet visualizes the training history of the Long Short-Term Memory (LSTM) model using Matplotlib, pandas, and seaborn. It plots the Root Mean Squared Error (RMSE) on the training and validation datasets over the course of training. It first creates a figure and an axes object with a fixed figure size. The training history, which holds the per-epoch loss values, is converted to a pandas DataFrame. Because the loss is the mean squared error (MSE), taking the square root turns it into RMSE. The index is shifted by one so that epochs start at 1. The code then finds the minimum validation RMSE and the epoch at which it occurs, and uses the best RMSE, formatted as a percentage, in the plot title. The columns are renamed to indicate that they contain RMSE values. A rolling average over five epochs smooths the curves, which are plotted on a logarithmic y-axis. Both curves use the same line width and are distinguished by color. A dashed vertical line marks the epoch with the best validation RMSE so it can be identified quickly. Seaborn's despine function then removes the top and right spines. Before saving, tight_layout adjusts the figure so that no content is clipped. Finally, a high-resolution (300 dpi) copy of the figure is saved to the results directory.
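The data handling behind that plot can be tried without training a model. The sketch below uses a made-up loss history in the dictionary layout that Keras stores in History.history, and a 3-epoch rolling window to fit the short toy series.

```python
import pandas as pd

# Made-up MSE history in the same layout Keras stores in History.history.
history = {'loss': [0.0400, 0.0100, 0.0036, 0.0025, 0.0016],
           'val_loss': [0.0900, 0.0400, 0.0049, 0.0064, 0.0081]}

rmse = pd.DataFrame(history).pow(0.5)  # square root turns MSE into RMSE
rmse.index += 1                        # make epochs 1-based
rmse.columns = ['Training RMSE', 'Validation RMSE']

best_epoch = rmse['Validation RMSE'].idxmin()
best_rmse = rmse['Validation RMSE'].min()
smoothed = rmse.rolling(3).mean()      # first two rows are NaN until the window fills

print(best_epoch, round(best_rmse, 4))
```

The rolling mean leaves the first rows empty until the window is full, which is why the smoothed curves in such a plot start a few epochs in.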

train_rmse_scaled = np.sqrt(rnn.evaluate(X_train, y_train, verbose=0))
test_rmse_scaled = np.sqrt(rnn.evaluate(X_test, y_test, verbose=0))
print(f'Train RMSE: {train_rmse_scaled:.4f} | Test RMSE: {test_rmse_scaled:.4f}')

This code evaluates the RNN on the training dataset and on the test dataset, computing the root mean squared error (RMSE) for each. RMSE is a common measure of the difference between predicted and observed values. It is especially useful when larger errors should be penalized more severely, because errors are squared before averaging, which gives large errors more weight. The evaluate method returns the model's loss, the mean squared error, on each dataset, and taking the square root yields the RMSE. verbose=0 runs the evaluation silently, so the only output is the final formatted line, which shows the training and test RMSE to four decimal places. Comparing the two values shows how well the model fits data it has seen (training data) and how well it generalizes to new data (test data). Lower RMSE means better predictions.

Rescale Predictions

# assumed step, not shown in the original: scaled predictions from the trained model
train_predict_scaled = rnn.predict(X_train)
test_predict_scaled = rnn.predict(X_test)

train_predict = pd.Series(scaler.inverse_transform(train_predict_scaled).squeeze(), index=y_train.index)
test_predict = pd.Series(scaler.inverse_transform(test_predict_scaled).squeeze(), index=y_test.index)

This step handles the model's predictions on the training dataset and then on the test dataset. Scaling inputs is common practice in machine learning, but when results are used for analysis or reporting, the scaling usually has to be reversed so they can be interpreted in the original units. The variables train_predict_scaled and test_predict_scaled hold the model's scaled predictions; the code that creates them is not shown in the original, so the rnn.predict calls above are an assumption. For the training set, the code reverses the scaling applied to the scaled predictions and converts the result into a pandas Series (a one-dimensional array-like object), which is easier to manipulate and analyze. The Series takes the index of y_train so that each prediction lines up with the corresponding training observation. The same process is repeated for the test set, with the index taken from y_test, which holds the true test values.

y_train_rescaled = scaler.inverse_transform(y_train.to_frame()).squeeze()
y_test_rescaled = scaler.inverse_transform(y_test.to_frame()).squeeze()

The to_frame() method converts the target Series into a single-column DataFrame, because the scaler's inverse_transform method expects two-dimensional input. The scaler, from scikit-learn, stores the parameters of the scaling applied earlier, and inverse_transform reverses that operation, returning the values to their original scale. The result is a two-dimensional array, which squeeze() converts to a one-dimensional array by removing the length-one dimension. The same steps are applied to y_test. Training and test targets are thus both returned to the original scale, which is needed to compare the model's predictions with the original target values and interpret the results meaningfully.
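The effect of the inverse transform on error metrics can be sketched with NumPy alone. Here data_min and data_max stand in for the parameters a fitted min-max scaler would hold; the index levels and prediction errors are made up.

```python
import numpy as np

# Made-up index levels; data_min and data_max play the role of the fitted scaler's parameters.
levels = np.array([2000.0, 2500.0, 3000.0])
data_min, data_max = levels.min(), levels.max()

scaled = (levels - data_min) / (data_max - data_min)   # forward min-max transform
restored = scaled * (data_max - data_min) + data_min   # inverse transform

# A scaled-space error maps back to index points by multiplying with the range.
predictions_scaled = scaled + np.array([0.01, -0.02, 0.01])  # hypothetical model errors
rmse_scaled = np.sqrt(np.mean((predictions_scaled - scaled) ** 2))
rmse_points = rmse_scaled * (data_max - data_min)

print(restored, rmse_scaled, rmse_points)
```

Because min-max scaling is linear, an RMSE measured on scaled data converts to index points by multiplying with the data range, which is what rescaling predictions and targets achieves.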
