Convolutional Neural Networks

I built a convolutional neural network (CNN) using TensorFlow.

The architecture stacks convolutional layers, max-pooling, and dropout, followed by fully connected layers. The model was trained with a batch size of 128 for 30 epochs.
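For orientation, here is a minimal Keras sketch of that kind of architecture for 8×8 single-channel inputs and 10 digit classes. The filter counts, kernel sizes, dropout rates, dense width and the Adam optimizer are all assumptions chosen for illustration, not the exact configuration used in this post, and `build_cnn` is a hypothetical helper name.

```python
import numpy as np
import tensorflow as tf


def build_cnn(input_shape=(8, 8, 1), num_classes=10):
    """Conv -> max-pool -> dropout blocks, then a fully connected head.

    Assumption: all layer sizes and rates below are illustrative,
    not the article's exact settings.
    """
    model = tf.keras.Sequential([
        tf.keras.layers.Input(shape=input_shape),
        # 'same' padding keeps the 8x8 spatial size through the convolution
        tf.keras.layers.Conv2D(32, kernel_size=3, padding="same", activation="relu"),
        tf.keras.layers.MaxPooling2D(pool_size=2),  # 8x8 -> 4x4
        tf.keras.layers.Dropout(0.25),
        tf.keras.layers.Conv2D(64, kernel_size=3, padding="same", activation="relu"),
        tf.keras.layers.MaxPooling2D(pool_size=2),  # 4x4 -> 2x2
        tf.keras.layers.Dropout(0.25),
        tf.keras.layers.Flatten(),
        # Fully connected head
        tf.keras.layers.Dense(128, activation="relu"),
        tf.keras.layers.Dropout(0.5),
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])
    # Integer labels (like digits.target) pair with the sparse cross-entropy loss
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model


model = build_cnn()
model.summary()

# Smoke test: a dummy batch of two 8x8 images yields 10 class probabilities each
probs = model(np.zeros((2, 8, 8, 1), dtype=np.float32))
```

Training it as described would be a call like `model.fit(X_train, y_train, batch_size=128, epochs=30)` once the data is prepared. The digits dataset has 1,797 images, so an 80/20 split leaves 1,437 for training, and each epoch then runs ceil(1437 / 128) = 12 batches: 11 full ones plus a final batch of 29.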

So, without wasting time, let's get started.

```python
import os

# Work around the OpenMP "OMP: Error #15" duplicate-runtime crash some installs hit
os.environ['KMP_DUPLICATE_LIB_OK'] = 'True'

import numpy as np
import tensorflow as tf
from sklearn import datasets, metrics
from sklearn.model_selection import train_test_split

print('TensorFlow version:', tf.__version__)

# Fix the random seeds to help make results more reproducible
tf.random.set_seed(2)
np.random.seed(0)

# List available GPUs (an empty list means TensorFlow will run on the CPU)
print(tf.config.list_physical_devices('GPU'))

digits = datasets.load_digits()

# Normalization matters: pixel values range from 0 to 16, so this maps them to [-0.5, 0.5]
X = digits.data.astype(np.float32) / 16.0 - 0.5
y = digits.target.astype(np.int32)

# Reshape each 64-value row into an 8x8 single-channel image
X = X.reshape((X.shape[0], 8, 8, 1))

# Split into train / test subsets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

# Optionally split X_train again to create validation data
# X_train, X_val, y_train, y_val = train_test_split(X_train, y_train, test_size=0.2)
```
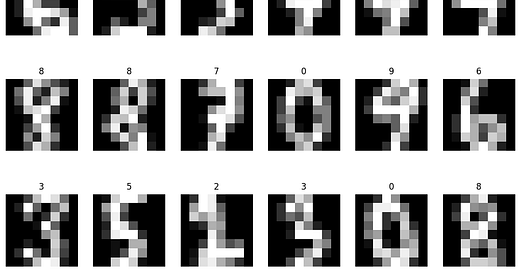
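The commented-out line in the code would hold out a fifth of the training data for validation. To make that step concrete without scikit-learn, here is a NumPy sketch of a shuffled split. `shuffle_split` is a hypothetical helper, and the zero-filled arrays are stand-ins; it rounds the held-out size up the way `train_test_split` does for a float `test_size`, so the subset sizes match, but the selected rows will not.

```python
import math

import numpy as np


def shuffle_split(X, y, test_size=0.2, seed=0):
    """Hypothetical helper: shuffled two-way split of paired arrays.

    The held-out size is ceil(test_size * n), matching how scikit-learn
    sizes a float test_size; the row selection itself differs.
    """
    n = len(X)
    n_test = math.ceil(test_size * n)
    idx = np.random.default_rng(seed).permutation(n)
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]


# Stand-ins shaped like the training set (80% of the 1,797 digits images)
X_train = np.zeros((1437, 8, 8, 1), dtype=np.float32)
y_train = np.arange(1437) % 10

X_tr, X_val, y_tr, y_val = shuffle_split(X_train, y_train)
print(X_tr.shape, X_val.shape)  # (1149, 8, 8, 1) (288, 8, 8, 1)
```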

```python
print(X_train.shape)
```

In this first step, we loaded the digits dataset straight from scikit-learn and normalized it. You know how crucial that is, right? We then reshaped each sample into an 8×8 single-channel image so it fits the input format a CNN expects, and split the data into one subset for training and another for testing. Along the way, we printed the TensorFlow version we're working with and checked the shape of the training set.
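To see exactly what the normalization and reshape do, here is a NumPy-only sketch that applies the same two operations to a tiny random array. The array is an assumed stand-in for `digits.data`; the real one has 1,797 rows of 64 integer intensities from 0 to 16.

```python
import numpy as np

# Assumed stand-in for digits.data: 3 flattened 8x8 images, values 0..16
rng = np.random.default_rng(0)
raw = rng.integers(0, 17, size=(3, 64))

# Same transform as above: divide by 16 to get [0, 1], then shift to [-0.5, 0.5]
X = raw.astype(np.float32) / 16.0 - 0.5

# The extremes and midpoint of the pixel range map to -0.5, 0.0 and 0.5
endpoints = np.array([0, 8, 16], dtype=np.float32) / 16.0 - 0.5

# Flat 64-vectors -> (samples, height, width, channels), the layout Conv2D expects
X_img = X.reshape((X.shape[0], 8, 8, 1))
print(X_img.shape)  # (3, 8, 8, 1)
```

Because NumPy reshapes in row-major order, the first 8 values of each row become the top row of the image, so no pixel is moved out of place.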