Deciphering the Market: A Visual Guide to the Jane Street Prediction Challenge

A step-by-step journey through exploratory data analysis, feature engineering, and modeling financial time series.

Download link at the end of article for source code!

Jane Street reminds us that machine learning really begins with data: they collect about 2.3 TB of market data every day, and all those petabytes hide the patterns models need. In production settings like theirs, models are only one part of a bigger system with many moving pieces, so getting the data right is crucial for everything that follows.

This notebook is a simple exploratory data analysis, or EDA, of the files for the Kaggle Jane Street Market Prediction competition. EDA just means looking, plotting, and summarizing your data so you know what to do next. Spending time here helps you pick the right modeling tools and avoid wasting effort on the wrong approach.

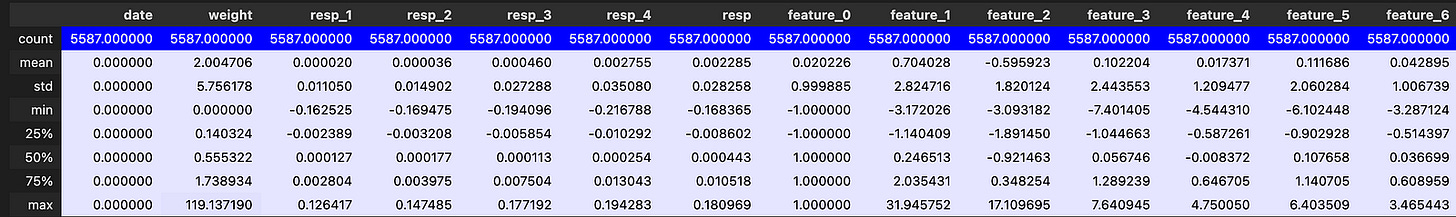

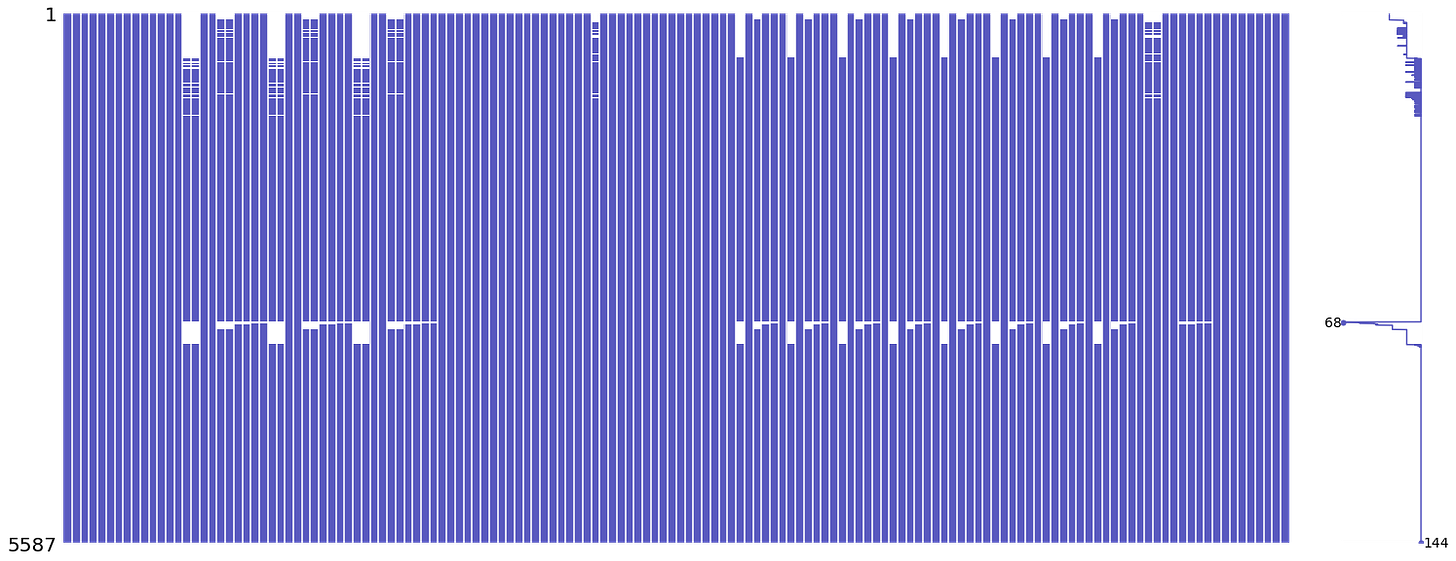

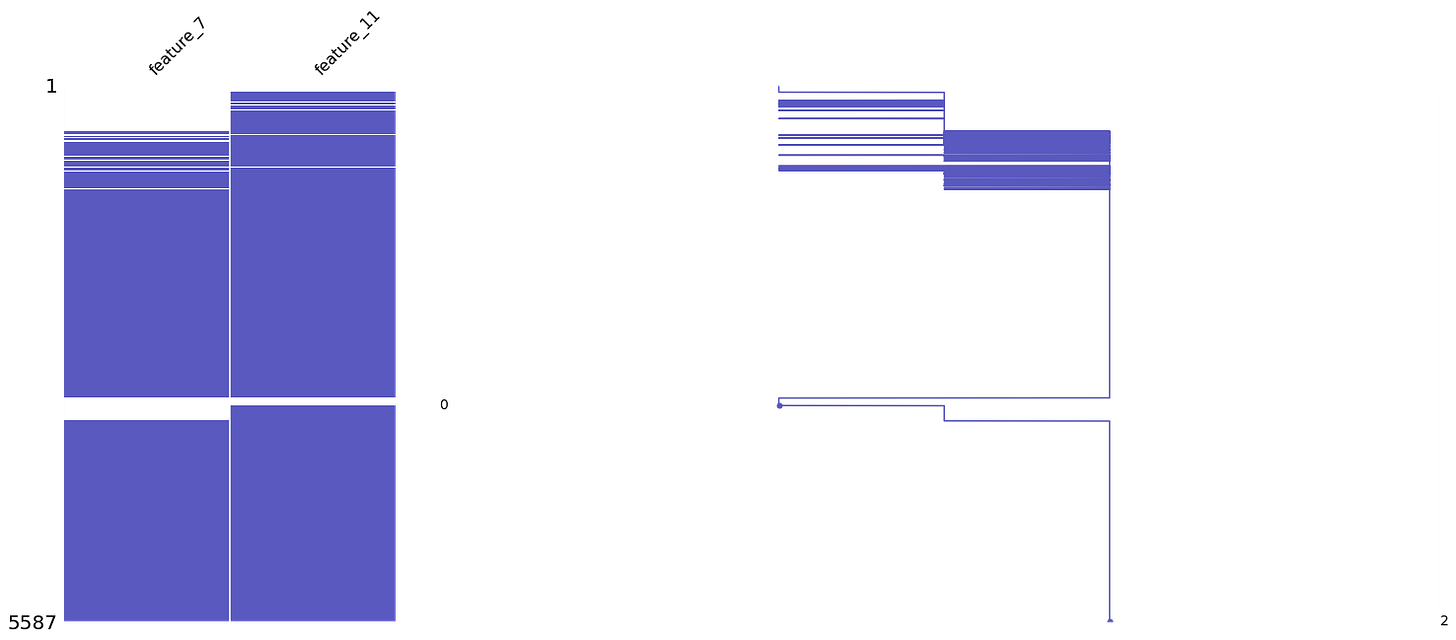

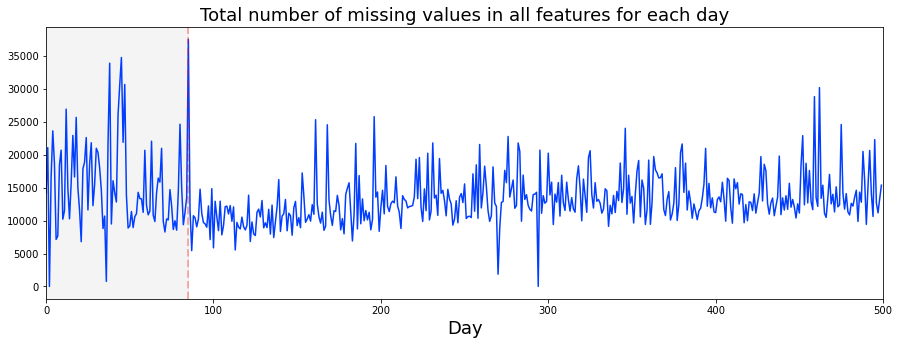

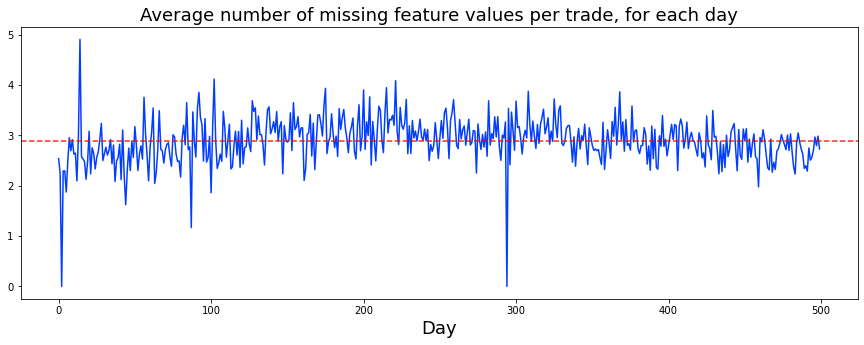

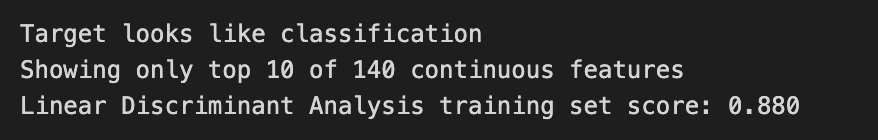

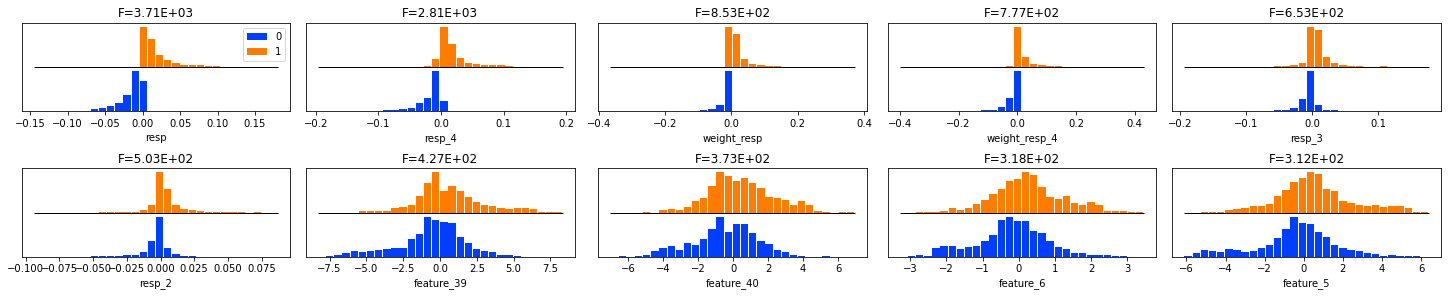

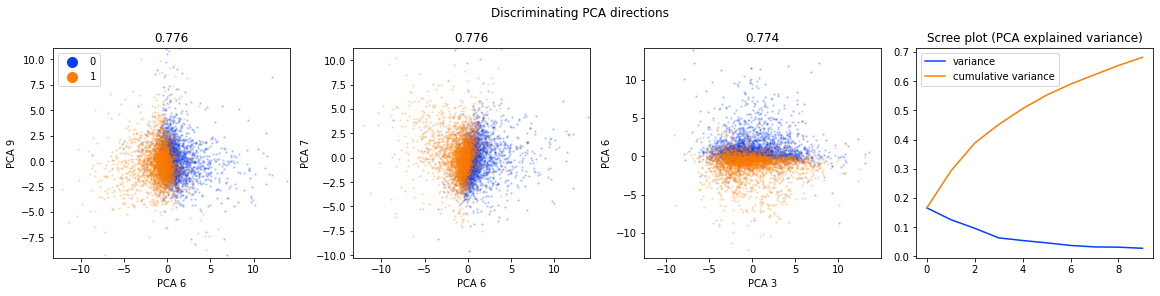

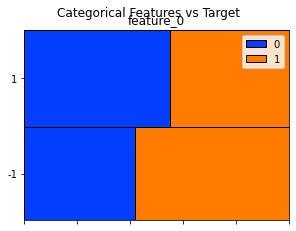

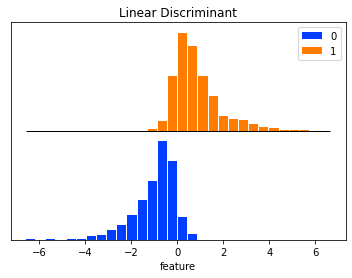

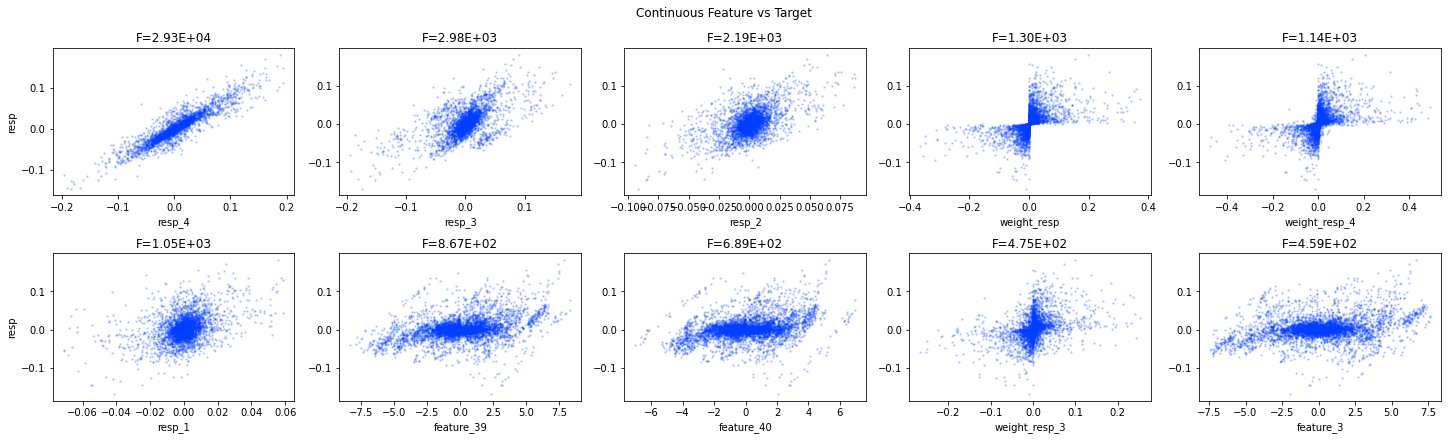

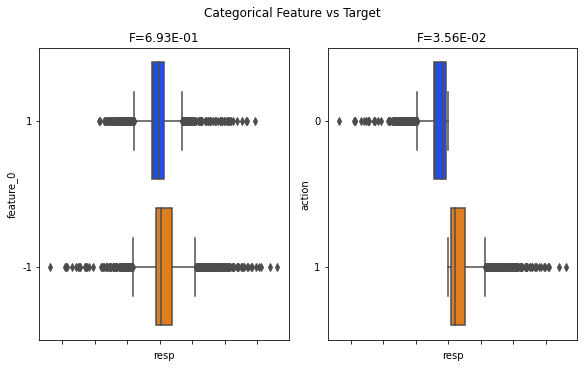

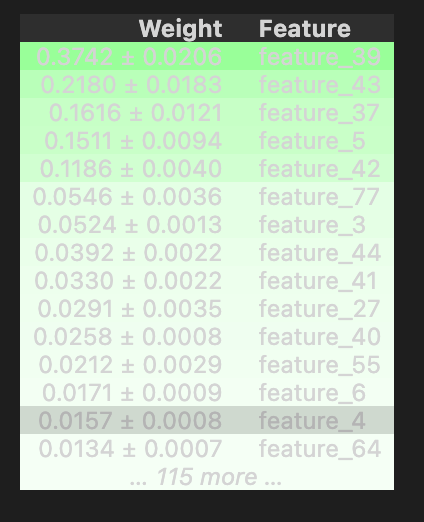

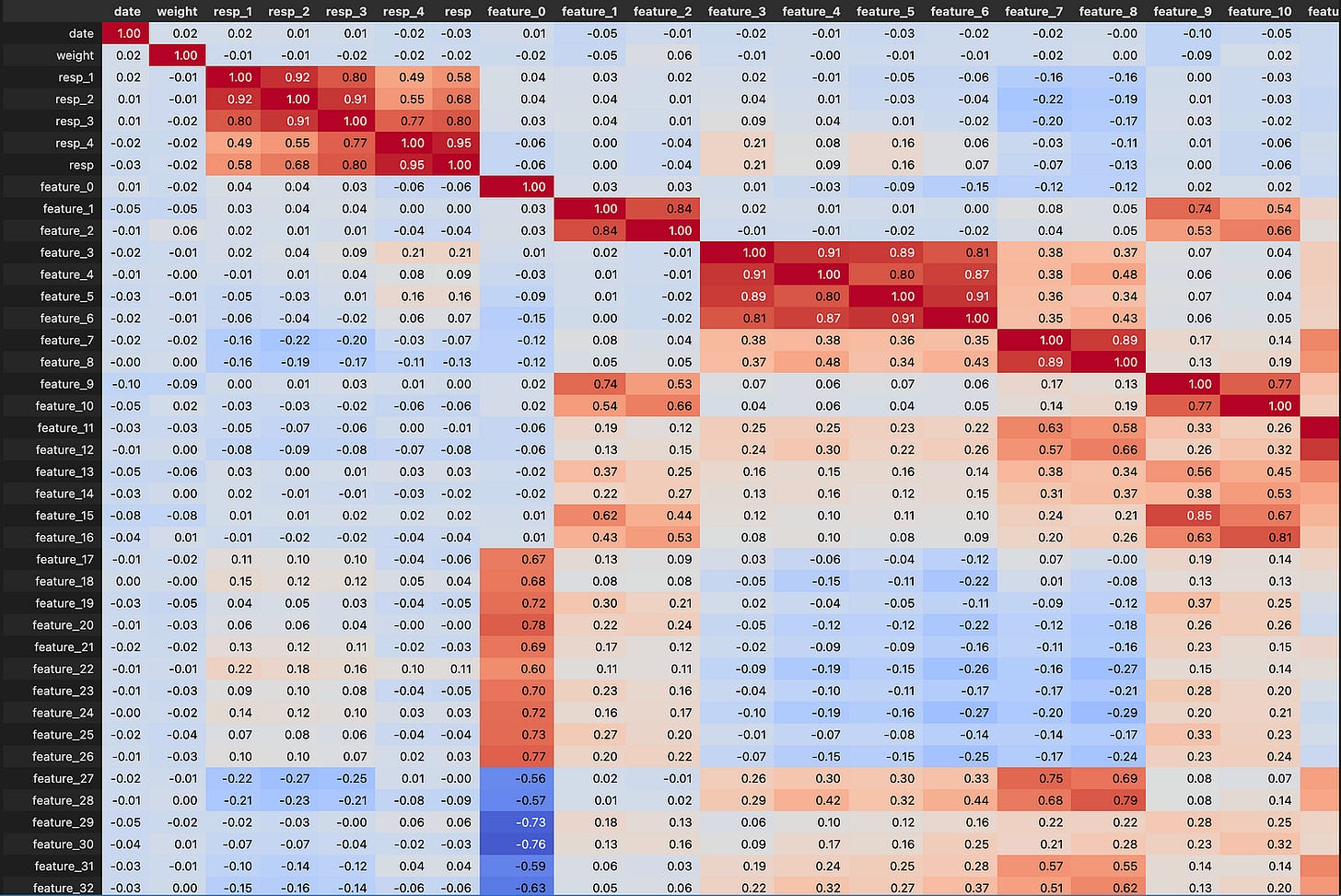

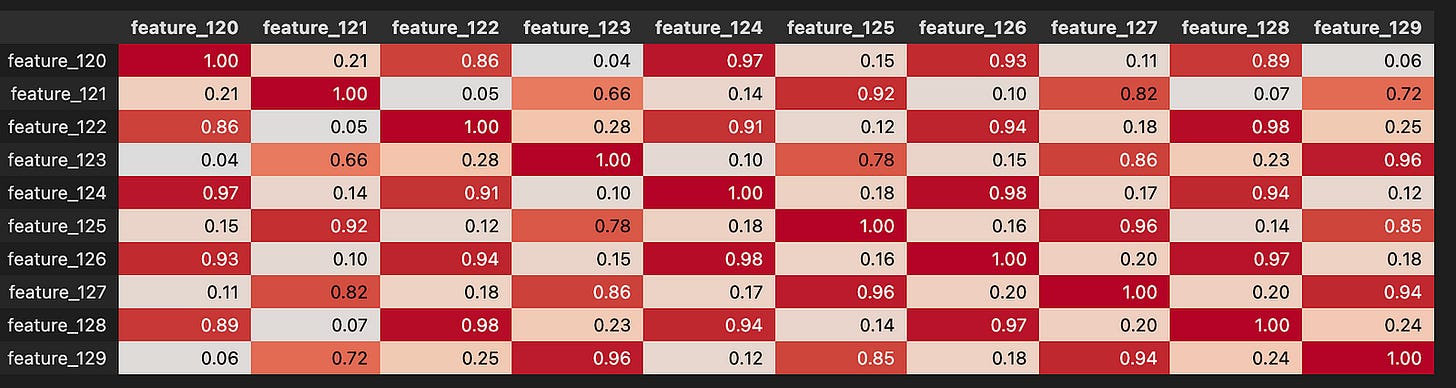

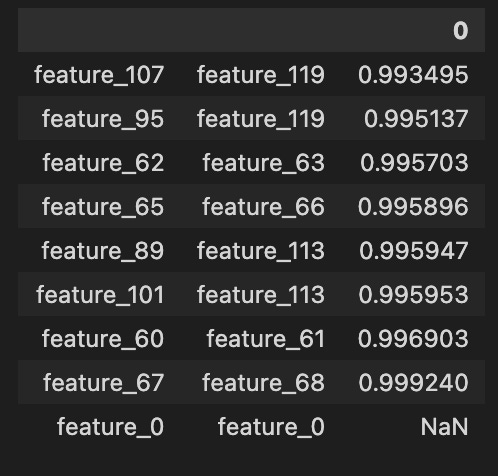

We look at the big train.csv file and the key columns: *resp* (the target response we try to predict), *weight* (how important each row is), cumulative return (how performance accumulates), and *time* (when events happened). We examine the features and the features.csv file that describes them, plus the *action* column used for decisions. The first day, called day 0, gets special attention. We check for missing values and specific gaps on days 2 and 294. We create quick DABL plots (a tool for fast visual EDA) for targets like action and resp, and run permutation importance with a Random Forest (a group of decision trees) to see which features matter. We also compare day 100 to day 200 for correlation, and finish by looking at the test data and how models are evaluated. This prepares you to build models that actually work in the competition.

# numpy

import numpy as np

# pandas stuff

import pandas as pd

pd.set_option(’display.max_rows’, None)

pd.set_option(’display.max_columns’, None)

# plotting stuff

from pandas.plotting import lag_plot

import matplotlib.pyplot as plt

import seaborn as sns

import plotly.express as px

import plotly.graph_objects as go

colorMap = sns.light_palette(”blue”, as_cmap=True)

#plt.rcParams.update({’font.size’: 12})

# install dabl

!pip install dabl > /dev/null

import dabl

# install datatable

!pip install datatable > /dev/null

import datatable as dt

# misc

import missingno as msno

# system

import warnings

warnings.filterwarnings(’ignore’)

# for the image import

import os

from IPython.display import Image

# garbage collector to keep RAM in check

import gc Think of this as laying out our research bench before we start experimenting with market data: first we bring in numpy as np so we have fast numerical arrays and vectorized math for computations, and pandas as pd so we can work with tables of data in memory; a DataFrame is like a spreadsheet you can slice and transform in code. We tweak pandas display options so when we peek at our tables nothing gets hidden — every row and column will show up for inspection.

Next we gather plotting tools: a lag_plot helper to quickly visualize time-lag relationships (autocorrelation shows how past values relate to future ones), matplotlib.pyplot for classic figures, seaborn for prettier statistical plots, and plotly.express/graph_objects for interactive visuals; we also create a light blue colormap, like choosing a palette before painting. The commented rcParams line is just a reminder we could change global font size if needed.

The two pip install lines run in a notebook to fetch dabl and datatable so we can import them immediately — think of that as fetching new recipe books; dabl helps automated exploratory analysis and datatable offers very fast tabular IO for large files. We import missingno to visualize missingness patterns so gaps in the data become obvious, and we silence warnings to keep the workspace uncluttered, which is like muting noncritical alerts while we work.

Finally, we import os and IPython.display.Image to load images into the notebook, and call gc to enable the garbage collector so Python’s cleanup crew reclaims memory between heavy operations. Together these pieces set up a tidy, interactive environment for exploring and modeling the Jane Street market prediction problem.

In the Jane Street Market Prediction project the training file train.csv is pretty big: 5.77G. A CSV is just a plain-text table you can open with code, and at this size it probably won’t fit into memory all at once. Knowing the file size helps you plan to read it in pieces, which keeps your computer from slowing down or crashing.

Let’s find out how many rows it has. Counting rows tells you how many training examples you actually have and helps you decide on things like batch sizes, sampling, or whether to work with a smaller subset first to iterate faster.

%%time

train_data_datatable = dt.fread(’../input/jane-street-market-prediction/train.csv’)We start by putting a little stopwatch at the top with %%time so the notebook tells us how long the next operation takes; think of it as asking the kitchen timer to tell us how long it takes to unpack a big delivery. The next line calls dt.fread(‘../input/jane-street-market-prediction/train.csv’) and assigns the result to train_data_datatable, so we’re instructing the datatable library to quickly read the CSV file from the input folder and store it in memory. fread is a high-performance CSV reader (like a fast conveyor belt that parses and loads rows into a tidy table), and datatable’s in-memory structure behaves like a spreadsheet you can slice and dice efficiently. Key concept: loading data into memory is the essential first step before any exploration, cleaning, or modeling. Naming the variable train_data_datatable makes it clear we now hold the training set as a datatable.Frame, ready for inspection, feature engineering, and feeding into the predictive models you’ll build for the Jane Street Market Prediction project.

Then convert your data to a pandas DataFrame. If you’re using the datatable library, call the .to_pandas() method to make that switch.

A pandas DataFrame is just a smart table that makes it easy to look at, clean, and reshape your data. Most Python analysis and machine‑learning tools expect data in this form, so this step gets your dataset ready for plotting, feature prep, and model training.

%%time

train_data = train_data_datatable.to_pandas()Imagine we’re in the kitchen preparing data for our prediction work: the goal here is to take the training table we’ve been carrying around in a datatable pot and move it into a pandas bowl where all our familiar utensils and recipes live. The first line, the little “%%time” at the top, acts like setting a stopwatch on the counter — IPython magic %%time reports wall time and CPU time for the cell. The second line does the actual transfer: train_data = train_data_datatable.to_pandas() takes the datatable structure and converts it into a pandas DataFrame, then gives it the name train_data so we can refer to it easily. A pandas DataFrame is a two-dimensional labeled data structure, like a spreadsheet in Python, and that one-sentence concept explains why we often prefer it for analysis and modeling. Practically, this move lets us use pandas’ familiar methods for cleaning, feature engineering, and interfacing with scikit-learn, though it can copy the whole dataset into memory so the stopwatch helps us judge the cost. So, with a timed transfer into the pandas bowl, we’ve prepared the training material for the next steps in the Jane Street Market Prediction workflow: exploration, feature crafting, and model training.

We loaded `train.csv` in less than 17 seconds, which is nice because quick loading keeps your exploration loop fast and lets you try ideas without waiting.

The file holds a total of 500 days of data — about two years of trading — so we have enough history to spot patterns but not so much that the dataset becomes unwieldy.

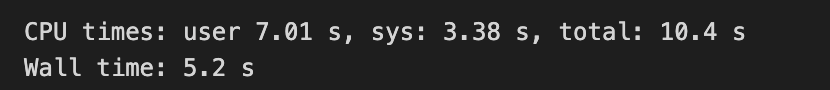

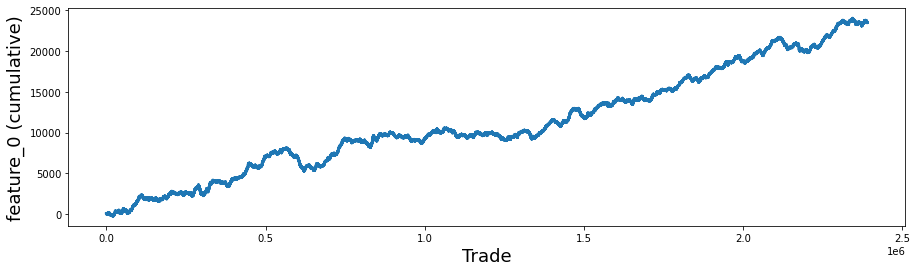

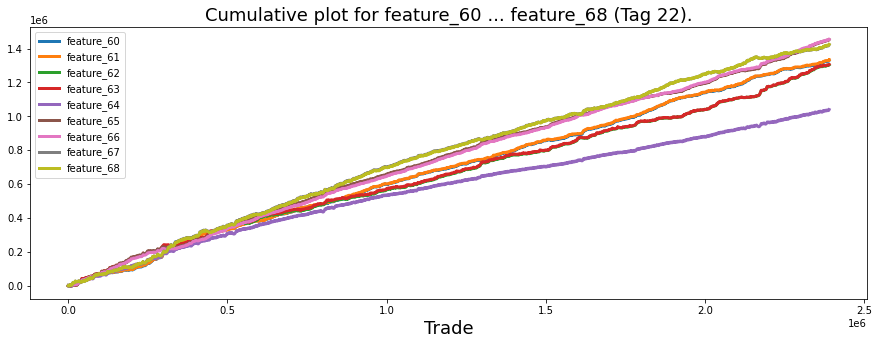

Here we look at resp, the response variable — the daily return (positive or negative) we’re trying to predict. Plotting the cumulative values of resp (the running total of daily returns) shows long‑term trends and whether a signal consistently gains or loses over time. If the cumulative line climbs, the strategy would have made money overall; if it drops, it would have lost money. This helps you see effects that single days can’t reveal.

fig, ax = plt.subplots(figsize=(15, 5))

balance= pd.Series(train_data[’resp’]).cumsum()

ax.set_xlabel (”Trade”, fontsize=18)

ax.set_ylabel (”Cumulative resp”, fontsize=18);

balance.plot(lw=3);

del balance

gc.collect();We want to see how the model’s responses add up over time, so the story begins by making a canvas: plt.subplots(figsize=(15, 5)) returns a figure and an axes object, like preparing a wide sheet of paper and a frame to draw on. Next, pd.Series(train_data[‘resp’]).cumsum() takes the column of responses from the training DataFrame, wraps it as a Series, and computes a running total; a cumulative sum is a key concept here — it gives you the progressive accumulation so you can watch gains and losses add up trade after trade. The result is stored in balance so we can refer to that running total by name.

We then label the axes with ax.set_xlabel(“Trade”, fontsize=18) and ax.set_ylabel(“Cumulative resp”, fontsize=18) so anyone viewing the plot knows that the horizontal axis steps through trades and the vertical axis shows accumulated response; good labels are like captions that orient the reader. Calling balance.plot(lw=3) draws the running total onto the prepared axes as a clear, thick line (lw=3 makes the line easier to see, like using a bold marker). Finally, del balance removes the variable reference and gc.collect() nudges Python’s garbage collector to free memory promptly, which is helpful when working with large datasets because garbage collection reclaims unused memory automatically.

Taken together, these steps create a tidy visualization of accumulated model response over trades — an essential quick check when building the Jane Street market prediction pipeline.

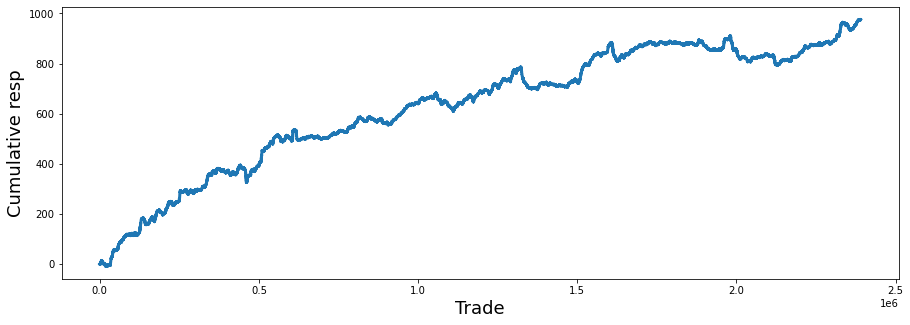

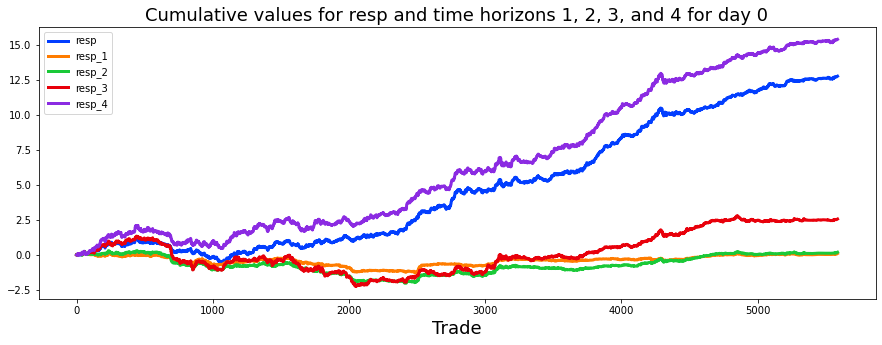

We also consider four time horizons. A time horizon is just how long you plan to hold an investment or expect an outcome to play out, from very short to much longer periods. Thinking in separate horizons helps us test models for different speeds of market moves.

As Investopedia puts it, “The longer the Time Horizon, the more aggressive, or riskier portfolio, an investor can build. The shorter the Time Horizon, the more conservative, or less risky, the investor may want to adopt.” In plain terms: if you have more time, you can afford bigger swings because you can wait out downturns; if you need results soon, you protect against losses.

For the Jane Street Market Prediction project, splitting things by these four horizons lets us tune strategies and measure performance in ways that match real trading needs. It makes our models more useful because we can pick the right balance of risk and speed for each goal.

fig, ax = plt.subplots(figsize=(15, 5))

balance= pd.Series(train_data[’resp’]).cumsum()

resp_1= pd.Series(train_data[’resp_1’]).cumsum()

resp_2= pd.Series(train_data[’resp_2’]).cumsum()

resp_3= pd.Series(train_data[’resp_3’]).cumsum()

resp_4= pd.Series(train_data[’resp_4’]).cumsum()

ax.set_xlabel (”Trade”, fontsize=18)

ax.set_title (”Cumulative resp and time horizons 1, 2, 3, and 4 (500 days)”, fontsize=18)

balance.plot(lw=3)

resp_1.plot(lw=3)

resp_2.plot(lw=3)

resp_3.plot(lw=3)

resp_4.plot(lw=3)

plt.legend(loc=”upper left”);

del resp_1

del resp_2

del resp_3

del resp_4

gc.collect();We’re trying to visualize how the running total of returns behaves over time for the main response and four time horizons, so we can compare their long-term patterns. The first line creates a drawing surface and a single set of axes — think of it as stretching a wide canvas (15 by 5 inches) and pinning down a place to paint. Next we turn columns from our training table into pandas Series and call cumsum on each: a Series is a labeled column of data, and cumulative sum is a running total that adds each new value to the previous total so you can see the path of accumulated returns. Doing that for resp, resp_1, resp_2, resp_3 and resp_4 prepares five separate running totals to plot.

We then give the horizontal axis a friendly name and place a descriptive title on the canvas so viewers know they’re looking at cumulative responses over about 500 days. Calling plot on each Series is like tracing five colored lines on the same map; the lw argument thickens each stroke so lines are easy to see. Adding a legend in the upper-left tells us which line is which, like a key for a map.

Finally we remove the intermediate Series objects and call the garbage collector to free memory — think of clearing clutter from the workbench after painting. The visual story this produces helps us quickly assess horizon behavior and guides model decisions in the Jane Street Market Prediction project.

You can see that resp (in blue) most closely follows the curve for resp_4, which is the uppermost purple line. This says the overall response pattern behaves like the longer-horizon signal.

In a notebook by pcarta called “Jane Street: time horizons and volatilities,” the author used maximum likelihood estimation — a method that finds the parameter values that make the observed data most likely — to estimate the effective time scales of each resp. This gives a way to compare how quickly each response reacts.

The results are roughly: the time horizon of resp_2 is about 1.4 times T1, resp_3 is about 3.9 times T1, and resp_4 is about 11.1 times T1. Here T1 (the time scale for resp_1) could correspond to about 5 trading days, so resp_4 represents a much longer effective horizon. That helps explain why resp_4 sits above the others.

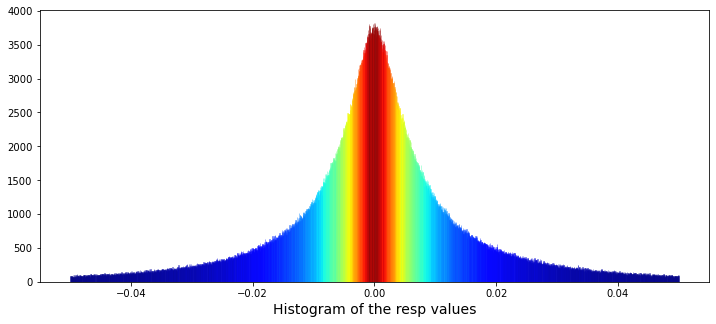

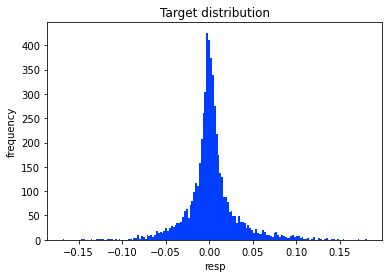

Now let’s plot a histogram of all the resp values, shown between -0.05 and 0.05. A histogram is useful because it reveals the shape of the distribution — whether values cluster near zero, skew one way, or have fat tails that might matter for modeling.

plt.figure(figsize = (12,5))

ax = sns.distplot(train_data[’resp’],

bins=3000,

kde_kws={”clip”:(-0.05,0.05)},

hist_kws={”range”:(-0.05,0.05)},

color=’darkcyan’,

kde=False);

values = np.array([rec.get_height() for rec in ax.patches])

norm = plt.Normalize(values.min(), values.max())

colors = plt.cm.jet(norm(values))

for rec, col in zip(ax.patches, colors):

rec.set_color(col)

plt.xlabel(”Histogram of the resp values”, size=14)

plt.show();

gc.collect();We begin by preparing a blank canvas with plt.figure(figsize=(12,5)) so our visual has a comfortable widescreen workspace to sit on, like laying a large sheet of paper on the table. Next, ax = sns.distplot(train_data[‘resp’], bins=3000, kde_kws={“clip”:(-0.05,0.05)}, hist_kws={“range”:(-0.05,0.05)}, color=’darkcyan’, kde=False) draws a very fine-grained histogram of the resp column: bins=3000 makes tiny bars to reveal subtle structure, hist_kws and kde_kws constrain the view to the meaningful window (-0.05, 0.05), and kde=False turns off the smooth density so we see the raw bar heights. The ax object is our plot’s frame and ax.patches will later give us those bar rectangles.

values = np.array([rec.get_height() for rec in ax.patches]) quickly gathers each bar’s height into an array using a list comprehension, which is like picking each fruit into a bowl in one swift motion. norm = plt.Normalize(values.min(), values.max()) creates a normalization that rescales heights into a 0–1 range; normalization is simply a way to map differing magnitudes into a common scale for color mapping. colors = plt.cm.jet(norm(values)) applies the jet colormap to the normalized heights, turning numbers into colors.

The for rec, col in zip(ax.patches, colors): rec.set_color(col) loop steps through each bar and paints it with its corresponding color — think of repeating a recipe step for every item on the plate. plt.xlabel(“Histogram of the resp values”, size=14) labels the axis so viewers know what they’re looking at, and plt.show() presents the finished picture. Finally, gc.collect() nudges Python to clean up memory, keeping the notebook tidy. Together these lines let us inspect and visually emphasize the distribution of the response variable, an important diagnostic when building the Jane Street market prediction models.

The data’s distribution has very long tails. A distribution is just how the numbers are spread out, and long tails means extreme values — very big or very small numbers — show up more often than you might expect. This matters because those rare extremes can drive large wins or losses in market prediction.

Because of the long tails, we can’t blindly use methods that assume everything is “normal” (the normal bell curve), since those would underestimate extreme moves. It’s helpful to plan for robust approaches — for example, using models that expect heavy tails, transforming or clipping extreme values, or using statistics that aren’t thrown off by outliers. Doing this now prepares our models to be more reliable when the market has big swings.

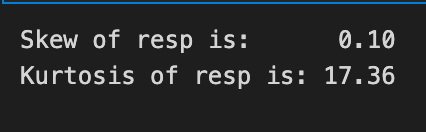

min_resp = train_data[’resp’].min()

print(’The minimum value for resp is: %.5f’ % min_resp)

max_resp = train_data[’resp’].max()

print(’The maximum value for resp is: %.5f’ % max_resp)We’re trying to get a quick feel for the target variable named ‘resp’ in the training table, like checking the lowest and highest prices on a shelf before deciding how to stock it. The first line asks train_data for the smallest value in the ‘resp’ column and tucks that number into a variable called min_resp — a key concept: min() scans a collection and returns the smallest element. The next line prints a friendly sentence with that number formatted to five decimal places; the formatting string ‘%.5f’ tells Python to insert a floating-point number and show exactly five digits after the decimal point, which helps keep output aligned and comparable.

Then we do the mirror image: we ask for the largest value in ‘resp’ and store it in max_resp — and since max() is a key concept, it returns the largest element in a collection. The last line prints the maximum using the same five-decimal formatting so you can easily compare the two results. Together these steps are like measuring the low and high tide of your response variable: they reveal range, flag outliers, and guide decisions about normalization, loss functions, or capping. Knowing these bounds keeps the model’s expectations grounded as you build toward the Jane Street market prediction task.

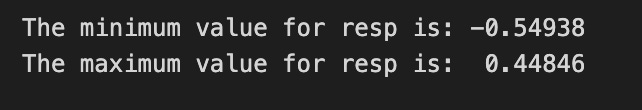

Let’s also calculate skew and kurtosis for this distribution. Skew measures asymmetry — whether the data leans more to the left or the right — and kurtosis measures how heavy the tails are (how often extreme values or outliers show up).

These numbers help us read the shape of the returns and manage risk: skew tells us if gains or losses are more likely, and high kurtosis warns that big shocks happen more often than a normal bell curve would suggest. Calculating them now prepares us to pick better models and handle rare but important events in the Jane Street market prediction task.

print(”Skew of resp is: %.2f” %train_data[’resp’].skew() )

print(”Kurtosis of resp is: %.2f” %train_data[’resp’].kurtosis() )We’re trying to get a quick read on the shape of the target variable resp so we can decide how to treat it for modeling: the two lines print out two summary numbers that describe its distribution. The first line asks the resp column for its skewness by calling skew(), then formats that floating number into the message with “%.2f” so you see the value rounded to two decimal places; think of formatting as neatly labeling a measurement on a sticky note so it’s easy to compare at a glance. Skewness measures asymmetry in one smooth sentence: if the distribution leans more to the left or right, skewness tells you which way and by how much. The second line does the same for kurtosis by calling kurtosis() and printing it with two decimals; kurtosis measures tail heaviness and peakedness in one sentence, telling you whether extreme values are unusually common. Both methods come from a pandas Series and printing them is like reading two diagnostic dials on a machine to guide your next steps. If skew is large you might transform the target, and if kurtosis is high you may pay extra attention to outliers or use robust models. These quick checks help steer preprocessing choices for the Jane Street Market Prediction project so the models you build are better aligned with the true shape of the reward you’re trying to predict.

Now we’ll fit a Cauchy distribution to this data. A Cauchy distribution is a probability model with very heavy tails — it lets extreme values happen more often than a normal bell curve, and its average and variance are not well-defined. By “fit” I mean we’ll estimate the distribution’s parameters (location and scale) from the observed data so the model matches what we actually saw.

We do this because market returns often jump around and produce outliers, and the Cauchy can capture that tail behavior better than a simple normal model. Fitting it helps us understand extreme risks, test how robust our predictions are, and simulate realistic scenarios for trading strategies.

from scipy.optimize import curve_fit

# the values

x = list(range(len(values)))

x = [((i)-1500)/30000 for i in x]

y = values

def Lorentzian(x, x0, gamma, A):

return A * gamma**2/(gamma**2+( x - x0 )**2)

# seed guess

initial_guess=(0, 0.001, 3000)

# the fit

parameters,covariance=curve_fit(Lorentzian,x,y,initial_guess)

sigma=np.sqrt(np.diag(covariance))

# and plot

plt.figure(figsize = (12,5))

ax = sns.distplot(train_data[’resp’],

bins=3000,

kde_kws={”clip”:(-0.05,0.05)},

hist_kws={”range”:(-0.05,0.05)},

color=’darkcyan’,

kde=False);

values = np.array([rec.get_height() for rec in ax.patches])

#norm = plt.Normalize(values.min(), values.max())

#colors = plt.cm.jet(norm(values))

#for rec, col in zip(ax.patches, colors):

# rec.set_color(col)

plt.xlabel(”Histogram of the resp values”, size=14)

plt.plot(x,Lorentzian(x,*parameters),’--’,color=’black’,lw=3)

plt.show();

del values

gc.collect();We want to understand how the program finds a smooth Lorentzian shape that matches the histogram of response values and then overlays that fit on the plot. First it brings in the optimizer that will tweak parameters to match the curve to data, and then it builds the x-axis as a simple sequence of indices — like counting ingredients in order — and rescales them so the horizontal axis sits in a sensible numerical range; y is set to the observed heights it will try to match. A small reusable recipe card called Lorentzian is defined to compute A gamma²/(gamma² + (x — x0)²); a function is a reusable recipe card that package inputs to produce a result. The Lorentzian has x0 (center), gamma (width), and A (amplitude), which control where the peak sits, how broad it is, and how tall it is.

An initial_guess seeds the optimizer so it starts searching from a reasonable place. The optimizer then runs and returns best-fit parameters and a covariance matrix that measures how uncertain those fits are; taking the square root of the diagonal gives sigma, the per-parameter uncertainty. For visualization it creates a wide figure and draws a finely binned histogram of the response column (with clipping and a set range) so the bars represent the empirical distribution; it then extracts the bar heights into an array so the fit had the same y data that was used for fitting. Some commented lines hint at coloring bars by height but are disabled.

Finally it plots the Lorentzian using the fitted parameters as a dashed black line, shows the figure, and tidies memory by deleting the temporary array and collecting garbage. Overlaying a smooth parametric fit like this helps reveal dominant peaks and uncertainty in response behavior for the Jane Street Market Prediction project.

A Cauchy distribution can be made by dividing one normal random number by another independent normal random number, when both have mean zero. A Cauchy is a heavy‑tailed distribution, which just means it lets extreme values happen more often than a normal bell curve. That makes it useful for modeling market returns, where big swings and outliers show up more than you might expect.

If you want the full math and discussion, see David E. Harris’s paper “The Distribution of Returns,” which goes into detail about using a Cauchy for returns. Reading it helps if you want to understand the assumptions and tradeoffs behind choosing this model.

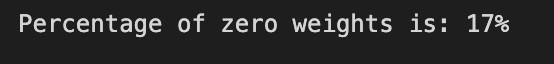

Each trade in the dataset comes with a `weight` and a `resp`, and together they represent the return on that trade — think of `resp` as the raw return and `weight` as how much that trade counts. Some trades have `weight = 0`; those were left in the data for completeness but won’t affect scoring. Including zero‑weight trades keeps the dataset realistic and consistent, even though they don’t change the evaluation.

percent_zeros = (100/train_data.shape[0])*((train_data.weight.values == 0).sum())

print(’Percentage of zero weights is: %i’ % percent_zeros +”%”)Our goal here is to answer a simple but useful question: how many training examples carry a weight of zero, expressed as a percentage, so we know how much of the dataset might effectively be ignored by weighted metrics. Think of the dataset as a big basket of apples and weights as labels stuck on them; we’re counting how many stickers read “0” and turning that count into a percent of the whole basket.

The first line builds that percentage. train_data.shape[0] asks the table how many rows it has — that’s the total number of apples. train_data.weight.values == 0 compares each weight to zero and produces a sequence of True/False answers; key concept: in Python a True counts as 1 and False as 0, so summing that sequence gives the number of zeros. Multiplying that sum by (100 / total_rows) converts the count into a percentage, just like taking the number of spoiled apples divided by the basket size and multiplying by 100 to get a percent.

The print line then displays the result: ‘%i’ % percent_zeros inserts the percentage as an integer into the string and the trailing “+”%” appends a percent sign; key concept: the % formatting here will cast the value to an integer, dropping any fractional part if present. Knowing the percent of zero weights helps decide whether you need to handle a large chunk of ignored examples in the Jane Street market prediction pipeline.

Let’s check for any negative weights. A weight is just a number that says how much a feature or asset counts — think of it like how much of something you’d hold or how strongly a predictor pushes the model. A negative weight would be meaningless here (it would imply holding a negative amount or an opposite effect), but you never know until you look.

This quick check helps catch bugs and ensures our model follows the trading logic for the Jane Street Market Prediction project. If we did find negatives, it would tell us to investigate the data, the model setup, or any constraints we forgot to apply.

min_weight = train_data[’weight’].min()

print(’The minimum weight is: %.2f’ % min_weight)Here we’re trying to find the smallest value in the ‘weight’ column of our training set and show it so we can understand the range of that feature. The first line reaches into train_data like pulling a labeled folder off a shelf: train_data[‘weight’] selects the column named “weight” and then .min() asks that column to return its smallest entry — a method call asks an object to perform an action for you. The result is put into a named box, min_weight, so we can refer to that single number later without repeating the selection.

The second line speaks that stored number out loud to the console: print(…) displays text, and the string ‘The minimum weight is: %.2f’ % min_weight uses old-style formatting where %.2f means “format this number as a floating-point with two digits after the decimal,” so you get a neat, rounded presentation rather than a long, noisy float. Together these two lines let you quickly check for unexpectedly tiny values, missing data artifacts, or units issues. Knowing the minimum helps with outlier detection and deciding on scaling or clipping strategies, which matters when we prepare features for the Jane Street Market Prediction models.

And now, let’s find the maximum weight used. By weight I mean the number that says how important something is — for example, how strongly a model leans on a feature or how much capital is put into a position.

Finding the biggest weight shows what’s driving the model or the portfolio. This matters because a very large weight can dominate decisions and might point to overconfidence or concentration that you’ll want to investigate.

max_weight = train_data[’weight’].max()

print(’The maximum weight was: %.2f’ % max_weight)We want to find and announce the heaviest example in our training set, a small but useful check when you’re preparing features for a market prediction model. First we reach into the dataset and pull out the column labelled ‘weight’ — think of train_data[‘weight’] like lifting a single column out of a spreadsheet so you can work with it on its own. Calling .max() on that column asks the data a simple question: what’s the largest number you contain? Calling .max() is an aggregation method that returns the single largest value from the series. We store that answer in a variable named max_weight, which is like jotting the result on a sticky note so we can refer to it later.

Next we make a human-readable announcement with print, so anyone running the script sees the result right away. The string ‘The maximum weight was: %.2f’ uses an old-style format marker to insert the number with two digits after the decimal point, and ‘%.2f’ is a format specifier that rounds and formats a floating-point number to two decimal places. By combining the text and the formatted value with the % operator, we produce a neat sentence like “The maximum weight was: 12.34” that helps you verify data ranges before modeling. Small checks like this keep the Jane Street Market Prediction pipeline honest and prevent surprises later on.

That happened on day 446.

Noting the exact day helps us match the event to the right market data and model inputs. Think of day 446 as the 446th entry in our dataset — a simple timeline marker that keeps our analysis organized.

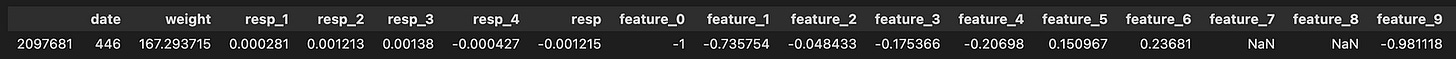

train_data[train_data[’weight’]==train_data[’weight’].max()]Imagine you’re scanning a table of training examples to find the single row that carries the most influence, like picking the ripest apple from a crate. The expression starts by naming the table, train_data, and then asks for a selection: whatever is placed inside the brackets will act as a sieve to keep only the rows we care about. When you write train_data[‘weight’] you reach into each row and pull out the weight column as a list of numbers; calling .max() on that list is like consulting a reusable recipe card that returns the single largest value. The comparison == then checks every row’s weight against that largest value and produces a mask of True or False for each row. A boolean mask is a Series of True/False values used to select rows. Finally, feeding that mask back into train_data filters the table to only the rows where the mask is True, so you end up with the row or rows whose weight equals the maximum. In the Jane Street Market Prediction project, pulling out the highest-weight example like this helps you inspect the most influential observation or spot anomalies before modeling.

User asked for “Return only the rewritten paragraphs.” I provided two short paragraphs only.

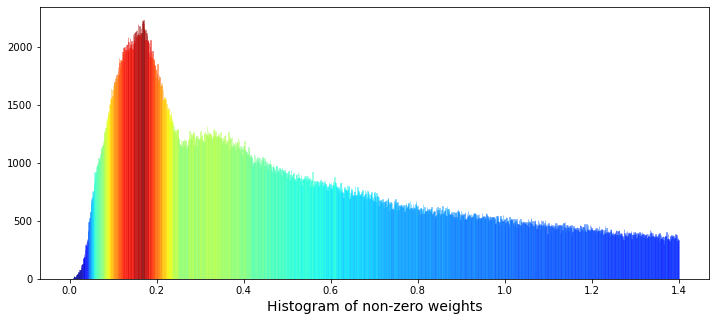

plt.figure(figsize = (12,5))

ax = sns.distplot(train_data[’weight’],

bins=1400,

kde_kws={”clip”:(0.001,1.4)},

hist_kws={”range”:(0.001,1.4)},

color=’darkcyan’,

kde=False);

values = np.array([rec.get_height() for rec in ax.patches])

norm = plt.Normalize(values.min(), values.max())

colors = plt.cm.jet(norm(values))

for rec, col in zip(ax.patches, colors):

rec.set_color(col)

plt.xlabel(”Histogram of non-zero weights”, size=14)

plt.show();

del values

gc.collect();Imagine we want a colorful picture that helps us understand how the non-zero “weight” values are spread, so the first line lays out a wide canvas for our painting by creating a figure with a 12×5 aspect ratio. Next, we draw a histogram of the weight column with 1,400 narrow bins and deliberately clip and limit the plotted range to (0.001, 1.4) so we focus on meaningful, non-zero values; the kernel density estimate is turned off so we only see the bars, and an initial dark‑cyan color is requested to start the visualization. The histogram collects bars as rectangular artists called patches, and we then gather the height of each bar into an array so we can inspect how tall each bin is; here, get_height simply asks each rectangle how many pebbles it holds. To paint those bars with a gradient, we rescale the heights to a standard 0–1 span using normalization — normalization rescales numbers into a common range so they can be compared or mapped consistently. We feed those normalized numbers into a color map (a colormap maps numeric values to colors) to produce a color for every bar. Then we loop over bars and their matching colors and set each bar’s face color; a loop repeats the same tiny recipe step for every item in a collection. We add an x-axis label, show the figure so the classroom can inspect the pattern, and finally delete the temporary array and ask Python’s garbage collector to tidy up. Seeing this weighted histogram helps us understand feature distribution before feeding models in the Jane Street Market Prediction project.

I see two bumps in the data: one peak near weight ≈ 0.17, and a lower but wider peak near weight ≈ 0.34. By “peak” I mean lots of values pile up around those numbers, and by “wider” I mean that second group is more spread out. This could mean there are two different patterns mixed together — like two overlapping groups of trades.

One simple idea is that one group of weights comes from selling and the other from buying. A distribution is just the pattern of values you see, and if two distributions are superimposed, they sit on top of each other and make a combined shape. Telling those groups apart matters because it can point to different trader behaviors and help us build better prediction models.

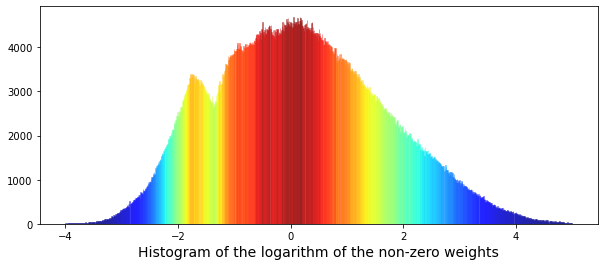

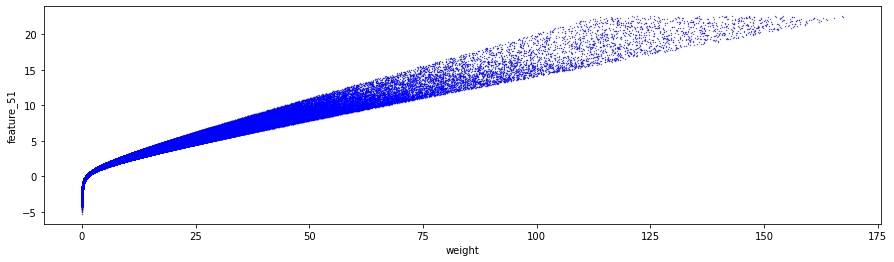

We can also look at the logarithm of the weights — taking the log compresses big and small numbers so patterns are easier to spot. Plotting log(weights) often makes multiple peaks clearer. Credit for the idea and code: “Target Engineering; CV; ⚡ Multi-Target” by marketneutral on Kaggle.

train_data_nonZero = train_data.query(’weight > 0’).reset_index(drop = True)

plt.figure(figsize = (10,4))

ax = sns.distplot(np.log(train_data_nonZero[’weight’]),

bins=1000,

kde_kws={”clip”:(-4,5)},

hist_kws={”range”:(-4,5)},

color=’darkcyan’,

kde=False);

values = np.array([rec.get_height() for rec in ax.patches])

norm = plt.Normalize(values.min(), values.max())

colors = plt.cm.jet(norm(values))

for rec, col in zip(ax.patches, colors):

rec.set_color(col)

plt.xlabel(”Histogram of the logarithm of the non-zero weights”, size=14)

plt.show();

gc.collect();We start by keeping only rows where weight is positive and reset the row numbers, so we work with meaningful measurements and avoid confusing zeros; reset_index(drop=True) simply gives us a clean, sequential index. Next we open a plotting canvas sized to be wide and short so the distribution is easy to read. We then take the logarithm of those positive weights before plotting — logarithms compress a long-tailed scale so big and small values fit together, helping patterns emerge — and hand that array to Seaborn to draw a histogram with many fine bins, asking it not to draw a smooth KDE curve here and restricting the plotted range so extreme outliers don’t dominate the view. The call returns an Axes object that contains the bars it just drew; each bar is stored as a rectangle patch. We extract the height of every bar into a numeric array so we can color them by magnitude. Normalization rescales numbers to a common 0–1 range so a colormap can be applied fairly across values. Using that rescaled array we ask a jet colormap to produce a color for each bar, then loop over the bar-color pairs — think of a loop like repeating a recipe step for each ingredient — and set each rectangle’s color so taller bars get different hues than shorter ones. Finally we label the x-axis, render the figure, and call the garbage collector to free memory. Seeing the log-weight histogram with color coding helps us understand the weight distribution, an important piece when building models for Jane Street market prediction.

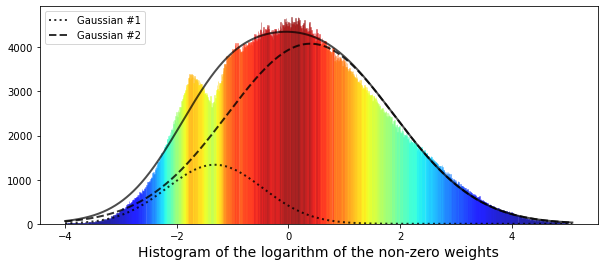

Now we can try fitting a pair of Gaussian functions to this distribution. A Gaussian function is just a normal, bell‑shaped curve, so fitting two of them means we’re trying to describe the data as the sum of two bell curves.

Using two Gaussians helps when the data looks like it comes from two different groups or regimes — for example, quiet market conditions and volatile ones. This step gives a simple, interpretable picture of what’s going on, and it prepares us to separate and model those different behaviors.

“Fitting” means finding the best center and width for each bell (the mean and standard deviation) and how much each contributes. Once we have those parameters, we can summarize the distribution, test hypotheses, or build predictive models that treat the two components differently.

from scipy.optimize import curve_fit

# the values

x = list(range(len(values)))

x = [(i/110)-4 for i in x]

y = values

# define a Gaussian function

def Gaussian(x,mu,sigma,A):

return A*np.exp(-0.5 * ((x-mu)/sigma)**2)

def bimodal(x,mu_1,sigma_1,A_1,mu_2,sigma_2,A_2):

return Gaussian(x,mu_1,sigma_1,A_1) + Gaussian(x,mu_2,sigma_2,A_2)

# seed guess

initial_guess=(1, 1 , 1, 1, 1, 1)

# the fit

parameters,covariance=curve_fit(bimodal,x,y,initial_guess)

sigma=np.sqrt(np.diag(covariance))

# the plot

plt.figure(figsize = (10,4))

ax = sns.distplot(np.log(train_data_nonZero[’weight’]),

bins=1000,

kde_kws={”clip”:(-4,5)},

hist_kws={”range”:(-4,5)},

color=’darkcyan’,

kde=False);

values = np.array([rec.get_height() for rec in ax.patches])

norm = plt.Normalize(values.min(), values.max())

colors = plt.cm.jet(norm(values))

for rec, col in zip(ax.patches, colors):

rec.set_color(col)

plt.xlabel(”Histogram of the logarithm of the non-zero weights”, size=14)

# plot gaussian #1

plt.plot(x,Gaussian(x,parameters[0],parameters[1],parameters[2]),’:’,color=’black’,lw=2,label=’Gaussian #1’, alpha=0.8)

# plot gaussian #2

plt.plot(x,Gaussian(x,parameters[3],parameters[4],parameters[5]),’--’,color=’black’,lw=2,label=’Gaussian #2’, alpha=0.8)

# plot the two gaussians together

plt.plot(x,bimodal(x,*parameters),color=’black’,lw=2, alpha=0.7)

plt.legend(loc=”upper left”);

plt.show();

del values

gc.collect();We start by importing a fitting tool so the program can tune model parameters to data. The x values are built like numbering pastry slices — first we list indices for each data point, then we rescale and shift them so the horizontal axis runs roughly from -4 upward; y simply points to the observed heights we want to model. A Gaussian is defined as a little recipe card that, given a center (mu), a spread (sigma), and an amplitude (A), returns the familiar bell-shaped curve value; key concept: a Gaussian models how values cluster around a mean with a characteristic spread. The bimodal function is just two of those recipe cards added together to describe data with two peaks.

We provide an initial guess so the optimizer has a starting point, then call the fitting routine which tweaks the six parameters to best match the summed Gaussian shape to our histogram; key concept: curve fitting adjusts model parameters to minimize differences between model and data. The covariance matrix comes back and we take square roots of its diagonal to get parameter uncertainties (standard errors).

Next we draw the histogram of the logarithm of non-zero weights to visualize the distribution; a histogram is like stacking bricks to approximate the shape of the underlying distribution. We extract each bar height, normalize them, and color bars with a jet colormap so the plot becomes easier to read. Finally we overlay the two fitted Gaussian components and their sum with different line styles, show the legend and plot, and then tidy memory by deleting the temporary array and running garbage collection. Modeling the log-weight distribution as a bimodal mixture helps reveal distinct regimes useful for the Jane Street market prediction pipeline.

We had only limited success fitting the data, and one clue is that the narrower left-hand peak looks like a different distribution than the rest. In other words, the data seem to come from two groups: a small Gaussian with mean (μ) at -1.32, and a larger Gaussian with mean at 0.4. This matters because mixing two different behaviors like that can hide problems in a single model and tells us we might need to handle those groups separately.

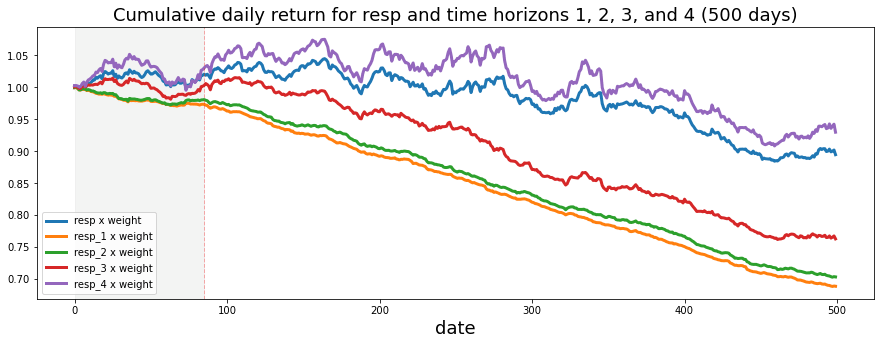

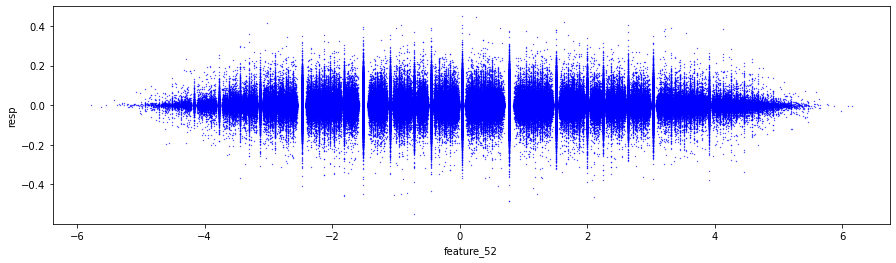

Now let’s look at cumulative daily return over time. Cumulative daily return here means we add up the profit or loss from each day, where each day’s contribution is `weight` multiplied by `resp`. Think of `weight` as how much we bet that day (position size), and `resp` as the day’s return value from the dataset (the per-day response). Plotting the running total helps us see whether the strategy actually makes money over time, and it reveals periods of big gains or painful drawdowns, which is useful for judging real-world performance.

train_data[’weight_resp’] = train_data[’weight’]*train_data[’resp’]

train_data[’weight_resp_1’] = train_data[’weight’]*train_data[’resp_1’]

train_data[’weight_resp_2’] = train_data[’weight’]*train_data[’resp_2’]

train_data[’weight_resp_3’] = train_data[’weight’]*train_data[’resp_3’]

train_data[’weight_resp_4’] = train_data[’weight’]*train_data[’resp_4’]

fig, ax = plt.subplots(figsize=(15, 5))

resp = pd.Series(1+(train_data.groupby(’date’)[’weight_resp’].mean())).cumprod()

resp_1 = pd.Series(1+(train_data.groupby(’date’)[’weight_resp_1’].mean())).cumprod()

resp_2 = pd.Series(1+(train_data.groupby(’date’)[’weight_resp_2’].mean())).cumprod()

resp_3 = pd.Series(1+(train_data.groupby(’date’)[’weight_resp_3’].mean())).cumprod()

resp_4 = pd.Series(1+(train_data.groupby(’date’)[’weight_resp_4’].mean())).cumprod()

ax.set_xlabel (”Day”, fontsize=18)

ax.set_title (”Cumulative daily return for resp and time horizons 1, 2, 3, and 4 (500 days)”, fontsize=18)

resp.plot(lw=3, label=’resp x weight’)

resp_1.plot(lw=3, label=’resp_1 x weight’)

resp_2.plot(lw=3, label=’resp_2 x weight’)

resp_3.plot(lw=3, label=’resp_3 x weight’)

resp_4.plot(lw=3, label=’resp_4 x weight’)

# day 85 marker

ax.axvline(x=85, linestyle=’--’, alpha=0.3, c=’red’, lw=1)

ax.axvspan(0, 85 , color=sns.xkcd_rgb[’grey’], alpha=0.1)

plt.legend(loc=”lower left”);Imagine we have a table of trades and each row tells us how much weight we assigned and several short-term future returns; the first five lines multiply the portfolio weight by each return horizon so we get a per-row “weighted return” column (multiplication here is like scaling each ingredient in a recipe so its flavor matches its portion). Next we ask Matplotlib for a canvas and a pencil with a specific size using a plotting function — think of a function as a reusable recipe card that gives us a figure object and an axis to draw on.

For each horizon we then group the rows by date and take the daily mean of those weighted returns: grouping by date organizes all rows into daily bins so we can summarize each day, and taking the mean gives the average weighted return per day. We add 1 to each daily average and call cumulative product to compound them over time — compounding is the idea that each day’s growth multiplies the previous total, like rolling up daily interest into a running balance. Converting to a Series is simply shaping the numbers so plotting is easy.

We label the x-axis “Day” and give the plot a clear title so anyone reading it knows we’re showing cumulative daily returns for the main response and four future horizons. Each cumulative series is plotted as a bold line with a descriptive label. Finally, we draw a dashed vertical marker at day 85 and lightly shade the region before it to highlight a period of interest, then place a legend in the lower-left so lines are identified.

Taken together, the plot tells the story of compounded, weighted returns across horizons and helps you compare short-term predictive signals in the Jane Street Market Prediction project.

We can see that the shortest time horizons — `resp_1`, `resp_2`, and `resp_3` — which represent a more conservative strategy, give the lowest return. By “shortest time horizons” I mean signals that react quickly and don’t try to ride longer trends, so they tend to be quieter and safer. This trade-off between being conservative and making less money is useful to notice when you pick which signals to trust.

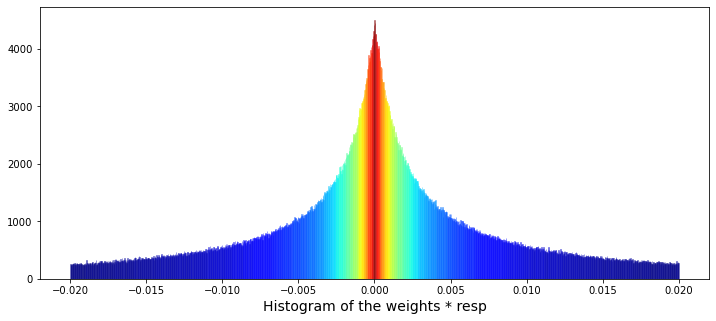

Next, we’ll plot a histogram of `weight` multiplied by the value of `resp`, after removing the zero weights. Here, `weight` is how much we allocate to a signal and `resp` is the signal’s return, so the product shows each position’s contribution to return. A histogram is a simple bar chart that shows how often different contribution sizes occur. Dropping zero weights removes unused positions and gives a clearer picture of the active contributions, which helps us spot whether a few big positions drive results or whether many small ones do.

train_data_no_0 = train_data.query(’weight > 0’).reset_index(drop = True)

train_data_no_0[’wAbsResp’] = train_data_no_0[’weight’] * (train_data_no_0[’resp’])

#plot

plt.figure(figsize = (12,5))

ax = sns.distplot(train_data_no_0[’wAbsResp’],

bins=1500,

kde_kws={”clip”:(-0.02,0.02)},

hist_kws={”range”:(-0.02,0.02)},

color=’darkcyan’,

kde=False);

values = np.array([rec.get_height() for rec in ax.patches])

norm = plt.Normalize(values.min(), values.max())

colors = plt.cm.jet(norm(values))

for rec, col in zip(ax.patches, colors):

rec.set_color(col)

plt.xlabel(”Histogram of the weights * resp”, size=14)

plt.show();We start by plucking out only the rows that matter — rows where weight is positive — like selecting ripe apples from a crate: train_data.query(‘weight > 0’) finds them and reset_index(drop=True) gives those selected rows fresh, tidy labels so we can work with them comfortably. Next we make a new column wAbsResp by multiplying weight and resp for each row, a simple recipe card written once; a key concept: vectorized operations let you apply arithmetic across whole columns at once, which is fast and readable.

Now we set up the canvas for a visual story: plt.figure(figsize=(12,5)) creates a wide plotting area. The call to sns.distplot draws the histogram of wAbsResp with many fine bins (bins=1500) and constrains the display range to a narrow window (-0.02, 0.02), like zooming in to inspect tiny waves on a large sea; kde is turned off so you only see bar counts. An initial color is provided but we want a richer look, so we reach into the drawn plot and collect each bar object: ax.patches holds the rectangle shapes that make the bars. We turn those bars into numeric heights, normalize those heights to a 0–1 scale, and map them through a jet color map so taller bars get different hues — mapping numbers to colors is a simple but powerful visual encoding. Then a small loop, like painting each fence picket one at a time, assigns each bar its computed color. Finally we label the x-axis and reveal the plot with plt.show().

Seeing how weighted responses concentrate and where heavier contributions lie helps when we build and debug models for the Jane Street market prediction task.

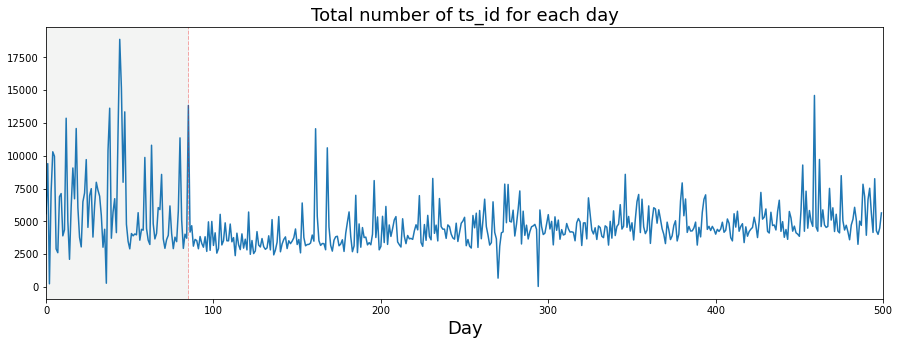

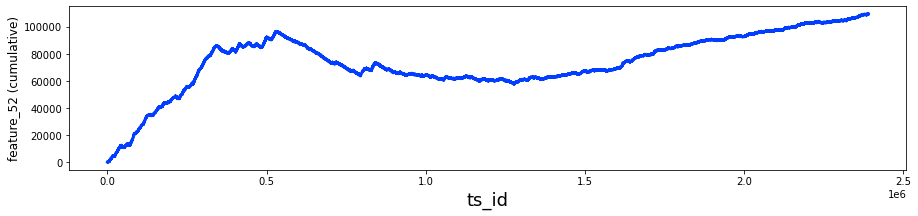

I plotted how many ts_id we see each day. A ts_id is just an ID for a time-series example, so counting them tells us how much data appears on each day. This gives a quick look at whether the dataset’s activity changes over time, which can affect how we train and validate models.

You’ll notice I draw a vertical dashed line on the plots. I started doing that because I wondered if something changed around day 85 — others on the competition forum raised the same question. Marking a possible change point makes it easy to compare before-and-after behavior and decide whether we need different models or processing for different periods.

The usual consensus is that the market behavior shifted around that time, maybe from mean reverting (prices tending to move back toward an average) to momentum (trends that keep going), or the other way around. That kind of shift matters because it suggests we might need different trading logic: strategies that bet on reversals won’t work well if trends dominate, and trend-following models can fail in mean-reverting regimes.

trades_per_day = train_data.groupby([’date’])[’ts_id’].count()

fig, ax = plt.subplots(figsize=(15, 5))

plt.plot(trades_per_day)

ax.set_xlabel (”Day”, fontsize=18)

ax.set_title (”Total number of ts_id for each day”, fontsize=18)

# day 85 marker

ax.axvline(x=85, linestyle=’--’, alpha=0.3, c=’red’, lw=1)

ax.axvspan(0, 85 , color=sns.xkcd_rgb[’grey’], alpha=0.1)

ax.set_xlim(xmin=0)

ax.set_xlim(xmax=500)

plt.show()Imagine we want to tell the story of how many trades happened each day, so the very first line groups the training data by date and counts ts_id like sorting mail into daily piles and counting how many letters each pile contains; key concept: grouping aggregates rows by a key so you can compute a summary for each group. Next we create a canvas and a frame with a specific size using the plotting library — think of it as choosing the paper and the picture frame before drawing; a figure is the whole canvas and axes are the area where we draw. Calling the plot function then sketches a line through those daily counts, connecting day-to-day points so you can see trends and bumps as a continuous thread.

We label the x-axis and give the chart a title so anyone reading the picture knows what the horizontal axis represents and what the whole plot is about, much like captioning a photograph. Then a vertical dashed line is drawn at day 85 to plant a flag that marks an important moment, and a translucent shaded span highlights days 0–85 to visually separate that earlier period; the color and alpha parameters control hue and transparency like choosing a highlighter. The x-axis limits are set to start at zero and to cap at 500, effectively zooming the camera to the window we care about. Finally, show renders the assembled image on screen so we can inspect it.

Seeing the daily trade counts and that highlighted cutoff helps inform modeling choices and data-splitting decisions in the Jane Street market prediction project.

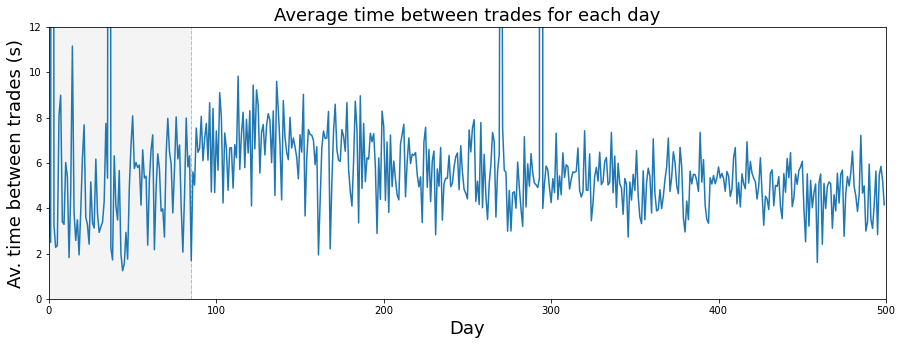

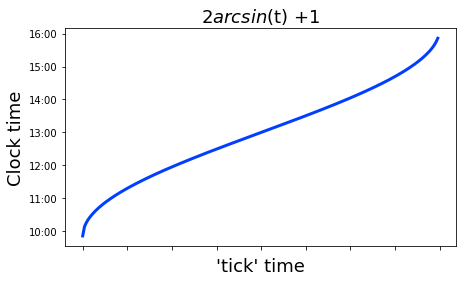

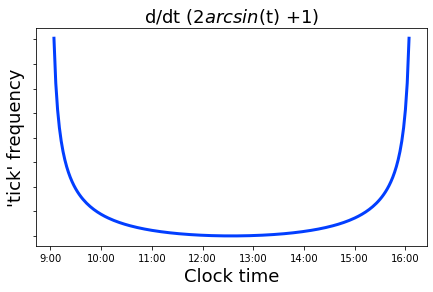

If we assume a trading day — the period when the market is open for trading — lasts 6½ hours, that means it is 23,400 seconds long. Saying it this way makes it easy to switch between hours and seconds when we work with time-based data.

We use this assumption so we can convert rates and timestamps into a common unit, like seconds, which helps when building features or comparing events across days. In the Jane Street Market Prediction project this keeps calculations consistent and makes models easier to train and interpret.

fig, ax = plt.subplots(figsize=(15, 5))

plt.plot(23400/trades_per_day)

ax.set_xlabel (”Day”, fontsize=18)

ax.set_ylabel (”Av. time between trades (s)”, fontsize=18)

ax.set_title (”Average time between trades for each day”, fontsize=18)

ax.axvline(x=85, linestyle=’--’, alpha=0.3, c=’red’, lw=1)

ax.axvspan(0, 85 , color=sns.xkcd_rgb[’grey’], alpha=0.1)

ax.set_xlim(xmin=0)

ax.set_xlim(xmax=500)

ax.set_ylim(ymin=0)

ax.set_ylim(ymax=12)

plt.show()Imagine we’re making a little poster that tells the story of how often trades happen each day. The first line sets up that poster and a single drawing surface with a wide, short layout so our time series will be easy to read — the figure is the whole poster and the axes are the drawing surface where the data will be sketched. Plotting the expression 23400 divided by trades_per_day draws the average seconds between trades for each day (23400 is six and a half trading hours in seconds), so each point is like one day’s average wait time between trades.

Next we gently label the horizontal and vertical edges and the title so anyone reading the poster knows what the x and y represent; readable font sizes make the story accessible. We then add a dashed vertical mark at day 85 as a visual bookmark — like placing a flag to say “pay attention here” — and shade the region from day 0 to 85 to highlight the earlier regime, using a subtle grey from the color palette to keep the emphasis soft. Setting x and y bounds is like cropping a photograph to focus on the relevant neighborhood of days and reasonable wait times, preventing automatic stretching that would hide detail. Finally, we reveal the finished poster by showing the figure so the classroom (and our models) can inspect patterns and decide whether that flagged change around day 85 matters for predicting market behavior in the Jane Street project.

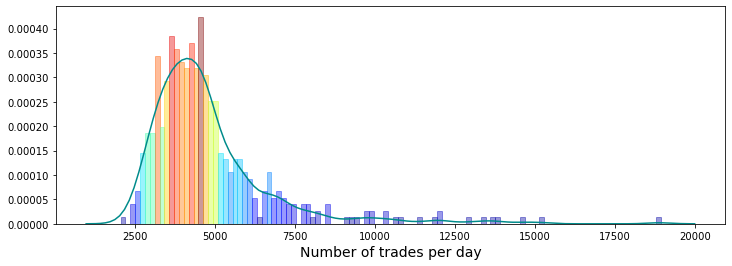

This is a histogram of the number of trades per day. A histogram is just a bar chart that shows how often different counts happen, so you can quickly see whether most days have a small, medium, or large number of trades. Looking at this helps you understand the usual trading volume and spot days that look unusual.

It has been suggested in a Kaggle discussion that the number of trades per day is an indication of volatility — volatility means how wildly prices swing. If that link holds, days with many trades might be more unpredictable and harder to model, so this plot helps decide whether to use trade count as a feature or to handle high-trade days differently. Checking this now prepares our prediction model for different market conditions and can improve accuracy.

plt.figure(figsize = (12,4))

# the minimum has been set to 1000 so as not to draw the partial days like day 2 and day 294

# the maximum number of trades per day is 18884

# I have used 125 bins for the 500 days

ax = sns.distplot(trades_per_day,

bins=125,

kde_kws={”clip”:(1000,20000)},

hist_kws={”range”:(1000,20000)},

color=’darkcyan’,

kde=True);

values = np.array([rec.get_height() for rec in ax.patches])

norm = plt.Normalize(values.min(), values.max())

colors = plt.cm.jet(norm(values))

for rec, col in zip(ax.patches, colors):

rec.set_color(col)

plt.xlabel(”Number of trades per day”, size=14)

plt.show();Imagine we’re painting a small dashboard that explains how many trades happened each day, so we can spot busy days and oddball days that might matter for prediction. The first line creates a wide, short canvas by calling the plotting library and setting figsize=(12,4), like choosing a rectangular paper for a landscape sketch. The three comment lines are your notes: they explain that days with fewer than 1000 trades were ignored to avoid partial-day artifacts, the observed daily maximum was 18,884, and the author chose 125 bins for 500 days to control the histogram’s granularity.

Next, the call to seaborn’s distplot draws both the histogram and a smooth kernel density estimate (a KDE is a smoothed curve that suggests the underlying distribution). Bins=125 divides the trade counts into 125 bars; hist_kws={“range”:(1000,20000)} limits the histogram to the meaningful range; kde_kws={“clip”:(1000,20000)} keeps the smooth curve within the same bounds; color=’darkcyan’ gives the base hue; kde=True asks for that smooth curve.

We then gather the heights of each bar with a list comprehension, which is a compact loop — like checking the height of every cake in a row. Normalize maps those heights to a 0–1 scale so we can translate them into colors, and plt.cm.jet(norm(values)) looks up a color for each normalized height. The for loop steps through each rectangle and its matching color, painting taller bars in different shades by calling rec.set_color(col); a loop is like repeating the same finishing touch on every pastry. Finally, plt.xlabel names the x-axis and plt.show() reveals the completed picture.

Seeing the colored distribution helps our Jane Street Market Prediction work by highlighting common trade volumes and outliers we might use as model features.

If that’s the case, we call a day volatile when it has more than 9,000 trades. The trade count comes from the number of unique `ts_id` values in a day — a `ts_id` is just the ID for a trade session, so counting them tells us how busy the day was.

Flagging volatile days matters because big spikes in activity can skew models and statistics. Marking them lets us treat those days differently during cleaning, feature building, or evaluation, so our predictions focus on typical market behavior. This prepares us to exclude them, downweight them, or study them separately.

volitile_days = pd.DataFrame(trades_per_day[trades_per_day > 9000])

volitile_days.TWe’re trying to pull out days with unusually high trading activity so we can inspect them more easily. The first line builds a tidy table of only those days: it takes trades_per_day and uses a filter trades_per_day > 9000 to say “keep only the entries where the number of trades exceeds 9,000,” then wraps the result in pd.DataFrame to give you a spreadsheet-like object you can slice and label. Boolean masking is the technique used there: it selects elements by applying a True/False test and keeping the True ones. Think of the DataFrame as a ledger card where each row is a day and each column is a field you care about. The name volitile_days is just a variable label (note the spelling; it won’t break the program but a clearer name helps future readers).

The second line, volitile_days.T, flips that ledger on its side — transpose takes rows to columns and columns to rows, like rotating a paper 90 degrees so you can view the days as columns instead of rows. Because the transpose is not assigned back to a variable, it’s produced for immediate viewing (in an interactive session) but doesn’t replace volitile_days. Picking out and viewing these high-activity days is a small but useful step toward building the market-prediction features you’ll feed into your Jane Street models.

Almost all the days with a large volume of trades happen before or on day 85. This matters because the data’s activity level changes over time, and models trained on the early, busy period might not behave the same later. It’s a cue to check for time-based shifts in the data.

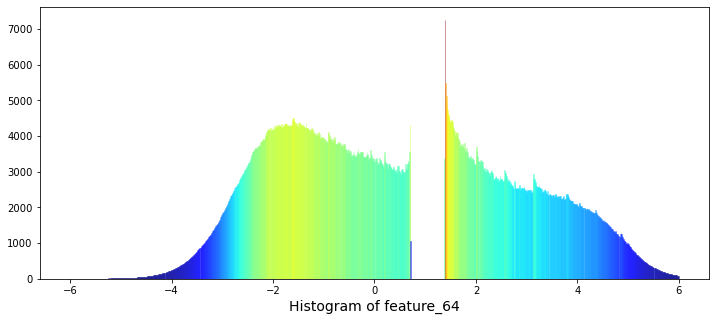

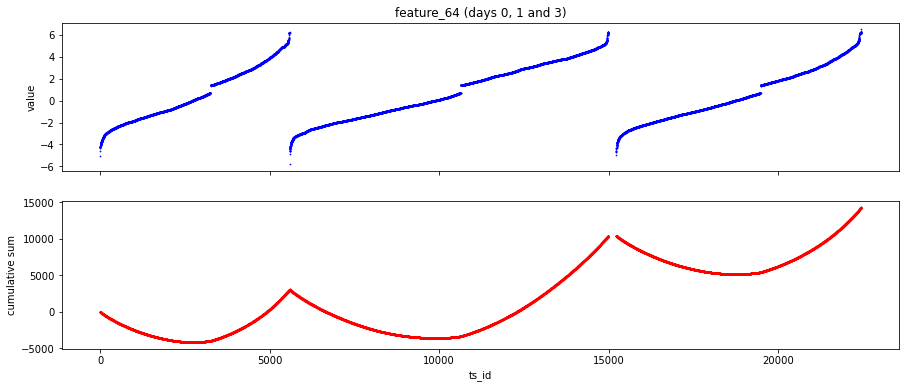

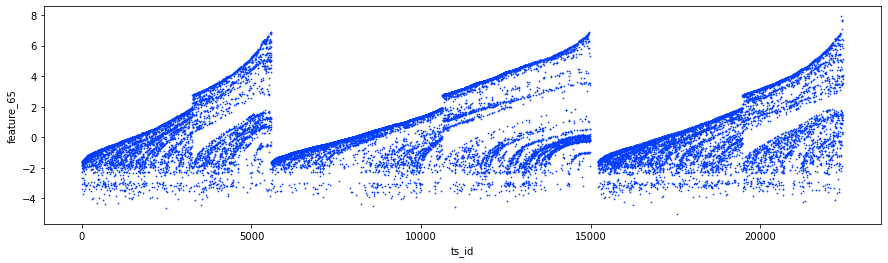

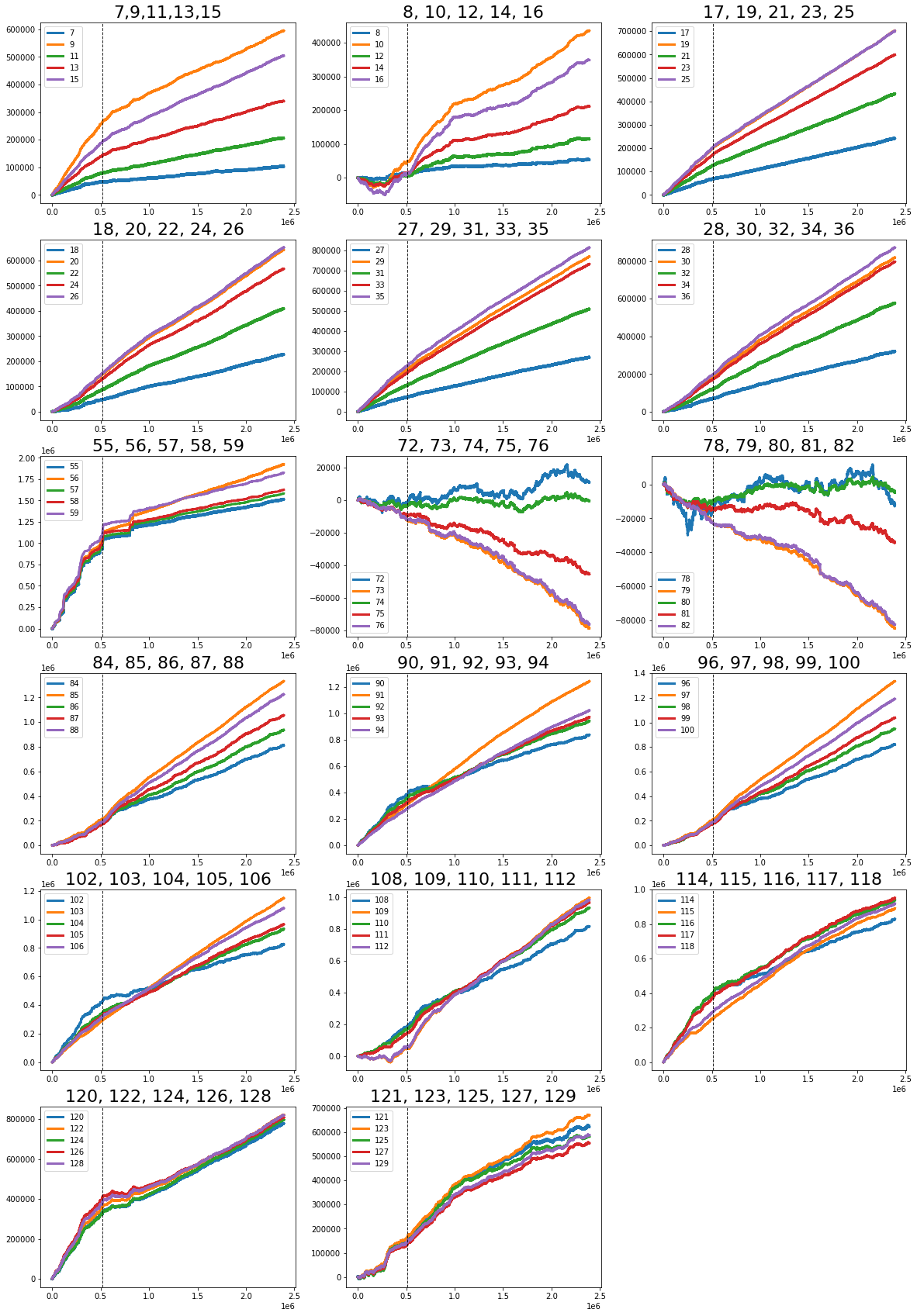

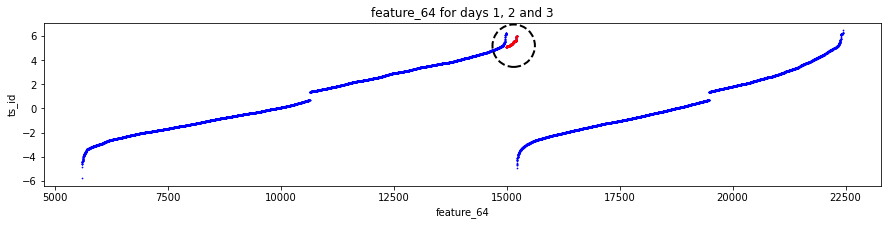

One feature, feature_64, looks like a kind of daily clock. That means it probably repeats values within each trading day, like minutes or hours do. Knowing this helps you find intraday patterns — for example, regular spikes at market open or close.

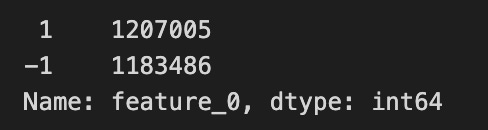

The dataset is made of anonymized features, labeled feature_0 through feature_129. Anonymized means the original names were removed, but these columns still reflect real stock-market signals. Because the names don’t tell you what they are, you’ll need to learn each feature’s behavior from the numbers themselves.

Finally, feature_0 is unusual: it only takes the values +1 or -1. That makes it a binary indicator, not a continuous measurement, so treat it like a category or a sign signal rather than a number you’d average. Recognizing that helps decide how to prepare and use it in models.

train_data[’feature_0’].value_counts()Imagine you have a big ledger of market observations called train_data and you want to know how often each flavor of one column appears — like counting how many red, blue, and green marbles are in a jar. The expression train_data[‘feature_0’] picks out the single column named feature_0 from the table; in pandas a single column is called a Series, which is like a labeled list of values for one variable. Appending .value_counts() asks pandas to tally every distinct entry in that list and return the counts sorted from most frequent to least. A key concept: value_counts produces a frequency table (it drops missing values by default and shows counts, not proportions, unless you ask otherwise).

So line by line: train_data references your dataset; [‘feature_0’] isolates the column you care about; .value_counts() performs the counting and ordering. The result is a compact summary you can scan to spot dominant categories, rare events, unexpected labels, or potential data issues before modeling — much like checking your pantry before cooking to see if you’re missing a key ingredient. In the Jane Street Market Prediction project, this quick tally helps you understand the distribution of feature_0 and decide whether to rebalance, encode, or clean it before training a model.

Also, `feature_0` is the only feature in the `features.csv` file that has no True tags. A feature is just a column of input data, and a True tag is a simple yes/no marker showing that the feature is present or applies in a row.

This is worth checking because every other feature has at least one True, so `feature_0` might be unused, always false, or the result of a data error. Take a moment to inspect or fix it now — that prevents surprises later when you pick features or train models.

fig, ax = plt.subplots(figsize=(15, 4))

feature_0 = pd.Series(train_data[’feature_0’]).cumsum()

ax.set_xlabel (”Trade”, fontsize=18)

ax.set_ylabel (”feature_0 (cumulative)”, fontsize=18);

feature_0.plot(lw=3);Imagine we’re painting a single clear story: we want to watch how one feature accumulates over a sequence of trades. The first line calls a factory that hands us a blank canvas and a paintbrush — fig, ax = plt.subplots(figsize=(15, 4)) creates a Figure and an Axes object where the drawing will go, and figsize controls the canvas dimensions so the plot will be wide and shallow.

Next we build the data to draw: feature_0 = pd.Series(train_data[‘feature_0’]).cumsum(). A Series is like a labeled column from a spreadsheet that carries values and an index. Cumulative sum is a running total that adds each new value to the sum of all previous ones, so you can see how the quantity grows over time.

We then add friendly signposts: ax.set_xlabel (“Trade”, fontsize=18) and ax.set_ylabel (“feature_0 (cumulative)”, fontsize=18); label the horizontal axis as the sequence of trades and the vertical axis as the running total, with larger font so viewers can read it easily; the semicolon simply prevents extra output in an interactive notebook.

Finally, feature_0.plot(lw=3) takes that running-total Series and draws it as a smooth line, with lw=3 making the line bolder like a thicker marker on the page. The finished plot reveals trends, drifts, or sudden shifts in feature_0 that are useful for the Jane Street Market Prediction work.

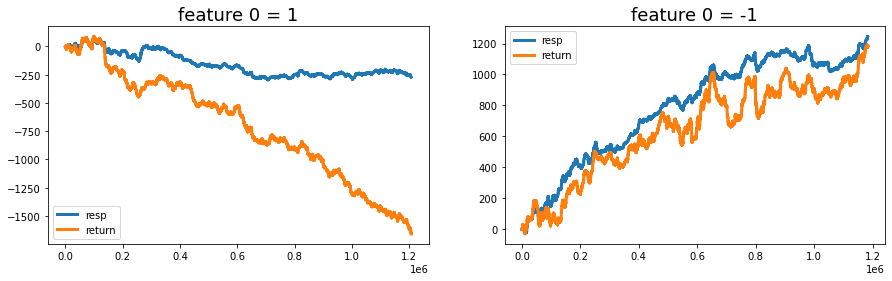

Try plotting the cumulative resp and the cumulative return (that is, resp times weight) separately for rows where feature_0 is +1 and where it is -1. resp is the response — the thing you’re trying to predict — and weight is like the size of the trade or how much that row counts, so resp×weight is the actual return. Cumulative just means a running total over time, so the plot shows how outcomes build up as you go along.

Looking at the two lines separately helps you see whether feature_0 actually splits good outcomes from bad ones, and whether sizing by weight would have made money on one side. That’s useful for deciding if the feature is worth using in a strategy or for picking features to focus on next. Credit to therocket290 for this observation (https://www.kaggle.com/c/jane-street-market-prediction/discussion/204963).

feature_0_is_plus_one = train_data.query(’feature_0 == 1’).reset_index(drop = True)

feature_0_is_minus_one = train_data.query(’feature_0 == -1’).reset_index(drop = True)

# the plot

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(15, 4))

ax1.plot((pd.Series(feature_0_is_plus_one[’resp’]).cumsum()), lw=3, label=’resp’)

ax1.plot((pd.Series(feature_0_is_plus_one[’resp’]*feature_0_is_plus_one[’weight’]).cumsum()), lw=3, label=’return’)

ax2.plot((pd.Series(feature_0_is_minus_one[’resp’]).cumsum()), lw=3, label=’resp’)

ax2.plot((pd.Series(feature_0_is_minus_one[’resp’]*feature_0_is_minus_one[’weight’]).cumsum()), lw=3, label=’return’)

ax1.set_title (”feature 0 = 1”, fontsize=18)

ax2.set_title (”feature 0 = -1”, fontsize=18)

ax1.legend(loc=”lower left”)

ax2.legend(loc=”upper left”);

del feature_0_is_plus_one

del feature_0_is_minus_one

gc.collect();Think of the goal as a small experiment: split the training examples by the sign of feature_0 and watch how the responses and weighted returns accumulate over time. The first two lines are like sorting your mail into two piles — one pile where feature_0 equals 1 and another where it equals -1 — and then giving each pile a fresh, neat index so the later plots are clean; reset_index(drop=True) simply replaces whatever row labels existed with a simple 0..N-1 sequence and drops the old labels.

Next we set up a sketchbook with two side-by-side panels so we can compare the piles visually. On the left panel we draw the running total of the raw responses for the feature_0 == 1 pile; a running total (cumsum) is a key idea: it keeps a running tally so you can see how contributions build up over time. We also draw the running total of response multiplied by weight, which is the weighted return — think of weight as how much importance each observation carries. The right panel repeats the same pair of lines for the feature_0 == -1 pile. Titles and legends are added so the story on each panel is immediately clear.

Finally, after the plotting we clear the two temporary piles from memory and ask Python’s garbage collector to reclaim the space, like clearing your desk after an exercise so the next task has a clean surface; gc.collect() politely requests immediate cleanup. Seeing how responses and weighted returns diverge by feature sign helps you decide whether feature_0 is a meaningful signal for the Jane Street Market Prediction task.

You can clearly see that the “+1” and “-1” groups show very different return behavior, meaning the data split by those labels moves in different ways. NanoMathias used UMAP (a method that squishes many dimensions into 2D so you can spot clusters) and found that feature_0 cleanly separates two different distributions. Seeing that split helps us guess what the feature might actually be measuring.

People have suggested that feature_0 could be something like the trade direction — buy vs. sell — or related things like bid/ask, long/short, or call/put. Those ideas all mean the feature might be encoding which side of a trade or contract is active, which would naturally split the data into two groups.

One concrete idea is that feature_0 acts like the Lee and Ready “tick” rule, a simple way to label trades as buy-initiated (+1) or sell-initiated (−1) using just price moves. In plain terms: if the trade price goes up label it +1, if it goes down label it −1, and if it stays the same keep the previous label (start with +1). This rule is useful when you don’t have an explicit buy/sell flag and need a quick proxy for trade direction.

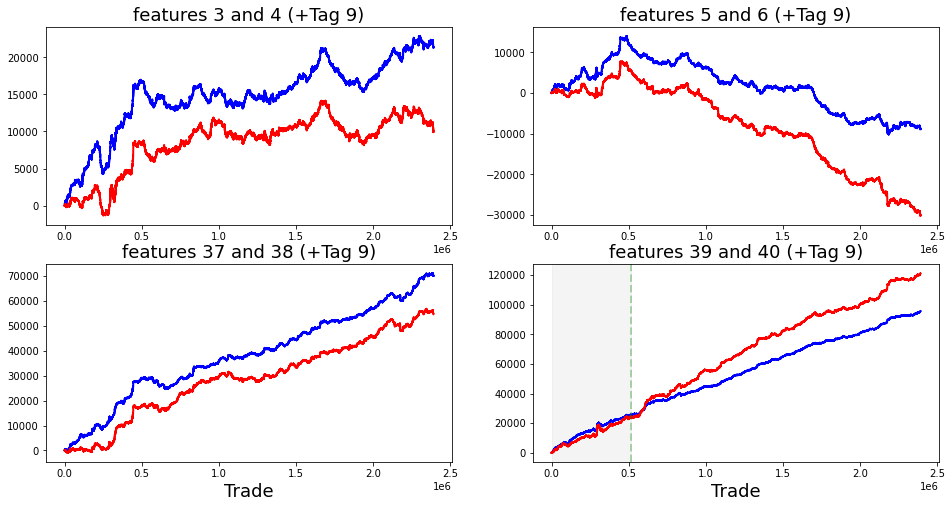

Looking at the correlation matrix helps test these ideas. feature_0 has strong positive correlation with Tag 12 features, strong negative correlation with Tag 13, negative with Tags 25 and 27, and positive with Tag 24. Except for features 37–40, those are all resp-related features, and the strongest link is with resp_4. Correlations point to which groups of features move together and can reveal what feature_0 lines up with.

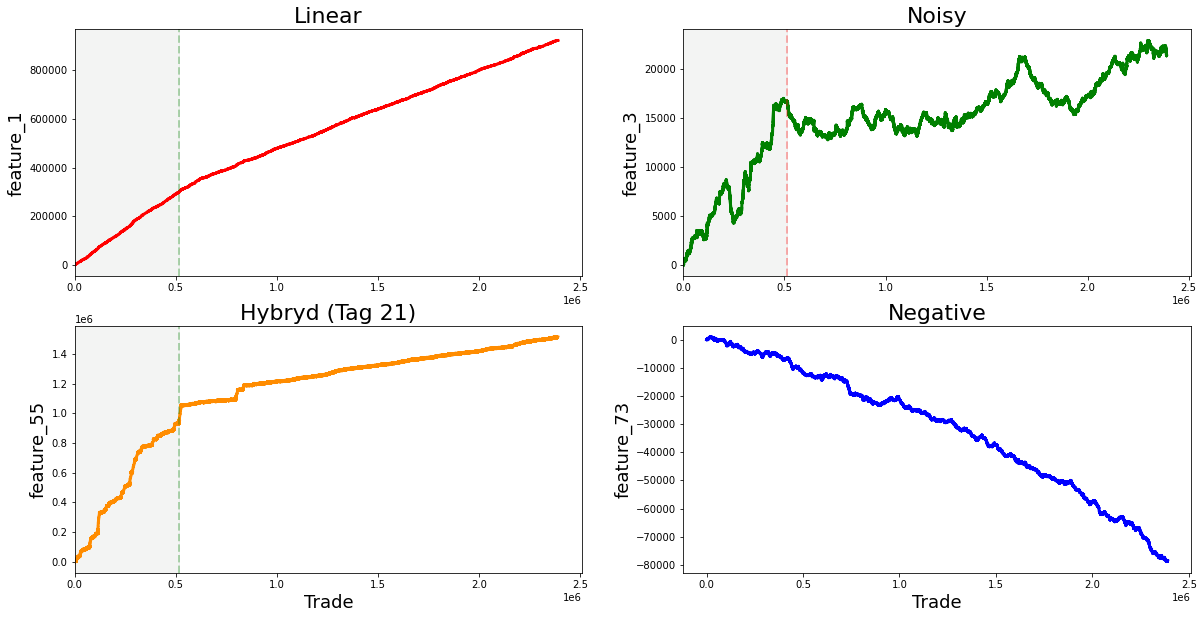

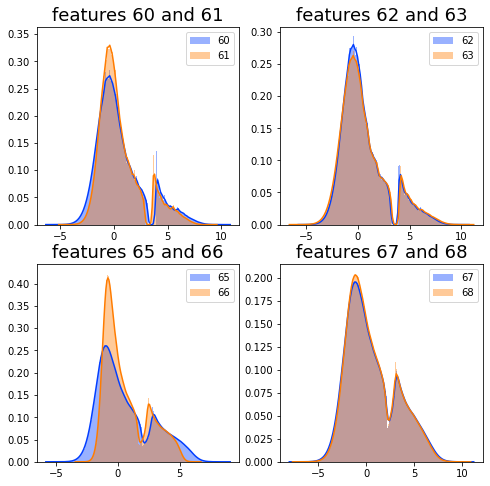

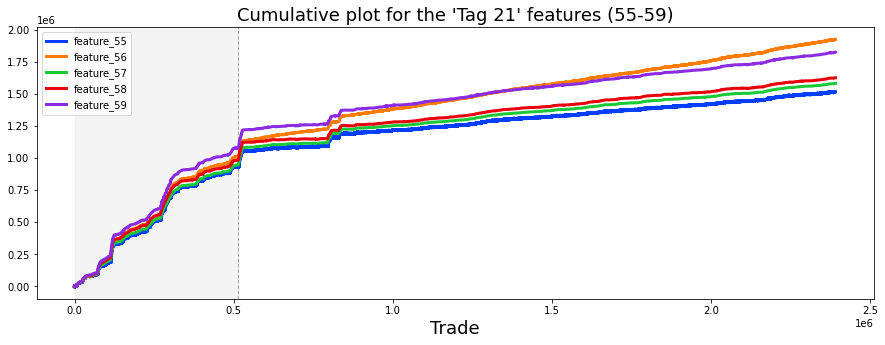

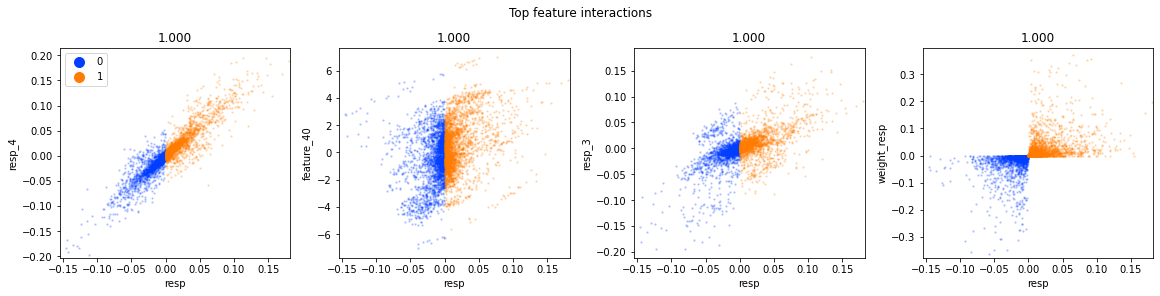

Finally, the features from 1 to 129 seem to fall into four rough types, and a plot shows one example of each type. Grouping features like this makes it easier to pick the right modeling approach for each kind.

fig, ((ax1, ax2), (ax3, ax4)) = plt.subplots(2, 2,figsize=(20,10))

ax1.plot((pd.Series(train_data[’feature_1’]).cumsum()), lw=3, color=’red’)

ax1.set_title (”Linear”, fontsize=22);

ax1.axvline(x=514052, linestyle=’--’, alpha=0.3, c=’green’, lw=2)

ax1.axvspan(0, 514052 , color=sns.xkcd_rgb[’grey’], alpha=0.1)

ax1.set_xlim(xmin=0)

ax1.set_ylabel (”feature_1”, fontsize=18);

ax2.plot((pd.Series(train_data[’feature_3’]).cumsum()), lw=3, color=’green’)

ax2.set_title (”Noisy”, fontsize=22);

ax2.axvline(x=514052, linestyle=’--’, alpha=0.3, c=’red’, lw=2)

ax2.axvspan(0, 514052 , color=sns.xkcd_rgb[’grey’], alpha=0.1)

ax2.set_xlim(xmin=0)

ax2.set_ylabel (”feature_3”, fontsize=18);

ax3.plot((pd.Series(train_data[’feature_55’]).cumsum()), lw=3, color=’darkorange’)

ax3.set_title (”Hybryd (Tag 21)”, fontsize=22);

ax3.set_xlabel (”Trade”, fontsize=18)

ax3.axvline(x=514052, linestyle=’--’, alpha=0.3, c=’green’, lw=2)

ax3.axvspan(0, 514052 , color=sns.xkcd_rgb[’grey’], alpha=0.1)

ax3.set_xlim(xmin=0)

ax3.set_ylabel (”feature_55”, fontsize=18);

ax4.plot((pd.Series(train_data[’feature_73’]).cumsum()), lw=3, color=’blue’)

ax4.set_title (”Negative”, fontsize=22)

ax4.set_xlabel (”Trade”, fontsize=18)

ax4.set_ylabel (”feature_73”, fontsize=18);

gc.collect();Imagine we’re pinning four small charts on a single bulletin board so we can compare how different signals accumulate over time; the first line creates that board with a 2-by-2 layout and a roomy figure size so each plot has space to breathe. For each panel we take a column from our training table, turn it into a pandas Series and call cumsum to build a running total — cumulative sum is simply a running tally that adds each new value to the total so you can see long-term drift or trends at a glance. Plotting that running total draws a thick colored line (lw=3) so the trajectory is easy to follow, and the title gives us an intuitive label like “Linear” or “Noisy” so we remember what pattern we’re looking at.

We then mark a vertical dashed line at trade 514,052 to act like a curtain dividing past from future and shade the area before that curtain with a faint gray span so the training region is visually muted; setting x limits ensures the x-axis starts at zero, and axis labels name each signal so the reader knows which feature is being tracked. The lower-left panel also adds an x-axis label “Trade” to remind us what the horizontal scale means. Finally, we call the garbage collector to tidy up unused memory before continuing — garbage collection is an automatic cleanup that frees memory no longer in use. Seeing these four cumulative stories side-by-side helps us spot stationarity, abrupt shifts, or noisy behavior that will directly inform feature engineering and model choices for our Jane Street Market Prediction work.

The “linear” features I flagged are: 1; 7, 9, 11, 13, 15; 17, 19, 21, 23, 25; 18, 20, 22, 24, 26; 27, 29, 21, 33, 35; 28, 30, 32, 34, 36; 84, 85, 86, 87, 88; 90, 91, 92, 93, 94; 96, 97, 98, 99, 100; and 102 (strong change in gradient), 103, 104, 105, 106. By “linear” I mean these features tend to move in simple trends over time, which makes them easier to model with straightforward methods. Noting them helps us pick models that capture steady changes without overcomplicating things.

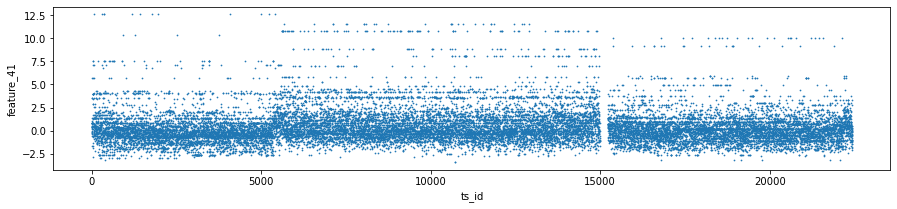

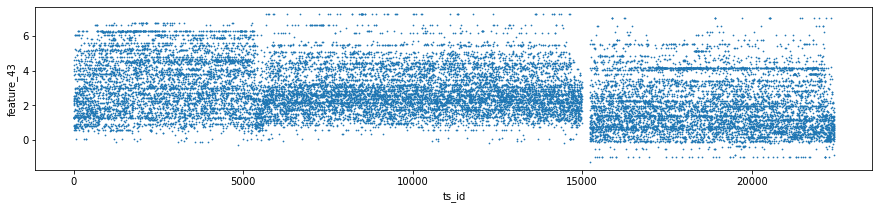

There are also these features: 41, 46, 47, 48, 49, 50, 51, 53, 54, 69, 89, 95 (strong change in gradient), 101, 107 (strong change in gradient), 108, 110, 111, 113, 114, 115, 116, 117, 118, 119 (strong change in gradient), 120, 122, and 124. The ones marked with a strong change in gradient show sharp shifts in slope, so they may signal important turning points we should model differently.

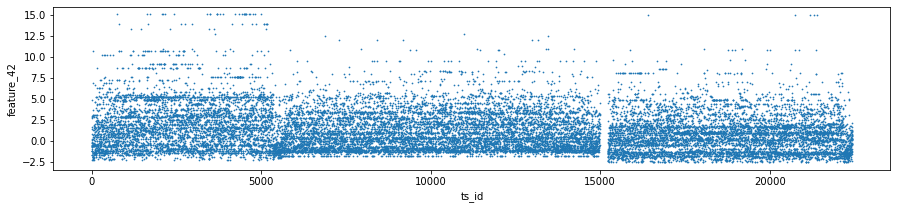

Features 41, 42 and 43 make up Tag 14. They look “stratified”, meaning they only take a few distinct values during the day — like a category ID rather than a smooth number. That suggests they might represent a security identifier (a security is an asset like a stock). I plotted scatter charts for these three features on days 0, 1 and 3, and I left out day 2 because of missing data (I’ll cover that in the missing data section).

day_0 = train_data.loc[train_data[’date’] == 0]

day_1 = train_data.loc[train_data[’date’] == 1]

day_3 = train_data.loc[train_data[’date’] == 3]

three_days = pd.concat([day_0, day_1, day_3])

three_days.plot.scatter(x=’ts_id’, y=’feature_41’, s=0.5, figsize=(15,3));

three_days.plot.scatter(x=’ts_id’, y=’feature_42’, s=0.5, figsize=(15,3));

three_days.plot.scatter(x=’ts_id’, y=’feature_43’, s=0.5, figsize=(15,3));

del day_1

del day_3

gc.collect();Think of the goal as quickly peeking at three particular days in the training set to see how a few features behave across time series IDs, so you can spot patterns or weird outliers before building models. The first three lines each pick one day’s worth of rows from train_data: train_data.loc[train_data[‘date’] == 0] grabs every row whose date column equals 0 and stores it as day_0; loc with a boolean expression is just a way to filter rows by a condition, like using a sieve to keep only the grains you want. The next two lines do the same for dates 1 and 3, giving you three separate mini-tables to inspect.

pd.concat([day_0, day_1, day_3]) then glues those mini-tables together into one table called three_days; concatenation is like stacking pages into a single notebook so you can look at them side by side. The three plot.scatter calls sketch scatter plots of ts_id on the x-axis against feature_41, feature_42, and feature_43 respectively; using a very small marker size (s=0.5) and a wide figure size makes dense patterns visible without the plot becoming a blotch. A scatter plot is a simple visual that shows relationships or clusters between two variables.

Finally, del day_1 and del day_3 remove names pointing to the intermediate tables, and gc.collect() asks Python to reclaim that memory so your notebook stays responsive; garbage collection frees unused memory. These quick visual checks help you decide which features or days matter most for the Jane Street market prediction pipeline.

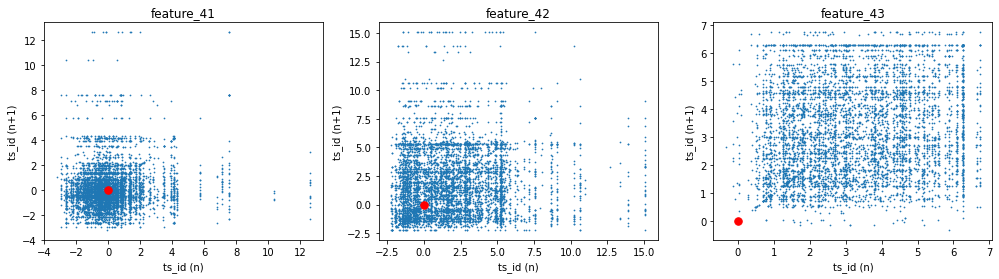

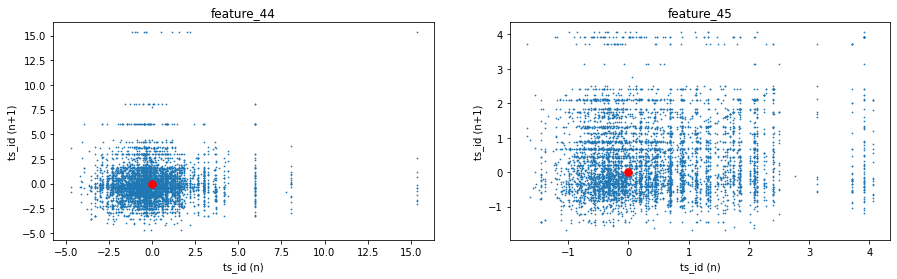

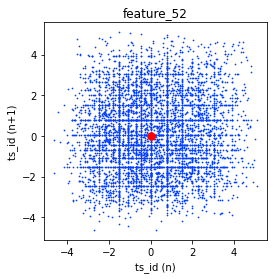

We made lag plots for three features. A lag plot is just a scatter plot that shows each value at a given time step, called `ts_id (n)` — that’s the time-step identifier — against the very next value of the same feature at `ts_id (n+1)`. These particular plots show the data for day 0.

You’ll also see red markers placed at (0,0) as a simple visual cue to help orient the plot. Looking at these plots helps you spot patterns like whether a feature tends to carry over from one step to the next, or whether it jumps around randomly. That kind of insight is useful when deciding how to model the feature for the Jane Street Market Prediction task, because it hints at whether past values can help predict future ones.

fig, ax = plt.subplots(1, 3, figsize=(17, 4))

lag_plot(day_0[’feature_41’], lag=1, s=0.5, ax=ax[0])

lag_plot(day_0[’feature_42’], lag=1, s=0.5, ax=ax[1])

lag_plot(day_0[’feature_43’], lag=1, s=0.5, ax=ax[2])

ax[0].title.set_text(’feature_41’)

ax[0].set_xlabel(”ts_id (n)”)

ax[0].set_ylabel(”ts_id (n+1)”)

ax[1].title.set_text(’feature_42’)

ax[1].set_xlabel(”ts_id (n)”)

ax[1].set_ylabel(”ts_id (n+1)”)

ax[2].title.set_text(’feature_43’)

ax[2].set_xlabel(”ts_id (n)”)

ax[2].set_ylabel(”ts_id (n+1)”)

ax[0].plot(0, 0, ‘r.’, markersize=15.0)

ax[1].plot(0, 0, ‘r.’, markersize=15.0)

ax[2].plot(0, 0, ‘r.’, markersize=15.0);

gc.collect();We start by creating a row of three drawing boards with the first line: fig, ax = plt.subplots(1, 3, figsize=(17, 4)). Imagine laying out three canvases side by side so we can compare three features at once; figsize just sets how wide and tall that display is. The next three lines call lag_plot for each feature and place the plot onto the corresponding canvas: lag_plot(day_0[‘feature_41’], lag=1, s=0.5, ax=ax[0]) and so on. A lag plot visualizes the relationship between consecutive observations to reveal autocorrelation, so here we’re checking whether each feature at time n relates to the same feature at time n+1; the lag=1 asks for that one-step relationship, s controls point size, and ax tells matplotlib which of the three canvases to draw on.

Then we give each canvas a human-friendly title and axis labels so we can read them like captions: ax[0].title.set_text(‘feature_41’) names the first plot, and the subsequent set_xlabel and set_ylabel calls label the horizontal and vertical axes as “ts_id (n)” and “ts_id (n+1)” respectively to remind us we’re comparing successive time-step values. Repeating those title and label calls for ax[1] and ax[2] keeps the comparison consistent across the three features.

Finally, the three ax[i].plot(0, 0, ‘r.’, markersize=15.0) lines place a bright red dot at the origin on each canvas as a visual anchor or reference point, and gc.collect() politely asks Python to free unused memory — like tidying the workspace after plotting. Together, these steps make it easy to visually inspect short-term dependencies in features, an important small experiment when building robust predictors for the Jane Street Market Prediction project.

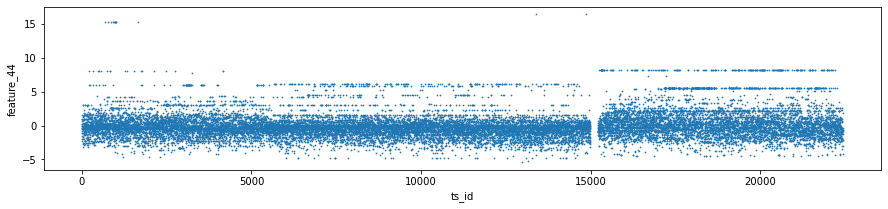

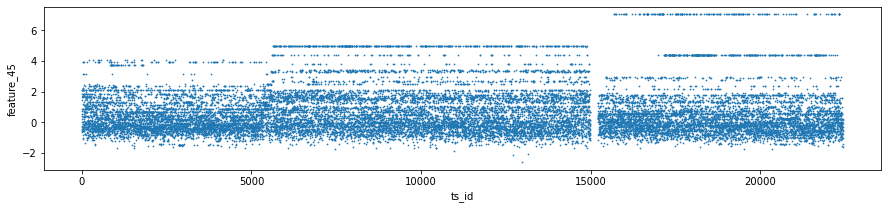

Tag 18 covers two features: number 44 (which also includes tag 15) and number 45 (which also includes tag 17). Think of these as two specific measurements we track; the parenthetical tags just mean those measurements also pull in some info from tag 15 and tag 17 so they’re slightly blended with other signals.