Feature Selection Techniques

Feature selection techniques are reviewed in this article, along with questions on why it is important and how to implement it in practice with Python.

We have compiled a list of interview questions here to help you:

Feature selection: what does it mean?

How does feature selection benefit you?

Is there a technique you are familiar with for Feature Selection?

Establish the differences between univariate analysis, bivariate analysis, and multivariate analysis.

Is PCA a good method for selecting features?

Can you explain the difference between forwarding and Backward Feature Selection?

Feature selection is important, but what exactly is it?

By selecting features, you are ensuring that your ML model is uniform, non-redundant, and relevant. Your ML project must use feature selection for the following reasons:

ML models are trained more efficiently when the dataset is smaller and simpler, which reduces the amount of power needed to train them;

It is easier to understand and explain simple machine learning models with fewer features;

By doing so, overfitting can be avoided. A model becomes more complex as more features are added, and as more features are added, errors become more frequent (the error increases as the number of features increases).

Which methods are used to select features?

In terms of feature selection, there are two common approaches:

Feature selection in the forward direction. This method begins by fitting the model with one feature (or a small subset of features) and keeps adding features until no impact is noticeable on the model metrics. If you want to start with a single feature or subset of features, you can fit a model with methods like correlation analysis (e.g., based on the Pearson coefficient).

Selection of features in reverse order. In contrast to 1), this approach is the reverse. As long as the ML model metrics stay the same, you can start with the full set of features and then iteratively reduce them feature by feature.

We can highlight the following methods among the most popular:

A method based on filtered data. The methodology that is easiest to understand. Machine learning algorithms are not used to select the features. Our decision-making processes are based on statistics (e.g., Pearson’s Correlation, LDA, etc.) based on how each feature affects the target outcome. Compared to other methodologies, this one is the least compute-intensive and fastest.

An encapsulation method. The ML training metrics are used to select the features in this method. Following training, each subset receives a score, then features are added or removed, and ultimately the ML metric threshold is reached. This could be accomplished by moving forward, going backwards or recursively. Due to the number of ML models you need to train, this method is the most compute-intensive.

Method embedding. Combining filtered and wrapper methodologies, this method is more complex. There are several popular algorithms that employ this method, such as LASSO and a tree algorithm.

The following strategy will be used in this article:

In order to select features, we consult with business stakeholders and understand the features they require

To avoid multicollinearity, we identify highly correlated features

Using filtering and wrapper methods, find correlations between features and target variables

As a final step, we dive deep into the most correlated features of the data to gain extra insight into the business

A hands-on introduction to Python

As a reminder, we will work with a fintech dataset that contains past loan applicants’ data, such as the applicant’s credit grade, income, and DTI. In addition to identifying patterns, the goal is to use machine learning to predict if a loan applicant will default (not pay back the loan), which will allow businesses to make more informed decisions, including rejecting loan applications, reducing loan amounts, or lending to riskier applicants with a higher interest rate.

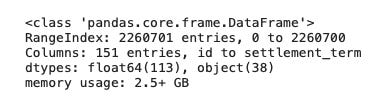

Kaggle is the environment I use to run the code. Let’s load the dataset and get started:

There are over a million rows of observations in the dataset as well as over 150 features. It would be impossible to train ML without validating the quality and suitability of this huge amount of data. Typically, there is a considerable amount of “noise” in this data that isn’t helpful to ML training.

Stakeholders should be consulted first.

Performing an analysis over such an exhaustive list of features may be time-consuming and require significant computing resources. You should know the attributes of each dataset in detail if you want to communicate effectively with your stakeholders.

When analyzing the fintech dataset, you may want to consult with loan officers who evaluate loans daily, for example, to understand what features are essential. You can automate the loan officer’s decision-making process by using machine learning (ML).

The code snippet below shows what we might receive if we have taken this step (see the code snippet below). The recommendations should be treated with caution, but they give us a good starting point for our initial work.

You should spend some time understanding what each feature in your dataset means:

loan_amnt — The amount of the loan requested by the borrower.

term — A measurement of the number of payments required on the loan. Values are expressed in months with a maximum of 60 for a 36-month loan.

int_rate — Interest rate for the loan

sub_grade — Credit history-based subgrade assigned to loans

emp_length — The number of years the borrower has been employed.3

home_ownership — Whether the borrower is a homeowner (i.e., rents, owns, mortgages, etc.)

annual_inc — Borrower’s self-reported income

addr_state — Refers to the state the borrower provided when applying for the loan

dti — Calculated by dividing the borrower’s monthly income by the borrower’s total monthly debt payments on all debt obligations, excluding mortgages.

mths_since_recent_inq — The number of months since the most recent inquiry

revol_util — The borrower’s utilization of revolving credit, or the amount the borrower owes on revolving credits compared to the total amount of revolving credit available.

bc_open_to_buy — The total number of revolving bankcard purchases

bc_util — Calculates the ratio between total current balance and high credit/credit limit for all bankcards

num_op_rev_tl — Instances of open revolving accounts

A loan’s current status (such as fully paid or charged off) is stored in loan_status. The model will predict this label.

The first thing we should do is to process the data before taking any further action. The preparation steps include missing values, outliers, and categorical feature handling.

#preprocessing

#remove missing values

loans = loans.dropna()

#remove outliers

q_low = loans["annual_inc"].quantile(0.08)

q_hi = loans["annual_inc"].quantile(0.92) loans = loans[(loans["annual_inc"] < q_hi) & (loans["annual_inc"] > q_low)]

loans = loans[(loans['dti'] <=45)]

q_hi = loans['bc_open_to_buy'].quantile(0.95)

loans = loans[(loans['bc_open_to_buy'] < q_hi)]

loans = loans[(loans['bc_util'] <=160)]

loans = loans[(loans['revol_util'] <=150)]

loans = loans[(loans['num_op_rev_tl'] <=35)]

#categorical features processing

cleaner_app_type = {"term": {" 36 months": 1.0, " 60 months": 2.0},

"sub_grade": {"A1": 1.0, "A2": 2.0, "A3": 3.0, "A4": 4.0,

"A5": 5.0, "B1": 11.0, "B2": 12.0, "B3": 13.0, "B4": 14.0,

"B5": 15.0, "C1": 21.0, "C2": 22.0, "C3": 23.0, "C4":

24.0, "C5": 25.0, "D1": 31.0, "D2": 32.0, "D3": 33.0,

"D4": 34.0, "D5": 35.0, "E1": 41.0, "E2": 42.0, "E3":

43.0, "E4": 44.0, "E5": 45.0, "F1": 51.0, "F2": 52.0,

"F3": 53.0, "F4": 54.0, "F5": 55.0, "G1": 61.0, "G2":

62.0, "G3": 63.0, "G4": 64.0, "G5": 65.0, },

"emp_length": {"< 1 year": 0.0, '1 year': 1.0, '2 years': 2.0,

'3 years': 3.0, '4 years': 4.0, '5 years': 5.0, '6 years':

6.0, '7 years': 7.0, '8 years': 8.0, '9 years': 9.0, '10+

years': 10.0 }

}

loans = loans.replace(cleaner_app_type)Following the preselection of features, univariate analysis is deployed. When you analyze a single feature, the most common techniques are 1) removing features with a large variance (more than 90%) and 2) removing features with frequent missing values.

The variance is low. Imagine that you have two features, 1) gender containing only one value (e.g., Female), and 2) age containing values between 30 and 50 years old. Gender has a low variance in this case since the values in this attribute are all the same, and when the model is trained, it won’t detect patterns, so this feature is dropped.

In this case, Sklearn’s VarianceThreshold function can be used to implement the threshold. Using the following code, we can identify features that are the same in 90% of the cases.

This case does not have any low variance features, so nothing needs to be dropped.

Incomplete values. In this set of features, there are no features with a large number of missing values, so we skip this step. I suggest you read this article for tips on identifying and dealing with missing values.

The second step is to identify features that are highly correlated.

The second step involves features that are multicollinear. It is important to note that step #2 and step #3 will use bi-variate analysis. Bivariate analysis is used to determine whether two sets of variables are related (correlated).

Insights can be gained by using these correlations, including:

A multicollinear relationship may be caused by the interdependence of one or more variables;

Correlation can be used to predict one variable based on another, indicating the presence of a causal relationship;

In our case, this is how each feature impacts loan payment outcome. Understanding what factors are causing the label outcome from a business perspective.

ML models may be impacted by multicollinearity when the dataset has a high correlation between features. It is possible for features that are highly correlated to provide the same information, which makes them redundant. When this happens, the results may appear skewed or misleading, and we can solve the problem by keeping just one feature and removing redundant features.

It would be possible to compare the features of a monthly salary and an annual salary; they may not be identical, but they likely have the same pattern. These redundant features may lead to misleading results if logistic regression and linear regression models are trained with redundant features. It is therefore important to eliminate one of them.

What is the best way to deal with multicollinearity?

It can be dealt with in a variety of ways. If you use Pearson correlation to detect highly correlated features, you should delete one of the perfectly (~ 90%) correlated features. It is also possible to use Principle Component Analysis (PCA) for dimension reduction. By projecting each point onto only the first few principals, we can reduce the dimensionality of each data point Keeping as much variation as possible while obtaining lower-dimensional data.

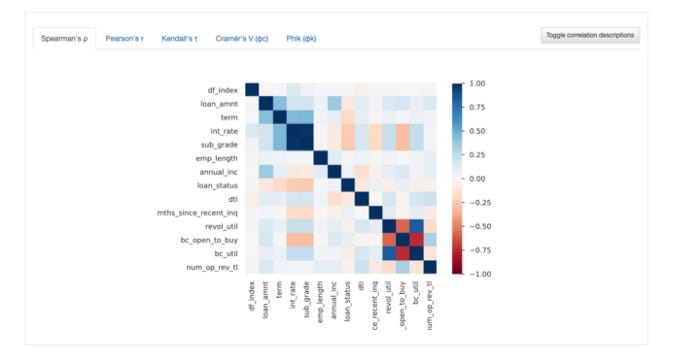

Pandas profiling is going to assist us here in applying the correlation technique. To generate the pandas report, see the code below.

Toward the end of the report, you’ll find two sections called “Interactions” and “Correlations.” The correlation section will help you determine the relationship between variables quickly.

From Pearson’s to Phik’s, there are various types of correlations. With Phik, you can handle categorical features that haven’t yet been handled. On the correlation matrix, we can see that the “grade” categorical feature is well plotted:

Matrix interpretation: Note that possible correlations are between +1 and -1, where:

When there is no correlation between two variables, there is no relationship between them;

There is a perfect negative correlation between variables when the variance is –1, indicating that the variance of one variable decreases as the variance of the other increases;

It is possible for two variables to move in the same direction simultaneously if they are correlated with a factor of +1.

High correlations can be observed in the following features:

Features for customers. bc_open_to_buy/num_op_rev_tl. There is a high correlation between these two features since both are related to bank cards and revolving accounts. In order to avoid multicollinearity issues, let’s drop the bc_open_to_buy feature in our initial model. revol_util/bc_util. It is a similar case, and we can drop the bc_util feature.

Information about loan features. Int_rate and grade are derivatives of sub_grade based on the lending club proprietary model; therefore, they are highly correlated; we should eliminate them. We prefer to keep the term, sub_grade, and loan_amount correlated as well, but they are less correlated.

Drop these features:

The third step is to find a correlation between the target variable and the features

We are now looking for highly correlated features with the target variable, in our case, loan_status. The first method is based on filtering, while the second method is based on wrapping.