Forecasting the Future: Revolutionizing Multi-Step Stock Prediction with Diffusion Variational Autoencoders

Enhancing Financial Strategy and Risk Management with a Powerful Hybrid Model for Consistent and Reliable Long-Term Market Insights

Download the source code at the end of this article.

Here is how you run the source code:

Install Python 3.8: Make sure Python 3.8 is installed on your system.

Set Up Dependencies: Run the command pip install -r requirements.txt to install the required packages.

Run Experiments: Use sh run.sh in your terminal to run all experiments. If you want to run them individually, open run.sh and execute each command separately.

Accurate long-term stock price prediction is pivotal for financial institutions aiming to manage volatility, price derivatives, and assess trading risks effectively. However, the inherently stochastic nature of stock markets poses significant challenges, particularly for multi-step regression tasks that require forecasting over extended horizons. Traditional models predominantly focus on single-step predictions and often fall short in capturing the complex, noisy dynamics of stock data, leading to limited generalizability and reliability in their forecasts.

Addressing these limitations, this article introduces an approach that integrates Hierarchical Variational Autoencoders (VAEs) with Diffusion Probabilistic Models to enhance multi-step stock price prediction. The proposed framework uses the hierarchical VAE to learn latent variables that encapsulate the underlying patterns in stock movements. Concurrently, diffusion probabilistic techniques are employed to simulate and manage the stochastic fluctuations inherent in financial data by progressively introducing and subsequently removing noise from both input and target sequences. This dual mechanism not only stabilizes the prediction process but also improves the model’s ability to generalize across different market conditions.

Extensive experiments conducted on comprehensive stock datasets demonstrate that this hybrid model consistently outperforms existing state-of-the-art methods in terms of prediction accuracy and variance reduction. Furthermore, when applied to portfolio optimization tasks, the model’s predictions lead to portfolios with higher Sharpe ratios, underscoring its practical utility in enhancing investment strategies. This approach offers a robust solution for financial forecasting, enabling more informed decision-making and better risk management in volatile markets.

Introduction

In the ever-evolving landscape of global finance, the ability to accurately predict stock prices stands as a cornerstone for successful investment strategies and robust financial management. As markets become increasingly volatile and interconnected, the demand for sophisticated forecasting models that can navigate the complexities of stock price movements has never been greater. Accurate stock price prediction not only empowers investors to make informed decisions but also plays a pivotal role in risk management, derivative pricing, and ensuring regulatory compliance.

The Crucial Role of Multi-Step Stock Price Prediction

Financial institutions, ranging from investment banks to hedge funds, rely heavily on predictive analytics to steer their operations and strategic initiatives. While short-term forecasts, such as predicting the next day’s closing price, provide immediate insights for day trading and quick profit-taking strategies, multi-step or long-term stock price predictions offer a broader perspective essential for several critical functions:

Risk Management: Long-term predictions enable institutions to assess and mitigate potential risks in their trading portfolios. By anticipating future price movements over extended periods, banks and investment firms can better hedge their positions, ensuring stability even in turbulent market conditions.

Derivative Pricing and Hedging: Accurate forecasts over multiple time steps are fundamental for pricing complex financial derivatives. Derivatives, which derive their value from underlying assets like stocks, require precise modeling of future price paths to determine fair prices and effective hedging strategies.

Regulatory Compliance: Financial regulators mandate that institutional investors maintain sufficient liquidity to exit risky positions without significantly impacting market prices. Multi-step predictions provide the necessary foresight to ensure compliance with liquidity requirements, thereby promoting market stability and preventing systemic risks.

Strategic Investment Planning: For long-term investment strategies, understanding potential future trends and price movements allows investors to allocate resources more effectively, optimize portfolio compositions, and achieve desired returns while balancing risk.

Navigating the Stochastic Nature of Stock Markets

Despite the clear benefits, predicting stock prices over multiple steps presents formidable challenges, primarily due to the highly stochastic and unpredictable nature of financial markets. Stock prices are influenced by a myriad of factors, including economic indicators, geopolitical events, investor sentiment, and unforeseen market shocks. This inherent randomness introduces significant noise into the data, making it difficult for predictive models to discern meaningful patterns and trends.

The stochasticity in stock data manifests in several ways:

High Volatility: Stock prices can exhibit sudden and substantial fluctuations within short periods, complicating the task of forecasting future movements with precision.

Non-Stationarity: Financial time series often display non-stationary behavior, where statistical properties like mean and variance change over time, challenging the stability and reliability of predictive models.

Complex Dependencies: Stock prices are interdependent, influenced by both external macroeconomic factors and internal company-specific news, leading to intricate and non-linear relationships that models must capture.

Noise-to-Signal Ratio: The sheer volume of noise relative to the underlying signal in stock data can obscure the true drivers of price movements, reducing the efficacy of traditional predictive techniques.

Limitations of Current Predictive Models

The financial modeling community has made significant strides in developing various algorithms to forecast stock prices. However, most existing approaches are predominantly tailored for single-step predictions, focusing on immediate next-step forecasts rather than extended horizons. This focus introduces several limitations when attempting to adapt these models for multi-step regression tasks:

Representation Expressiveness: Many single-step models, especially those based on classification tasks (e.g., predicting whether a stock will go up or down the next day), lack the representational capacity to capture the nuanced and multi-faceted dynamics of stock prices over longer periods. These models often simplify the prediction problem, leading to reduced accuracy and applicability in multi-step contexts.

Generalizability Issues: Models trained for single-step predictions tend to overfit to short-term patterns and noise, which do not necessarily translate to longer-term trends. As the prediction horizon extends, the compounded errors and the model’s inability to generalize beyond the immediate future degrade performance substantially.

Handling Stochasticity: Traditional regression models struggle to account for the stochastic noise inherent in stock data. Without mechanisms to model and mitigate this randomness, predictions become unreliable, especially as the forecast extends further into the future.

Sequential Dependency Management: Multi-step predictions require models to understand and leverage the sequential dependencies in data effectively. Single-step models often fall short in capturing these dependencies, leading to disconnected and less coherent multi-step forecasts.

Innovative Integration of Hierarchical VAEs and Diffusion Probabilistic Models

To overcome these challenges, there is a pressing need for modeling techniques that can handle the stochasticity of stock markets while providing accurate and reliable multi-step predictions. One promising approach lies in the integration of Hierarchical Variational Autoencoders (VAEs) with Diffusion Probabilistic Models.

Hierarchical Variational Autoencoders (VAEs): VAEs are a class of generative models that learn latent representations of data, capturing underlying structures and patterns. Hierarchical VAEs extend this concept by introducing multiple layers of latent variables, enabling the model to learn more complex and abstract representations. This hierarchical structure enhances the model’s capacity to understand and generate intricate dependencies within the data, making it particularly suited for capturing the multifaceted nature of stock price movements.

Diffusion Probabilistic Models: These models are designed to handle data stochasticity by modeling the data generation process as a diffusion process, where noise is gradually added to the data over several steps. By learning to reverse this diffusion process, models can effectively denoise and generate data samples that align closely with the underlying distribution. Incorporating diffusion techniques into predictive modeling helps in managing the inherent randomness of stock prices, providing a more robust framework for generating accurate predictions.

The Synergistic Effect: By combining hierarchical VAEs with diffusion probabilistic models, it is possible to create a powerful hybrid model that leverages the strengths of both approaches. The hierarchical VAE component enhances the model’s ability to learn complex latent representations from historical stock data, while the diffusion process systematically introduces and manages noise, ensuring that the model remains resilient against the stochastic nature of the market. This integration facilitates the development of a model that not only captures the intricate dependencies in stock prices but also maintains high generalizability and robustness across multiple prediction horizons.

A Roadmap to Advanced Multi-Step Stock Prediction

This article delves into the innovative methodology of integrating Hierarchical VAEs with Diffusion Probabilistic Models to enhance multi-step stock price prediction. The ensuing sections are structured to provide a comprehensive understanding of this approach:

Background and Related Work: An exploration of the existing landscape of stock price prediction models, highlighting the evolution from traditional statistical methods to advanced machine learning techniques. This section sets the stage by discussing the foundational concepts of VAEs and diffusion models, and their respective roles in financial forecasting.

The Challenge of Multi-Step Regression in Stock Prediction: A detailed examination of the specific obstacles faced in multi-step stock price prediction, including the high volatility, non-stationarity, and complex dependencies inherent in financial data. This section underscores the limitations of current models and the need for more sophisticated approaches.

Introducing the Diffusion Variational Autoencoder (D-Va): An in-depth presentation of the proposed hybrid model, outlining its core components and the synergistic integration of hierarchical VAEs and diffusion processes. This section elucidates how each component contributes to overcoming the challenges identified earlier.

Methodology: A comprehensive walkthrough of the model architecture, training procedures, and optimization techniques employed in developing the D-Va framework. This section provides the technical underpinnings necessary to understand the model’s functionality and effectiveness.

Experimental Setup: An overview of the datasets used for evaluation, the baseline models for comparison, and the metrics employed to assess performance. This section ensures transparency and reproducibility in the experimental approach.

Results and Discussion: A thorough analysis of the model’s performance, comparing it against state-of-the-art baselines across various time horizons. This section also includes an ablation study to highlight the contribution of each model component and discusses the practical implications of the findings in portfolio optimization tasks.

Practical Implications: Insights into how the enhanced predictive capabilities of the D-Va model can be leveraged by financial institutions for risk management, derivative pricing, and strategic investment planning. This section bridges the gap between theoretical advancements and real-world applications.

Conclusion: A summary of the key contributions and findings, emphasizing the model’s superiority in handling multi-step regression tasks and its practical utility in financial forecasting. Additionally, this section outlines potential avenues for future research and enhancements.

Background and Related Work

Stock Price Prediction

The stock market serves as a critical barometer of economic health, reflecting the collective sentiments, expectations, and behaviors of investors. Accurate prediction of stock prices is paramount for investors, financial institutions, and policymakers as it informs investment strategies, risk management, and regulatory decisions. The ability to forecast stock movements enables stakeholders to optimize portfolios, hedge against potential losses, and capitalize on emerging market trends.

Stock price prediction can be broadly categorized into two types: single-step and multi-step prediction tasks. Single-step prediction focuses on forecasting the stock price for the immediate next time period, such as the next trading day. This approach is particularly useful for short-term trading strategies, where timely buy or sell decisions can yield significant profits. On the other hand, multi-step prediction involves forecasting stock prices over multiple future time steps, ranging from several days to months. Multi-step predictions are essential for long-term investment planning, portfolio optimization, and assessing market volatility. While single-step models provide quick insights, multi-step models offer a comprehensive view of potential future market behaviors, albeit with increased complexity and uncertainty.
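The difference between the two task types is easiest to see in how training pairs are cut from a price series. The following is a minimal sketch, not the article's data pipeline: the function name make_windows, the lookback length, and the toy prices are all assumptions made for illustration.

```python
from typing import List, Tuple


def make_windows(prices: List[float], lookback: int, horizon: int
                 ) -> List[Tuple[List[float], List[float]]]:
    """Split a price series into (input, target) pairs.

    horizon=1 gives a single-step task; horizon>1 gives a multi-step task.
    """
    pairs = []
    last_start = len(prices) - lookback - horizon
    for start in range(last_start + 1):
        x = prices[start:start + lookback]
        y = prices[start + lookback:start + lookback + horizon]
        pairs.append((x, y))
    return pairs


# Hypothetical closing prices, used only to show the shapes involved.
prices = [100.0, 101.0, 102.5, 101.5, 103.0, 104.0, 103.5, 105.0]
single_step = make_windows(prices, lookback=3, horizon=1)
multi_step = make_windows(prices, lookback=3, horizon=3)
```

With the same history, the multi-step setting yields fewer training pairs and a longer target per pair, which is one practical reason the task is harder.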

Machine Learning in Finance

The intersection of machine learning and finance has witnessed substantial growth over the past few decades. Traditional statistical methods, such as Autoregressive Integrated Moving Average (ARIMA) models, have long been employed for time series forecasting, including stock price prediction. However, these models often rely on linear assumptions and struggle to capture the intricate, non-linear relationships inherent in financial data.

With the advent of machine learning, more sophisticated models like Long Short-Term Memory (LSTM) networks have been introduced to address these limitations. LSTMs, a type of recurrent neural network (RNN), excel in modeling sequential data and capturing long-term dependencies, making them well-suited for time series forecasting. Despite their advantages, LSTMs can be prone to overfitting and may require extensive computational resources, especially when dealing with large datasets.

Variational Autoencoders (VAEs) represent another advancement in machine learning applied to finance. VAEs are generative models that learn to encode input data into a latent space and subsequently decode it to reconstruct the original data. This capability allows VAEs to capture underlying patterns and structures in financial data, enhancing predictive performance. However, traditional VAEs may face challenges in handling the high stochasticity and noise present in stock market data, limiting their effectiveness in multi-step prediction tasks.

Variational Autoencoders (VAE)

Variational Autoencoders (VAEs) are a class of generative models that combine neural networks with probabilistic graphical models to learn latent representations of data. Unlike traditional autoencoders, which are trained only to minimize reconstruction error, VAEs add a probabilistic framework in which the latent variables are assumed to follow a specific prior distribution, typically Gaussian, and the training objective penalizes deviation from that prior alongside the reconstruction error. This approach allows VAEs to generate new data samples by sampling from the learned latent space, making them powerful tools for data generation and representation learning.
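To make the probabilistic framing concrete, here is a minimal sketch of the two ingredients that separate a VAE from a plain autoencoder: sampling the latent variable through the reparameterization trick, and the closed-form KL penalty against a standard normal prior. It uses only the standard library and hard-coded numbers in place of encoder and decoder networks, so every value and function name here is an illustrative assumption rather than the article's implementation.

```python
import math
import random


def reparameterize(mu: float, log_var: float, rng: random.Random) -> float:
    """Sample z = mu + sigma * eps with eps ~ N(0, 1)."""
    sigma = math.exp(0.5 * log_var)
    return mu + sigma * rng.gauss(0.0, 1.0)


def kl_to_standard_normal(mu: float, log_var: float) -> float:
    """Closed-form KL( N(mu, sigma^2) || N(0, 1) ) for one latent dimension."""
    return 0.5 * (mu * mu + math.exp(log_var) - 1.0 - log_var)


def negative_elbo(x, x_recon, mu, log_var) -> float:
    """Squared reconstruction error plus KL, summed over dimensions.

    Squared error stands in for a Gaussian likelihood (up to scale and constant).
    """
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    kl = sum(kl_to_standard_normal(m, lv) for m, lv in zip(mu, log_var))
    return recon + kl


rng = random.Random(0)
# Hypothetical encoder outputs for a two-dimensional latent space.
mu, log_var = [0.3, -0.1], [-1.0, 0.2]
z = [reparameterize(m, lv, rng) for m, lv in zip(mu, log_var)]
# Hypothetical input and reconstruction.
loss = negative_elbo([1.0, 2.0], [0.9, 2.1], mu, log_var)
```

The KL term is zero only when the encoder outputs exactly the prior (mu = 0, log_var = 0), which is what keeps the latent space close enough to the prior for sampling to be meaningful.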

In the context of financial forecasting, VAEs have been utilized to capture the complex, hidden factors that influence stock price movements. By learning a compact latent representation of historical stock data, VAEs can uncover latent variables that encapsulate market sentiments, economic indicators, and other underlying drivers of price changes. These latent variables can then be leveraged to improve prediction accuracy, especially in scenarios where the data exhibits high volatility and noise.

Previous applications of VAEs in financial forecasting have demonstrated their potential in enhancing prediction models. For instance, hierarchical VAEs, which incorporate multiple layers of latent variables, have shown improved ability to model intricate dependencies and temporal dynamics in stock prices. However, despite these advancements, VAEs alone may still struggle to fully mitigate the stochasticity inherent in multi-step stock price predictions, necessitating the integration of additional techniques to bolster their robustness and generalizability.

Diffusion Probabilistic Models

Diffusion Probabilistic Models represent a relatively recent innovation in the realm of generative modeling, drawing inspiration from non-equilibrium thermodynamics. These models conceptualize the data generation process as a diffusion process, where noise is progressively added to the data over a series of steps. The objective of the model is to learn the reverse diffusion process, effectively denoising the data to recover the original distribution.
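For readers who prefer notation, the forward and reverse processes are usually written as follows in the diffusion literature. This is the standard Gaussian formulation, given here as an assumption about notation since the article does not spell out its equations: beta_t is the noise variance at step t, and mu_theta and Sigma_theta are the learned reverse-step mean and covariance.

```latex
\begin{aligned}
q(x_t \mid x_{t-1}) &= \mathcal{N}\!\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t I\right), \\
q(x_t \mid x_0) &= \mathcal{N}\!\left(x_t;\ \sqrt{\bar\alpha_t}\,x_0,\ (1-\bar\alpha_t) I\right),
\qquad \bar\alpha_t = \prod_{s=1}^{t} (1-\beta_s), \\
p_\theta(x_{t-1} \mid x_t) &= \mathcal{N}\!\left(x_{t-1};\ \mu_\theta(x_t, t),\ \Sigma_\theta(x_t, t)\right).
\end{aligned}
```

The second line is what makes training practical: a noisy sample at any step t can be drawn directly from the clean data without simulating every intermediate step.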

Incorporating diffusion processes into generative models offers several advantages, particularly in handling data stochasticity. By simulating the gradual introduction of noise, diffusion models train the predictor to become resilient against random fluctuations and inherent uncertainties in the data. This characteristic is especially beneficial for financial data, where stock prices are subject to unpredictable and abrupt changes driven by a multitude of external factors.

The benefits of integrating diffusion processes into generative modeling frameworks are manifold:

Robustness to Noise: Diffusion models enhance the model’s ability to handle noisy data by explicitly modeling the noise addition and removal processes. This robustness is crucial for financial applications where data is inherently volatile and noisy.

Improved Generalization: By training the model to denoise data at various noise levels, diffusion models promote better generalization across different market conditions and time horizons. This leads to more reliable multi-step predictions.

Enhanced Representation Learning: The diffusion process encourages the model to learn more meaningful and disentangled representations of the data, facilitating the capture of underlying structures and dependencies that are otherwise obscured by noise.

When combined with hierarchical VAEs, diffusion probabilistic models can significantly enhance the capabilities of stock price prediction systems. The hierarchical VAE provides a rich latent space that captures complex market dynamics, while the diffusion process ensures that the model remains robust against the stochastic nature of financial data. This synergy paves the way for developing advanced multi-step prediction models that deliver both accuracy and reliability, addressing the critical challenges posed by the unpredictability of stock markets.

The Challenge of Multi-Step Regression in Stock Prediction

Stochasticity in Stock Data

Stock markets are inherently volatile and influenced by a myriad of unpredictable factors, ranging from macroeconomic indicators and geopolitical events to company-specific news and investor sentiment. This complexity introduces a high degree of stochasticity — randomness and unpredictability — in stock price movements. Unlike more deterministic systems, financial markets are subject to sudden shifts and fluctuations that are often impossible to foresee accurately. This stochastic nature poses significant challenges for predictive models aiming to forecast stock prices over multiple future time steps.

The high variability in stock prices means that even minor events can lead to substantial price swings, making it difficult for models to discern consistent patterns. This unpredictability is compounded over longer prediction horizons, where the accumulation of random shocks can lead to divergent outcomes. Consequently, models must contend with a low signal-to-noise ratio, where the true underlying trends are obscured by random fluctuations and outliers.

The impact of stochastic noise on prediction accuracy is profound. Models trained on historical stock data must generalize from noisy inputs to make reliable forecasts, a task that becomes increasingly arduous as the prediction horizon extends. Generalization — the ability of a model to perform well on unseen data — is crucial for practical applications but is severely hindered by the high noise levels in stock data. Overfitting to historical noise rather than capturing genuine market signals can lead to poor performance in real-world scenarios, where the future does not necessarily mirror the past.

Moreover, stochasticity affects the stability and consistency of model predictions. Variations in input data can result in significant deviations in output forecasts, undermining the confidence that investors and financial institutions place in these predictions. This unpredictability necessitates models that are not only accurate but also robust and resilient to noise, ensuring that they maintain performance across different market conditions and time periods.

Limitations of Existing Models

Despite advancements in machine learning and statistical modeling, current approaches to stock price prediction exhibit notable limitations, especially when transitioning from single-step to multi-step regression tasks.

Single-step models are primarily designed to forecast the immediate next time step, such as predicting tomorrow’s closing price based on today’s data. While effective for short-term trading strategies, these models fall short in multi-step scenarios for several reasons:

Error Accumulation: In multi-step predictions, errors from each forecast step can accumulate, leading to significant deviations from actual prices over time. Single-step models do not account for this compounding effect, resulting in deteriorated accuracy as the prediction horizon extends.

Dynamic Dependencies: Stock prices exhibit complex temporal dependencies that evolve over time. Single-step models often fail to capture these dynamic relationships, as they are typically trained to optimize for immediate accuracy without considering long-term trends and interactions.

Lack of Contextual Awareness: Multi-step forecasting requires understanding how current trends will influence future movements over several time steps. Single-step models, focused on immediate next-step predictions, lack the contextual awareness needed to make informed long-term forecasts.
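The error accumulation point can be illustrated with a deliberately simple, deterministic toy. The sketch below rolls a one-step model forward by feeding each prediction back in as the next input. The growth factors are hypothetical numbers chosen for illustration, not estimates from any stock data.

```python
def recursive_forecast(last_value: float, growth: float, horizon: int):
    """Roll a one-step model forward, feeding each prediction back in."""
    preds, value = [], last_value
    for _ in range(horizon):
        value = value * growth
        preds.append(value)
    return preds


TRUE_GROWTH = 1.010       # hypothetical "true" daily growth factor
ESTIMATED_GROWTH = 1.012  # slightly mis-estimated one-step model

actual = recursive_forecast(100.0, TRUE_GROWTH, horizon=20)
predicted = recursive_forecast(100.0, ESTIMATED_GROWTH, horizon=20)

# Absolute error at each step of the horizon; it widens as the horizon grows.
abs_errors = [abs(p - a) for p, a in zip(predicted, actual)]
```

A model that is off by about 0.2 after one step ends up off by several units after twenty, even though nothing random has happened. Real markets add noise on top of this compounding.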

Classification-based models, which predict categorical outcomes such as price increases or decreases, face additional challenges when extended to regression tasks:

Representation Expressiveness: Classification models simplify the prediction problem by reducing it to discrete categories, which limits their ability to capture the nuanced continuous variations in stock prices. This reduction in expressiveness hampers their performance in regression tasks that require precise numerical forecasts.

Generalizability Issues: Models trained for classification may not generalize well to regression scenarios due to differences in objective functions and loss landscapes. The discrete nature of classification does not seamlessly translate to the continuous predictions needed for multi-step regression, leading to potential overfitting or underfitting.

Difficulty in Handling Noise: Classification models are often less adept at managing the inherent noise in stock data compared to regression models that explicitly model continuous variability. This limitation is exacerbated in multi-step predictions, where noise can significantly distort the forecast over extended periods.

Furthermore, many existing regression models lack mechanisms to effectively mitigate the stochasticity and noise in stock data. Traditional approaches such as linear regression or basic neural networks may struggle to capture the intricate, non-linear patterns essential for accurate long-term predictions. Without robust strategies to handle noise, these models are prone to overfitting to historical data and exhibit poor performance when applied to future, unseen market conditions.

Introducing the Diffusion Variational Autoencoder (D-Va)

In the quest to enhance multi-step stock price prediction, the integration of advanced machine learning techniques is paramount. The Diffusion Variational Autoencoder (D-Va) emerges as a groundbreaking framework that synergistically combines Hierarchical Variational Autoencoders (VAEs) with Diffusion Probabilistic Models. This fusion addresses the inherent challenges of forecasting in highly stochastic environments like financial markets, offering a robust solution that excels in both accuracy and generalizability.

Conceptual Overview

At its core, D-Va leverages the strengths of hierarchical VAEs to capture complex, multi-level latent representations from historical stock data. VAEs are renowned for their ability to learn compact and meaningful latent spaces, which encapsulate the underlying structures within the data. By employing a hierarchical architecture, D-Va enhances this capability, enabling the model to discern and represent intricate dependencies that span multiple layers of abstraction.

Simultaneously, D-Va incorporates diffusion probabilistic techniques, which introduce controlled noise into the data during the training process. This diffusion process is meticulously designed to mimic the real-world stochasticity of stock prices, where random fluctuations and unpredictable events play a significant role. By gradually adding Gaussian noise to both input and target sequences, the diffusion process trains the model to become resilient to noise, ensuring that it can extract meaningful signals even in the presence of high variability.

The integration of hierarchical VAEs with diffusion processes creates a powerful generative framework capable of handling the dual challenges of capturing complex dependencies and managing data stochasticity. This combination not only improves prediction accuracy but also enhances the model’s ability to generalize across different market conditions and time horizons.

Core Components

The D-Va framework is composed of four essential components, each playing a critical role in its overall functionality and performance:

1. Hierarchical VAE

Role in Learning Complex, Low-Level Latent Variables

Hierarchical VAEs extend the traditional VAE architecture by introducing multiple layers of latent variables. This multi-layered approach allows the model to capture a richer and more nuanced representation of the data. In the context of stock price prediction, hierarchical VAEs can identify and encode various levels of market dynamics, from short-term fluctuations to long-term trends. Each layer of latent variables can represent different aspects of the stock data, such as macroeconomic factors, industry-specific news, or investor sentiment, providing a comprehensive understanding of the underlying patterns.
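The layered structure can be sketched as ancestral sampling: draw the top-level latent from its prior, then draw each lower level conditioned on the one above it. In the toy below, the conditional mean is a fixed multiple of the parent and the noise scale is constant. In a real hierarchical VAE both would be outputs of neural networks, so coupling and noise_scale are hypothetical stand-ins.

```python
import random
from typing import List


def sample_hierarchy(num_levels: int, coupling: float, noise_scale: float,
                     rng: random.Random) -> List[float]:
    """Ancestral sampling from p(z_L) * p(z_{L-1} | z_L) * ... * p(z_1 | z_2).

    Returns [z_L, ..., z_1], from the top (most abstract) level down.
    """
    z = rng.gauss(0.0, 1.0)          # top-level prior p(z_L) = N(0, 1)
    latents = [z]
    for _ in range(num_levels - 1):
        # Each lower level is Gaussian, centred on a function of its parent.
        z = rng.gauss(coupling * z, noise_scale)
        latents.append(z)
    return latents


rng = random.Random(42)
latents = sample_hierarchy(num_levels=3, coupling=0.8, noise_scale=0.5, rng=rng)
```

Because each level depends on the one above, information about slow-moving structure can sit near the top while the lower levels add finer detail.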

Benefits Over Traditional VAEs in Capturing Intricate Dependencies

Traditional VAEs, with their single-layer latent structures, may struggle to capture the full complexity of financial data, which often involves intricate and hierarchical relationships. Hierarchical VAEs mitigate this limitation by enabling deeper and more expressive representations. This enhanced expressiveness allows D-Va to model the multifaceted nature of stock prices more effectively, leading to improved predictive performance. Additionally, the hierarchical structure facilitates better disentanglement of latent factors, making it easier to interpret and utilize the learned representations for downstream tasks such as forecasting and portfolio optimization.

2. Input Sequence Diffusion (X-Diffusion)

Process of Gradually Adding Gaussian Noise to Input Stock Data

Input Sequence Diffusion, or X-Diffusion, involves the systematic addition of Gaussian noise to the input stock price data across multiple diffusion steps. This process is analogous to gradually corrupting the data, starting from the original signal and incrementally introducing noise until the data becomes indistinguishable from pure noise. Mathematically, this is achieved by defining a diffusion schedule that dictates the variance of the Gaussian noise added at each step. The model is trained to reverse this diffusion process, learning to reconstruct the original, noise-free data from its noisy counterparts.
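A minimal sketch of such a schedule and the resulting noising step follows. It assumes a linear variance schedule with commonly used default endpoints and 1000 steps; the article does not state which schedule or step count D-Va uses, so these values, the function names, and the toy inputs are assumptions.

```python
import math
import random
from typing import List


def linear_beta_schedule(num_steps: int, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> List[float]:
    """Noise variances beta_1..beta_T, linearly spaced (a common default)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: List[float]) -> List[float]:
    """alpha_bar_t = prod_{s<=t} (1 - beta_s): squared signal weight at step t."""
    out, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        out.append(running)
    return out


def diffuse(x0: List[float], alpha_bar_t: float, rng: random.Random) -> List[float]:
    """Sample x_t ~ N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I)."""
    signal, noise = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [signal * v + noise * rng.gauss(0.0, 1.0) for v in x0]


rng = random.Random(0)
returns = [0.4, -1.2, 0.7, 0.1, -0.3]          # hypothetical normalised inputs
alpha_bars = cumulative_alphas(linear_beta_schedule(num_steps=1000))
lightly_noised = diffuse(returns, alpha_bars[9], rng)    # step 10: mostly signal
heavily_noised = diffuse(returns, alpha_bars[-1], rng)   # step 1000: mostly noise
```

At the final step the signal weight is close to zero, which is the "indistinguishable from pure noise" end state described above.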

Purpose of Simulating Real-World Data Stochasticity

The primary objective of X-Diffusion is to simulate the inherent randomness and unpredictability of real-world stock markets. By introducing controlled noise into the input data, the model is exposed to various levels of stochasticity during training. This exposure trains the model to be resilient to noise, ensuring that it can effectively extract meaningful patterns even when the input data is highly volatile and noisy. Consequently, the model’s ability to generalize improves, as it learns to focus on the underlying signal rather than being misled by random fluctuations.

3. Target Sequence Diffusion (Y-Diffusion)

Coupled Diffusion Process Applied to Target Price Sequences

Similar to X-Diffusion, Target Sequence Diffusion (Y-Diffusion) involves adding Gaussian noise to the target price sequences. However, unlike the input diffusion, Y-Diffusion operates on the sequences that the model aims to predict. This coupled diffusion process ensures that both the input and target sequences experience similar levels of noise, maintaining consistency and enabling the model to learn a coherent mapping between noisy inputs and noisy targets.
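One way to picture the coupling is to noise both sequences at the same diffusion step, with the target's noise level tied to the input's. The sketch below does this with a hypothetical target_scale parameter that sets the target's noise variance as a fraction of the input's. How D-Va actually links the two schedules is not specified in this article, so treat the linkage here as an assumption.

```python
import math
import random
from typing import List, Tuple


def noise_sequence(seq: List[float], alpha_bar: float,
                   rng: random.Random) -> List[float]:
    """Draw from N(sqrt(alpha_bar) * seq, (1 - alpha_bar) * I)."""
    signal, noise = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [signal * v + noise * rng.gauss(0.0, 1.0) for v in seq]


def coupled_diffusion(x: List[float], y: List[float], alpha_bar_x: float,
                      target_scale: float, rng: random.Random
                      ) -> Tuple[List[float], List[float]]:
    """Noise inputs and targets at the same diffusion step.

    target_scale is a hypothetical knob in (0, 1]: the target's noise variance
    is target_scale times the input's noise variance.
    """
    noise_var_y = target_scale * (1.0 - alpha_bar_x)
    alpha_bar_y = 1.0 - noise_var_y
    return noise_sequence(x, alpha_bar_x, rng), noise_sequence(y, alpha_bar_y, rng)


rng = random.Random(1)
x_hist = [0.2, -0.5, 0.9, 0.3]   # hypothetical normalised input window
y_future = [0.1, 0.4]            # hypothetical normalised multi-step target
x_t, y_t = coupled_diffusion(x_hist, y_future, alpha_bar_x=0.9,
                             target_scale=0.5, rng=rng)
```

Tying the two noise levels together means the model never sees a heavily corrupted input paired with a pristine target, or the reverse.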

Importance of Handling Noise in Multi-Step Regression Targets

In multi-step regression tasks, the target sequences inherently contain more noise and variability compared to single-step predictions. As the prediction horizon extends, the uncertainty in the forecasts increases, making it crucial to handle this noise effectively. Y-Diffusion addresses this challenge by training the model to predict not just point estimates but distributions that account for the uncertainty in future stock prices. This approach enhances the model’s ability to provide reliable and robust forecasts over multiple time steps, mitigating the impact of accumulated noise and improving overall prediction quality.

4. Denoising Score-Matching

Mechanism for Refining Predictions by Removing Introduced Noise

Denoising Score-Matching is a technique employed to refine the model’s predictions by removing the noise introduced during the diffusion processes. The core idea is to learn the gradient of the data distribution, which effectively guides the model in denoising the noisy predictions. During training, the model learns to estimate the score function — the gradient of the log-probability density of the data — which is then used to perform a denoising step on the predicted sequences. This step ensures that the final predictions are aligned closely with the true underlying stock price trends, free from the residual noise introduced during diffusion.
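The role of the score function in denoising can be checked on a toy case where the score is known exactly. If clean values are Gaussian and Gaussian noise is added, the noisy values are also Gaussian, and a single step of size noise variance along the score moves each noisy value to its expected clean value (Tweedie's formula). The sketch below uses that analytic score in place of a learned one. The distribution parameters are hypothetical, and the article's model would estimate the score with a network rather than a formula.

```python
import random
from statistics import fmean


def gaussian_score(y: float, mean: float, variance: float) -> float:
    """Score d/dy log N(y; mean, variance) = -(y - mean) / variance."""
    return -(y - mean) / variance


def denoise_step(y_noisy: float, noise_var: float, score: float) -> float:
    """One denoising jump: y_noisy + noise_var * score (Tweedie's formula)."""
    return y_noisy + noise_var * score


rng = random.Random(0)
DATA_MEAN, DATA_VAR, NOISE_VAR = 0.0, 1.0, 0.25   # hypothetical toy values

clean = [rng.gauss(DATA_MEAN, DATA_VAR ** 0.5) for _ in range(5000)]
noisy = [c + rng.gauss(0.0, NOISE_VAR ** 0.5) for c in clean]
# The noisy marginal is N(DATA_MEAN, DATA_VAR + NOISE_VAR), so its score is known.
denoised = [
    denoise_step(v, NOISE_VAR, gaussian_score(v, DATA_MEAN, DATA_VAR + NOISE_VAR))
    for v in noisy
]

mse_noisy = fmean((n - c) ** 2 for n, c in zip(noisy, clean))
mse_denoised = fmean((d - c) ** 2 for d, c in zip(denoised, clean))
```

In this toy setting the denoised values sit closer to the clean ones on average than the noisy values do, which is the effect the denoising step is meant to achieve on predicted price sequences.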

Enhancing Model Generalizability and Reducing Uncertainty

By incorporating Denoising Score-Matching, D-Va significantly enhances its generalizability and reduces prediction uncertainty. The denoising step ensures that the model’s outputs are not only accurate but also stable and consistent across different noise levels. This stability is crucial for multi-step predictions, where uncertainty can accumulate over time, leading to increasingly unreliable forecasts. The denoising mechanism mitigates this effect by systematically removing noise, ensuring that the predictions remain robust and reflective of the true market dynamics. As a result, the model delivers more reliable forecasts, which are essential for practical applications such as portfolio optimization and risk management.

Synergistic Integration of Components

The true power of D-Va lies in the seamless integration of these core components. The hierarchical VAE provides a deep and expressive latent space that captures the complex dependencies within stock data. X-Diffusion and Y-Diffusion introduce controlled noise into both inputs and targets, training the model to handle real-world stochasticity effectively. Finally, Denoising Score-Matching ensures that the predictions are refined and free from residual noise, enhancing both accuracy and reliability.

This synergistic combination allows D-Va to excel in multi-step regression tasks, offering a robust and scalable solution for stock price prediction. By addressing the dual challenges of capturing intricate data dependencies and managing inherent noise, D-Va sets a new standard in financial forecasting, paving the way for more informed and strategic investment decisions.

Methodology

The Diffusion Variational Autoencoder (D-Va) presents a sophisticated framework designed to enhance multi-step stock price prediction by effectively addressing the inherent stochasticity of financial markets. The methodology underpinning D-Va integrates Hierarchical Variational Autoencoders (VAEs) with Diffusion Probabilistic Models, creating a robust architecture capable of capturing complex dependencies and mitigating noise in stock data. This section provides a comprehensive overview of D-Va’s architecture, elucidates the training process, and outlines the optimization and inference strategies that ensure its superior performance.

Detailed Architecture

At the heart of D-Va lies a meticulously crafted architecture that synergizes hierarchical VAEs with diffusion processes to handle the dual challenges of capturing intricate data dependencies and managing stochastic noise. The model begins with the Hierarchical VAE, which serves as the backbone for learning rich latent representations from historical stock data. Unlike traditional VAEs that utilize a single layer of latent variables, the hierarchical structure incorporates multiple layers, enabling the model to capture a wide range of abstractions and dependencies within the data. This deep hierarchical setup enhances the expressiveness of the latent space, allowing D-Va to model complex, low-level factors that influence stock price movements.
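The idea of stacked latent layers can be sketched in a few lines. The example below uses random linear maps in place of trained networks, and the layer sizes are arbitrary. Everything here (function names, dimensions, the tanh link between layers) is a hypothetical illustration of a hierarchy of latent variables rather than the architecture in the released code.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, I)."""
    return mu + np.exp(0.5 * log_var) * rng.standard_normal(mu.shape)

def encode_hierarchy(x, weights, rng):
    """Sample one latent per layer; each layer is conditioned on the previous sample."""
    latents, h = [], x
    for w_mu, w_log_var in weights:
        mu, log_var = h @ w_mu, h @ w_log_var
        z = reparameterize(mu, log_var, rng)
        latents.append(z)
        h = np.tanh(z)  # pass this layer's sample on to the next layer
    return latents

rng = np.random.default_rng(0)
dims = [20, 16, 8, 4]  # input size followed by three latent sizes (illustrative)
weights = [
    (0.1 * rng.standard_normal((i, o)), 0.1 * rng.standard_normal((i, o)))
    for i, o in zip(dims[:-1], dims[1:])
]
x = rng.standard_normal((32, 20))  # toy batch of 32 flattened input windows
latents = encode_hierarchy(x, weights, rng)
```

Each entry of `latents` is a sample from one level of the hierarchy, so later levels summarize the data at a coarser level of abstraction than earlier ones.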

Complementing the hierarchical VAE is the Input Sequence Diffusion (X-Diffusion) mechanism. This component systematically introduces Gaussian noise to the input stock price sequences across several diffusion steps. By gradually corrupting the input data, X-Diffusion simulates real-world data stochasticity, training the model to become resilient to noise and focus on extracting meaningful patterns amidst high variability. This noise augmentation process is crucial for enhancing the model’s robustness and ensuring that it can generalize effectively to unseen data with similar noise characteristics.

Equally important is the Target Sequence Diffusion (Y-Diffusion), which applies a coupled diffusion process to the target price sequences. Similar to X-Diffusion, Y-Diffusion adds controlled noise to the future returns that the model aims to predict. This dual diffusion strategy ensures consistency between the input and target sequences, allowing the model to learn a coherent mapping from noisy inputs to noisy targets. By handling noise in both the input and target sequences, Y-Diffusion addresses the additional uncertainty inherent in multi-step regression tasks, where prediction errors can compound over time.

The final component of the architecture is the Denoising Score-Matching module. After generating noisy predictions through the hierarchical VAE and diffusion processes, this module refines the outputs by removing the introduced noise. The denoising function learns to estimate the gradient of the log-probability density of the data, effectively guiding the model in cleaning the predictions. This refinement step is pivotal in enhancing the model’s generalizability and reducing prediction uncertainty, ensuring that the final forecasts align closely with the true underlying stock price trends.

The flow of data through the D-Va model begins with X-Diffusion, which adds noise to the input sequence and creates a series of increasingly noisy versions of the data. The hierarchical VAE encoder encodes the noisy input into a multi-layered latent space, and the VAE decoder generates the corresponding noisy predictions from these latent variables. In parallel, Y-Diffusion applies noise to the target sequences, so that the model learns from both noisy inputs and noisy targets. Finally, the denoising score-matching module cleans the predictions, producing refined forecasts that are more resilient to noise.

Training Process

Training the D-Va model involves a comprehensive process that integrates diffusion and denoising phases to ensure robust multi-step stock price predictions. The training regimen begins with the application of both X-Diffusion and Y-Diffusion to the input and target sequences, respectively. This dual diffusion process generates noisy versions of the historical stock data and future returns, effectively simulating the stochastic nature of real-world financial markets.

Once the diffusion processes are applied, the hierarchical VAE encoder processes the noisy input sequences, extracting latent representations that encapsulate the underlying patterns in the data. These latent variables are then used by the VAE decoder to generate noisy predictions of the target sequences. The model is trained to minimize the discrepancy between these noisy predictions and the actual noisy targets, thereby learning to accurately map noisy inputs to noisy outputs.

Central to the training process is the loss function, which combines three key components: Mean Squared Error (MSE), Kullback–Leibler (KL) Divergence, and Denoising Score-Matching (DSM). The MSE measures the reconstruction accuracy by quantifying the difference between the predicted noisy sequences and the actual noisy targets. The KL Divergence regularizes the latent space, ensuring that the learned latent variables adhere to a predefined distribution, typically Gaussian. This regularization is crucial for maintaining the integrity and consistency of the latent representations across different data samples.
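The first two terms have simple closed forms. The sketch below assumes a diagonal Gaussian posterior and a standard normal prior, which is the common textbook choice; the article does not state the exact prior used in D-Va, so treat this as an illustration.

```python
import numpy as np

def mse_loss(pred, target):
    """Mean squared error between predicted and target sequences."""
    return float(np.mean((pred - target) ** 2))

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), averaged over the batch."""
    kl_per_sample = 0.5 * np.sum(mu**2 + np.exp(log_var) - 1.0 - log_var, axis=-1)
    return float(np.mean(kl_per_sample))

# A posterior equal to the prior has zero KL; shifting the mean adds a penalty.
kl_zero = kl_to_standard_normal(np.zeros((4, 8)), np.zeros((4, 8)))
kl_shifted = kl_to_standard_normal(np.ones((4, 8)), np.zeros((4, 8)))
toy_mse = mse_loss(np.array([1.0, 2.0]), np.array([1.0, 4.0]))
```

The KL term grows as the posterior mean drifts from zero or its variance drifts from one, which is how it keeps the latent variables close to the chosen prior.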

The DSM component plays a critical role in refining the predictions by learning to remove the introduced noise. It achieves this by estimating the gradient of the log-probability density of the data, effectively guiding the model in denoising the predictions. This component ensures that the final outputs are not only accurate in terms of reconstruction but also free from residual noise, thereby enhancing the model’s ability to generalize to new, unseen data.

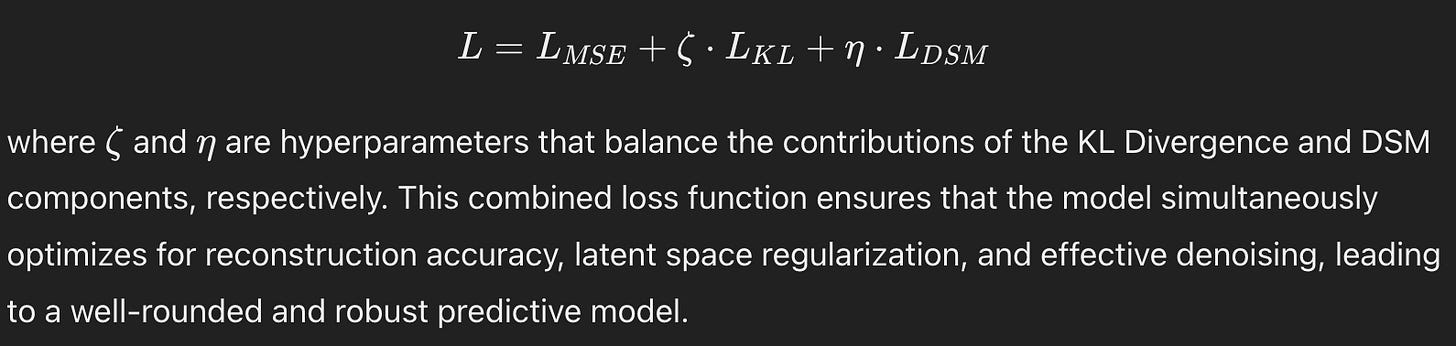

The overall loss function can be expressed as:
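In text form, and going only by the description in this article (three loss terms, with ζ and η as trade-off weights), the loss plausibly reads as follows. Which weight attaches to which term is an assumption based on the order in which the KL and DSM losses are named here.

```latex
\mathcal{L} \;=\; \mathcal{L}_{\mathrm{MSE}} \;+\; \zeta \, \mathcal{L}_{\mathrm{KL}} \;+\; \eta \, \mathcal{L}_{\mathrm{DSM}}
```

Here the first term is the reconstruction error on the noisy targets, the second is the KL regularizer on the latent variables, and the third is the denoising score-matching loss.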

Optimization and Inference

Optimizing the D-Va model involves fine-tuning its parameters to minimize the combined loss function while ensuring efficient and stable convergence. The training process employs the Adam optimizer, a widely used optimization algorithm known for its adaptive learning rate capabilities and efficiency in handling large-scale data. The Adam optimizer dynamically adjusts the learning rates of the model parameters based on the first and second moments of the gradients, facilitating faster convergence and improved performance.
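For readers who want to see the moment estimates in action, here is a small NumPy version of the Adam update applied to a toy quadratic. The default hyperparameters follow the values commonly used for Adam; the learning rate and the toy objective are illustrative, and in practice a deep learning framework's built-in optimizer would be used instead.

```python
import numpy as np

def adam_step(param, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; t is the 1-based step count used for bias correction."""
    m = beta1 * m + (1 - beta1) * grad       # first moment (running mean of gradients)
    v = beta2 * v + (1 - beta2) * grad**2    # second moment (running mean of squares)
    m_hat = m / (1 - beta1**t)
    v_hat = v / (1 - beta2**t)
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v

theta = np.array([3.0, -2.0])
m, v = np.zeros_like(theta), np.zeros_like(theta)
for t in range(1, 2001):
    grad = 2.0 * theta  # gradient of the toy objective sum(theta^2)
    theta, m, v = adam_step(theta, grad, m, v, t, lr=0.01)
```

Dividing by the square root of the second moment gives each parameter its own effective step size, which is the adaptive behaviour described above.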

Hyperparameter tuning is a critical aspect of the optimization process. The trade-off parameters ζ and η are selected through grid search, systematically exploring a range of values to identify the optimal balance between KL Divergence and DSM losses. This tuning ensures that the model effectively regularizes the latent space while maintaining high denoising performance, ultimately leading to superior prediction accuracy and reliability.
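A grid search over the two weights can be sketched as below. The candidate values and the `toy_validation_loss` function are placeholders; in a real run that function would train the model with the given ζ and η and return its validation loss.

```python
import itertools

def grid_search(zetas, etas, validation_loss):
    """Return (best_loss, best_zeta, best_eta) over all combinations."""
    best = None
    for zeta, eta in itertools.product(zetas, etas):
        loss = validation_loss(zeta, eta)
        if best is None or loss < best[0]:
            best = (loss, zeta, eta)
    return best

def toy_validation_loss(zeta, eta):
    """Placeholder for 'train with (zeta, eta) and return the validation loss'."""
    return (zeta - 0.1) ** 2 + (eta - 1.0) ** 2

best_loss, best_zeta, best_eta = grid_search(
    zetas=[0.01, 0.1, 1.0],   # hypothetical candidate values
    etas=[0.1, 1.0, 10.0],    # hypothetical candidate values
    validation_loss=toy_validation_loss,
)
```

The cost grows with the product of the two grid sizes, since every combination requires a full training run, so grids of this kind are usually kept small.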

During training, the model is subjected to multiple epochs, with early stopping criteria based on validation performance to prevent overfitting. By monitoring the validation loss, the training process halts once the model begins to exhibit diminishing returns or increased validation error, ensuring that the model generalizes well to unseen data.
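A common way to implement this rule is a patience counter. The sketch below is one such version; the patience value and the sequence of validation losses are made up for illustration, and the article does not specify the exact criterion used.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return the epoch index at which training would stop."""
    best, bad_epochs = float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best, bad_epochs = loss, 0   # improvement: reset the counter
        else:
            bad_epochs += 1              # no improvement this epoch
            if bad_epochs >= patience:
                return epoch
    return len(val_losses) - 1           # never triggered: ran all epochs

val_losses = [1.0, 0.8, 0.7, 0.72, 0.71, 0.73, 0.69]  # hypothetical values
stop_epoch = early_stopping_epoch(val_losses, patience=3)
```

In this example training stops at epoch 5, after three consecutive epochs without beating the best loss of 0.7. In practice the weights from the best epoch are usually restored afterwards.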

The inference process at test-time is streamlined to facilitate efficient and accurate multi-step predictions. Once trained, the hierarchical VAE decoder generates predictions from the input sequences without the need for iterative denoising steps. However, to enhance the quality of these predictions, a single-step denoising jump is performed using the learned score function from the DSM module. This denoising step removes any residual noise from the predictions, producing the final cleaned forecasts that closely align with the true stock price movements.
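The exact update used by D-Va is not spelled out here, so the sketch below uses Tweedie's formula, a standard one-step denoiser for Gaussian noise, as a stand-in: denoised = noisy + sigma² × score(noisy). The synthetic data and the analytic score function are assumptions that let the result be checked.

```python
import numpy as np

def denoise_jump(noisy, score_fn, sigma):
    """Single-step denoising via Tweedie's formula for Gaussian noise."""
    return noisy + sigma**2 * score_fn(noisy)

rng = np.random.default_rng(0)
data_std, sigma = 1.0, 0.5
clean = data_std * rng.standard_normal(50_000)
noisy = clean + sigma * rng.standard_normal(clean.shape)

def gaussian_score(x):
    """Exact score of the noisy density N(0, data_std^2 + sigma^2)."""
    return -x / (data_std**2 + sigma**2)

denoised = denoise_jump(noisy, gaussian_score, sigma)
mse_noisy = float(np.mean((noisy - clean) ** 2))
mse_denoised = float(np.mean((denoised - clean) ** 2))
```

On this toy problem the jump lowers the error from about sigma² = 0.25 to about 0.2, the best achievable by any denoiser for these Gaussian settings. A learned score would replace `gaussian_score` in a real model.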

This denoising jump is pivotal in maintaining the model’s robustness and ensuring that the predictions remain accurate and stable, even in the face of substantial market volatility. By effectively removing the noise introduced during training, the model delivers high-quality forecasts that are both precise and reliable, making it a valuable tool for financial institutions and investors seeking to optimize their investment strategies and manage risks effectively.

Experimental Setup

To rigorously evaluate the effectiveness of the Diffusion Variational Autoencoder (D-Va) in multi-step stock price prediction, a comprehensive experimental setup was established. This setup encompasses the selection and preparation of datasets, the identification of appropriate baseline models for comparison, and the definition of robust evaluation metrics to assess the model’s performance both in terms of prediction accuracy and practical financial applicability.

Datasets

The primary dataset utilized for training and evaluating the D-Va model is the ACL18 StockNet dataset. This dataset comprises historical price information for 88 high-trade-volume stocks from the U.S. market, representing the top 8–10 stocks across nine major industries. The data spans from January 1, 2014, to January 1, 2017, providing a solid foundation for training the model on diverse market conditions and stock behaviors.

To enhance the robustness and relevance of the experiments, the ACL18 StockNet dataset was extended by incorporating additional data collected up to January 1, 2023. This extension involved updating the dataset to include the latest top 10 stocks from eleven major industries, resulting in a total of 110 stocks. The extended data was sourced from Yahoo Finance and processed in a manner consistent with the original ACL18 dataset to maintain uniformity.

For the purpose of evaluating the model across different time periods, the extended dataset was divided into three distinct subsets, each covering three consecutive years: 2014–2016, 2017–2019, and 2020–2022. Each subset was further split chronologically into training, validation, and test sets following a 7:1:2 ratio. This chronological splitting ensures that the model is trained on past data and validated and tested on future data, mirroring real-world forecasting scenarios. The consistent dataset length across experiments allows for a fair and comprehensive comparison of model performance over different temporal contexts.
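The chronological 7:1:2 split can be written as a small helper. The function name and the figure of 750 trading days are illustrative assumptions; the key point is that no shuffling takes place.

```python
def chronological_split(n_samples, ratios=(7, 1, 2)):
    """Return train/validation/test slices in time order, without shuffling."""
    total = sum(ratios)
    train_end = n_samples * ratios[0] // total
    val_end = n_samples * (ratios[0] + ratios[1]) // total
    return slice(0, train_end), slice(train_end, val_end), slice(val_end, n_samples)

days = list(range(750))  # stand-in for roughly three years of trading days
train_idx, val_idx, test_idx = chronological_split(len(days))
train, val, test = days[train_idx], days[val_idx], days[test_idx]
```

Every training day precedes every validation day, which in turn precedes every test day, so no future information leaks into training.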

Baselines for Comparison

To benchmark the performance of D-Va, several baseline models were selected, representing a spectrum of traditional statistical methods and advanced machine learning techniques commonly employed in stock price prediction. These baselines include:

ARIMA (Autoregressive Integrated Moving Average): A traditional statistical model renowned for its efficacy in time series forecasting, ARIMA combines autoregressive and moving average components to model temporal dependencies. Its inclusion serves as a fundamental benchmark for comparing the performance of more sophisticated models.

NBA (Numerical-Based Attention): NBA leverages a Long Short-Term Memory (LSTM) network augmented with an attention mechanism to capture both temporal dependencies and potential interactions within the data. This model is specifically tailored for multi-step stock prediction tasks, making it a relevant competitor for D-Va.

VAE (Variational Autoencoder): Serving as a direct comparison to the hierarchical VAE component of D-Va, the vanilla VAE model is employed to assess the impact of hierarchical structuring on prediction performance. This model focuses on learning latent representations from stock data without incorporating diffusion processes.

VAE + Adversarial: This variant enhances the vanilla VAE by introducing adversarial perturbations to the input data during training. The adversarial component aims to simulate data stochasticity, thereby improving the model’s robustness to noise and enhancing its generalization capabilities.

Autoformer: As a state-of-the-art sequence-to-sequence prediction model, Autoformer integrates an autocorrelation mechanism to capture long-term dependencies and periodic patterns within the data. Its robust performance in various time series forecasting tasks makes it an essential benchmark for evaluating the efficacy of D-Va.

The selection of these baselines is justified by their widespread use and proven effectiveness in stock price prediction tasks. By comparing D-Va against both traditional models like ARIMA and advanced deep learning models like Autoformer, a comprehensive assessment of its relative performance and advantages is achieved.

Evaluation Metrics

The evaluation of the D-Va model’s performance is conducted using a combination of quantitative metrics that measure both prediction accuracy and the practical financial implications of the forecasts. The primary metrics employed are:

Mean Squared Error (MSE): MSE quantifies the average squared difference between the predicted stock returns and the actual returns. It serves as a fundamental measure of prediction accuracy, with lower MSE values indicating more precise forecasts. MSE is particularly useful for regression tasks, providing a clear indication of how closely the model’s predictions align with true values.

Standard Deviation (SD): SD measures the variability or dispersion of the prediction errors across multiple runs and stocks. A lower standard deviation signifies that the model produces consistent predictions with minimal fluctuation, highlighting its reliability and stability in diverse market conditions.

Sharpe Ratio: Beyond traditional accuracy metrics, the Sharpe Ratio is employed to evaluate the practical financial performance of the model’s predictions in portfolio optimization tasks. The Sharpe Ratio is the ratio of a portfolio’s expected return, conventionally measured in excess of the risk-free rate, to the volatility (standard deviation) of its returns, so it summarizes risk-adjusted performance in a single number. Higher Sharpe Ratios indicate better returns for the level of risk undertaken, making it a critical metric for assessing the real-world applicability and financial value of the predictive model.
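The three metrics above can be computed in a few lines of NumPy. The per-run MSE values are invented for illustration. The zero risk-free rate, the 252-day annualization factor, and the use of the sample standard deviation are common conventions assumed here, not settings confirmed by the article.

```python
import numpy as np

def mse(pred, actual):
    """Mean squared error between predicted and actual returns."""
    return float(np.mean((np.asarray(pred) - np.asarray(actual)) ** 2))

def sharpe_ratio(returns, risk_free=0.0, periods_per_year=252):
    """Annualized Sharpe ratio from per-period portfolio returns."""
    excess = np.asarray(returns) - risk_free
    return float(np.sqrt(periods_per_year) * excess.mean() / excess.std(ddof=1))

run_mses = [0.012, 0.010, 0.011]             # hypothetical MSE from three runs
mse_mean = float(np.mean(run_mses))
mse_sd = float(np.std(run_mses, ddof=1))     # spread of the error across runs

toy_sharpe = sharpe_ratio([0.01, 0.02, 0.03], periods_per_year=1)
```

Reporting the mean MSE together with its standard deviation across runs shows how good the predictions are on average and how much that quality varies from run to run.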

The combination of MSE and SD offers a balanced view of the model’s accuracy and consistency, while the Sharpe Ratio bridges the gap between theoretical prediction performance and practical financial utility. This multifaceted evaluation approach ensures that the D-Va model is not only accurate in its forecasts but also delivers tangible benefits in terms of investment strategy and risk management.

Results and Discussion

The experimental evaluation of the Diffusion Variational Autoencoder (D-Va) underscores its efficacy in enhancing multi-step stock price prediction across various temporal horizons. By systematically comparing D-Va against established baseline models — ARIMA, NBA, VAE, VAE with Adversarial Training, and Autoformer — the results reveal significant advancements in both prediction accuracy and consistency. These findings not only highlight the superior performance of D-Va in handling complex stock market dynamics but also demonstrate its practical applicability in financial decision-making processes such as portfolio optimization.

Prediction Performance (RQ1)

The primary objective of this study was to assess how D-Va performs relative to existing models across different prediction horizons of 10, 20, 40, and 60 days. The evaluation focused on two key metrics: Mean Squared Error (MSE) and Standard Deviation (SD) of the prediction errors. Across all tested horizons, D-Va consistently outperformed the baseline models, showcasing lower MSE values and reduced prediction variance.
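To make the multi-step setup concrete, the sketch below turns a single series into input and target windows for each horizon. The lookback of 20 days and the synthetic series are assumptions; the article does not state the input length used in the experiments.

```python
import numpy as np

def make_windows(series, lookback, horizon):
    """Build (X, Y) pairs: `lookback` past values predict the next `horizon` values."""
    n = len(series) - lookback - horizon + 1
    X = np.stack([series[i:i + lookback] for i in range(n)])
    Y = np.stack([series[i + lookback:i + lookback + horizon] for i in range(n)])
    return X, Y

series = np.arange(100, dtype=float)  # stand-in for a daily return series
shapes = {}
for horizon in (10, 20, 40, 60):
    X, Y = make_windows(series, lookback=20, horizon=horizon)
    shapes[horizon] = (X.shape, Y.shape)
```

Longer horizons leave fewer usable windows from the same history, and each target is a full sequence rather than a single value. This is what makes multi-step regression harder than single-step prediction.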

For shorter prediction horizons, such as 10 and 20 days, D-Va achieved substantial improvements in MSE compared to baselines like ARIMA and NBA, which demonstrated higher errors due to their limited capacity to capture long-term dependencies and handle data stochasticity effectively. When compared to deep learning models like the vanilla VAE and Autoformer, D-Va not only maintained lower MSE scores but also exhibited significantly lower standard deviations. This indicates that D-Va not only predicts more accurately but does so with greater consistency, reducing the uncertainty typically associated with multi-step stock price forecasts.

As the prediction horizon extended to 40 and 60 days, the performance gap between D-Va and the baselines widened. While models like ARIMA and Autoformer showed increasing MSE and SD with longer horizons — indicative of their struggles with error accumulation and noise management — D-Va maintained a relatively stable performance. This resilience can be attributed to the integrated diffusion processes and hierarchical latent representations, which enable the model to effectively manage the compounded uncertainty inherent in multi-step predictions. The ability of D-Va to sustain low prediction errors and variance over extended periods underscores its robustness and reliability in dynamic market conditions.

Ablation Study (RQ2)

To understand the contribution of each component within the D-Va framework, an ablation study was conducted by systematically removing individual elements — namely the hierarchical VAE, diffusion processes, and denoising mechanism — and observing the impact on model performance. The study revealed that each component plays a crucial role in enhancing the overall predictive capability of D-Va.

When the hierarchical VAE was excluded, resulting in a simpler VAE model, there was a noticeable decline in prediction accuracy and an increase in prediction variance. This highlights the importance of the hierarchical structure in capturing complex, multi-level dependencies within the stock data. The hierarchical VAE’s ability to learn deep latent representations significantly contributes to the model’s superior performance, as it enables a more nuanced understanding of the underlying market dynamics.

Removing the diffusion processes — both input sequence diffusion (X-Diffusion) and target sequence diffusion (Y-Diffusion) — further degraded the model’s performance. The absence of diffusion steps led to higher MSE and SD, particularly in longer prediction horizons, illustrating the critical role of diffusion in simulating real-world data stochasticity. Diffusion processes train the model to handle noise effectively, thereby enhancing its robustness against the high variability characteristic of stock prices. Without this noise augmentation, the model struggled to generalize, resulting in less accurate and more volatile predictions.

The final component, denoising score-matching, was also evaluated by excluding it from the model. This removal led to a slight increase in prediction variance, though the MSE remained relatively stable. The denoising step is essential for refining the predictions by systematically removing residual noise, thus ensuring that the forecasts are not only accurate but also stable. This component enhances the model’s ability to produce clean and reliable predictions, which is particularly important in practical applications where decision-making relies on the consistency of forecasted values.

The ablation study shows that all three components of D-Va (hierarchical VAE, diffusion processes, and denoising score-matching) contribute to its enhanced performance. The interplay between these elements ensures that the model can effectively capture complex market dynamics, manage stochastic noise, and deliver accurate, consistent multi-step predictions. Additionally, the study revealed a relationship between model uncertainty, as measured by prediction variance, and MSE improvements: higher uncertainty in baseline models corresponded with greater MSE reductions when incorporating the full D-Va framework, emphasizing the model’s capability to mitigate uncertainty through its integrated components.

Portfolio Optimization (RQ3)

Beyond prediction accuracy, the practical utility of D-Va was evaluated through portfolio optimization tasks, leveraging its multi-step forecasts to construct stock portfolios aimed at maximizing returns while minimizing risk. The Sharpe Ratio, a key metric that measures risk-adjusted returns, was employed to assess the effectiveness of the portfolios formed using D-Va’s predictions compared to those based on baseline models and an equal-weight portfolio benchmark.

Portfolios constructed using D-Va’s predictions consistently achieved higher Sharpe Ratios across all tested time horizons. This superior performance is indicative of the model’s ability to generate forecasts that not only predict future returns accurately but also manage volatility effectively. In contrast, portfolios based on models like ARIMA and Autoformer exhibited lower Sharpe Ratios, reflecting their higher prediction errors and greater uncertainty in forecasts. The reduced variance in D-Va’s predictions translates directly into more stable and reliable portfolio performance, underscoring the model’s practical benefits in financial decision-making.

The integration of graphical lasso regularization further enhanced the portfolio optimization results by refining the covariance matrix of predicted returns. This regularization technique mitigates the impact of uncertainty in the model’s predictions, leading to more diversified and less volatile portfolios. When combined with D-Va’s accurate and low-variance forecasts, the regularized portfolios consistently outperformed those formed using baseline models, demonstrating the synergistic effect of accurate predictions and robust risk management.
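The portfolio step can be illustrated with a small mean-variance example. To keep the sketch dependency-free, a simple diagonal shrinkage stands in for graphical lasso (it is not the same estimator), and the expected returns and covariance matrix are invented numbers rather than model outputs.

```python
import numpy as np

def shrink_covariance(cov, shrinkage=0.2):
    """Blend the covariance with its diagonal (a simple stand-in regularizer)."""
    return (1.0 - shrinkage) * cov + shrinkage * np.diag(np.diag(cov))

def max_sharpe_weights(expected_returns, cov):
    """Unconstrained maximum-Sharpe weights, proportional to inv(cov) @ mu.

    Short positions are allowed; the weights are normalized to sum to 1.
    """
    raw = np.linalg.solve(cov, expected_returns)
    return raw / raw.sum()

mu = np.array([0.02, 0.01, 0.015])          # hypothetical predicted returns
cov = np.array([[0.04, 0.01, 0.0],
                [0.01, 0.02, 0.005],
                [0.0, 0.005, 0.03]])        # hypothetical return covariance

weights = max_sharpe_weights(mu, shrink_covariance(cov))
```

Regularizing the covariance before inverting it damps the effect of noisy off-diagonal estimates on the weights. Graphical lasso plays that role in the study by encouraging a sparse inverse covariance matrix.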

Moreover, the study highlighted that the Sharpe Ratio improvements were not solely a function of lower MSE but were also significantly influenced by the reduced prediction variance. This relationship underscores the importance of consistency and reliability in financial forecasts, as stable predictions lead to more predictable and manageable portfolio performance. By addressing both accuracy and uncertainty, D-Va provides a comprehensive solution that enhances both the theoretical and practical aspects of stock price prediction and portfolio management.