Keras Machine Learning Models: Three Ways to Create Them

If you’ve looked at Keras models on GitHub, you’ve probably noticed that there are a few different ways to create them.

You can define an entire model with the Sequential class in a single statement (usually split across lines for readability), you can use the functional interface for more complex model architectures, and you can subclass the Model class to make parts of a model reusable. This article examines these different ways of creating models in Keras, along with their benefits and drawbacks, so that you have the knowledge you need to build your own machine learning models in Keras.

After completing this tutorial, you will know:

Model building methods offered by Keras

How to use the Sequential class and the functional interface, and how to subclass the keras.Model class

The different methods for creating Keras models and when to use them

Here we go!

The big picture

There are three parts to this tutorial that cover how to build machine learning models in Keras:

Using the Sequential class

Using the functional interface of Keras

Subclassing keras.Model

Using the Sequential class

A Sequential model is exactly what it sounds like: a stack of layers, one on top of the other. According to the Keras documentation,

A Sequential model is appropriate for a plain stack of layers where each layer has exactly one input tensor and one output tensor.

The Sequential class makes getting started with Keras simple. After importing TensorFlow, import the Sequential model:

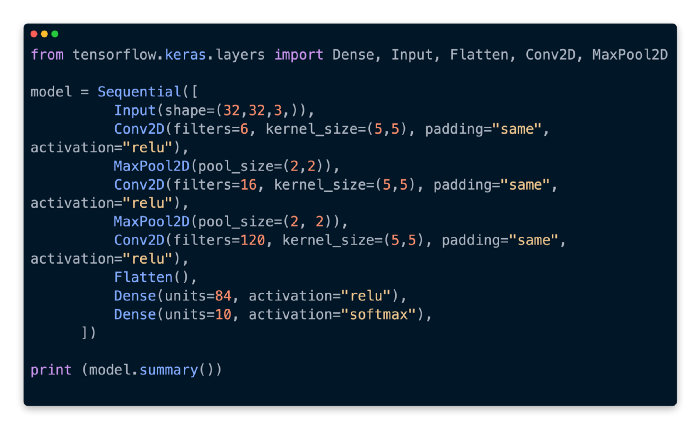
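With TensorFlow 2, where Keras is available as `tf.keras`, the import might look like the sketch below. The exact module path is an assumption, since the original import appears only as an image.

```python
import tensorflow as tf
from tensorflow.keras.models import Sequential

# Sequential is a subclass of the general Keras Model class
print(issubclass(Sequential, tf.keras.Model))  # True
```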

Now we can start building our machine learning model by stacking layers together. Let’s build a LeNet5 model that takes images from the classic CIFAR-10 dataset as input.

In the Sequential model constructor, we simply pass in a list of the layers our model should contain. We can then see the model’s architecture by calling model.summary().
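A minimal sketch of a LeNet5-style Sequential model for CIFAR-10 is shown below. The filter and unit counts follow the classic LeNet-5 (6, 16 and 120 filters, then 84 units); the `"same"` padding, ReLU activations and 10-way softmax output are assumptions, not necessarily the settings of the original code.

```python
from tensorflow.keras.layers import Conv2D, Dense, Flatten, Input, MaxPool2D
from tensorflow.keras.models import Sequential

# LeNet5-style network for 32x32 RGB CIFAR-10 images.
# Padding and activation choices are assumptions for this sketch.
model = Sequential([
    Input(shape=(32, 32, 3)),
    Conv2D(6, (5, 5), padding="same", activation="relu"),
    MaxPool2D((2, 2)),                      # 32x32 -> 16x16
    Conv2D(16, (5, 5), padding="same", activation="relu"),
    MaxPool2D((2, 2)),                      # 16x16 -> 8x8
    Conv2D(120, (5, 5), padding="same", activation="relu"),
    Flatten(),                              # 8 * 8 * 120 = 7680 features
    Dense(84, activation="relu"),
    Dense(10, activation="softmax"),        # one probability per CIFAR-10 class
])

model.summary()
```

With these settings the model has 697,046 parameters; most of them sit in the first Dense layer, which connects the 7,680 flattened features to 84 units.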

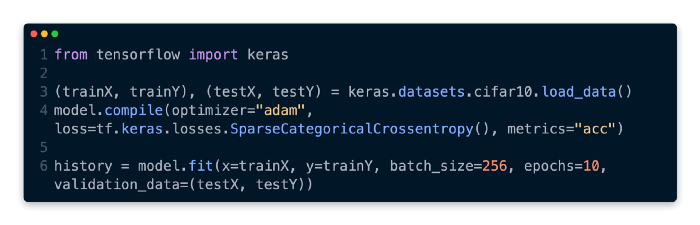

Finally, as a quick test, let’s compile the model with model.compile and train it on the CIFAR-10 dataset with model.fit.
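A sketch of this step is below. The helper names `load_cifar10` and `compile_and_fit` are hypothetical, and the Adam optimizer, 10 epochs and batch size of 128 are assumptions rather than the original settings. CIFAR-10 labels come as integer class ids, which is why the sparse variant of categorical cross-entropy is used.

```python
import tensorflow as tf

def load_cifar10():
    """Download CIFAR-10 and scale pixel values from [0, 255] to [0, 1]."""
    (x_train, y_train), (x_test, y_test) = tf.keras.datasets.cifar10.load_data()
    return ((x_train.astype("float32") / 255.0, y_train),
            (x_test.astype("float32") / 255.0, y_test))

def compile_and_fit(model, train, test, epochs=10, batch_size=128):
    """Compile `model` for 10-class classification and train it.

    Labels are integer class ids of shape (N, 1), so sparse categorical
    cross-entropy is used instead of one-hot targets.
    """
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    x_train, y_train = train
    # Evaluate on the held-out split after every epoch
    return model.fit(x_train, y_train,
                     epochs=epochs,
                     batch_size=batch_size,
                     validation_data=test)
```

With the Sequential LeNet5 stored in `model`, training would then be `history = compile_and_fit(model, *load_cifar10())`; the first call downloads the dataset.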

This gives us the training output.

That’s pretty good for a first pass at a model. Now that we have put together LeNet5 using a Sequential model, let’s explore how Keras models can be constructed using other methods, starting with the functional interface!

Using the functional interface of Keras

Next, let’s explore Keras’ functional interface for constructing models. In the functional interface, each layer is called like a function: it accepts tensors as input and outputs tensors. Unlike Sequential models, where layers are simply stacked on top of each other, the functional interface offers greater flexibility in how Keras models are represented. It lets us build models that branch into multiple paths, have multiple inputs, and so on.

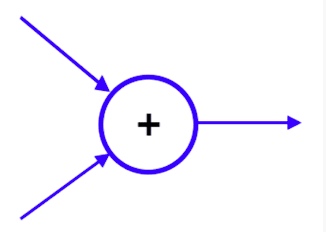

For example, consider an Add layer, which adds together the tensors coming from two or more paths.

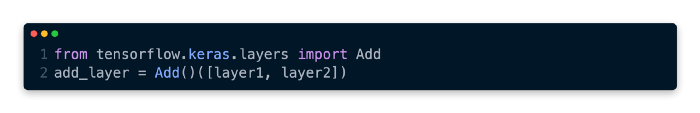

Because it has multiple inputs, this model cannot be defined with a Sequential object: it cannot be represented as a linear stack of layers. This is where the functional interface of Keras comes in. With it, we can define a model containing an Add layer that takes two input tensors.
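A minimal sketch of such a model follows. The input size of 16 features and the Dense layers feeding the Add layer are arbitrary choices for illustration; the key point is that `Model` is built from its input and output tensors rather than from a list of layers.

```python
import tensorflow as tf
from tensorflow.keras.layers import Add, Dense, Input
from tensorflow.keras.models import Model

# Two independent inputs; 16 features each is an arbitrary choice
input_1 = Input(shape=(16,))
input_2 = Input(shape=(16,))

# Each layer is called like a function: tensors in, tensors out
branch_1 = Dense(8, activation="relu")(input_1)
branch_2 = Dense(8, activation="relu")(input_2)

# Add sums the two same-shaped branch outputs element by element
merged = Add()([branch_1, branch_2])
output = Dense(1)(merged)

# The model is defined by its inputs and outputs, not by a layer list
model = Model(inputs=[input_1, input_2], outputs=output)
model.summary()

# Quick check: 3 examples per input produce 3 predictions
pred = model.predict([tf.ones((3, 16)), tf.ones((3, 16))], verbose=0)
print(pred.shape)  # (3, 1)
```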

Now that we’ve seen an example of the functional interface, let’s re-implement the LeNet5 model we defined earlier with the Sequential class, this time using the functional interface.
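Below is a sketch of a LeNet5-style network written with the functional interface, assuming "same" padding, ReLU activations and a 10-way softmax output (these settings are assumptions). Each layer is called on the output tensor of the previous one, and `Model` ties the input and output tensors together.

```python
from tensorflow.keras.layers import Conv2D, Dense, Flatten, Input, MaxPool2D
from tensorflow.keras.models import Model

# Explicit wiring: every layer is applied to the previous layer's output
inputs = Input(shape=(32, 32, 3))
x = Conv2D(6, (5, 5), padding="same", activation="relu")(inputs)
x = MaxPool2D((2, 2))(x)
x = Conv2D(16, (5, 5), padding="same", activation="relu")(x)
x = MaxPool2D((2, 2))(x)
x = Conv2D(120, (5, 5), padding="same", activation="relu")(x)
x = Flatten()(x)
x = Dense(84, activation="relu")(x)
outputs = Dense(10, activation="softmax")(x)

model = Model(inputs=inputs, outputs=outputs)
model.summary()
```

A Sequential stack of the same layers with the same settings has the same output shapes and the same 697,046 parameters, so `model.summary()` reports an identical architecture for both.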

Looking at the model summary, we can see that the LeNet5 model implemented with the functional interface has the same architecture as the one built with the Sequential class.

The functional interface can express many architectures that the Sequential class cannot. Let’s examine one such example: the residual block introduced in ResNet. Visually, the residual block looks like this:

The residual block diagram comes from the ResNet paper: https://arxiv.org/pdf/1512.03385.pdf

Because of the skip connection, this block cannot be represented as a simple stack of layers, so it cannot be defined with the Sequential class. Using the functional interface, we can define a ResNet block as follows:

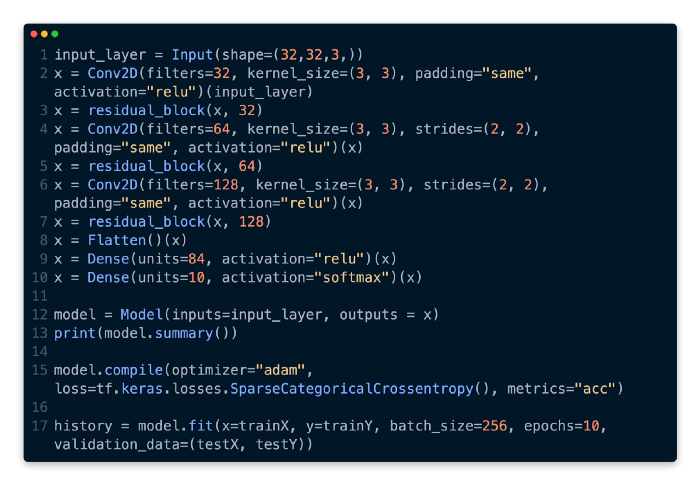
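A sketch of a basic residual block in the functional interface is below. Following the ResNet paper, batch normalization comes right after each convolution and the block output is ReLU(F(x) + x). The `residual_block` name and the 1x1 projection shortcut used when the channel count changes are choices made for this sketch.

```python
from tensorflow.keras.layers import Add, BatchNormalization, Conv2D, Input, ReLU
from tensorflow.keras.models import Model

def residual_block(x, filters):
    """Basic residual block: ReLU(F(x) + shortcut(x)).

    F(x) is conv -> batch norm -> ReLU -> conv -> batch norm. The shortcut
    is the identity when channel counts match; otherwise a 1x1 convolution
    projects x to `filters` channels so the Add inputs have the same shape.
    """
    shortcut = x
    if x.shape[-1] != filters:
        shortcut = Conv2D(filters, (1, 1), padding="same")(x)

    y = Conv2D(filters, (3, 3), padding="same")(x)
    y = BatchNormalization()(y)
    y = ReLU()(y)
    y = Conv2D(filters, (3, 3), padding="same")(y)
    y = BatchNormalization()(y)

    # The skip connection: a merge that a Sequential stack cannot express
    y = Add()([y, shortcut])
    return ReLU()(y)

# Wrap a single block in a model to inspect it
inputs = Input(shape=(32, 32, 16))
outputs = residual_block(inputs, 16)
block_model = Model(inputs, outputs)
block_model.summary()
```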

Using these residual blocks together with the functional interface, we can build a simple network.
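A sketch of such a network follows. The layout (one plain convolution, two residual blocks, global average pooling and a softmax classifier), the filter counts and the compile settings are all assumptions for illustration, and `build_residual_net` is a hypothetical helper. The residual block function is included so that the listing runs on its own.

```python
import numpy as np
from tensorflow.keras.layers import (Add, BatchNormalization, Conv2D, Dense,
                                     GlobalAveragePooling2D, Input, MaxPool2D, ReLU)
from tensorflow.keras.models import Model

def residual_block(x, filters):
    """ReLU(F(x) + shortcut(x)), with a 1x1 projection when channels differ."""
    shortcut = x
    if x.shape[-1] != filters:
        shortcut = Conv2D(filters, (1, 1), padding="same")(x)
    y = Conv2D(filters, (3, 3), padding="same")(x)
    y = BatchNormalization()(y)
    y = ReLU()(y)
    y = Conv2D(filters, (3, 3), padding="same")(y)
    y = BatchNormalization()(y)
    return ReLU()(Add()([y, shortcut]))

def build_residual_net(num_classes=10):
    """Small CIFAR-10 classifier built from two residual blocks."""
    inputs = Input(shape=(32, 32, 3))
    x = Conv2D(32, (3, 3), padding="same", activation="relu")(inputs)
    x = residual_block(x, 32)          # identity shortcut
    x = MaxPool2D((2, 2))(x)           # 32x32 -> 16x16
    x = residual_block(x, 64)          # projection shortcut, 32 -> 64 channels
    x = GlobalAveragePooling2D()(x)    # one value per channel
    outputs = Dense(num_classes, activation="softmax")(x)
    model = Model(inputs=inputs, outputs=outputs)
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model

model = build_residual_net()
model.summary()

# Smoke test on random images before training on the real dataset
probs = model.predict(np.random.rand(2, 32, 32, 3).astype("float32"), verbose=0)
print(probs.shape)  # (2, 10)
```

To train it on CIFAR-10, load the data with `tf.keras.datasets.cifar10.load_data()`, scale the images to [0, 1] and pass them to `model.fit`.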

Running this code displays the model summary and the training results.

Finally, the code for the residual block and the code for the simple network can be combined into a single program.