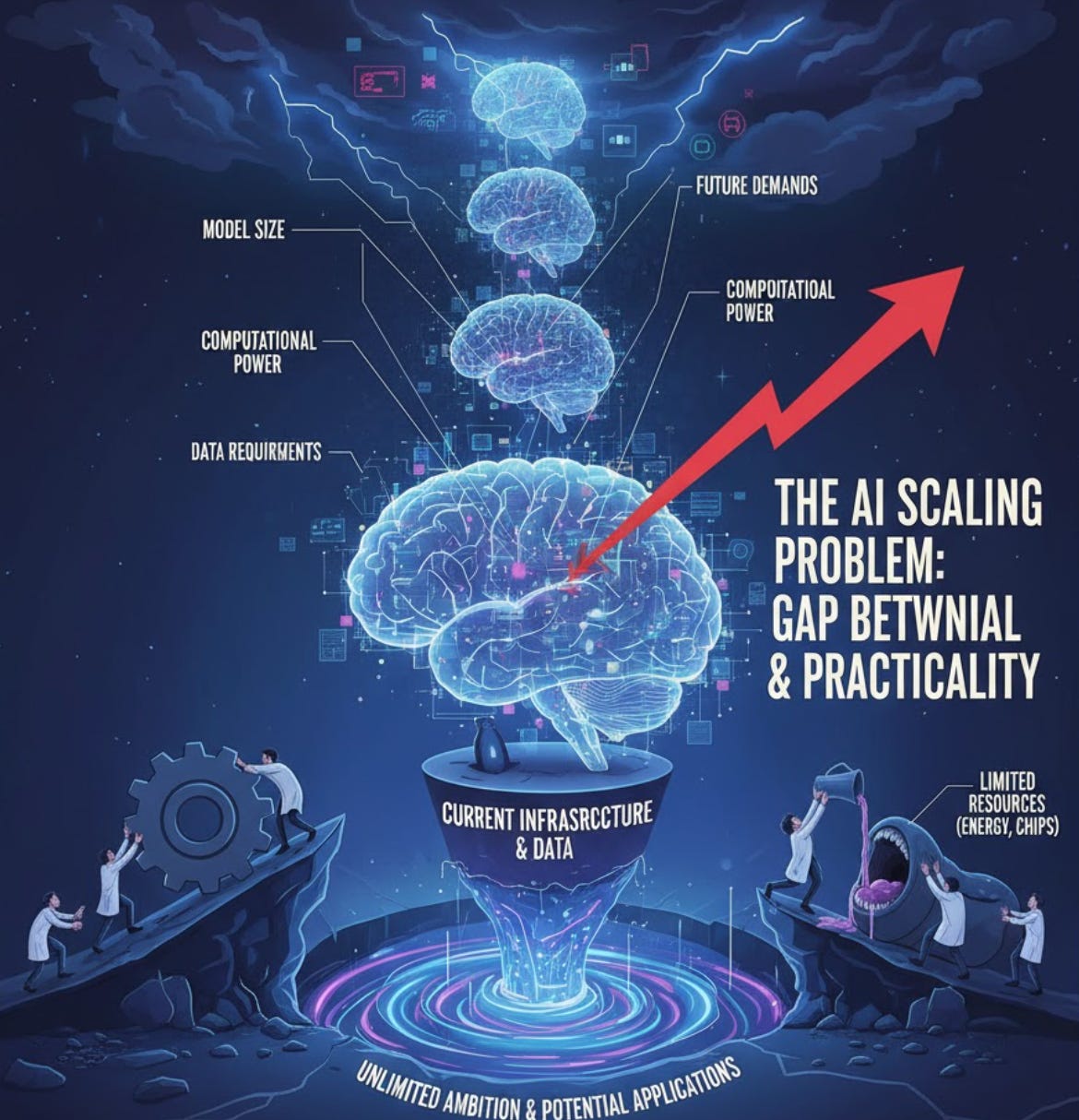

The AI Scaling Problem

How AI Grapples with Real-World Challenges in Pursuit of AGI

In the fall of 2025, artificial intelligence continues to dominate headlines with breathless announcements of breakthroughs. From OpenAI’s latest frontier model acing graduate-level exams in quantum physics to Anthropic’s Claude 4.0 generating novel protein structures that rival human biochemists, the narrative is clear: AI is scaling toward superhuman intelligence at an exponential pace. Social media amplifies this frenzy—tweets from industry leaders like Sam Altman hint at “transformative” capabilities just around the corner, while venture capital pours billions into compute clusters the size of small towns. Yet, beneath this veneer of progress lies a profound scaling problem: current AI systems excel at regurgitating pre-trained knowledge but falter spectacularly when confronted with the dynamic, data-sparse realities of the real world. This isn’t mere hype fatigue; it’s a fundamental mismatch between how we measure AI progress and what true artificial general intelligence (AGI) demands.

This article delves into the AI scaling problem, drawing on foundational critiques from AI researchers and recent advancements as of October 2025. We’ll unpack why benchmarks—those shiny scorecards of AI prowess—fall short, propose a learning-centric vision for AGI, and dissect three interlocking challenges: continual learning, single-stream experiential data, and compute-efficient scaling. By focusing on technical underpinnings, we’ll see how today’s large language models (LLMs) and reinforcement learning (RL) agents, despite their scale, are architecturally ill-equipped for lifelong adaptation. The goal? To illuminate a path where scaling isn’t about hoarding data but harnessing compute to mimic human-like learning efficiency.

The Benchmark Mirage: Application Without Acquisition

At the heart of the scaling illusion are benchmarks like MMLU (Massive Multitask Language Understanding), GPQA (Graduate-Level Google-Proof Q&A), and MATH, which have propelled models from GPT-4’s 2023-era scores of ~70% to near-human parity by mid-2025. These tests probe an AI’s ability to apply knowledge across domains: solving differential equations, debugging Rust code, or even hypothesizing climate models. Frontier models now surpass humans on many, with reports of Grok-3 achieving 92% on the 2025 ARC-AGI benchmark for abstract reasoning—a feat once deemed AGI-exclusive.

But here’s the rub: intelligence, per cognitive science definitions, isn’t task proficiency alone. It’s the ability to acquire and apply knowledge and skills (Gardner, 1983; updated in modern neuroscience as neuroplasticity-driven adaptation). Benchmarks overwhelmingly test the latter—static recall and pattern-matching from a frozen parameter set—while ignoring acquisition. Current LLMs are trained once on trillions of tokens scraped from the internet, then deployed as immutable oracles. Post-training, their “learning” is bolted-on: fine-tuning on niche datasets or in-context prompting with a handful of examples.

This creates a scaling trap. As models balloon to trillions of parameters (e.g., xAI’s rumored Grok-4 at 10T+ params in late 2025), they generalize within their training distribution but crumble outside it. Consider a 2025 study on LLM robustness: models like Llama 3.1 (405B) solve 85% of LeetCode mediums but fail 70% of custom problems involving underrepresented libraries like JAX or Equinox, requiring thousands of synthetic examples to adapt—far from human intuition after one tutorial. The result? AI that decodes whale songs or designs drugs in controlled settings but can’t iterate on a user’s personal writing style without exhaustive retraining.

Early AI pioneers recognized this. In the 1930s, Thomas Ross’s “thinking machine” and Stevenson Smith’s robotic rat emphasized iterative, experience-driven adaptation over encyclopedic knowledge. By the 2020s, however, the field had pivoted to “foundation models”: static giants pretrained on Big Data. This shift, fueled by the scaling laws of Kaplan et al. (2020) as revised by Hoffmann et al. (2022), equates progress with parameter count and FLOPs. Yet as data runs out (internet text is ~90% scraped by 2025, per Epoch AI estimates), we’re hitting walls: training runs now bottleneck on synthetic data quality, not compute alone.

Redefining AGI: A Continual, Goal-Driven Learner

To escape this quagmire, we must redefine AGI not as an “all-knowing” oracle but as a general-purpose learning algorithm—an agent that autonomously curates its data stream, pursues goals, and bootstraps knowledge from sparse interactions, much like biological intelligence. Humans don’t memorize the world’s libraries; we learn from a lifetime of ~10^9 sensory experiences, extracting causal patterns with mere dozens of examples via mechanisms like hippocampal replay and prefrontal abstraction.

In this vision, AGI is animal-inspired: a system that interacts with the environment in real-time, encoding episodic memories (timestamped event sequences) and temporally extended reasoning (e.g., “if I pivot my research after a failed startup, it informs my next video”). Training isn’t a one-off phase but a perpetual loop: observe-act-reflect-update. This aligns with 2025’s emerging “lifelong learning” paradigms, where agents like those from DeepMind’s 2025 Gato successor integrate RL with LLMs for open-ended exploration.

Why is this compelling? Efficiency. A human child masters language from ~10^4 conversational turns, not billions of tokens. Scaling here means amplifying learning capacity: more compute buys sharper abstractions from fewer samples, producing agents teachable via dialogue, not data dumps.

Challenge 1: Continual Learning – Battling Forgetting and Rigidity

The first hurdle is enabling models to learn indefinitely without erasure. Current LLMs are built in phases: pretrain → fine-tune → RLHF (reinforcement learning from human feedback) → deploy. Weights are frozen at deployment, so any further adaptation is brittle.

Enter catastrophic forgetting: fine-tuning on Task B erases Task A knowledge. In LLMs, this manifests as parameter drift in shared layers; a 2025 arXiv study on models under 10B params showed 40-60% accuracy drops on prior tasks after adapting to new ones, worsening with scale due to denser representations. A second issue is loss of plasticity, where repeated updates degrade the model’s gradient flow, akin to neural burnout. A July 2025 Scienceline report highlighted that unassisted deep nets “can’t learn forever,” requiring human-curated interventions like replay buffers (revisiting old data) or elastic weight consolidation (EWC), which penalizes changes to parameters that mattered for earlier tasks.
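To make the EWC idea concrete, here is a minimal sketch of its quadratic penalty in plain Python. The function name, the toy parameter values, and the per-parameter importance weights (standing in for a diagonal Fisher estimate) are illustrative assumptions, not taken from any particular library or from the studies cited above.

```python
def ewc_penalty(params, anchor_params, importance, strength):
    """Quadratic EWC-style penalty.

    Computes (strength / 2) * sum_i importance_i * (theta_i - anchor_i)^2,
    where anchor_params are the weights learned on the earlier task and
    importance approximates how much each weight mattered for that task.
    """
    return 0.5 * strength * sum(
        f * (p - a) ** 2
        for p, a, f in zip(params, anchor_params, importance)
    )


# Hypothetical two-parameter model: weight 0 mattered a lot for Task A,
# weight 1 barely mattered.
anchor = [1.0, -2.0]
importance = [10.0, 0.1]

# Moving the important weight by 1.0 is penalized 100x more than
# moving the unimportant one by the same amount.
cost_important = ewc_penalty([2.0, -2.0], anchor, importance, strength=1.0)
cost_unimportant = ewc_penalty([1.0, -1.0], anchor, importance, strength=1.0)
```

During training on Task B, this penalty would be added to the new task's loss, so gradient descent is steered toward changing weights that the earlier task did not depend on.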

Mitigations abound in 2025 literature. Function vector regularization (Zhang et al., ICLR 2025) stabilizes updates by projecting gradients orthogonal to “forgotten” directions, boosting retention by 25% on continual NLP benchmarks. Parameter-efficient techniques like LoRA (Low-Rank Adaptation) allow task-specific “adapters” without touching the core model, though they scale poorly beyond 100 tasks. For true continuity, hybrid approaches blend synaptic intelligence (tracking parameter importance) with generative replay (synthesizing past data on-the-fly).
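The adapter idea behind LoRA can be sketched in a few lines. The following is a toy, pure-Python illustration under stated assumptions: the helper names, the tiny matrices, and the 4096-wide layer used for the parameter count are hypothetical, and a real implementation would use a tensor library. It shows the frozen weight W plus a scaled low-rank update B·A, and why the adapter is cheap to store per task.

```python
def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha):
    """Compute W x + (alpha / r) * B (A x), with W frozen.

    A has shape (r, d_in) and B has shape (d_out, r); only A and B
    would be trained for a new task.
    """
    rank = len(A)
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


def lora_param_count(d_out, d_in, rank):
    """Trainable parameters in one adapter: r * (d_out + d_in)."""
    return rank * (d_out + d_in)


W = [[1.0, 0.0], [0.0, 1.0]]   # frozen base weight (identity, for clarity)
A = [[1.0, 1.0]]               # rank-1 down-projection
B_zero = [[0.0], [0.0]]        # zero init: adapter starts as a no-op
B_trained = [[0.5], [0.0]]     # hypothetical values after adaptation

y_before = lora_forward(W, A, B_zero, [2.0, 3.0], alpha=1.0)
y_after = lora_forward(W, A, B_trained, [2.0, 3.0], alpha=1.0)

# For an assumed 4096 x 4096 layer at rank 8, the adapter is 256x smaller.
full = 4096 * 4096
adapter = lora_param_count(4096, 4096, rank=8)
```

Because the base weights never change, earlier tasks are untouched. The cost is one adapter per task, which is where the poor scaling beyond many tasks comes from.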

Counterarguments persist: In-context learning (ICL)—feeding examples into prompts—mimics acquisition. GPT-4o and kin “learn” novel APIs from 5-10 shots, achieving 80% efficacy on unseen code. But ICL is ephemeral: knowledge evaporates post-context window (typically 128K tokens in 2025 models), and it’s limited to shallow patterns (e.g., no deep causal inference beyond linear analogies). A February 2025 Cranium AI analysis argues ICL caps at “next-token prediction,” far from AGI’s meta-cognition. For lifelong AGI, we need parametric updates that persist, not transient hacks.

Challenge 2: Single-Stream Experiential Learning – From Disjointed Data to Lifelong Narratives

LLMs train on “Big Data”: random samples from disjoint corpora (books, tweets, code repos) in massive batches (e.g., 1M+ sequences). This yields broad coverage but no temporal coherence—models never “live” a sequence longer than ~4K tokens.

Contrast humans: We process a single experiential stream, weaving observations into episodic memory for causal chaining (e.g., “failed startup → pivot to continual RL → this article”). Without this, AI can’t bootstrap niche expertise; your unique research trajectory—grad school debates, GitHub stars shy of 10K—eludes generic pretraining.

Technically, disjoint training hampers long-horizon reasoning. In RL terms, it’s like optimizing policies on i.i.d. episodes, ignoring autocorrelation. A 2025 Toloka blog notes distribution shifts amplify this: real-world streams drift (e.g., seasonal trends), causing 30-50% performance cliffs in continual setups.

Solutions? Shift to online RL: agents generate data via world interaction, maintaining a unified buffer. Techniques like hindsight experience replay (HER) relabel past trajectories with goals they actually achieved, while transformer-based memory modules (e.g., Perceiver IO variants) compress lifelong streams into queryable latents. Yet scaling these to LLM sizes remains unsolved: current prototypes (e.g., Google DeepMind’s RT-2, introduced in 2023) handle ~10^6 steps but choke on 10^9 without custom hardware.
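As a rough illustration of hindsight relabeling, the sketch below takes a trajectory that missed its intended goal and rewrites it as if the final state it reached had been the goal all along. The tuple layout, the function name, and the 1-D toy environment are assumptions made for this example; the sparse reward convention (0 on reaching the goal, -1 otherwise) follows the common HER setup.

```python
def relabel_with_hindsight(trajectory):
    """Relabel a trajectory using its final achieved state as the goal.

    trajectory: list of (state, action, next_state) tuples.
    Returns (state, action, next_state, goal, reward) tuples, where the
    reward is 0.0 when next_state equals the substituted goal, else -1.0.
    """
    achieved_goal = trajectory[-1][2]
    relabeled = []
    for state, action, next_state in trajectory:
        reward = 0.0 if next_state == achieved_goal else -1.0
        relabeled.append((state, action, next_state, achieved_goal, reward))
    return relabeled


# Hypothetical agent on a number line: it wanted to reach 5 but stopped
# at 3, so under the original goal every step earned -1.
failed_episode = [(0, +1, 1), (1, +1, 2), (2, +1, 3)]
replay_entries = relabel_with_hindsight(failed_episode)
rewards = [entry[4] for entry in replay_entries]
```

The relabeled episode now ends in a success for goal 3, so a failed attempt still yields a useful learning signal from the agent's own single stream of experience.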

Challenge 3: Compute-Centric Scaling – Beyond Data-Hungry Paradigms

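Scaling laws posit loss ∝ C^{-α}, where C is training compute. But they’re data-entangled: α≈0.05 for LLMs (Kaplan et al., 2020), and compute-optimal training grows the dataset in step with the model, roughly 3x more tokens for every 10x increase in FLOPs (Hoffmann et al., 2022; extended in 2025 DeepMind reports). In continual RL, this recipe fails: there is no static dataset to grow, and batching disrupts stream integrity.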
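A quick calculation shows how shallow a power law with α≈0.05 really is. The sketch below assumes the pure form loss ∝ C^{-α} with no irreducible-loss term, which is a simplification; the function names are illustrative.

```python
def loss_ratio(compute_multiplier, alpha):
    """Factor by which loss changes when compute is multiplied,
    assuming loss is proportional to C ** (-alpha)."""
    return compute_multiplier ** (-alpha)


def compute_multiplier_for(target_loss_ratio, alpha):
    """Compute multiplier needed to scale loss by target_loss_ratio."""
    return target_loss_ratio ** (-1.0 / alpha)


ALPHA = 0.05

# 10x more compute lowers loss by only about 11%.
ratio_10x = loss_ratio(10, ALPHA)                 # ~0.891

# Halving the loss takes 2**20, roughly a million times more compute.
needed = compute_multiplier_for(0.5, ALPHA)       # ~1.05e6
```

Under these assumptions, brute-force compute alone buys slow improvement, which is why the exponent itself (how efficiently an algorithm converts compute into learning) matters so much.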

The crux: Algorithms optimized for “big data” (e.g., AdamW with cosine annealing) overfit to noise in small, sequential samples. An October 2025 arXiv paper on RL scaling introduces “compute-optimal” schedules, swapping cosine for linear warmups to enable indefinite training, yielding 2x sample efficiency on Atari benchmarks.
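One reason cosine annealing sits awkwardly with indefinite training is that it needs the total number of steps in advance. The sketch below contrasts it with a linear-warmup-then-constant schedule, which has no horizon. The exact schedule used in the paper mentioned above is not given here, so the second function is an assumed stand-in, and all names and values are illustrative.

```python
import math


def cosine_lr(step, total_steps, peak_lr, min_lr=0.0):
    """Cosine annealing: decays from peak_lr to min_lr over a fixed
    horizon of total_steps, then stays at min_lr."""
    progress = min(step / total_steps, 1.0)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1 + math.cos(math.pi * progress))


def warmup_constant_lr(step, warmup_steps, peak_lr):
    """Linear warmup to peak_lr, then constant forever (no horizon)."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    return peak_lr


PEAK = 1e-3

# Past its planned horizon, the cosine schedule has nothing left to give.
lr_cosine_late = cosine_lr(5_000, total_steps=1_000, peak_lr=PEAK)

# The horizon-free schedule still learns at full rate a billion steps in.
lr_constant_late = warmup_constant_lr(10**9, warmup_steps=100, peak_lr=PEAK)
```

An agent learning from an open-ended stream cannot know `total_steps`, so any schedule that decays toward zero on a fixed timetable effectively switches learning off.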

Promising avenues include model-based RL (world models predict futures from observations, slashing real interactions by 10x) and auxiliary tasks (e.g., curiosity-driven prediction errors to densify rewards). Nathan Lambert’s 2025 Interconnects analysis extrapolates RL curves: with these, performance scales as power laws in compute alone, targeting 10^24 FLOPs for human-level robotics by 2030. Yet instabilities persist: overfitting in value-based RL calls for regularization such as SWAG (stochastic weight averaging-Gaussian).
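The curiosity idea can be illustrated with a toy forward model whose prediction error serves as an intrinsic reward. This is a deliberately simplified sketch: the tabular model, its update rule, and the class and method names are assumptions for illustration, whereas practical systems learn the forward model with a neural network.

```python
class ToyForwardModel:
    """Predicts the next (scalar) state for each (state, action) pair
    and reports its squared prediction error as a curiosity bonus."""

    def __init__(self, learning_rate=0.5):
        self.predictions = {}
        self.learning_rate = learning_rate

    def curiosity_bonus(self, state, action, next_state):
        key = (state, action)
        guess = self.predictions.get(key, 0.0)
        error = (next_state - guess) ** 2
        # Move the prediction toward what was actually observed.
        self.predictions[key] = guess + self.learning_rate * (next_state - guess)
        return error


model = ToyForwardModel()

# Repeating the same transition: the bonus shrinks as it becomes familiar.
bonuses = [model.curiosity_bonus(0, "right", 1.0) for _ in range(3)]

# A never-seen transition is surprising again.
novel_bonus = model.curiosity_bonus(7, "left", 2.0)
```

In an agent, the bonus would be added to the environment's reward with some weighting, giving a learning signal on every step even when external rewards are rare.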

Insiders whisper of data walls: Meta’s 2025 Llama runs were reportedly augmented with 50% synthetic data, yet gains plateaued. Humans thrive on sparse inputs; AI must too.

Forging Ahead: A Compute-Learning Revolution

As of October 2025, labs like Thinking Machines challenge OpenAI’s data-first dogma, advocating continual paradigms. Progress accelerates: ICLR 2025 papers tout 20% forgetting reductions, while RL scaling baselines predict breakthroughs by 2027.

The scaling problem isn’t insurmountable—it’s directional. By prioritizing agents that learn like us—from streams, continually, compute-smart—we inch toward AGI that augments humanity, not just mimics benchmarks. Researchers diving into PhDs on compute-efficient RL signal momentum; subscribe to this shift, and the future looks less like replacement, more like collaboration.
