The Strange Math That Predicts (Almost) Anything

How a 1906 feud in revolutionary Russia gave birth to Markov chains—the quiet equations behind nuclear weapons, Google search, and artificial intelligence.

In a world drowning in uncertainty—from the flip of a coin to the spread of a virus, from the ranking of a webpage to the next word in a sentence—one unlikely invention from over a century ago stands as a quiet oracle. It’s a branch of mathematics so deceptively simple it feels like a parlor trick, yet it underpins the explosions of nuclear bombs, the algorithms of trillion-dollar search engines, and the eerie prescience of artificial intelligence. This strange math, known as Markov chains, emerged not from a grand quest for knowledge but from a petty academic brawl in revolutionary Russia. It predicts the unpredictable by assuming the past is mostly forgettable, focusing only on the here and now. And in doing so, it has reshaped our understanding of randomness, dependence, and the hidden patterns in chaos.

To grasp its magic, consider a few riddles that have puzzled humanity for generations. How many riffle shuffles does it take to randomize a deck of 52 cards? (The answer, surprisingly, is just seven.) How much uranium-235 is needed to trigger a nuclear chain reaction capable of leveling a city? (Around 52 kilograms for a basic bomb, but simulating that threshold required revolutionary computation.) How does a machine guess your next email reply with uncanny accuracy? (By chaining probabilities from the words you’ve already typed.) And why does Google, with a single query, surface the exact page you didn’t know you were seeking? (By modeling the web as a probabilistic surfer’s wanderings.) These aren’t coincidences. They’re the fingerprints of Markov chains, a tool that turns dependence into prediction, complexity into clarity.

Born in 1906 amid the smoke of Russia’s failed revolution, this math was wielded as a weapon in a feud between two mathematicians whose worldviews clashed as violently as the politics around them. What began as a defense of free will ended up liberating probability from rigid assumptions, allowing us to model everything from weather patterns to stock trades. Over the next few thousand words, we’ll unravel its origins, mechanics, and triumphs—proving that sometimes, the strangest ideas predict almost anything.

The Revolutionary Rift: Probability Enters the Fray

Picture St. Petersburg in 1905: Tsar Nicholas II’s autocratic empire is fracturing under the weight of industrialization, famine, and war. Bloody Sunday sees troops gunning down unarmed protesters outside the Winter Palace, igniting strikes, mutinies, and socialist fervor. The air crackles with demands for reform—or revolution. In this tinderbox, even the ivory towers of academia ignite. Mathematicians, those guardians of abstract truths, pick sides. The Tsarists cling to the old order; the socialists dream of a new one. And at the heart of this intellectual civil war stands a debate over dice rolls and divine intent.

Enter Pavel Nekrasov, a towering figure in Russian mathematics, dubbed the “Tsar of Probability” for his dominance in the field. A devout Orthodox Christian and unyielding loyalist to the monarchy, Nekrasov saw math as a bridge to the divine. For two centuries, probability theory had rested on the Law of Large Numbers (LLN), a cornerstone laid by Swiss mathematician Jacob Bernoulli in 1713. The LLN states that if you repeat an experiment many times, like flipping a fair coin, the average outcome will converge to the expected value. Flip a coin 10 times, and you might get six heads and four tails, a wild deviation from 50/50. But after 1,000 flips? The split will typically land within a few percentage points of even, perhaps 510 heads and 490 tails. This “law” explained why casinos always win in the long run and why insurance companies thrive on aggregated risks.
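The convergence is easy to watch for yourself. Here is a minimal sketch, assuming a simulated fair coin and a fixed seed so the run is repeatable; the function name `heads_fraction` is made up for this illustration.

```python
import random

def heads_fraction(n_flips, seed=0):
    """Flip a simulated fair coin n_flips times; return the fraction of heads."""
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    heads = sum(rng.random() < 0.5 for _ in range(n_flips))
    return heads / n_flips

if __name__ == "__main__":
    # Small samples wander; large samples hug 0.5.
    for n in (10, 1_000, 100_000):
        print(f"{n:>7} flips: {heads_fraction(n):.4f} heads")
```

Each flip here is independent of the last, which is exactly the assumption Bernoulli's proof leaned on.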

Bernoulli’s proof, however, hinged on a crucial assumption: independence. Each trial must stand alone, uninfluenced by the last—like solitary coin flips or isolated guesses in a survey. Nekrasov, ever the philosopher-king, extended this aggressively. Gazing at social statistics—Belgian marriage rates from 1841 to 1845 averaging a steady 29,000 per year, or Russian crime and birth tallies showing similar stability—he declared victory for free will. If these human decisions (to wed, to steal, to procreate) followed the LLN, they must be independent acts of volition, unswayed by fate or society. To Nekrasov, this wasn’t just math; it was empirical proof of God’s design, a scientific bulwark against deterministic socialism. “Independence implies free will,” he thundered in his papers, using equations to evangelize the Tsarist status quo.

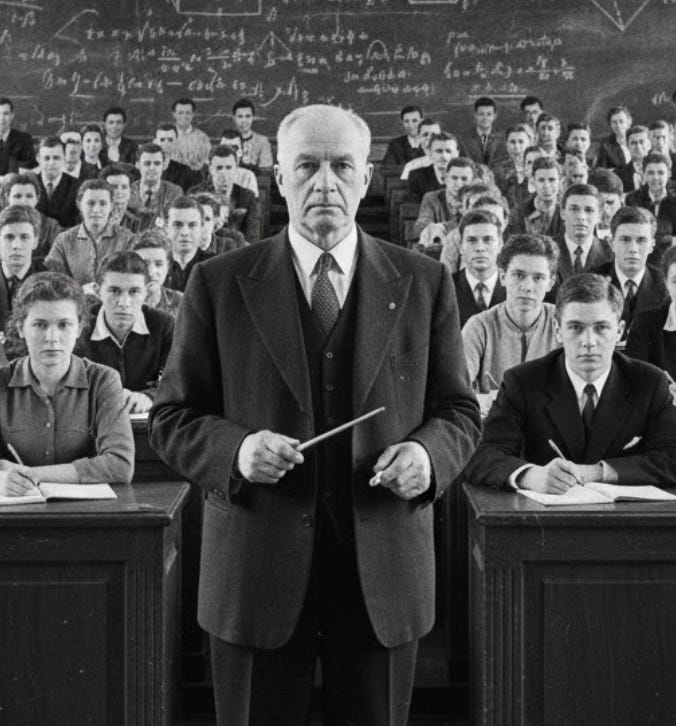

His nemesis? Andrey Andreyevich Markov, a brilliant but prickly atheist whose socialist leanings earned him the moniker “Andrey the Furious.” Markov had little patience for what he called the “abuses of mathematics”—fusing rigor with mysticism. Born into a minor noble family, he studied under the legendary Pafnuty Chebyshev and inherited a disdain for sloppiness. To Markov, Nekrasov’s leap from statistics to souls was not just wrong; it was dangerous, propping up an oppressive regime with pseudoscience. In 1906, amid the revolution’s hangover, Markov struck back in the Communications of the Mathematical Society. His weapon: a proof that the LLN holds even for dependent events. Convergence to the expected value didn’t require independence—it could emerge from chains of influence.

Markov’s coup de grâce was elegant and audacious. He turned to the Russian soul itself: Alexander Pushkin’s 1833 novel Eugene Onegin, a verse epic of love, duels, and existential drift. Stripping away spaces and punctuation, Markov analyzed the first 20,000 letters, tallying 43% vowels and 57% consonants. If letters were independent, vowel-vowel pairs should occur about 18% of the time (0.43 × 0.43). Reality? Just 6%. Dependencies abounded: vowels rarely cluster, and a consonant is followed by a vowel more often than by another consonant. From these counts Markov built a “prediction machine”: two states (vowel or consonant), linked by transition probabilities. From a vowel, the next letter was 13% likely another vowel, 87% a consonant. From a consonant: 67% vowel, 33% consonant. (The figures hang together: 0.43 × 0.13 ≈ 0.06, the observed vowel-vowel rate.)

Simulate this: Start at a random letter, generate a uniform random number between 0 and 1, and hop states accordingly. Early ratios swing wildly—60% vowels one moment, 30% the next. But after hundreds of steps? It settles at 43% vowels, 57% consonants—the exact empirical split. Dependence didn’t derail the LLN; it danced with it. Markov’s chains obeyed the law without isolation. “Thus, free will is not necessary to do probability,” he concluded with a smirk in his paper. Independence? Optional. Nekrasov was routed, his theological tower toppled. But Markov, ever the purist, shrugged off applications: “I am concerned only with questions of pure analysis.” Little did he know, his indifferent scribbles would ignite the 20th century.
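The simulation described above fits in a few lines. This is a sketch, not Markov's own procedure: it assumes the commonly reported figures from his Onegin count (a vowel follows a vowel about 12.8% of the time and follows a consonant about 66.3% of the time), and the names `P_VOWEL_AFTER` and `simulate_vowel_share` are invented for the example.

```python
import random

# Assumed transition probabilities (commonly reported values from
# Markov's Onegin tally): chance that the NEXT letter is a vowel,
# given whether the CURRENT letter is a vowel ("V") or consonant ("C").
P_VOWEL_AFTER = {"V": 0.128, "C": 0.663}

def simulate_vowel_share(n_steps, seed=0):
    """Walk the two-state chain and return the fraction of vowel states visited."""
    rng = random.Random(seed)
    state = "V" if rng.random() < 0.5 else "C"  # arbitrary starting letter
    vowels = 0
    for _ in range(n_steps):
        # Draw a uniform number in [0, 1) and hop according to the current state.
        state = "V" if rng.random() < P_VOWEL_AFTER[state] else "C"
        vowels += state == "V"
    return vowels / n_steps

if __name__ == "__main__":
    for n in (20, 500, 200_000):
        print(f"{n:>7} steps: {simulate_vowel_share(n):.3f} vowels")
```

Short runs swing widely, while long runs settle near 0.43 even though every letter depends on the one before it.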

Chains of Memory: The Engine of Prediction

At its core, a Markov chain is a probabilistic graph: a set of states connected by arrows weighted with transition odds. The “Markov property” is its secret sauce: the future depends only on the present, not the full history. It’s memoryless, like a goldfish plotting its next swim based solely on its current bubble. Formally, for states $S = \{s_1, s_2, \dots, s_n\}$, the transition matrix $P$ has entries $p_{ij} = P(\text{next state } s_j \mid \text{current } s_i)$, with rows summing to 1. Starting from an initial distribution $\pi_0$, the distribution over states after $t$ steps is $\pi_t = \pi_0 P^t$. For ergodic chains (irreducible and aperiodic), $\pi_t$ converges to a stationary distribution $\pi$, where $\pi = \pi P$: the long-run equilibrium.
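For the smallest interesting case, two states, the stationary distribution can be worked out by hand. The derivation below is a sketch with generic switching probabilities $a$ and $b$ (symbols introduced here, not taken from any particular source), followed by a made-up numerical example.

```latex
\[
P = \begin{pmatrix} 1-a & a \\ b & 1-b \end{pmatrix},
\qquad 0 < a, b < 1 .
\]
Writing $\pi = (\pi_1, \pi_2)$ with $\pi_1 + \pi_2 = 1$,
the first component of $\pi = \pi P$ reads
\[
\pi_1 = (1-a)\,\pi_1 + b\,\pi_2
\;\Longrightarrow\;
a\,\pi_1 = b\,(1 - \pi_1)
\;\Longrightarrow\;
\pi_1 = \frac{b}{a+b}, \quad \pi_2 = \frac{a}{a+b}.
\]
For example, $a = 0.2$ and $b = 0.1$ give $\pi = (1/3,\ 2/3)$.
```

The equilibrium depends only on the ratio of the two switching rates, not on where the chain started.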

This simplicity scales to infinity. Weather? States: sunny, rainy, cloudy. Tomorrow’s forecast hinges on today, ignoring last week’s drought. Stock prices? Model as price levels, with jumps based on current volatility. Epidemics? Infected, recovered, susceptible—transitions capture contagion without replaying every handshake. The beauty lies in approximation: instead of exact paths (exponentially explosive), sample thousands of chains via Monte Carlo methods, averaging outcomes. It’s why Markov chains power everything from Google Maps’ traffic predictions to Netflix’s binge recommendations.

Yet this memorylessness is no flaw—it’s a feature. Real systems often exhibit “Markovian approximation”: distant history fades, leaving the now-dominant. In Pushkin’s prose, grammar enforces local dependencies, but global themes emerge from aggregated letters. In physics, Brownian motion (a particle’s jittery dance) approximates a chain, ignoring ancient collisions. As one mathematician quipped, “Problem-solving is often a matter of cooking up an appropriate Markov chain.” But could this kitchen alchemy crack the atom?

Fission and Fantasy: Birth of the Monte Carlo Method

July 16, 1945: The New Mexico desert blooms with unnatural fire as the world’s first atomic bomb detonates, yielding 21 kilotons of fury from a plutonium core the size of a grapefruit. The Manhattan Project, a $2 billion wartime frenzy, had marshaled geniuses like J. Robert Oppenheimer and Enrico Fermi. But victory masked a gnawing puzzle: scaling up. Fissile uranium-235 was rarer than hen’s teeth, enriched agonizingly from natural ore. How much for a bomb? Too little, and neutrons fizzle out; too much, and it’s a reactor, not a weapon.

Enter Stanislaw Ulam, a Polish-Jewish mathematician whose whimsy masked brilliance. Post-war, as encephalitis sidelined him to bedridden boredom, Ulam tinkered with Solitaire. With 52 cards, there are 52! ≈ 8 × 10⁶⁷ possible deals—an astronomically vast state space. Analytic solution? Futile. But simulate 100 random shuffles, tally winnable games, and approximate the odds (about 1 in 20, as it turns out). Eureka: random sampling for intractable problems.

Back at Los Alamos, Ulam pitched this to John von Neumann, a polymath legendary for his feats of mental calculation. Neutrons weren’t independent coins; each fission released 2–3 more, with outcomes dependent on speed, position, and density. A chain reaction is a branching Markov tree: states like “traveling neutron,” transitions to “scatter” (bounce off a nucleus), “absorb” (dead end), or “fission” (spawn offspring). Probabilities vary with neutron energy and material; in an illustrative version, fast neutrons cause fission 20% of the time and slow ones just 1%. The goal: the multiplication factor k, the average number of next-generation neutrons produced per incident one. k < 1: dud. k = 1: steady burn. k > 1: exponential boom.

Von Neumann saw the fit: evolve full histories via chains, not snapshots. On the behemoth ENIAC—the first programmable electronic computer, a 30-ton tangle of vacuum tubes—they ran simulations. Seed a neutron’s position and velocity, step through absorptions and splits (up to trillions of paths per run), histogram k-values over 1,000 trials. For a 52-kg U-235 sphere, k hovered at 1.05—barely supercritical, enough for a 15-kiloton yield. Precision without pencil-pushing differentials.
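The flavor of such a calculation can be captured in a toy model. The sketch below is nothing like the real Los Alamos codes: it ignores geometry, energy, and scattering, and the fission probability is a made-up number, not a physical cross-section. It only illustrates how averaging many random neutron histories yields an estimate of k.

```python
import random

def offspring(rng, p_fission):
    """Neutrons produced by one incident neutron in this toy model."""
    if rng.random() < p_fission:
        return rng.choice((2, 3))  # a fission releases 2 or 3 new neutrons
    return 0                       # absorbed or escaped: this branch ends

def estimate_k(p_fission, n_trials=100_000, seed=0):
    """Monte Carlo estimate of the multiplication factor k."""
    rng = random.Random(seed)
    total = sum(offspring(rng, p_fission) for _ in range(n_trials))
    return total / n_trials

def classify(k, tol=0.02):
    """Label a k estimate, treating values within tol of 1 as critical."""
    if k < 1 - tol:
        return "subcritical"
    if k > 1 + tol:
        return "supercritical"
    return "critical"

if __name__ == "__main__":
    # Hypothetical fission probabilities, chosen only for illustration.
    for p in (0.20, 0.40, 0.42):
        k = estimate_k(p)
        print(f"p_fission={p:.2f}  k~{k:.3f}  {classify(k)}")
```

In this model the exact answer is k = 2.5 × p_fission, so the sampled estimate can be checked against it; real problems offer no such shortcut, which is the whole point of sampling.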

Ulam, evoking his gambler uncle, christened it the “Monte Carlo method”—roulette-wheel randomness for high-stakes bets. Declassified in 1948, it proliferated: Argonne National Lab optimized reactors; biologists simulated DNA folding; economists stress-tested markets. Today, it’s ubiquitous—climate models Monte Carlo greenhouse feedbacks, drug trials average molecular dances, even Bitcoin miners sample hashes. Ulam marveled: “A few scribbles on a blackboard” altered history. From Russian verse to atomic verse, Markov’s chains had gone nuclear.

Surfing the Web: PageRank’s Probabilistic Coup

By the mid-1990s, the internet was a toddler tantrum: explosive growth, zero order. Thousands of pages bloomed daily, a haystack sans needle. Early search engines like Yahoo (founded 1994 by Jerry Yang and David Filo) and Lycos ranked pages largely by keyword frequency: crude and spammable. Stuff a page with invisible “Viagra” repeats? Top billing. Quality? Irrelevant. Enter two Stanford PhD students, Sergey Brin and Larry Page, who in 1996 eyed the web not as documents, but as endorsements.

Libraries offered a clue: a book’s due-date stamps signaled popularity. Translate to hyperlinks: an incoming link is a vote of trust. But not all votes are equal; a blog linking to a thousand sites dilutes each endorsement. Brin and Page modeled this as a Markov chain: webpages as states, outgoing links as transitions. Probability from A to B? 1 over A’s out-degree. A “random surfer” starts anywhere, follows links 85% of the time (the damping factor), and teleports to a random page the other 15%, which averts dead-end traps. Long-run visit frequencies? Page importance scores.

Mathematically, for n pages, the transition matrix M is row-stochastic. The Google matrix G = 0.85M + 0.15(1/n)eeᵀ (e the all-ones vector) ensures ergodicity. PageRank is the row vector π satisfying π = πG, the dominant left eigenvector of G. It is solvable via power iteration: guess π₀, then iterate π_{k+1} = π_k G until convergence.
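Power iteration is short enough to sketch directly. The version below is a minimal illustration, not Google's production algorithm: it uses plain dictionaries instead of matrices, treats a page with no outgoing links as linking to every page, and runs on a four-page web whose link structure (and the names Cal and Dee) is assumed for the example, so its scores need not match any figures quoted elsewhere.

```python
def pagerank(links, damping=0.85, tol=1e-10, max_iter=1000):
    """Random-surfer scores by power iteration.

    links maps each page to the list of pages it links to; every target
    must itself be a key of links.
    """
    pages = sorted(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from the uniform guess
    for _ in range(max_iter):
        # Teleportation: every page receives an equal slice of (1 - damping).
        new = {p: (1.0 - damping) / n for p in pages}
        for p in pages:
            targets = links[p] or pages  # dangling page: jump anywhere
            share = damping * rank[p] / len(targets)
            for q in targets:
                new[q] += share
        # Stop once successive iterates barely differ.
        if sum(abs(new[p] - rank[p]) for p in pages) < tol:
            return new
        rank = new
    return rank

if __name__ == "__main__":
    # Hypothetical toy web: everyone links to Ben, Ben links to everyone else.
    web = {"Amy": ["Ben"], "Ben": ["Amy", "Cal", "Dee"],
           "Cal": ["Ben"], "Dee": ["Ben"]}
    for page, score in sorted(pagerank(web).items(), key=lambda kv: -kv[1]):
        print(f"{page}: {score:.3f}")
```

Each pass of the outer loop is one multiplication of the current row vector by G; the scores always sum to 1 because every page hands out exactly what it holds.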

Test on a toy web: four pages (Amy links to Ben; Ben to all; etc.). The surfer lingers longest on hubs like Ben (33% time), ignoring isolates. Spam farms? They loop internally but leak value outward, their votes worthless without reciprocation. The project began in 1996 as “BackRub” (for backlinks) and was renamed Google in 1997, a misspelling of “googol,” 10¹⁰⁰, evoking infinite indexability; the company was incorporated in 1998.

The impact? Cataclysmic. Yahoo, flush with $100 million from investor Masayoshi Son, bet on portals and ads. Google bet on superiority: one-click relevance. By 2002, it dethroned Yahoo; today, Alphabet’s market cap nears $2 trillion. PageRank resisted manipulation, birthing an ad empire atop quality. Critics grumbled—fewer clicks meant fewer ad views—but users flocked. As Page put it, “It’s a democracy that works,” weighting voices by merit. Markov’s memoryless surfer had mapped the world’s knowledge.

Words as Worlds: From Shannon’s Gibberish to AI Oracles

Markov’s Onegin chain begat another lineage: linguistic prophecy. In 1948, Claude Shannon, information theory’s godfather, pondered communication’s essence—entropy, the bits needed to surprise. To quantify English’s predictability, he built chains on letters, then words. Zero-order (uniform letters): “xfmlrw” nonsense. First-order (letter-to-letter): “wldmnl” semi-coherent. Second-order (digrams): “as a ee fishmna the ch” teasingly close. Words? “The head and in frontal attack on an English writer...”—phrases like “frontal attack” gleam amid drivel.

Shannon’s insight: prediction sharpens with context windows. The same word-level sample runs on to “the time of who ever told the problem for an unexpected,” where bursts of sense emerge. This autoregression, predicting the next token from the preceding ones, mirrors LLMs like GPT. Tokens (subwords, punctuation) form states; transformers add attention, weighting distant history (e.g., “cell” as biology, not prison, via “mitochondria” upstream). Yet the core is Markovian in form: P(next | previous k tokens).
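A word-level chain of the kind Shannon experimented with can be sketched in a few lines. This is an illustration under simple assumptions (whitespace-split words, a tiny made-up corpus, uniform sampling among observed followers), not a reconstruction of Shannon's procedure; `build_chain` and `generate` are names invented here.

```python
import random
from collections import defaultdict

def build_chain(words, order=1):
    """Map each run of `order` consecutive words to the words seen right after it."""
    chain = defaultdict(list)
    for i in range(len(words) - order):
        context = tuple(words[i:i + order])
        chain[context].append(words[i + order])
    return chain

def generate(chain, start, length, seed=0):
    """Extend `start` (a tuple of words) up to `length` words by sampling followers."""
    rng = random.Random(seed)
    out = list(start)
    order = len(start)
    while len(out) < length:
        followers = chain.get(tuple(out[-order:]))
        if not followers:  # context never seen in the corpus: stop early
            break
        # Followers that occurred more often appear more often in the list,
        # so a uniform choice respects the observed frequencies.
        out.append(rng.choice(followers))
    return out

if __name__ == "__main__":
    corpus = "the cat sat on the mat and the cat ran".split()
    chain = build_chain(corpus, order=1)
    print(" ".join(generate(chain, ("the",), 8)))
```

Raising `order` widens the context window: the output grows more fluent but also copies longer stretches of the source verbatim, the trade-off Shannon's samples hint at.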

Modern twist: scale. GPT-4, reported (though never officially confirmed) to have about 1.7 trillion parameters, chains enormous numbers of probabilities, trained on internet corpora. Gmail’s “Best regards” nudge? Conceptually, a low-order chain. Chatbots’ essays? High-order, with sampling strategies picking coherent paths. But perils lurk: train on AI slop, and chains collapse to “dull stable states” (repetitive loops), per 2023 studies. Feedback erodes diversity, like a language forgetting synonyms.

Still, it predicts profoundly: protein folding (AlphaFold chains amino transitions), music generation (next note from chord), even therapy bots chaining empathetic replies. Shannon’s gibberish evolved into Grok’s wit—Markov, unwittingly, scripted the talk of machines.

The Deck’s Disorder: Shuffling into Randomness

Humble origins demand humble proofs. Ulam’s Solitaire sparked the bomb; now, reverse: how random is a shuffled deck? Intuition screams 52 shuffles, one per card. Wrong. Persi Diaconis, a former magician turned Stanford statistician, modeled riffle shuffles (split, interleave) as Markov chains. States: 52! permutations. Transition: cut the deck roughly in half (the cut point is binomially distributed), then interleave by dropping cards from each packet with probability proportional to that packet’s remaining size.

Eigenvalue wizardry reveals mixing time—the steps to near-uniformity (total variation distance < 0.5). After one riffle: 2 rising sequences (ordered runs). Two: 4. Exponentially, seven yields 128—enough to blanket the space. Overhand shuffles? Sloppy, needing 2,000+ for parity. Casinos perfect-faro: 8–11. Diaconis’s 1992 paper, with Dave Bayer, used group theory; real hands vary, but seven’s the charm.
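The doubling of rising sequences can be checked by simulation. The sketch below assumes the standard Gilbert-Shannon-Reeds model of a riffle (binomial cut, drops weighted by packet size) and labels cards 0 to 51; it counts rising sequences but does not compute total variation distance, which needs the heavier machinery of the 1992 paper.

```python
import random

def riffle(deck, rng):
    """One riffle under the Gilbert-Shannon-Reeds model."""
    cut = sum(rng.random() < 0.5 for _ in deck)  # Binomial(n, 1/2) cut point
    left, right = deck[:cut], deck[cut:]
    out, i, j = [], 0, 0
    while i < len(left) or j < len(right):
        remaining_left = len(left) - i
        remaining_right = len(right) - j
        # Drop the next card from a packet with probability proportional
        # to the number of cards that packet still holds.
        if rng.random() * (remaining_left + remaining_right) < remaining_left:
            out.append(left[i])
            i += 1
        else:
            out.append(right[j])
            j += 1
    return out

def rising_sequences(deck):
    """Count rising sequences in a deck whose cards are labeled 0..n-1."""
    position = {card: idx for idx, card in enumerate(deck)}
    # A new sequence starts whenever card c+1 sits earlier than card c.
    return 1 + sum(position[c + 1] < position[c] for c in range(len(deck) - 1))

if __name__ == "__main__":
    rng = random.Random(0)
    deck = list(range(52))
    for k in range(1, 8):
        deck = riffle(deck, rng)
        print(f"after {k} riffles: {rising_sequences(deck)} rising sequences "
              f"(at most {min(2 ** k, 52)})")
```

A fresh deck has one rising sequence, and each riffle can at most double the count, which is why a handful of shuffles cannot reach most of the 52! orderings.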

This isn’t trivia—it’s cryptography (key generation), simulations (uniform priors), even voting (fair lotteries). Markov chains demystify: dependence breeds order, but iterated, yields chaos.

Horizons and Hazards: When Chains Break

For all its prescience, Markov’s math falters where memory lingers. Climate? CO₂ warms air, boosting water vapor—a greenhouse amplifier—spiraling beyond local states. Quantum entanglement defies locality. Human behavior? Habits chain, but epiphanies recall youth. Remedies: hidden Markov models (unobserved states), or hybrids with long memory (LSTMs in AI).

Yet extensions thrive: reinforcement learning (MDPs as state-action chains), epidemiology (COVID variants as mutating states). Quantum Markov chains simulate qubits; ecological ones track species cascades. In 2025, as AI ingests exabytes, chains underpin ethical filters—predicting bias propagation.

Legacy of a Feud: Predicting the Unpredictable

From Nekrasov’s pious probabilities to Markov’s furious chains, a 1906 spat birthed a paradigm. It liberated math from isolation, embracing the tangled web of reality. Nekrasov sought God’s will in numbers; Markov found freedom in dependence. Today, it shuffles our games, ranks our thoughts, arms our fears, and voices our dreams. In a universe of cascades, from neutrons to neurons, this strange math reminds us: to predict almost anything, remember just enough.
