Understanding time series forecasting

Time series forecasting is a specialized area of data analysis and machine learning focused on predicting future values of a variable based on its past observations.

Unlike other statistical or machine learning tasks that assume independence between data points, time series data inherently possesses a temporal order, meaning the sequence of observations is crucial and carries significant information.


What is a Time Series?

A time series is a sequence of data points indexed, or listed, in time order. Most commonly, a time series is a sequence taken at successive equally spaced points in time. Examples include daily stock prices, hourly temperature readings, monthly sales figures, or yearly population counts. The defining characteristic is that each data point is associated with a specific timestamp.

Consider a simple example: tracking the daily closing price of a particular stock. Each day, we record a single value, and these values, when ordered by date, form a time series.

Let’s illustrate this with a simple synthetic time series using Python. We’ll generate a series representing daily temperature over a month.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

# Set a random seed for reproducibility

np.random.seed(42)

We begin by importing the necessary libraries: pandas for data manipulation, numpy for numerical operations (like generating random data), and matplotlib.pyplot for plotting. Setting a random seed ensures that our synthetic data will be the same every time you run the code, which is helpful for consistent examples.

# Create a date range for 30 days

dates = pd.date_range(start='2023-01-01', periods=30, freq='D')

# Generate synthetic temperature data with a slight trend and noise

# Base temperature around 20 degrees, add a small increasing trend

# Add some random fluctuations (noise)

temperatures = 20 + np.arange(30) * 0.1 + np.random.normal(0, 1.5, 30)

Here, we create our time index using pd.date_range for 30 consecutive days starting from January 1, 2023. For the temperature data, we simulate a realistic scenario by starting with a base temperature, adding a slight linear increase over the 30 days (representing a subtle trend), and then incorporating random noise using np.random.normal to mimic real-world variability.

# Create a Pandas Series with dates as the index

daily_temperatures = pd.Series(temperatures, index=dates, name='Temperature')

# Display the first few entries to see the structure

print("First 5 entries of the daily temperature time series:")

print(daily_temperatures.head())

We then combine our generated temperatures and dates into a pandas.Series. A pandas.Series is an excellent data structure for time series data because it automatically handles the time index, making subsequent operations like plotting or resampling much easier. Printing the head of the series shows how the date acts as the index for each temperature reading.

# Plot the time series

plt.figure(figsize=(10, 6))

daily_temperatures.plot(title='Daily Temperature Time Series (Synthetic Data)',

xlabel='Date',

ylabel='Temperature (°C)',

grid=True)

plt.show()

Finally, we visualize our synthetic time series. Plotting a time series is crucial for initial exploration, allowing us to observe patterns like trends, seasonality, and irregular fluctuations. The x-axis represents time, and the y-axis represents the observed value. This simple line plot clearly illustrates the temporal progression of the data.

The Purpose of Time Series Forecasting

The primary purpose of time series forecasting is to make informed decisions about the future. By predicting how a variable will behave over time, businesses, governments, and researchers can:

Optimize Resource Allocation: Forecast demand for products to manage inventory, schedule staffing, or plan production.

Mitigate Risks: Predict financial market volatility or potential equipment failures to take proactive measures.

Inform Policy and Strategy: Forecast economic indicators (GDP, inflation) for policy-making, or predict disease spread for public health interventions.

Understand Underlying Dynamics: Analyzing past patterns can reveal insights into the processes generating the data.

Practical applications span various domains:

Business & Finance: Sales forecasting, stock price prediction, energy consumption forecasting, call center staffing.

Meteorology: Weather forecasting, climate modeling.

Econometrics: Predicting GDP, unemployment rates, inflation.

Industrial Processes: Predictive maintenance for machinery, quality control.

Marketing: Forecasting ad campaign effectiveness, website traffic.

Healthcare: Predicting patient admissions, disease outbreaks.

Time Series Forecasting vs. Other Regression Tasks: Key Distinctions

While forecasting involves predicting a numerical value, much like a standard regression problem (e.g., predicting house prices based on features like size, location, number of bedrooms), there are fundamental differences that necessitate specialized techniques for time series data. Ignoring these distinctions can lead to flawed models and inaccurate predictions.

Temporal Dependence (Autocorrelation):

Standard Regression: Assumes that observations are independent of each other. The prediction for one house price doesn’t directly depend on another house’s price in the training set (beyond shared features).

Time Series Forecasting: The most critical distinction is temporal dependence. The value at time t is highly dependent on values at t-1, t-2, and so on. This inherent correlation between successive observations is called autocorrelation. For example, today's temperature is very likely related to yesterday's temperature.

Order Matters:

Standard Regression: The order of rows in your dataset typically doesn’t matter. If you shuffle the rows of a house price dataset, the model will still learn the same relationships.

Time Series Forecasting: The chronological order of data points is paramount. Shuffling a time series destroys its inherent temporal structure and makes it impossible to forecast. Models must respect this sequence.

Future Prediction Only:

Standard Regression: Often used to estimate relationships between variables or predict existing outcomes (e.g., predicting the price of a house currently on the market). All features used for prediction are known at the time of prediction.

Time Series Forecasting: The goal is to predict future values of the variable itself. This means that any features used for prediction (exogenous variables) must also be known for the future, or themselves be forecasted.

Stationarity:

Standard Regression: Assumes that the underlying data-generating process is stable.

Time Series Forecasting: Many time series exhibit non-stationary behavior, meaning their statistical properties (like mean, variance, or autocorrelation) change over time. For example, a company’s sales might have an increasing trend over years. Many traditional time series models require the series to be stationary (or transformed to be so) for valid inference and accurate forecasting.
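To make the stationarity point concrete, here is a minimal sketch on made-up data. The series `sales`, the helper `half_means`, and the choice of first differencing as the transformation are all assumptions for illustration; differencing is only one common way to stabilize a drifting mean.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)

# Hypothetical monthly sales: steady upward trend plus noise (the mean drifts over time)
index = pd.date_range("2015-01-01", periods=96, freq="MS")
sales = pd.Series(100 + 2.0 * np.arange(96) + rng.normal(0, 3, 96), index=index)

# First differencing: the change from one month to the next
sales_diff = sales.diff().dropna()

def half_means(series):
    """Return the mean of the first half and of the second half of a series."""
    mid = len(series) // 2
    return series.iloc[:mid].mean(), series.iloc[mid:].mean()

print("Original   :", half_means(sales))       # the two halves differ widely
print("Differenced:", half_means(sales_diff))  # both halves sit close to the slope
```

Comparing the two halves is a rough visual-style check, not a formal stationarity test.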

Components of a Time Series

To better understand the patterns within a time series, it’s often helpful to decompose it into several underlying components. While we’ll delve into decomposition methods later, a high-level understanding is crucial from the outset:

Trend (T): This represents the long-term increase or decrease in the data over time. It’s the overall direction of the series, ignoring short-term fluctuations. For example, the growing global population exhibits an upward trend. In our synthetic temperature example, we added a slight upward trend.

Seasonality (S): These are regular, repeating patterns or cycles in the data that occur at fixed and known intervals. For instance, retail sales often peak during holidays, or electricity consumption might peak during certain hours of the day or seasons of the year. The length of a cycle is called the seasonal period (e.g., 7 days for weekly seasonality, 12 months for annual seasonality).

Residuals / Noise (R): Also known as the “remainder” or “irregular” component, this is what’s left after accounting for the trend and seasonal components. It represents the random, unpredictable fluctuations in the data that cannot be explained by trend or seasonality. This is often the part we aim to minimize in our models.

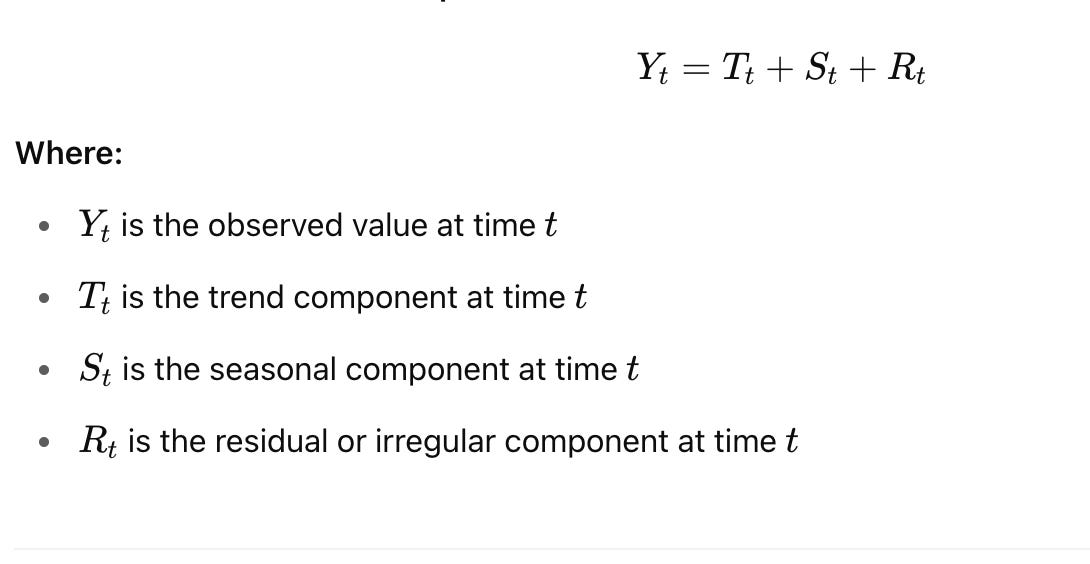

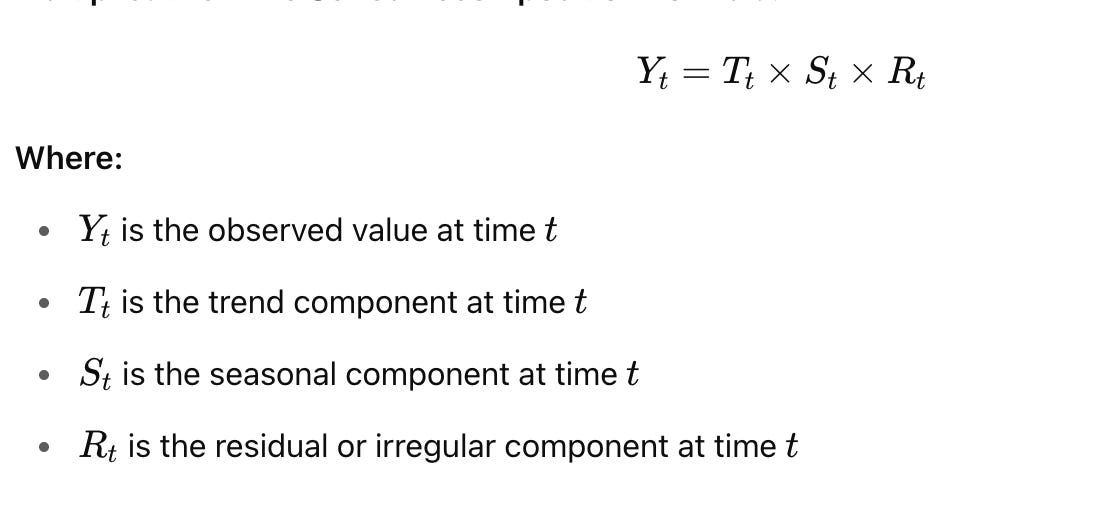

These components can be combined additively (e.g., Y = T + S + R) or multiplicatively (e.g., Y = T * S * R), depending on whether the magnitude of the seasonal fluctuations changes with the level of the series.
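The difference between the two combinations is easier to see with numbers. The sketch below builds one additive and one multiplicative series from the same hypothetical trend; all names and constants are invented for illustration, and the noise term R is left out so the seasonal swing is easy to read.

```python
import numpy as np
import pandas as pd

# Four years of hypothetical monthly data
index = pd.date_range("2020-01-01", periods=48, freq="MS")
t = np.arange(48)

trend = 100 + 5.0 * t                                 # T: steady growth
seasonal_add = 20 * np.sin(2 * np.pi * t / 12)        # S in the same units as Y
seasonal_mul = 1 + 0.2 * np.sin(2 * np.pi * t / 12)   # S as a factor around 1

y_add = pd.Series(trend + seasonal_add, index=index)  # Y = T + S
y_mul = pd.Series(trend * seasonal_mul, index=index)  # Y = T * S

# Largest deviation from the trend within each year
swing_add = (y_add - trend).abs().groupby(index.year).max()
swing_mul = (y_mul - trend).abs().groupby(index.year).max()
print(pd.DataFrame({"additive": swing_add, "multiplicative": swing_mul}))
```

In the additive series the yearly swing stays the same size, while in the multiplicative series it grows along with the trend.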

Python’s Prominence in Time Series Analysis

Python has emerged as the dominant programming language for data science, machine learning, and consequently, time series analysis and forecasting. Its popularity stems from several key advantages:

Versatility: Python is a general-purpose language, meaning it’s not limited to statistical computing. You can use it for data cleaning, web development, deploying models, and building entire applications, providing an end-to-end solution.

Rich Ecosystem of Libraries: Python boasts an incredibly extensive collection of open-source libraries specifically designed for data manipulation, statistical analysis, machine learning, and deep learning.

pandas: Indispensable for data manipulation, especially with time-indexed data.

NumPy: Provides powerful numerical computing capabilities.

Matplotlib and Seaborn: For data visualization.

SciPy: A collection of scientific computing tools.

statsmodels: Offers a wide array of statistical models, including classical time series models like ARIMA, ETS, and state-space models.

scikit-learn: A comprehensive machine learning library with tools for regression, classification, clustering, and more, which can be adapted for time series feature engineering.

Specialized Libraries: Libraries like Prophet (developed by Facebook) and pmdarima (auto-ARIMA) streamline common forecasting tasks.

Deep Learning Frameworks: TensorFlow and PyTorch enable the development of advanced neural network models, including Recurrent Neural Networks (RNNs) and Transformers, which are highly effective for complex time series patterns.

Community Support: A large and active community means abundant resources, tutorials, and quick resolution of issues.

Readability: Python’s syntax is often described as clear and intuitive, making it easier to learn and write maintainable code.

While languages like R have historically been strong in statistical computing, Python’s broader applicability and its robust machine learning and deep learning ecosystem make it the preferred choice for modern time series forecasting, especially when integrating with larger data pipelines or deploying models into production. This book will leverage Python extensively, providing practical, hands-on examples that build your proficiency in this powerful environment.

Pedagogical Progression of This Book

This book is structured to guide you through the exciting world of time series forecasting in a logical and progressive manner. We will begin with foundational concepts and simpler statistical models, gradually building complexity as we introduce more advanced techniques.

You will start by understanding the basic characteristics of time series data and classic statistical methods like Exponential Smoothing and ARIMA models, which form the bedrock of many forecasting solutions. As your understanding deepens, we will transition to machine learning approaches, where you’ll learn to engineer features from time series data and apply powerful algorithms. Finally, we will explore the cutting edge of time series forecasting with deep learning models, leveraging the capabilities of neural networks to capture intricate temporal dependencies and long-term patterns. This structured approach ensures that you build a strong conceptual and practical foundation, enabling you to tackle a wide range of real-world forecasting challenges.

What is a Time Series?

At its core, a time series is a sequence of data points indexed, or listed, in time order. This means that each data point in the series corresponds to a specific point in time, and the order of these points is crucial. Unlike other forms of data analysis where observations might be independent or arbitrarily ordered, in time series analysis, the temporal sequence is the primary independent variable and carries significant information.

Consider the daily closing price of a stock, the quarterly earnings of a company, or the hourly temperature readings from a sensor. In each case, the value observed at one point in time is often influenced by, and provides context for, values observed at previous points in time. This inherent temporal dependency is what fundamentally distinguishes time series data from other types of datasets, such as cross-sectional data (e.g., a survey of customer preferences at a single point in time) or panel data (which combines cross-sectional observations over time but often treats time as a separate dimension rather than the primary index).

Defining Characteristics of Time Series Data

While the definition is straightforward, understanding the nuances of time series data is key to effective analysis and forecasting.

Time-Ordered Sequence

The most critical characteristic is that the data points are ordered by time. This is not merely a convenience; it is a fundamental property. Changing the order of observations in a time series would fundamentally alter its meaning and the patterns it exhibits. For instance, scrambling the order of daily stock prices would render the data meaningless for financial analysis, as the progression of prices over time is what reveals trends, volatility, and market cycles.

Often Equally Spaced Intervals

Many time series, especially those amenable to traditional forecasting models, consist of observations taken at regular, equally spaced intervals. Examples include:

Hourly: Temperature readings, website traffic.

Daily: Stock prices, sales figures.

Weekly: Retail sales, energy consumption.

Monthly: Unemployment rates, inflation data.

Quarterly: Company earnings, GDP figures.

Annually: Population growth, agricultural yields.

The assumption of equally spaced data simplifies the application of many time series models, as it implies a consistent underlying sampling frequency.

However, it’s important to acknowledge that not all real-world time series are equally spaced. Data from event logs, irregular sensor readings (where data is only transmitted upon significant change), or financial trade data (where transactions occur at irregular intervals) are examples of unequally spaced or irregular time series. While this book will primarily focus on equally spaced time series due to their prevalence in many business and economic applications and the simpler modeling approaches, be aware that specialized techniques exist for handling irregular data, often involving resampling, interpolation, or event-based modeling.
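As a small illustration of the resampling and interpolation route, the sketch below turns a handful of irregular readings into a regular daily series. The timestamps and values are invented, and daily averaging with linear interpolation is just one reasonable choice among several.

```python
import pandas as pd

# Hypothetical sensor readings that arrive at irregular times
timestamps = pd.to_datetime([
    "2023-01-01 03:15", "2023-01-01 17:40",
    "2023-01-02 09:05",
    "2023-01-04 11:30", "2023-01-04 12:10", "2023-01-04 23:55",
])
readings = pd.Series([20.0, 22.0, 21.0, 24.0, 26.0, 25.0], index=timestamps)

# Resample to a regular daily grid: one mean value per calendar day
daily = readings.resample("D").mean()

# 2023-01-03 has no readings, so it is NaN; fill it by linear interpolation
daily_filled = daily.interpolate(method="linear")
print(daily_filled)
```

Whether interpolation is appropriate depends on the data: it suits slowly varying quantities such as temperature better than event counts.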

Temporal Dependencies and Autocorrelation

A defining feature of time series is that observations are typically not independent of each other. The value at the current time point often depends on values from previous time points. This phenomenon is known as autocorrelation — the correlation of a variable with itself over different time lags. For example, today’s stock price is highly correlated with yesterday’s price, and last month’s sales figures are likely to influence this month’s sales. This temporal dependency is precisely why traditional regression models, which often assume independent observations, are insufficient for time series forecasting. Time series models are specifically designed to capture and leverage these internal dependencies to make accurate predictions.
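Autocorrelation can be measured directly with pandas. The following sketch uses a simulated random walk as a stand-in for a price series (an assumption made purely for illustration) and compares it with the same values in shuffled order.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(1)

# Hypothetical daily price: a random walk, so each value builds on the previous one
index = pd.date_range("2023-01-01", periods=365, freq="D")
price = pd.Series(100 + rng.normal(0, 1, 365).cumsum(), index=index)

# The same values in random order: the temporal structure is gone
shuffled = pd.Series(rng.permutation(price.to_numpy()), index=index)

# Lag-1 autocorrelation: correlation between the series and itself shifted by one step
print("Ordered :", round(price.autocorr(lag=1), 3))
print("Shuffled:", round(shuffled.autocorr(lag=1), 3))
```

The ordered series should show a lag-1 autocorrelation close to 1, while the shuffled copy should be close to 0, which is why row order cannot be ignored in time series work.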

Representing Time Series Programmatically: The Time Index

When working with time series data in Python, especially with the powerful pandas library, the concept of the "time index" becomes paramount. pandas DataFrames and Series are ideal for handling time series because they allow for a dedicated DatetimeIndex. This index is not just a label; it's a specialized data type that enables powerful time-based operations like resampling, slicing by date ranges, and frequency analysis.

Let’s look at a simple example of how a time series might be structured in pandas.

import pandas as pd

# 1. Define a sequence of dates (our time index)

# We'll create daily dates for a week starting from January 1, 2023

dates = pd.to_datetime(['2023-01-01', '2023-01-02', '2023-01-03', '2023-01-04',

'2023-01-05', '2023-01-06', '2023-01-07'])

# 2. Define a sequence of values (our data points)

# Let's imagine these are daily website visitors

website_visitors = [1200, 1350, 1100, 1500, 1600, 1800, 1750]

# 3. Create a pandas Series with the dates as the index

# This is a fundamental way to represent a time series in Python

daily_visitors_ts = pd.Series(website_visitors, index=dates)

# Display the time series

print(daily_visitors_ts)

This first code chunk demonstrates how to create a basic time series using pandas. We define a list of dates, convert them into datetime objects suitable for a pandas index using pd.to_datetime(), and then pair them with a corresponding list of values. The pd.Series() constructor, when given both data and an index, automatically creates a time series where the dates serve as the explicit time-ordered labels for each data point.

# Check the type of the index to confirm it's a DatetimeIndex

print("\nType of index:", type(daily_visitors_ts.index))

print("Data type of index elements:", daily_visitors_ts.index.dtype)

This small addition verifies that pandas correctly recognized our dates and assigned a DatetimeIndex. This specialized index type is what unlocks pandas's powerful time series capabilities, allowing for efficient operations like selecting data by date ranges or resampling data to different frequencies (e.g., converting daily data to weekly averages).
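Here is a short sketch of those two operations. The first week of values matches the visitor example above; the second week is invented here so that the weekly resampling has more than one full week to work with.

```python
import pandas as pd

# Two weeks of daily visitor counts (second week is made up for illustration)
dates = pd.date_range("2023-01-01", periods=14, freq="D")
visitors = pd.Series(
    [1200, 1350, 1100, 1500, 1600, 1800, 1750,
     1300, 1400, 1250, 1550, 1650, 1900, 1850],
    index=dates,
)

# Select a date range by label; both endpoints are included
first_days = visitors.loc["2023-01-03":"2023-01-05"]
print(first_days)

# Downsample daily values to weekly averages (with "W", weeks end on Sunday)
weekly_mean = visitors.resample("W").mean()
print(weekly_mean)
```

Because January 1, 2023 is a Sunday, the first weekly bin contains only that single day, which is worth keeping in mind when reading weekly aggregates at the edges of a dataset.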

Real-World Examples of Time Series

Time series data is ubiquitous across virtually every domain. Recognizing these patterns in various contexts is the first step towards applying time series forecasting techniques.

Financial Markets:

Stock Prices: Daily, hourly, or even minute-by-minute closing prices, opening prices, high/low values for individual stocks or market indices (e.g., S&P 500).

Exchange Rates: Fluctuations of currency pairs (e.g., USD/EUR) over time.

Company Earnings: Quarterly or annual earnings per share, revenue, or profit figures.

Interest Rates: Daily or monthly changes in benchmark interest rates.

Economic Indicators:

Gross Domestic Product (GDP): Quarterly or annual economic output of a country.

Inflation Rates: Monthly or annual percentage change in consumer prices.

Unemployment Rates: Monthly percentage of the labor force that is unemployed.

Consumer Price Index (CPI): Monthly measure of the average change over time in the prices paid by urban consumers for a market basket of consumer goods and services.

Environmental and Climate Data:

Temperature Readings: Hourly, daily, or monthly average temperatures in a specific location.

Rainfall/Precipitation: Daily or monthly accumulated rainfall.

Air Quality: Hourly measurements of pollutants like PM2.5 or ozone.

Ocean Levels: Annual measurements of sea level rise.

Business and Retail:

Sales Data: Daily, weekly, or monthly sales volumes for products or services.

Website Traffic: Hourly or daily unique visitors, page views, or conversion rates.

Customer Service Calls: Number of calls received per hour or day.

Inventory Levels: Daily or weekly counts of goods in stock.

Healthcare and Medical:

EKG/ECG Readings: Continuous measurements of electrical activity of the heart.

Blood Pressure Monitoring: Hourly or daily readings for patients.

Disease Incidence: Weekly or monthly counts of new disease cases (e.g., flu outbreaks).

Internet of Things (IoT) and Sensor Data:

Smart Home Devices: Energy consumption, temperature, or humidity readings from connected devices.

Industrial Sensors: Pressure, temperature, vibration data from machinery for predictive maintenance.

Vehicle Telemetry: Speed, location, engine performance data over time.

This diverse range of examples underscores the universality of time series data and the broad applicability of forecasting techniques across various industries and scientific disciplines.

Introducing the Johnson & Johnson Earnings Dataset

Throughout this book, we will frequently refer to and analyze the Johnson & Johnson (J&J) quarterly earnings per share (EPS) dataset. This dataset is a classic example in time series analysis due to its clear and illustrative patterns. It tracks the quarterly earnings per share for the pharmaceutical and consumer goods giant Johnson & Johnson from 1960 to 1980.

Let’s load this dataset (or a similar representative one for illustration) and visualize it to understand its key properties. For this introductory section, we will use a simplified approach to loading data, assuming it’s available in a common format like a CSV file.

import matplotlib.pyplot as plt

import pandas as pd

# Load the Johnson & Johnson earnings dataset

# Assuming 'jj_earnings.csv' is in a 'data' subdirectory

# The 'Quarter' column contains dates, and 'Earnings' contains the EPS values.

try:

    jj_data = pd.read_csv('data/jj_earnings.csv', index_col='Quarter', parse_dates=True)

except FileNotFoundError:

    print("jj_earnings.csv not found. Please ensure the data file is in the 'data' directory.")

    # Create a synthetic dataset if the real one isn't available for demonstration

    # 84 quarter-start dates (Jan, Apr, Jul, Oct) covering 1960 through 1980

    dates = pd.date_range(start='1960-01-01', periods=84, freq='QS-OCT')

    earnings = [

        0.71, 0.63, 0.85, 0.44, 0.61, 0.69, 0.92, 0.55, 0.72, 0.77, 0.92, 0.60,

        0.83, 0.80, 1.00, 0.67, 0.92, 0.95, 1.05, 0.76, 1.03, 1.08, 1.21, 0.88,

        1.16, 1.25, 1.45, 1.05, 1.30, 1.45, 1.74, 1.25, 1.55, 1.60, 2.07, 1.62,

        1.71, 1.86, 2.36, 1.70, 1.91, 2.15, 2.79, 2.00, 2.22, 2.50, 3.23, 2.37,

        2.60, 2.90, 3.65, 2.80, 3.10, 3.50, 4.30, 3.40, 3.60, 4.00, 4.96, 4.00,

        4.30, 4.70, 5.70, 4.60, 5.30, 6.00, 7.20, 6.00, 7.10, 8.50, 10.00, 9.00,

        10.00, 11.50, 13.00, 12.00, 14.00, 16.00, 18.00, 20.00, 23.00, 26.00, 30.00, 35.00

    ]

    jj_data = pd.Series(earnings, index=dates, name='Earnings')

    jj_data = pd.DataFrame(jj_data)  # Convert to DataFrame for consistency

    print("Using synthetic J&J-like data for demonstration.")

# Display the first few rows of the dataset

print("First 5 rows of Johnson & Johnson Earnings:")

print(jj_data.head())

This code snippet prepares the J&J dataset for analysis. It attempts to load a CSV file, which is a common way to obtain real-world data. Crucially, index_col='Quarter' and parse_dates=True tell pandas to use the 'Quarter' column as the DatetimeIndex, ensuring the data is correctly recognized as a time series. A fallback synthetic dataset is included for robustness, allowing the code to run even if the specific CSV isn't present, mimicking the real data's characteristics. Displaying .head() allows us to quickly inspect the structure and ensure the time index is correctly set.

Now, let’s visualize the data:

# Plot the time series

plt.figure(figsize=(12, 6)) # Set the figure size for better readability

plt.plot(jj_data.index, jj_data['Earnings'], marker='o', linestyle='-', markersize=4)

plt.title('Johnson & Johnson Quarterly Earnings Per Share (1960-1980)')

plt.xlabel('Year')

plt.ylabel('Earnings Per Share ($)')

plt.grid(True) # Add a grid for easier reading of values

plt.xticks(rotation=45) # Rotate x-axis labels for better visibility

plt.tight_layout() # Adjust layout to prevent labels from overlapping

plt.show()

This plotting code uses matplotlib to generate a line plot, which is the standard way to visualize a time series. The jj_data.index (our DatetimeIndex) is used for the x-axis, and the 'Earnings' column for the y-axis. Markers and line styles are added for clarity, and standard plotting enhancements like titles, labels, and a grid are applied to make the visualization informative.

Visual Properties of the J&J Dataset

Upon examining the plot of the Johnson & Johnson earnings, several key characteristics become immediately apparent:

Trend: There is a clear upward trend over the entire period. Earnings per share generally increased from 1960 to 1980, indicating consistent growth for the company. This suggests that future earnings are likely to be higher than past earnings, a critical piece of information for forecasting.

Seasonal Behavior: Within each year, there’s a distinct pattern. For most of the series, earnings rise through the first three quarters, peak in the third quarter (Q3), and then drop in the fourth quarter (Q4). This repeating pattern within a fixed period (a year, in this case) is known as seasonality. For a business, this often reflects seasonal demand for products or fiscal reporting cycles.

Increasing Variability: As time progresses and the overall earnings increase, the magnitude of the seasonal fluctuations also appears to increase. The peaks and troughs become more pronounced in later years. This suggests that the variance (or standard deviation) of the series is not constant over time, a property known as heteroscedasticity. This is an important consideration for more advanced forecasting models.
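The growing spread can be checked numerically as well as visually. The sketch below copies two three-year windows (1960 to 1962 and 1970 to 1972) from the fallback list in the loading code above and compares the within-year range of the quarterly values. Using the range as the measure of spread is a simplification chosen for readability.

```python
import pandas as pd

# Quarterly values copied from the fallback list above
early = [0.71, 0.63, 0.85, 0.44, 0.61, 0.69, 0.92, 0.55, 0.72, 0.77, 0.92, 0.60]
later = [1.91, 2.15, 2.79, 2.00, 2.22, 2.50, 3.23, 2.37, 2.60, 2.90, 3.65, 2.80]

# Four quarters per year
years = [1960] * 4 + [1961] * 4 + [1962] * 4 + [1970] * 4 + [1971] * 4 + [1972] * 4
df = pd.DataFrame({"year": years, "eps": early + later})

# Level (mean) and spread (max minus min) of the four quarters in each year
by_year = df.groupby("year")["eps"]
summary = pd.DataFrame({
    "mean": by_year.mean(),
    "range": by_year.max() - by_year.min(),
})
print(summary.round(2))
```

The within-year range in the 1970s window is more than double that of the early 1960s, in line with the widening peaks and troughs seen in the plot.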

These visual properties — trend, seasonality, and changing variability — are common in many real-world time series and will be central to the forecasting techniques explored in later sections.

Why is Time Series Forecasting Different?

You might wonder why we need specialized techniques for time series forecasting when standard regression methods can predict a dependent variable based on independent variables. The crucial distinction lies in the temporal dependence we discussed earlier.

In typical regression problems, observations are often assumed to be independent and identically distributed (i.i.d.). This means that the value of one observation does not influence another, and all observations come from the same underlying distribution. However, for time series data, this assumption is fundamentally violated.

The past values of a time series directly influence its future values. This inherent autocorrelation means that simply using time as an independent variable in a standard linear regression model often fails to capture the complex temporal dynamics. Such models might provide a general trend but would likely miss crucial patterns like seasonality or the impact of recent fluctuations. Time series forecasting models are specifically designed to explicitly account for these dependencies, leveraging the rich information contained within the sequence of observations to make more accurate and robust predictions.
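A quick experiment shows what a time-only regression leaves behind. The data below is synthetic (a linear trend plus a 12-step seasonal pattern and a little noise, all assumed for illustration), and the model is a plain least-squares line fitted with `np.polyfit`.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(2)

# Hypothetical monthly series: linear trend + yearly seasonal pattern + small noise
t = np.arange(120)
y = 50 + 0.5 * t + 10 * np.sin(2 * np.pi * t / 12) + rng.normal(0, 1, 120)

# Ordinary least-squares line with time as the only predictor
slope, intercept = np.polyfit(t, y, deg=1)
residuals = pd.Series(y - (slope * t + intercept))

# If the line had captured everything, the residuals would be uncorrelated.
# Here they still repeat every 12 steps, which shows up at lag 12.
print("Fitted slope:", round(slope, 3))
print("Residual autocorrelation at lag 12:", round(residuals.autocorr(lag=12), 3))
```

The line recovers the general trend, but the strong lag-12 autocorrelation in its residuals shows that the seasonal structure was never modeled.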

Components of a Time Series

Time series data, by its very nature, is a collection of observations recorded over time. While a simple line plot can show the overall movement, a deeper understanding often requires us to break down the series into its fundamental building blocks. This process, known as time series decomposition, helps us identify and isolate the distinct patterns that contribute to the observed data. Understanding these components is crucial for diagnosing the behavior of a series, making informed forecasting decisions, and developing robust models.

The Observed Time Series

Before diving into components, let’s establish what we mean by the “observed time series.” This is simply the raw, actual data points collected over time, often denoted as $Y_t$, where $t$ represents a specific point in time. For instance, the monthly sales figures for a retail store, the daily temperature readings in a city, or the hourly electricity consumption of a building are all observed time series.

Trend

The trend component captures the long-term, underlying direction or movement of a time series. It represents the persistent increase, decrease, or stagnation in the data over a significant period.

Characteristics:

Long-term: Trends are not short-term fluctuations but reflect patterns spanning multiple years, decades, or even longer.

Directional: They indicate whether the series is generally moving upwards (e.g., growing economy, increasing population), downwards (e.g., declining product sales, decreasing birth rates), or remaining relatively constant.

Not Necessarily Linear: While a linear trend (straight line) is common, trends can also be non-linear (e.g., exponential growth, S-shaped curves, parabolic movements).

Influenced by Macro Factors: Trends are often driven by broad societal, economic, technological, or demographic changes. For example, the increasing adoption of e-commerce might show an upward trend in online sales, while a shift in consumer preferences could show a downward trend for a specific product category.

Real-world Applications: Identifying trends is vital for strategic planning. A business needs to know if its market is growing or shrinking; a government needs to understand population growth trends to plan infrastructure.

Common Pitfalls: Confusing a short-term fluctuation with a long-term trend. A temporary dip in sales due to an unusual event is not a trend, but a sustained decline over several years likely is.

Seasonality

The seasonal component refers to patterns that repeat over a fixed, known period. These patterns are regular, predictable, and occur within a specific timeframe, such as a day, week, month, quarter, or year.

Characteristics:

Fixed Period: The pattern repeats at regular intervals. For example, hourly electricity consumption might peak in the afternoon every day, or retail sales might surge every December.

Recurring: The pattern is consistent across different cycles. The peak in sales happens every December, not just some Decembers.

Predictable: Because the period is fixed, the seasonal pattern can be anticipated.

Driven by Calendar/Climate: Seasonality is often influenced by calendar events (holidays, academic terms), weather patterns (temperature, rainfall), or business cycles (weekly payroll, monthly billing).

Real-world Applications: Understanding seasonality is critical for inventory management (stocking up before peak season), staffing decisions (hiring extra help for holiday rushes), and resource allocation (predicting energy demand).

Distinction: Seasonality vs. Cyclicity:

Seasonality: Fixed, known period (e.g., 12 months, 7 days).

Cyclicity: Fluctuations that are not of a fixed period, often longer than a year, and can vary in length and amplitude. Business cycles (recessions, expansions) are prime examples. A common pitfall is to confuse a business cycle (e.g., a 5–7 year economic boom-bust cycle) with true seasonality. While both are recurring, only seasonality has a predictable, fixed duration.
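Because seasonality has a fixed period, a simple way to look at it is to average observations by their position in the cycle. The sketch below does this by calendar month on made-up retail data with a December surge; the data and the size of the bump are assumptions for illustration.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(3)

# Hypothetical monthly retail sales over five years
index = pd.date_range("2018-01-01", periods=60, freq="MS")
sales = pd.Series(200 + rng.normal(0, 5, 60), index=index)

# Add the same bump every December
sales.loc[index.month == 12] += 80

# Average by calendar month across all years: the seasonal profile
monthly_profile = sales.groupby(sales.index.month).mean()
print(monthly_profile.round(1))
print("Peak month:", monthly_profile.idxmax())
```

This works only because the period (12 months) is known in advance. A business cycle of varying length has no fixed position to group by, so the same trick would not apply.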

Residuals (Noise or Irregular Component)

The residual component, also known as the noise or irregular component, represents the random, unpredictable fluctuations in the time series that cannot be explained by the trend or seasonal patterns.

Characteristics:

Random: Ideally, residuals should exhibit no discernible pattern, meaning they are random and independent of each other.

Unexplained: They capture the variability in the data that is left over after accounting for trend and seasonality.

Often White Noise: In a well-decomposed series, the residuals should resemble “white noise” — a sequence of random variables with zero mean, constant variance, and no autocorrelation (no correlation with past values).

Contains Anomalies: Unusual events, outliers, or unforeseen circumstances (e.g., a sudden product recall, a natural disaster, a unique marketing campaign) will often show up as large spikes or deviations in the residual component.

Real-world Applications:

Model Assessment: If a forecasting model adequately captures trend and seasonality, the residuals of the model’s errors should resemble white noise. Any remaining patterns in the residuals indicate that the model is incomplete or has missed important information.

Anomaly Detection: Large deviations in residuals can signal unusual events or anomalies that warrant further investigation.
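As a rough illustration of the anomaly-detection idea, the sketch below flags residuals that lie far from the bulk of the data. The synthetic residuals, the injected spike at position 120, and the threshold of 3 robust standard deviations are all assumptions chosen for illustration, not recommended defaults.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical residuals: white noise with one injected anomaly
residuals = rng.normal(loc=0.0, scale=1.0, size=200)
residuals[120] += 8.0  # simulate an unusual event

# Robust scale estimate: median absolute deviation (MAD), rescaled so it is
# comparable to a standard deviation when the data are roughly normal
median = np.median(residuals)
mad = np.median(np.abs(residuals - median))
robust_std = 1.4826 * mad

# Flag points more than 3 robust standard deviations from the median
threshold = 3.0  # assumed cut-off, tune for your data
anomaly_idx = np.flatnonzero(np.abs(residuals - median) > threshold * robust_std)
print("Flagged positions:", anomaly_idx)
```

A median-based scale is used here so that the anomaly itself does not inflate the yardstick it is measured against; a plain standard deviation would also work for a quick check.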

Time Series Decomposition: Breaking Down the Series

Time series decomposition is the statistical task of breaking down a time series into these underlying components. This process simplifies the analysis and forecasting of complex time series by allowing us to study each component independently.

The Concept of Decomposition

The goal of decomposition is to separate the observed series $Y_t$ into its constituent parts: trend ($T_t$), seasonal ($S_t$), and residual ($R_t$). This allows us to understand the individual contributions of each pattern to the overall series behavior.

For example, if you’re analyzing monthly retail sales, decomposition can tell you:

Is the overall sales volume increasing or decreasing over the years (trend)?

Are there predictable spikes in sales every December and dips every January (seasonality)?

What are the unpredictable variations left after accounting for these patterns (residuals)?

Additive vs. Multiplicative Models

The way these components are combined to form the observed series defines the type of decomposition model used. The choice between an additive and a multiplicative model is crucial and depends on how the amplitude of the seasonal fluctuations changes over time.

Additive Model:

Characteristics: In an additive model, the magnitude of the seasonal fluctuations (and residuals) remains roughly constant over time, regardless of the level of the trend. The seasonal component adds a fixed amount to the trend.

When to Use: This model is appropriate when the variation around the trend does not increase or decrease with the level of the series. For example, monthly sales might vary by approximately ±1,000 dollars around the trend whether the trend is at 10,000 or 100,000 dollars. This often applies to phenomena where the factors causing seasonality are independent of the magnitude of the series itself (e.g., fixed holiday effects).

Multiplicative Model:

Characteristics: In a multiplicative model, the magnitude of the seasonal fluctuations (and residuals) increases or decreases proportionally with the level of the trend. The seasonal component multiplies the trend.

When to Use: This model is suitable when the amplitude of the seasonal pattern grows or shrinks as the overall level of the series changes. For example, if monthly sales vary by ±10% of the trend, the actual dollar variation is much larger when sales are at 100,000 dollars than when they are at 10,000 dollars. This is common in economic series like GDP, sales, or population, where growth tends to be roughly exponential.
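Using the notation introduced earlier ($Y_t$ observed, $T_t$ trend, $S_t$ seasonal, $R_t$ residual), the two models are conventionally written as follows:

```latex
\begin{align}
\text{Additive:} \quad & Y_t = T_t + S_t + R_t \\
\text{Multiplicative:} \quad & Y_t = T_t \times S_t \times R_t
\end{align}
```

In the additive form, $S_t$ and $R_t$ are in the same units as $Y_t$ and fluctuate around zero. In the multiplicative form, they are unitless factors that fluctuate around one.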

Choosing the Right Model:

Visually inspect the time series plot. If the seasonal fluctuations appear to grow wider or narrower as the series progresses (heteroscedasticity), a multiplicative model is likely more appropriate. If the fluctuations remain relatively constant in width, an additive model is better. If in doubt, try both and compare the clarity of the decomposed components and the characteristics of the residuals. A common trick for multiplicative series is to apply a logarithmic transformation, which can transform a multiplicative relationship into an additive one, allowing the use of additive decomposition methods on the transformed data.
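The logarithm trick can be checked numerically. The following is a minimal sketch on made-up components (the trend, seasonal factor, and noise factor are all assumptions for illustration): the log of the product equals the sum of the logs, and the seasonal swing that grows on the original scale stays about the same size on the log scale.

```python
import numpy as np

# Hypothetical multiplicative components (all strictly positive)
t = np.arange(48)                                   # 4 years of monthly data
trend = 50.0 + 2.0 * t
seasonal = 1.0 + 0.2 * np.sin(2 * np.pi * t / 12)   # factor around 1
noise = np.random.default_rng(1).normal(1.0, 0.02, t.size)  # factor around 1

series = trend * seasonal * noise

# Taking logs turns the product into a sum of log-components
log_series = np.log(series)
log_sum = np.log(trend) + np.log(seasonal) + np.log(noise)
print("log(product) == sum of logs:", np.allclose(log_series, log_sum))

# Swing (max minus min) within the first and the last year, on both scales
swing_first_year = series[:12].max() - series[:12].min()
swing_last_year = series[-12:].max() - series[-12:].min()
log_swing_first = log_series[:12].max() - log_series[:12].min()
log_swing_last = log_series[-12:].max() - log_series[-12:].min()

print("Original scale:", round(swing_first_year, 1), "->", round(swing_last_year, 1))
print("Log scale:     ", round(log_swing_first, 3), "->", round(log_swing_last, 3))
```

After decomposing the log-transformed series additively, the components can be mapped back to the original scale with np.exp.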

Common Decomposition Algorithms

While the underlying concept is simple, various algorithms are used to perform decomposition:

Moving Averages: A simple and intuitive method where the trend is estimated by smoothing the series using a moving average. Seasonality is then derived by averaging the detrended values for each position in the seasonal cycle (e.g., all Januaries, all Februaries, and so on).

STL (Seasonal-Trend decomposition using Loess): A more robust and versatile method that uses local regression (LOESS) to estimate the trend and seasonal components. It can handle various types of seasonality and is less sensitive to outliers than traditional moving average methods.

X-11/X-13 ARIMA-SEATS: Sophisticated and widely used methods developed by the U.S. Census Bureau. These are complex seasonal adjustment programs often used for official economic statistics.
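To make the moving-average method concrete, here is a minimal hand-rolled sketch of an additive decomposition using only pandas and numpy. It follows the classical recipe in spirit and is not a reimplementation of any particular library routine. The synthetic series, its slope, and its amplitude are assumptions for illustration.

```python
import numpy as np
import pandas as pd

period = 12
n = 72                      # 6 years of monthly observations
t = np.arange(n)
rng = np.random.default_rng(0)

# Hypothetical series: linear trend + yearly sine wave + noise
true_seasonal = 10 * np.sin(2 * np.pi * t / period)
y = pd.Series(0.5 * t + true_seasonal + rng.normal(0, 1, n))

# Step 1: trend via a centered moving average. For an even period, a 12-term
# average followed by a 2-term average is centered after shifting back by 6.
trend_est = y.rolling(period).mean().rolling(2).mean().shift(-(period // 2))

# Step 2: remove the trend
detrended = y - trend_est

# Step 3: average the detrended values at each position in the cycle,
# then center the seasonal effects so they sum to zero
position = t % period
seasonal_means = detrended.groupby(position).mean()
seasonal_means = seasonal_means - seasonal_means.mean()
seasonal_est = pd.Series(seasonal_means.to_numpy()[position], index=y.index)

# Step 4: whatever is left is the residual
residual_est = y - trend_est - seasonal_est

print(seasonal_means.round(2))
```

The trend estimate is undefined for the first and last six observations because the centered window does not fit there, which is why decomposition plots based on this method show gaps at both ends of the trend and residual panels.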

Practical Decomposition with Python

Python’s statsmodels library provides powerful tools for time series analysis, including decomposition. We will use seasonal_decompose to illustrate the process.

Setting Up the Environment

First, we need to import the necessary libraries.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from statsmodels.tsa.seasonal import seasonal_decompose

from statsmodels.graphics.tsaplots import plot_acf # For residual analysis

from statsmodels.stats.diagnostic import acorr_ljungbox # For residual analysis

Here, we import pandas for data manipulation, numpy for numerical operations, matplotlib.pyplot for plotting, and seasonal_decompose from statsmodels for the decomposition itself. We also import tools for analyzing residuals later.

Generating a Synthetic Time Series

To clearly understand how decomposition works, let’s create a synthetic time series with known trend, seasonality, and noise components. This allows us to see if the decomposition algorithm can successfully recover these original patterns.

# Define time range for our synthetic data

np.random.seed(42) # For reproducibility

time_index = pd.date_range(start='2010-01-01', periods=120, freq='MS') # 10 years of monthly data

We define a time_index using pd.date_range for 10 years of monthly data, starting from January 2010. freq='MS' specifies month start frequency. np.random.seed(42) ensures our random components are consistent for repeated runs.

# 1. Create a linear trend component

trend = np.linspace(0, 100, len(time_index))

Here, we create a simple linear trend that increases from 0 to 100 over the 120 periods.

# 2. Create a seasonal component (e.g., yearly cycle)

# We'll simulate a strong annual seasonality: one full sine cycle every 12 months

month_of_year = time_index.month.to_numpy()

seasonal_pattern = np.sin(2 * np.pi * (month_of_year - 1) / 12) * 10

This code generates a sinusoidal seasonal pattern. np.sin creates a wave, and mapping each calendar month to the phase 2π(month − 1)/12 makes it complete exactly one cycle per year (10 cycles over the 10 years), which matches the period=12 we pass to the decomposition later. Multiplying by 10 sets the amplitude of the seasonality.

# 3. Create a residual (noise) component

noise = np.random.normal(0, 2, len(time_index)) # Mean 0, Std Dev 2

We generate random noise using a normal distribution with a mean of 0 and a standard deviation of 2. This represents the unpredictable part of the series.

Now, let’s combine these components to create our observed time series, first using an additive model.

# Combine components for an ADDITIVE time series

additive_series = pd.Series(trend + seasonal_pattern + noise, index=time_index)

The additive_series is created by simply summing the trend, seasonal, and noise components. We wrap it in a pd.Series with our time_index.

# Combine components for a MULTIPLICATIVE time series

# For multiplicative, ensure components are positive or adjust

trend_mult = np.linspace(10, 100, len(time_index)) # Start trend > 0

seasonal_pattern_mult = 1 + np.sin(2 * np.pi * (time_index.month.to_numpy() - 1) / 12) * 0.2 # Seasonality factor around 1, one cycle per year

noise_mult = np.random.normal(1, 0.05, len(time_index)) # Noise factor around 1

multiplicative_series = pd.Series(trend_mult * seasonal_pattern_mult * noise_mult, index=time_index)

For a multiplicative series, the components should typically be factors that multiply. Here, seasonal_pattern_mult and noise_mult are constructed to oscillate around 1, so they act as scaling factors. The trend also starts from a positive value.

Finally, let’s visualize our synthetic additive series.

# Plot the synthetic additive time series

plt.figure(figsize=(12, 6))

plt.plot(additive_series, label='Synthetic Additive Series')

plt.title('Synthetic Time Series (Additive Model)')

plt.xlabel('Date')

plt.ylabel('Value')

plt.legend()

plt.grid(True)

plt.show()

This plot displays the combined synthetic series, clearly showing an upward trend with consistent seasonal fluctuations, as expected from an additive model.

Performing Decomposition

We’ll use statsmodels.tsa.seasonal.seasonal_decompose to perform the decomposition. This function takes the time series, the model type ('additive' or 'multiplicative'), and the period of the seasonality as arguments.

# Perform additive decomposition on the synthetic additive series

# period=12 for monthly data with yearly seasonality

result_additive = seasonal_decompose(additive_series, model='additive', period=12)

We apply seasonal_decompose to our additive_series. We explicitly set model='additive' and period=12 because our synthetic data has a clear yearly (12-month) seasonality.

# Plot the decomposed components

# result.plot() creates and returns its own figure, so we resize that figure

# instead of calling plt.figure() first (which would leave an empty extra figure)

fig = result_additive.plot()

fig.set_size_inches(12, 8)

fig.suptitle('Additive Time Series Decomposition', y=1.02) # Adjust suptitle position

fig.tight_layout(rect=[0, 0, 1, 0.98]) # Adjust layout to prevent title overlap

plt.show()

The plot() method of the decomposition result object conveniently generates subplots for the observed series, trend, seasonal, and residual components. Observe how well the seasonal_decompose function has identified the linear trend and the sinusoidal seasonal pattern that we built into the synthetic data. The residuals should appear as random noise around zero.

Now, let’s try decomposing the multiplicative series we created.

# Perform multiplicative decomposition on the synthetic multiplicative series

result_multiplicative = seasonal_decompose(multiplicative_series, model='multiplicative', period=12)

Here, we use model='multiplicative' as our synthetic series was generated with a multiplicative relationship.

# Plot the decomposed components for the multiplicative series

# As before, resize the figure returned by plot() rather than creating one first

fig = result_multiplicative.plot()

fig.set_size_inches(12, 8)

fig.suptitle('Multiplicative Time Series Decomposition', y=1.02)

fig.tight_layout(rect=[0, 0, 1, 0.98])

plt.show()

Again, observe how the decomposition successfully extracts the trend, seasonal, and residual components. The extracted seasonal component is a stable factor around 1, while the seasonal swings in the original series grow with the trend, which is characteristic of a multiplicative relationship.

Real-World Example: Air Passengers Dataset

Let’s apply decomposition to a classic real-world dataset: monthly international airline passenger numbers from 1949 to 1960. This dataset is famous for exhibiting a clear multiplicative seasonality.

# Load the Air Passengers dataset

# This dataset is often available in seaborn or directly online

# For simplicity, we hardcode the values here as a pandas Series

# In a real scenario, you would load from CSV: pd.read_csv('AirPassengers.csv', index_col='Month', parse_dates=True)

# Hardcoded Air Passengers data for demonstration (replace with actual load if desired)

data = {

'Month': pd.date_range(start='1949-01-01', periods=144, freq='MS'), # 144 month starts: Jan 1949 to Dec 1960

'Passengers': [112, 118, 132, 129, 121, 135, 148, 148, 136, 119, 104, 118,

115, 126, 141, 135, 125, 149, 170, 170, 158, 133, 114, 140,

145, 150, 178, 163, 172, 178, 199, 199, 184, 162, 146, 166,

171, 180, 193, 181, 183, 218, 230, 242, 209, 191, 172, 194,

196, 196, 236, 235, 229, 243, 264, 272, 237, 211, 180, 201,

204, 188, 235, 227, 234, 264, 302, 293, 259, 229, 203, 229,

242, 233, 267, 269, 270, 315, 364, 347, 312, 274, 237, 278,

284, 277, 317, 313, 318, 374, 413, 405, 355, 306, 271, 306,

315, 301, 356, 348, 355, 422, 465, 467, 404, 347, 305, 336,

340, 318, 362, 348, 363, 435, 491, 505, 404, 359, 310, 337,

360, 342, 406, 396, 420, 472, 548, 559, 463, 407, 362, 405,

417, 391, 419, 461, 472, 535, 622, 606, 508, 461, 390, 432]

}

air_passengers = pd.Series(data['Passengers'], index=data['Month'])

This block provides a hardcoded version of the Air Passengers dataset for immediate use. In a real-world scenario, you’d typically load this from a .csv file.

# Plot the original Air Passengers series

plt.figure(figsize=(12, 6))

plt.plot(air_passengers, label='Air Passengers')

plt.title('Monthly International Air Passengers (1949-1960)')

plt.xlabel('Date')

plt.ylabel('Passengers')

plt.legend()

plt.grid(True)

plt.show()

The plot clearly shows an upward trend and seasonal fluctuations that increase in amplitude over time, indicating a multiplicative relationship.

# Perform multiplicative decomposition on the Air Passengers series

# period=12 for monthly data with yearly seasonality

result_air_passengers = seasonal_decompose(air_passengers, model='multiplicative', period=12)

Based on the visual inspection, we choose the multiplicative model for this dataset.

# Plot the decomposed components for Air Passengers

# Resize the figure returned by plot() rather than creating an empty one first

fig = result_air_passengers.plot()

fig.set_size_inches(12, 8)

fig.suptitle('Air Passengers Time Series Decomposition (Multiplicative)', y=1.02)

fig.tight_layout(rect=[0, 0, 1, 0.98])

plt.show()

The decomposition separates the clear upward trend, the consistent annual seasonality, and the remaining residuals. Notice how the seasonal component is now a factor (around 1), and its pattern is stable, while its effect on the original series grows due to multiplication with the increasing trend.

Analyzing Residuals

The residual component is often overlooked but is crucial for assessing the quality of the decomposition and for subsequent forecasting model development. Ideally, the residuals should be white noise — random, uncorrelated, and with a constant variance.

The Role of Residuals

Unexplained Variance: Residuals represent the part of the series that cannot be attributed to the identified trend or seasonal patterns.

Model Fit Indicator: If your decomposition (or a subsequent forecasting model) is a good fit for the data, the residuals should contain no discernible patterns. Any remaining pattern suggests that the decomposition (or model) has not fully captured all the underlying structure.

Anomaly Detection: Outliers or unusual events in the original series will often manifest as large spikes in the residual component, making them easier to spot.

Checking for White Noise

We can visually inspect and statistically test the residuals for white noise characteristics.

# Plot the residuals from the Air Passengers decomposition

plt.figure(figsize=(12, 4))

plt.plot(result_air_passengers.resid)

plt.title('Residuals from Air Passengers Decomposition')

plt.xlabel('Date')

plt.ylabel('Residual Value')

plt.grid(True)

plt.show()

Visually, the residuals from the Air Passengers decomposition appear somewhat random, but there might be some small variations in variance. Because the model is multiplicative, they fluctuate around 1 rather than 0.

# Plot the Autocorrelation Function (ACF) of the residuals

# This helps identify if there's any remaining correlation (pattern) in the residuals

plt.figure(figsize=(12, 5))

plot_acf(result_air_passengers.resid.dropna(), lags=40, ax=plt.gca()) # dropna() handles NaNs at ends

plt.title('Autocorrelation Function of Residuals')

plt.xlabel('Lag')

plt.ylabel('Autocorrelation')

plt.show()

The Autocorrelation Function (ACF) plot shows the correlation of a time series with its own past values (lags). For white noise, all autocorrelations (except at lag 0, which is always 1) should be close to zero and fall within the confidence bands (the blue shaded area). If significant spikes are outside these bands, it suggests remaining correlation, meaning the residuals are not pure white noise and there’s uncaptured information. For the Air Passengers example, check how many spikes leave the bands: if the ACF stays largely inside them, the residuals are close to random, while clear spikes at low or seasonal lags point to structure the decomposition did not capture.

# Perform the Ljung-Box test on the residuals

# This is a statistical test for white noise

# It tests the null hypothesis that there is no autocorrelation up to a specified lag.

# A high p-value (e.g., > 0.05) suggests that the residuals are independently distributed (white noise).

ljungbox_test_results = acorr_ljungbox(result_air_passengers.resid.dropna(), lags=[10, 20], return_df=True)

print(ljungbox_test_results)

The Ljung-Box test is a formal statistical test for autocorrelation in the residuals. The null hypothesis ($H_0$) is that the data are independently distributed (i.e., residuals are white noise). If the p-value is greater than a chosen significance level (commonly 0.05), we fail to reject the null hypothesis, which is consistent with the residuals being white noise. A p-value below that level indicates remaining autocorrelation. We test at multiple lags (e.g., 10 and 20) to check for short and longer-term dependencies. For the Air Passengers residuals, if the p-values are high, it supports the notion that the decomposition has effectively isolated the trend and seasonality.
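To show what the ACF and the Ljung-Box statistic measure, here is a minimal numpy-only sketch that computes both by hand. The helper names sample_acf and ljung_box_q are hypothetical, the two test series are synthetic, and the band of ±1.96/√n is the simple white-noise approximation rather than whatever a plotting library draws. Instead of a p-value, the statistic is compared with the 95% critical value of a chi-square distribution with 10 degrees of freedom (18.307).

```python
import numpy as np

def sample_acf(x, max_lag):
    """Sample autocorrelations r_1 .. r_max_lag of a 1-D array."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    denom = np.sum(x ** 2)
    return np.array([np.sum(x[k:] * x[:-k]) / denom
                     for k in range(1, max_lag + 1)])

def ljung_box_q(x, max_lag):
    """Ljung-Box statistic: Q = n (n + 2) * sum_k r_k^2 / (n - k)."""
    n = len(x)
    r = sample_acf(x, max_lag)
    k = np.arange(1, max_lag + 1)
    return n * (n + 2) * np.sum(r ** 2 / (n - k))

rng = np.random.default_rng(42)
n = 300
white = rng.normal(size=n)          # uncorrelated by construction

# Autocorrelated series: each value carries over 70% of the previous one
ar = np.empty(n)
ar[0] = white[0]
for i in range(1, n):
    ar[i] = 0.7 * ar[i - 1] + white[i]

band = 1.96 / np.sqrt(n)            # approximate 95% band under white noise
CHI2_95_DF10 = 18.307               # 95% critical value, chi-square, 10 dof

q_white = ljung_box_q(white, 10)
q_ar = ljung_box_q(ar, 10)

print("lag-1 autocorrelation (white, ar):",
      sample_acf(white, 1)[0].round(3), sample_acf(ar, 1)[0].round(3))
print("band: +/-", round(band, 3))
print("Q (white, ar):", round(q_white, 1), round(q_ar, 1),
      "| critical value:", CHI2_95_DF10)
```

A Q value above the critical value corresponds to a p-value below 0.05, that is, evidence of autocorrelation up to lag 10.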

Practical Implications of Residuals

Model Validation: Thorough analysis of residuals is a critical step in validating any time series model. If residuals show patterns, it indicates that your model is inadequate and needs refinement.

Feature Engineering: If residuals are not white noise, the patterns within them might suggest new features to incorporate into a more complex forecasting model (e.g., adding a new predictor variable, or using a more sophisticated model that can capture higher-order dependencies).

Anomaly Detection: Monitoring residuals over time is an effective way to detect sudden, unexpected changes in the underlying process, which could signal system failures, unusual market events, or data errors.

Impact on Forecasting Model Selection

Understanding the components of a time series directly informs the choice and complexity of forecasting models:

Trend: If a strong trend is present, models that can explicitly capture and extrapolate trends (e.g., ARIMA models with differencing, Exponential Smoothing methods like Holt’s or Holt-Winters, or regression models with time as a predictor) are appropriate.

Seasonality: For series with clear seasonality, models that explicitly account for seasonal patterns are necessary. This includes Seasonal ARIMA (SARIMA), Seasonal Exponential Smoothing (Holt-Winters), or models like Prophet that explicitly model seasonal effects.

Stationarity: Decomposing a series can help achieve stationarity (constant mean, variance, and autocorrelation over time), which is a key assumption for many traditional forecasting models like ARIMA. By removing trend and seasonality, the remaining residuals are often closer to a stationary process.

Feature Engineering: The seasonal component can be extracted and used to create new features for machine learning models (e.g., creating dummy variables for months or days of the week, or sine/cosine transformations to capture cyclical patterns). The trend component can also be used as a feature or modeled separately.
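The feature ideas in the last item can be sketched in a few lines of pandas. The column names and the two-year monthly index below are assumptions for illustration.

```python
import numpy as np
import pandas as pd

idx = pd.date_range(start="2023-01-01", periods=24, freq="MS")
features = pd.DataFrame(index=idx)

# Simple trend feature: a running time index
features["t"] = np.arange(len(idx))

# Sine/cosine encoding of the month, so December and January end up close
# together on the circle instead of 11 units apart
month = idx.month.to_numpy()
features["month_sin"] = np.sin(2 * np.pi * (month - 1) / 12)
features["month_cos"] = np.cos(2 * np.pi * (month - 1) / 12)

# Alternative encoding: one dummy column per month (first month dropped
# to avoid perfect collinearity with an intercept)
dummies = pd.get_dummies(pd.Series(month, index=idx),
                         prefix="month", drop_first=True)
features = features.join(dummies)

print(features.head(3))
```

Dummy variables let a model learn an arbitrary effect for each month, while the sine/cosine pair uses only two columns but assumes a smooth pattern; which one works better depends on the model and the data.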

By systematically breaking down a time series into its fundamental components, we gain profound insights into its behavior, which is an indispensable step towards accurate analysis and reliable forecasting.

The Comprehensive Time Series Forecasting Project Roadmap

Developing accurate and actionable time series forecasts involves far more than simply running a statistical model. It’s a comprehensive, cyclical process that bridges business objectives with technical execution, requiring collaboration across various roles. A structured approach, often referred to as a “forecasting project roadmap” or “lifecycle,” is essential for success. This roadmap ensures that forecasting efforts are aligned with strategic goals, data quality is maintained, models are robust, and their outputs are effectively utilized and continuously improved. Neglecting any stage can lead to models that are technically sound but fail to deliver real-world value or quickly become obsolete.

Let’s explore the key stages of a typical time series forecasting project.

Define the Business Problem and Goals

This initial stage is arguably the most critical yet often overlooked. Before collecting any data or thinking about models, it’s paramount to clearly articulate why a forecast is needed and what specific business decision it will inform.

Why it’s Crucial:

Clarity and Direction: A well-defined problem provides a clear objective for the entire project, preventing aimless data exploration or model building.

Alignment with Business Value: It ensures the forecasting effort directly contributes to an organizational goal, such as cost reduction, revenue increase, or improved efficiency.

Defines Success Metrics: Understanding the business problem helps identify the key performance indicators (KPIs) that will measure the forecast’s success. For instance, is accuracy paramount, or is it more important to avoid stockouts?

Scope Definition: Helps to bound the problem, preventing “scope creep” where the project expands beyond its original intent.

Key Activities:

Stakeholder Interviews: Engage with business users, domain experts, and decision-makers to understand their needs, current challenges, and desired outcomes.

Problem Framing: Translate vague business questions into specific, measurable forecasting problems (e.g., “predict next month’s sales for product X,” “forecast hourly energy demand for the next 24 hours”).

KPI Identification: Define the metrics that will quantify the impact and success of the forecast (e.g., Mean Absolute Error (MAE), Root Mean Squared Error (RMSE), forecast bias, inventory turnover rate).

Time Horizon and Granularity: Determine the required forecasting horizon (e.g., next day, next month, next year) and the granularity (e.g., hourly, daily, weekly, monthly).
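For reference, the three error-based KPIs named above can be computed as follows. The actual and forecast values are made up for illustration, and bias is defined here as forecast minus actual (some teams use the opposite sign).

```python
import numpy as np

# Hypothetical actuals and forecasts for four periods
actual = np.array([100.0, 120.0, 90.0, 110.0])
forecast = np.array([110.0, 115.0, 95.0, 100.0])

errors = forecast - actual               # positive means over-forecast

mae = np.mean(np.abs(errors))            # average size of the miss
rmse = np.sqrt(np.mean(errors ** 2))     # penalizes large misses more heavily
bias = np.mean(errors)                   # systematic over- or under-forecasting

print(f"MAE={mae:.2f}  RMSE={rmse:.2f}  bias={bias:.2f}")
```

In this example the bias is zero even though every forecast is off, because over- and under-forecasts cancel out. That is why bias is usually reported alongside MAE or RMSE rather than instead of them.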

Common Pitfalls:

Vague Objectives: Starting with “we need a forecast” without specifying for what purpose or how it will be used.

Lack of Business Context: Developing a technically accurate model that doesn’t solve the actual business problem or isn’t actionable.

Ignoring Stakeholders: Building a solution in isolation without input from those who will use it.

Typical Roles Involved: Business Analyst, Product Manager, Domain Expert, Lead Data Scientist.

Example: Camping Trip Scenario

Imagine you’re planning a multi-day camping trip and want to choose the right sleeping bag.

Business Problem: Select a sleeping bag that will keep me comfortable, but not overheated, given the expected overnight temperatures.

Goal: Forecast the minimum overnight temperature for each night of the trip to inform sleeping bag selection.

Success Metric: My comfort level (subjective, but we can aim for the sleeping bag’s comfort rating to match the forecast temperature).

Time Horizon/Granularity: Daily minimum temperature for the next 3–5 days.

Example: Retail Sales Forecasting

A retail chain wants to optimize inventory and staffing.

Business Problem: Accurately predict future sales to minimize stockouts, reduce excess inventory holding costs, and optimize staffing levels in stores.

Goal: Forecast daily sales for each product SKU at each store location for the next 4–6 weeks.

Success Metrics: MAPE (Mean Absolute Percentage Error) for sales, stockout rate, inventory turnover.

Time Horizon/Granularity: Daily sales forecasts for individual SKUs at specific store locations, looking 4–6 weeks ahead.

Gather and Prepare Data

Once the problem is clear, the next crucial step is to acquire and ready the data that will fuel your forecasting model. Data quality directly impacts model performance; “garbage in, garbage out” is particularly true in time series forecasting.

Why it’s Crucial:

Data as Fuel: High-quality, relevant historical data is the fundamental input for any time series model.

Reliability: Clean and well-prepared data ensures that the patterns identified by the model are genuine and not artifacts of data errors or inconsistencies.

Feature Engineering Foundation: This stage lays the groundwork for creating meaningful features that capture underlying drivers of the time series.

Key Activities:

Data Source Identification: Locate all relevant data sources (e.g., internal databases, APIs, external datasets). This might include the target time series itself (e.g., sales, temperature) and potential exogenous variables (e.g., promotions, holidays, economic indicators).

Data Extraction (ETL/ELT): Retrieve data from various sources. This can involve writing scripts to connect to databases, consume APIs, or parse files.

Data Cleaning: Address common data issues:

Missing Values: Impute (e.g., interpolation, mean/median) or remove missing data points.

Outliers: Identify and handle extreme values that might distort patterns (e.g., capping, removal, or specialized robust methods).

Inconsistent Formats: Standardize data types, date formats, and categorical encodings.

Duplicates: Remove redundant entries.

Data Transformation: Reshape data for analysis, aggregate to the desired granularity (e.g., from hourly to daily), or normalize/standardize numerical features.

Preliminary Feature Engineering (Conceptual): At this stage, you might start thinking about and creating basic features like lagged values (past observations), moving averages, or time-based features (day of week, month, year).

Common Pitfalls:

Data Silos: Relevant data being scattered across different departments or systems, making it hard to access.

Poor Data Quality: Assuming data is clean, leading to models built on flawed information.

Insufficient Historical Data: Time series models often require a significant amount of historical data to identify trends and seasonality reliably.

Data Leakage: Accidentally including future information in the training data, leading to overly optimistic evaluation results.
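Data leakage often creeps in through rolling features. The sketch below, on a made-up daily sales series, builds a lag and a rolling mean that only use information available before the day being predicted, and contrasts them with a leaky rolling mean that includes the current day. Column names are assumptions for illustration.

```python
import numpy as np
import pandas as pd

idx = pd.date_range("2024-01-01", periods=10, freq="D")
sales = pd.Series(np.arange(10, dtype=float) * 10, index=idx, name="sales")

df = sales.to_frame()

# Lag feature: yesterday's value
df["lag_1"] = df["sales"].shift(1)

# Mean of the previous 3 days: shift first so today's value is excluded
df["roll_mean_3"] = df["sales"].shift(1).rolling(3).mean()

# Leaky version for contrast: the window includes today's value,
# which is exactly what we are trying to predict
df["roll_mean_3_leaky"] = df["sales"].rolling(3).mean()

# Calendar features are known in advance, so they are safe to use
df["day_of_week"] = df.index.dayofweek   # Monday = 0

print(df.head(5))
```

The first rows of the lag and rolling columns are NaN because there is no earlier history to draw on; those rows are typically dropped before training.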

Typical Roles Involved: Data Engineer, Data Scientist.

Example: Camping Trip Scenario

Data Collection: Access historical weather data for the camping location (temperature, precipitation, wind speed), potentially from weather APIs or historical records. Also, gather personal notes from past trips (e.g., “felt cold with 40F sleeping bag at 35F”).

Data Preparation: Clean weather data (handle missing temperature readings), align dates, and perhaps average temperatures over the night.

Example: Retail Sales Forecasting

Data Collection: Extract historical sales transaction data (SKU, quantity, price, date, store ID), promotional calendars, holiday schedules, and possibly external economic indicators (e.g., consumer confidence index, local unemployment rates).

Data Preparation: Aggregate transactional data to daily SKU-store sales. Identify and handle missing sales records. Clean up inconsistent product IDs. Create features like is_promotion, day_of_week, is_holiday.
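A compressed version of that preparation step might look like the sketch below. The transaction table, the holiday date, and the choice to interpolate the missing day are all assumptions for illustration. In a real project you would first establish whether a day without records means zero sales or missing data, and whether identical rows are true duplicates or legitimate repeat purchases.

```python
import pandas as pd

# Hypothetical raw transactions for a single SKU at one store
tx = pd.DataFrame({
    "timestamp": pd.to_datetime([
        "2024-03-01 09:15", "2024-03-01 17:40", "2024-03-01 17:40",
        "2024-03-02 11:05", "2024-03-04 10:30",
    ]),
    "sku": ["A", "A", "A", "A", "A"],
    "quantity": [2, 1, 1, 3, 4],
})

# Remove exact duplicate rows (assumed here to be double-loaded records)
tx = tx.drop_duplicates()

# Aggregate to daily totals; min_count=1 leaves days without records as NaN
daily = tx.set_index("timestamp")["quantity"].resample("D").sum(min_count=1)

# Fill the gap by linear interpolation (one of several possible choices)
daily_filled = daily.interpolate(method="linear")

# Basic calendar features
features = daily_filled.to_frame("sales")
features["day_of_week"] = features.index.dayofweek          # Monday = 0
holidays = pd.to_datetime(["2024-03-04"])                    # hypothetical
features["is_holiday"] = features.index.isin(holidays)

print(features)
```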

Develop a Forecasting Model

This is where the core predictive engine is built. It involves selecting, training, and optimizing a model based on the prepared historical data.

Why it’s Crucial: This stage translates the patterns and relationships identified in the data into a predictive algorithm that can generate future forecasts.

Key Activities (High-Level Conceptual):

Data Splitting: Divide the historical data into training, validation (or development), and test sets. For time series, this is typically done chronologically (past for training, future for validation/test) to simulate real-world forecasting.

Feature Engineering (Advanced): Beyond basic time-based features, this might involve creating more complex interactions, polynomial features, or transformations specific to the chosen model.

Model Selection: Choose appropriate forecasting algorithms. This could range from traditional statistical models (e.g., ARIMA, Exponential Smoothing, Prophet) to machine learning models (e.g., Random Forest, XGBoost, LightGBM) or deep learning models (e.g., LSTMs, Transformers) depending on data complexity, volume, and required interpretability.

Model Training: Fit the selected model(s) to the training data. This process learns the underlying patterns and relationships between features and the target variable.

Hyperparameter Tuning: Optimize the model’s internal parameters (hyperparameters) using the validation set to achieve the best performance without overfitting. This might involve techniques like grid search, random search, or Bayesian optimization.
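The chronological split described under Data Splitting can be sketched as follows. The 70/15/15 proportions and the toy series are assumptions, not a recommendation.

```python
import numpy as np
import pandas as pd

idx = pd.date_range("2020-01-01", periods=100, freq="D")
y = pd.Series(np.arange(100, dtype=float), index=idx)

# Split by position, never by random shuffling, so that every validation
# and test observation lies strictly after the training data
n = len(y)
train_end = n * 70 // 100
val_end = n * 85 // 100

train = y.iloc[:train_end]
val = y.iloc[train_end:val_end]
test = y.iloc[val_end:]

print(len(train), len(val), len(test))
print(train.index.max(), "<", val.index.min(), "<", test.index.min())
```

Hyperparameters are then tuned against val only, and test is touched once at the end to get an honest estimate of out-of-sample performance.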

Common Pitfalls:

Overfitting/Underfitting: Building a model that performs perfectly on training data but poorly on new data (overfitting), or a model that is too simplistic and doesn’t capture underlying patterns (underfitting).

Ignoring Baseline Models: Not comparing complex models against simple baselines (e.g., naive forecast, seasonal naive) to ensure added complexity is justified.

Lack of Interpretability: Choosing a “black box” model when explainability is crucial for business trust and decision-making.
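The two baselines mentioned above are simple enough to write in a few lines. The function names and the toy history (a repeating pattern with a period of 4) are assumptions for illustration.

```python
import numpy as np

def naive_forecast(history, horizon):
    """Repeat the last observed value for every future step."""
    return np.full(horizon, history[-1], dtype=float)

def seasonal_naive_forecast(history, horizon, period):
    """Repeat the last full seasonal cycle as many times as needed."""
    last_cycle = np.asarray(history[-period:], dtype=float)
    reps = int(np.ceil(horizon / period))
    return np.tile(last_cycle, reps)[:horizon]

# Toy history with a repeating pattern every 4 observations
history = np.array([10, 20, 30, 40, 12, 22, 32, 42], dtype=float)

print(naive_forecast(history, 3))                    # [42. 42. 42.]
print(seasonal_naive_forecast(history, 6, period=4)) # [12. 22. 32. 42. 12. 22.]
```

If a more complex model cannot beat these on the validation set, the extra complexity is hard to justify.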

Typical Roles Involved: Data Scientist, Machine Learning Engineer.

Example: Camping Trip Scenario

Model Selection: A simple rule-based model: “If the forecast minimum temperature is below 30F, use a 0F bag; if between 30–45F, use a 30F bag; otherwise, use a light bag.” Or a basic linear regression using historical temperature data.

Training: If using regression, train the model on past temperature vs. comfort data.
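The rule-based option is easy to express directly in code. The function name is hypothetical, and treating exactly 45F as still belonging to the 30F-bag range is an assumption, since the rule as stated does not say which side the boundary falls on.

```python
def choose_sleeping_bag(forecast_min_temp_f: float) -> str:
    """Map a forecast overnight minimum (in Fahrenheit) to a sleeping bag."""
    if forecast_min_temp_f < 30:
        return "0F bag"
    if forecast_min_temp_f <= 45:   # boundary handling is an assumption
        return "30F bag"
    return "light bag"

# Hypothetical forecast minimums for a three-night trip; pack for the coldest
nightly_minimums = [38.0, 33.5, 41.0]
print(choose_sleeping_bag(min(nightly_minimums)))   # 30F bag
```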

Example: Retail Sales Forecasting

Model Selection: Experiment with various models like Prophet (for strong seasonality and holidays), ARIMA (for autocorrelation), or XGBoost (for incorporating many exogenous variables like promotions). For large-scale forecasting across many SKUs, a hierarchical approach or a deep learning model might be considered.

Training: Train the chosen model(s) on historical sales data, incorporating features like day_of_week, month, promotional flags, and lagged sales.

Evaluate and Validate the Model

After developing a model, it’s critical to rigorously evaluate its performance using unseen data to ensure its reliability and generalization capabilities. This step is about confirming that the model will perform well in the real world.

Why it’s Crucial:

Trust and Reliability: Provides confidence that the model’s predictions are accurate and trustworthy.

Preventing Over-Optimism: Evaluation on a separate test set prevents overestimating performance due to overfitting on training data.

Informing Deployment: A well-validated model is a prerequisite for successful deployment.

Error Analysis: Helps understand where and why the model makes mistakes, guiding further improvements.

Key Activities:

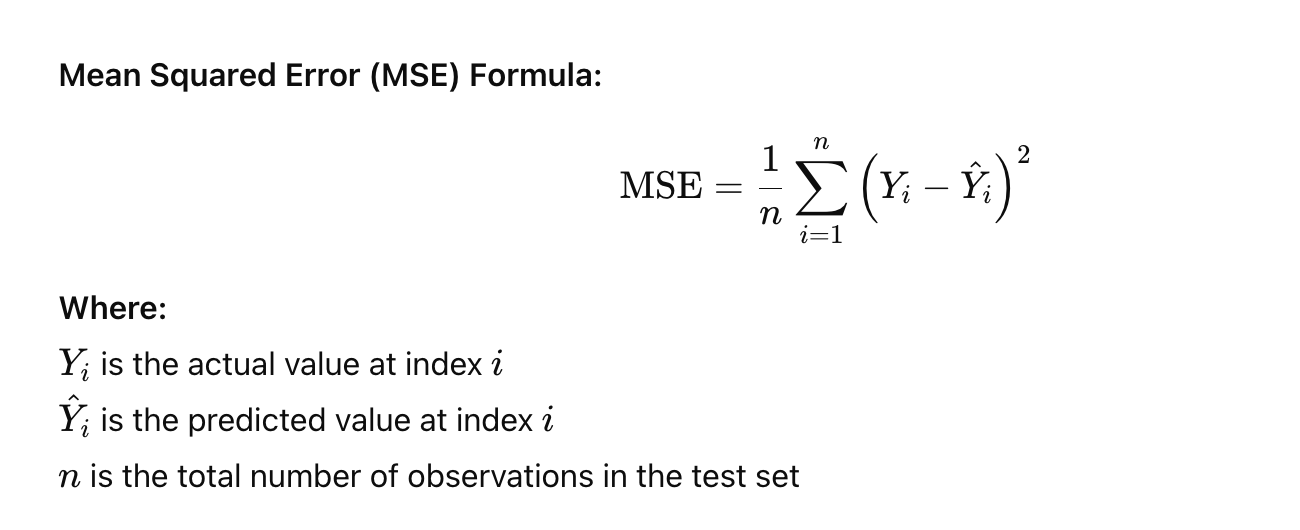

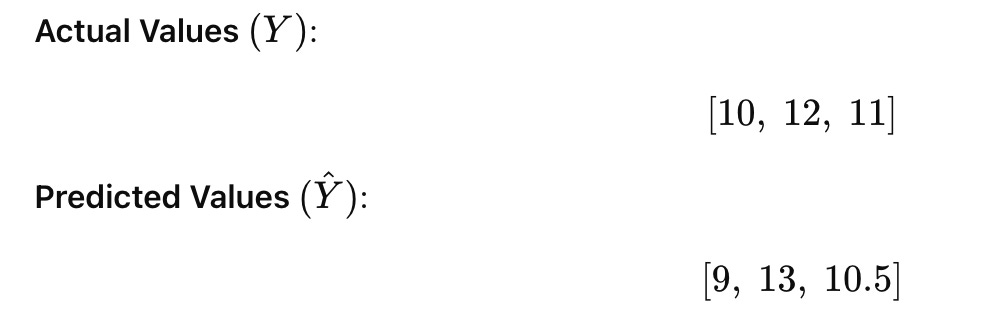

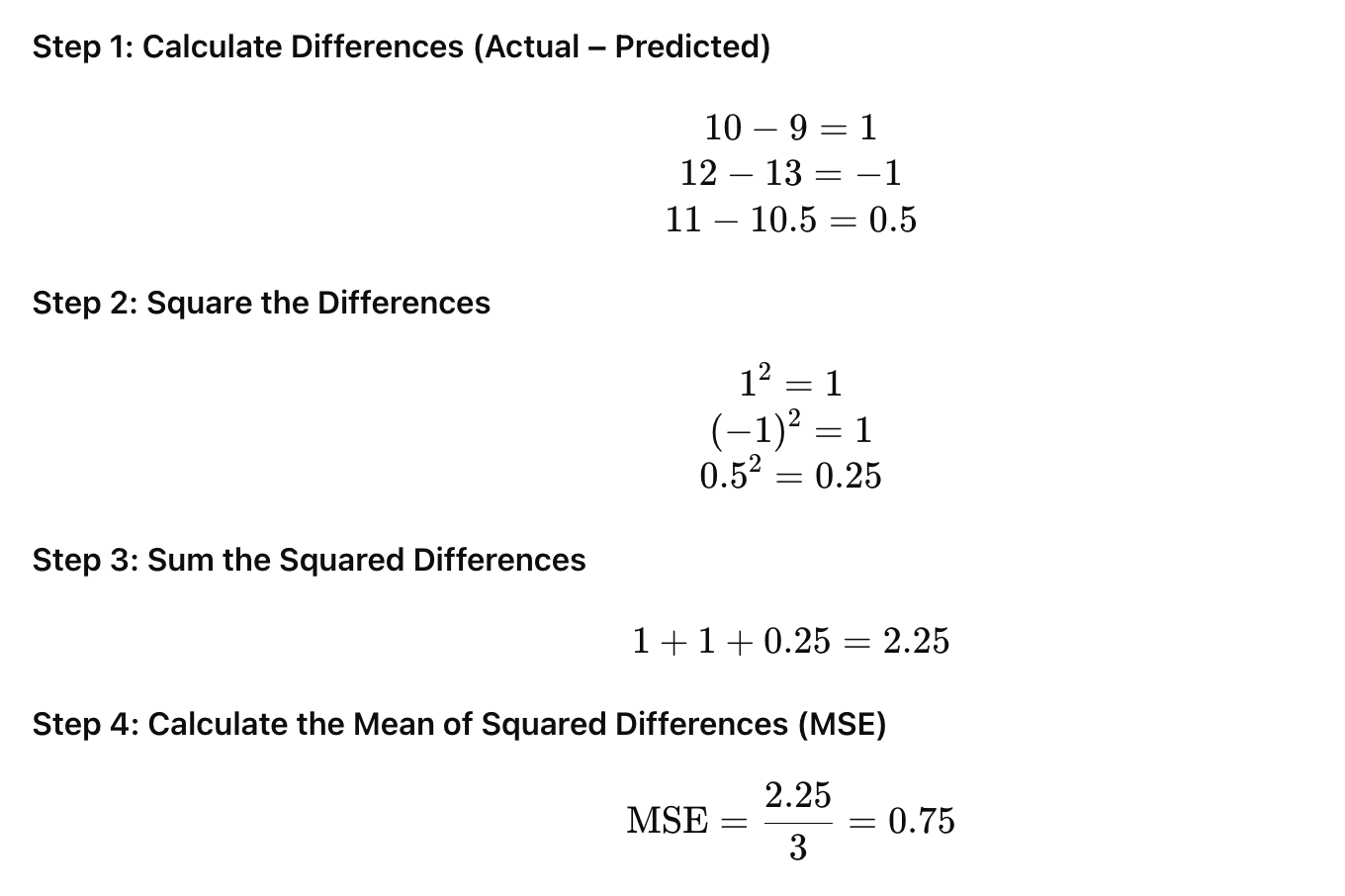

Defining Evaluation Metrics: Choose appropriate metrics based on the business problem (e.g., MAE, RMSE, MAPE, sMAPE, R-squared, directional accuracy). Some metrics are better for specific error types or business contexts.

Backtesting/Out-of-Sample Validation: Evaluate the model’s performance on the test set (data the model has never seen), mimicking how it would perform on future data. For time series, this often involves "rolling forecast origin" or "walk-forward validation" where the model is retrained and evaluated on successive time windows.

Sensitivity Analysis: Understand how robust the model is to changes in input data or assumptions.

Error Analysis: Investigate patterns in prediction errors. Are errors higher on weekends? During promotions? For certain product categories? This provides insights for model refinement.

Business Impact Assessment: Translate statistical accuracy into business implications (e.g., “a 5% MAPE means we might overstock by X units or understock by Y units, costing Z dollars”).
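The walk-forward idea from the activities above can be sketched with an expanding training window. The helper names below are hypothetical, and a naive last-value forecaster stands in for a real model so the example stays self-contained; any function with the same signature could be passed instead.

```python
import numpy as np

def last_value_model(train, horizon):
    """Placeholder model: forecast the last training value for every step."""
    return np.full(horizon, train[-1], dtype=float)

def walk_forward_mae(series, initial_train_size, fit_and_forecast, horizon=1):
    """Refit at each forecast origin and average the MAE across all origins."""
    series = np.asarray(series, dtype=float)
    fold_errors = []
    for origin in range(initial_train_size, len(series) - horizon + 1):
        train = series[:origin]                   # everything before the origin
        actual = series[origin:origin + horizon]  # the next `horizon` points
        forecast = fit_and_forecast(train, horizon)
        fold_errors.append(np.mean(np.abs(actual - forecast)))
    return float(np.mean(fold_errors)), len(fold_errors)

mae, n_folds = walk_forward_mae([1, 2, 3, 4, 5, 6], initial_train_size=3,
                                fit_and_forecast=last_value_model)
print(f"MAE over {n_folds} folds: {mae}")
```

Each fold trains only on data before its origin, so the score reflects how the model would have performed had it been run at that point in time.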

Common Pitfalls:

Using Inappropriate Metrics: Choosing metrics that don’t align with the business goal (e.g., using RMSE when MAPE is more relevant for percentage errors).

Evaluating on Training Data Only: Leading to an inflated sense of accuracy.

Ignoring Business Context of Errors: Not understanding the real-world cost or impact of different types of forecasting errors.

Typical Roles Involved: Data Scientist, Business Analyst.

Example: Camping Trip Scenario

Evaluation: After the trip, compare the forecast minimum temperatures against the actual minimum temperatures recorded. Did the sleeping bag recommendation align with your comfort level? If not, why? (e.g., wind chill wasn’t factored in).

Metrics: A simple count of “comfortable” vs. “uncomfortable” nights.

Example: Retail Sales Forecasting

Evaluation: Compare the model’s daily SKU-store sales forecasts against actual sales data for the test period.

Metrics: Calculate MAPE and RMSE for different product categories, stores, and time periods. Analyze forecast bias (is the model consistently over- or under-forecasting?). Present these metrics to stakeholders in an understandable way.
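A per-category breakdown of these metrics might look like the following sketch. The data and column names are invented, and bias is defined here as the mean of forecast minus actual, so a positive value means over-forecasting; other texts use the opposite sign convention.

```python
import numpy as np
import pandas as pd

# Made-up test-period results for two product categories
results = pd.DataFrame({
    "category": ["snacks", "snacks", "drinks", "drinks"],
    "actual":   [100.0, 200.0, 50.0, 80.0],
    "forecast": [110.0, 180.0, 55.0, 88.0],
})

results["error"] = results["forecast"] - results["actual"]
results["abs_pct_error"] = results["error"].abs() / results["actual"] * 100

summary = results.groupby("category").agg(
    mape=("abs_pct_error", "mean"),                             # mean absolute % error
    rmse=("error", lambda e: float(np.sqrt(np.mean(e ** 2)))),  # root mean squared error
    bias=("error", "mean"),                                     # > 0 means over-forecasting
)
print(summary)
```

In this toy data both categories have the same MAPE, yet snacks are under-forecast on average while drinks are over-forecast, which is exactly the kind of pattern a single aggregate metric would hide.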

Deploy to Production

Once a model has been thoroughly validated and deemed fit for purpose, it needs to be integrated into the operational environment so that its forecasts can be used for real-world decision-making.

Why it’s Crucial:

Actionable Insights: Deployment makes the forecast accessible to business users and systems, allowing them to act on the predictions.

Automation: Automates the forecasting process, reducing manual effort and ensuring timely availability of predictions.

Scalability: Enables the model to generate forecasts at the required scale and frequency.

Key Activities:

Model Serialization: Save the trained model in a deployable format (e.g., pickle file, ONNX, PMML).

API Development: Wrap the model in an API (e.g., REST API using Flask or FastAPI) so other applications can easily request forecasts.

Integration with Existing Systems: Connect the forecasting service with business applications (e.g., inventory management systems, ERPs, dashboards). This might involve setting up data pipelines to feed inputs to the model and consume its outputs.

Infrastructure Provisioning: Set up the necessary computing resources (e.g., cloud instances, containers like Docker, orchestration with Kubernetes) to host the model.

Automated Pipelines: Establish CI/CD (Continuous Integration/Continuous Deployment) pipelines for model updates and automated retraining.

Common Pitfalls:

Lack of MLOps Maturity: Underestimating the complexity of operationalizing machine learning models compared to traditional software.

Integration Complexities: Difficulty connecting the model with legacy systems.

Scalability Issues: The deployed model cannot handle the required volume of predictions or concurrent requests.

Security Concerns: Neglecting data security and access control for the deployed model.

Typical Roles Involved: MLOps Engineer, Software Engineer, Data Scientist.

Example: Camping Trip Scenario

Deployment: Create a simple script or web application that takes the trip dates and location as input, queries a weather API for forecast temperatures, and then applies your sleeping bag rule to recommend a bag. Share this tool with friends.

Example: Retail Sales Forecasting

Deployment: The forecasting model is packaged into a container (e.g., Docker image) and deployed on a cloud platform (e.g., AWS SageMaker, Azure ML, Google Cloud AI Platform). An API endpoint is exposed that inventory management systems can call daily to get updated sales forecasts for all SKUs and stores. An automated data pipeline ensures the model receives fresh input data regularly.
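The serialization and serving steps described in this section can be sketched without any web framework. In the example below, a seasonal naive "model" is stored as a plain dictionary, round-tripped through `pickle`, and called from a handler function that stands in for an API endpoint. All function names and the request format are assumptions made for illustration; a real service would put a framework such as Flask or FastAPI in front of a handler like this.

```python
import pickle

def fit_seasonal_naive(history, season_length):
    """'Train' by remembering the last full season (stand-in for a real model)."""
    return {"season_length": season_length,
            "last_season": list(history[-season_length:])}

def predict(model_state, horizon):
    """Repeat the stored season for the requested number of steps."""
    season = model_state["last_season"]
    return [season[i % len(season)] for i in range(horizon)]

def handle_forecast_request(request, model_state):
    """Hypothetical request handler: validate the input, then return a forecast."""
    horizon = int(request.get("horizon", 0))
    if horizon < 1:
        return {"error": "horizon must be a positive integer"}
    return {"forecast": predict(model_state, horizon)}

# Training side: fit the model and serialize it to bytes
history = [11, 12, 15, 12, 13, 16, 31]
serialized = pickle.dumps(fit_seasonal_naive(history, season_length=7))

# Serving side: restore the model and answer a request
restored = pickle.loads(serialized)
response = handle_forecast_request({"horizon": 3}, restored)
print(response)
```

One caveat with this format: unpickling can execute arbitrary code, so pickle files should only be loaded from sources you trust.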

Monitor and Maintain

Deployment is not the end of the project; it’s the beginning of its operational life. Models, especially those relying on time-dependent data, can degrade over time due to changes in underlying patterns. Continuous monitoring and maintenance are crucial.

Why it’s Crucial:

Sustained Performance: Ensures the model continues to provide accurate and relevant forecasts over time.

Detecting Degradation: Identifies “model drift” or “concept drift” where the relationship between input features and the target variable changes.

Addressing Data Quality Issues: Catches problems in the data pipeline that could impact model inputs.

Business Relevance: Ensures the model adapts to evolving business conditions and goals.

Key Activities:

Performance Monitoring: Continuously track model performance metrics (e.g., MAE, MAPE, bias) against actual outcomes. Set up dashboards and alerts for significant deviations.

Data Quality Monitoring: Monitor the incoming data pipeline for anomalies, missing values, or changes in data distribution that could affect the model.

Concept Drift Detection: Implement mechanisms to detect when the underlying patterns the model learned are no longer valid (e.g., due to market shifts, new products, policy changes).

Retraining Schedules: Establish a regular schedule for retraining the model with new historical data to keep it updated.

A/B Testing: Experiment with new model versions or features by deploying them alongside the existing model to evaluate real-world performance before full rollout.

Model Versioning: Maintain versions of models and their associated code/data for reproducibility and rollback capabilities.
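As one very simple example of the monitoring activities above, the sketch below flags a shift in the mean of an incoming feature by comparing a recent window against a reference window. This is a basic data-distribution check rather than a full concept drift detector. The function name and the threshold of 3 standard errors are assumptions, and the check treats observations as roughly independent, which time series data often violates.

```python
import numpy as np

def mean_shift_detected(reference, recent, threshold=3.0):
    """Flag a shift when the recent mean is far from the reference mean."""
    reference = np.asarray(reference, dtype=float)
    recent = np.asarray(recent, dtype=float)
    # Standard error of the recent mean, using the reference window's spread
    standard_error = reference.std(ddof=1) / np.sqrt(len(recent))
    z_score = (recent.mean() - reference.mean()) / standard_error
    return bool(abs(z_score) > threshold), float(z_score)

reference_window = [10, 12, 11, 9, 10, 11, 12, 10, 9, 11]

stable_flag, stable_z = mean_shift_detected(reference_window, [10, 11, 10, 12])
shifted_flag, shifted_z = mean_shift_detected(reference_window, [20, 21, 19, 22])
print(stable_flag, round(stable_z, 2))
print(shifted_flag, round(shifted_z, 2))
```

A check like this would normally run on each scheduled batch of incoming data and feed the same alerting channel as the performance metrics.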

Common Pitfalls:

Stale Models: Deploying a model and never revisiting it, leading to rapidly degrading performance.

Ignoring Data Pipeline Issues: Assuming data quality will remain constant.

Lack of Alert Systems: Not being notified when model performance degrades or data issues arise.

No Feedback Loop: Not collecting feedback from business users on the usefulness or accuracy of the forecasts.

Typical Roles Involved: MLOps Engineer, Data Scientist, Operations Team.

Example: Camping Trip Scenario

Monitoring: After each trip, review how well the sleeping bag recommendation performed. Did you consistently feel too cold or too hot?

Maintenance: If you consistently feel cold, perhaps update your rule to be more conservative or incorporate additional factors like wind.

Example: Retail Sales Forecasting

Monitoring: Set up automated dashboards that track daily MAPE and bias for sales forecasts across different stores and product categories. Configure alerts to notify the data science team if MAPE exceeds a certain threshold or if significant bias is detected.

Maintenance: Schedule monthly retraining of the model with the latest sales data. If a new major promotional strategy is introduced, the model might need a significant update or even redevelopment to incorporate these new dynamics.
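The alerting logic in the retail example could start out as small as the sketch below. The function name and both thresholds (a MAPE of 20% and an absolute bias of 5 units) are placeholder assumptions; in practice they would be agreed with the business and tuned per store or category.

```python
import numpy as np

def check_forecast_health(actual, forecast, mape_threshold=20.0, bias_threshold=5.0):
    """Compute MAPE and bias for a batch of forecasts and list any alerts."""
    actual = np.asarray(actual, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    errors = forecast - actual  # positive error means over-forecasting
    mape = float(np.mean(np.abs(errors) / np.abs(actual)) * 100)
    bias = float(np.mean(errors))

    alerts = []
    if mape > mape_threshold:
        alerts.append(f"MAPE {mape:.1f}% exceeds {mape_threshold:.1f}%")
    if abs(bias) > bias_threshold:
        alerts.append(f"bias {bias:+.1f} units exceeds +/-{bias_threshold:.1f}")
    return {"mape": mape, "bias": bias, "alerts": alerts}

report = check_forecast_health(actual=[100, 100, 100, 100],
                               forecast=[110, 112, 108, 110])
print(report)
```

Here the MAPE stays under its threshold, but every forecast is too high, so only the bias alert fires. This is why the example tracks both metrics rather than MAPE alone. Note that MAPE is undefined when an actual value is zero, so low-volume SKUs may need a different metric.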

Setting a Goal

Before embarking on any time series forecasting endeavor, the single most crucial step is to define a clear, actionable goal. This initial phase, often overlooked in the rush to gather data and apply algorithms, determines the ultimate success and relevance of your entire forecasting project. Without a well-articulated goal, your efforts risk becoming aimless, producing forecasts that, while technically sound, provide no real value or actionable insights.

The Foundation: Why a Clear Goal is Paramount

Think of a forecasting project as a journey. Your goal is the destination. Without knowing where you’re going, any path you take will be arbitrary, and you might end up somewhere completely unhelpful. In the context of time series forecasting, defining your goal means answering the fundamental question: What decision will this forecast help me make, or what problem will it help me solve?

This seemingly simple question is the bedrock upon which all subsequent steps are built. It dictates:

What specific variable needs to be forecast.

The required accuracy and precision of the forecast.

The time horizon of the forecast (e.g., next day, next month, next year).

The resources (data, tools, personnel) you’ll need.

How the forecast will be evaluated for success.

Characteristics of a “Good” Forecasting Goal

A robust forecasting goal isn’t just a vague aspiration; it possesses specific characteristics that make it effective and actionable. We don’t need to follow the full SMART (Specific, Measurable, Achievable, Relevant, Time-bound) criteria often used in project management to the letter, but we can adapt their essence to forecasting:

Specific: The goal must clearly state what needs to be achieved. Instead of “forecast sales,” a specific goal might be “forecast daily sales of product X for the next month.”

Actionable: The forecast derived from the goal must directly lead to a decision or an action. If the forecast doesn’t inform a concrete step, its utility is limited. For example, “forecast future temperature to decide which sleeping bag to pack” is actionable.

Measurable: You must be able to quantify whether the goal has been met. This often relates to the impact of the forecast. For instance, “reduce inventory holding costs by 10% by optimizing stock levels based on demand forecasts.”

Relevant: The goal must align with broader organizational objectives or personal needs. Forecasting for the sake of forecasting is rarely beneficial. It should address a genuine problem or opportunity.

Time-bound (Implied by Horizon): While the goal itself might not always have an explicit deadline, the forecast it requires will always have a defined time horizon (e.g., “forecast for the next quarter,” “predict next week’s demand”). This implicitly makes the goal time-bound in terms of its utility.

The Perils of a Vague Goal

Failing to define a clear goal is one of the most common pitfalls in any data science or forecasting project. A vague or ill-defined goal can lead to:

Irrelevant Forecasts: You might accurately forecast something, but if it doesn’t align with a decision or problem, the forecast is useless. Imagine forecasting the price of a stock when your actual goal was to predict overall market sentiment.

Wasted Effort and Resources: Without a clear target, teams can spend significant time and money collecting irrelevant data, developing complex models that don’t address the core need, or chasing elusive metrics.

Scope Creep: The project can endlessly expand as new, loosely related questions arise, derailing progress and delaying completion.

Difficulty in Evaluation: If you don’t know what success looks like from the outset, it’s impossible to objectively evaluate the performance and value of your forecasting model.

Project Failure: Ultimately, a lack of direction can lead to projects being abandoned or failing to deliver any meaningful impact.

How the Goal Shapes the Forecast Type

Your defined goal directly influences the type of forecast you need to produce. Different goals require different outputs:

Point Forecast vs. Probabilistic Forecast:

If your goal is to know the single most likely value (e.g., “What temperature should I expect tomorrow?”), you need a point forecast. A point forecast is a best estimate, not a guarantee of the exact value.

If your goal involves understanding uncertainty and risk (e.g., “What is the probability that the temperature will drop below freezing tomorrow?” or “What range of temperatures is most likely?”), you need a probabilistic forecast (e.g., prediction intervals or full probability distributions). This is crucial for risk management or setting safety stock levels.
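The difference between the two forecast types can be shown with a few lines of NumPy. In this sketch, a point forecast is combined with a set of past forecast errors to produce a 90% prediction interval and a probability of frost. The errors are simulated here and the temperatures are assumed to be in degrees Celsius; with a real model you would use its actual historical errors instead.

```python
import numpy as np

rng = np.random.default_rng(42)

point_forecast = 2.0  # assumed point forecast for tomorrow's minimum, in Celsius

# Stand-in for historical forecast errors (actual minus forecast), simulated here
past_errors = rng.normal(loc=0.0, scale=3.0, size=1000)

# Plausible outcomes: the point forecast shifted by each past error
scenarios = point_forecast + past_errors

# Probabilistic outputs: a 90% prediction interval and the chance of frost
lower, upper = np.percentile(scenarios, [5, 95])
prob_below_freezing = float(np.mean(scenarios < 0.0))

print(f"point forecast: {point_forecast:.1f} C")
print(f"90% interval: {lower:.1f} C to {upper:.1f} C")
print(f"P(below freezing): {prob_below_freezing:.2f}")
```

The point forecast alone says "2 degrees"; the probabilistic view adds that frost is still a real possibility, which is the information a risk-based decision needs.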

Short-Term vs. Long-Term Forecast:

A goal like “optimize staffing for tomorrow’s call center volume” requires a short-term forecast (hourly, daily).